Youshan Zhang

CAST-LUT: Tokenizer-Guided HSV Look-Up Tables for Purple Flare Removal

Nov 10, 2025

Abstract:Purple flare, a diffuse chromatic aberration artifact commonly found around highlight areas, severely degrades the tone transition and color of the image. Traditional methods rely on hand-crafted features and fixed priors, which limits their flexibility, while the scarcity of paired training data critically hampers deep learning approaches. To address these issues, we propose a novel network built upon decoupled HSV Look-Up Tables (LUTs). The method simplifies color correction by adjusting the Hue (H), Saturation (S), and Value (V) components independently, which resolves the color coupling problems inherent in traditional methods. Our model adopts a two-stage architecture. First, a Chroma-Aware Spectral Tokenizer (CAST) converts the input image from RGB space to HSV space and independently encodes the Hue (H) and Value (V) channels into a set of semantic tokens describing the purple flare status. Second, the HSV-LUT module takes these tokens as input and dynamically generates independent correction curves (1D-LUTs) for the three channels H, S, and V. To train and validate our model, we built the first large-scale purple flare dataset with diverse scenes. We also propose new metrics and a loss function specifically designed for this task. Extensive experiments demonstrate that our model not only significantly outperforms existing methods in visual quality but also achieves state-of-the-art performance on all quantitative metrics.
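As a rough illustration of what applying independent 1D-LUTs to the H, S, and V channels can look like, the following sketch corrects a single pixel with three hand-written curves. It is not the CAST-LUT network: in the abstract the curves are generated dynamically from tokens, whereas here `identity` and `desaturate` are fixed, hypothetical tables, and the helper names `apply_lut` and `correct_pixel` are assumptions made for this example.

```python
import colorsys


def apply_lut(value, lut):
    """Linearly interpolate a 1D LUT sampled uniformly on [0, 1]."""
    value = min(max(value, 0.0), 1.0)
    pos = value * (len(lut) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(lut) - 1)
    frac = pos - lo
    return lut[lo] * (1.0 - frac) + lut[hi] * frac


def correct_pixel(rgb, lut_h, lut_s, lut_v):
    """Convert an RGB pixel to HSV, remap each channel with its own LUT, convert back."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    h2 = apply_lut(h, lut_h) % 1.0                      # hue wraps around
    s2 = min(max(apply_lut(s, lut_s), 0.0), 1.0)        # clamp saturation
    v2 = min(max(apply_lut(v, lut_v), 0.0), 1.0)        # clamp value
    return colorsys.hsv_to_rgb(h2, s2, v2)


# Hypothetical 17-point curves: identity for H and V, halved saturation for S.
identity = [i / 16 for i in range(17)]
desaturate = [0.5 * x for x in identity]

purple = (0.6, 0.2, 0.8)
unchanged = correct_pixel(purple, identity, identity, identity)
corrected = correct_pixel(purple, identity, desaturate, identity)
```

Because each channel has its own curve, halving the saturation leaves hue and brightness untouched, which is the decoupling the abstract describes.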

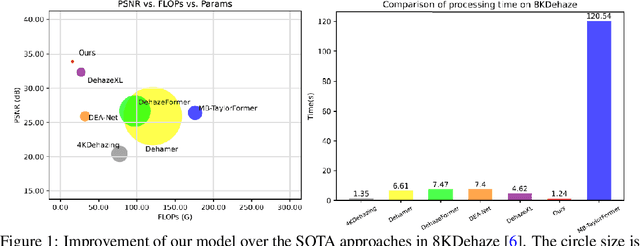

UHD Image Dehazing via anDehazeFormer with Atmospheric-aware KV Cache

May 20, 2025

Abstract:In this paper, we propose an efficient visual transformer framework for ultra-high-definition (UHD) image dehazing that addresses the key challenges of slow training speed and high memory consumption in existing methods. Our approach introduces two key innovations: 1) an **a**daptive **n**ormalization mechanism, inspired by the nGPT architecture, that enables ultra-fast and stable training of a network with a restricted range of parameter expressions; and 2) an atmospheric scattering-aware KV caching mechanism that dynamically optimizes feature preservation based on the physical haze formation model. The proposed architecture improves the training convergence speed by **5×** while reducing memory overhead, enabling real-time processing of 50 high-resolution images per second on an RTX4090 GPU. Experimental results show that our approach maintains state-of-the-art dehazing quality while significantly improving computational efficiency for 4K/8K image restoration tasks. Furthermore, we provide a new interpretability method for image dehazing based on integrated gradient attribution maps. Our code can be found here: https://anonymous.4open.science/r/anDehazeFormer-632E/README.md.
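The abstract does not spell out its haze formation model. A common choice in the dehazing literature is the atmospheric scattering model I = J·t + A·(1 − t), where J is the clear scene, t the transmission, and A the airlight. The sketch below assumes that model and shows only its forward and inverse form for one pixel. It does not illustrate the KV caching mechanism, and the function names and the `t_min` floor are assumptions for this example.

```python
def add_haze(clear, transmission, airlight):
    """Forward model: I = J * t + A * (1 - t), applied per channel."""
    return [j * transmission + airlight * (1.0 - transmission) for j in clear]


def remove_haze(hazy, transmission, airlight, t_min=0.1):
    """Inverse model: J = (I - A) / max(t, t_min) + A.

    t_min is a hypothetical floor that avoids dividing by a near-zero transmission.
    """
    t = max(transmission, t_min)
    return [(i - airlight) / t + airlight for i in hazy]


clear_pixel = [0.2, 0.4, 0.6]
hazy_pixel = add_haze(clear_pixel, transmission=0.5, airlight=0.9)
restored = remove_haze(hazy_pixel, transmission=0.5, airlight=0.9)
```

With a known transmission and airlight the inversion is exact. In practice both must be estimated, which is where learned models come in.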

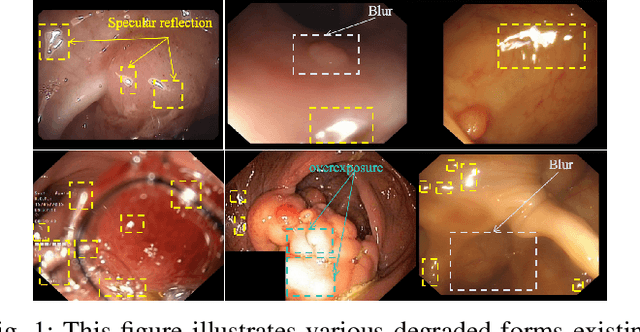

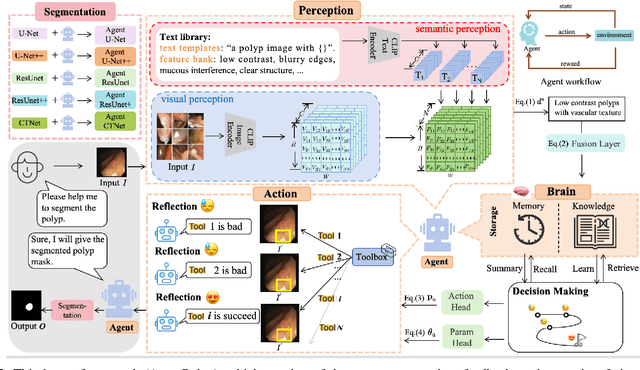

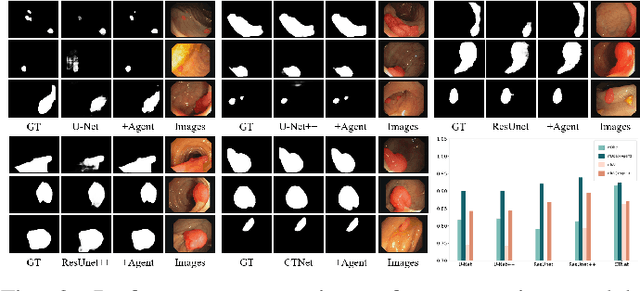

AgentPolyp: Accurate Polyp Segmentation via Image Enhancement Agent

Apr 15, 2025

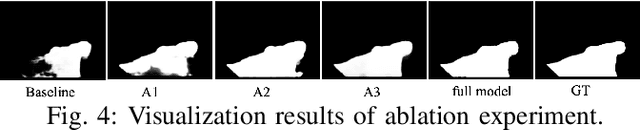

Abstract:Because of interference from human and environmental factors, captured polyp images often suffer from issues such as dim lighting, blur, and overexposure, which pose challenges for downstream polyp segmentation tasks. To address this degradation, we present AgentPolyp, a novel framework integrating CLIP-based semantic guidance and dynamic image enhancement with a lightweight neural network for segmentation. The agent first evaluates image quality using CLIP-driven semantic analysis (e.g., identifying "low-contrast polyps with vascular textures") and adapts reinforcement learning strategies to dynamically apply multi-modal enhancement operations (e.g., denoising, contrast adjustment). A quality assessment feedback loop optimizes pixel-level enhancement and segmentation focus in a collaborative manner, ensuring robust preprocessing before neural network segmentation. This modular architecture supports plug-and-play extensions for various enhancement algorithms and segmentation networks, meeting deployment requirements for endoscopic devices.

Automatic Teaching Platform on Vision Language Retrieval Augmented Generation

Mar 07, 2025

Abstract:Automating teaching presents unique challenges, as replicating human interaction and adaptability is complex. Automated systems often cannot provide nuanced, real-time feedback that aligns with students' individual learning paces or comprehension levels, which can hinder effective support for diverse needs. This is especially challenging in fields where abstract concepts require adaptive explanations. In this paper, we propose a vision language retrieval augmented generation system (named VL-RAG) that has the potential to bridge this gap by delivering contextually relevant, visually enriched responses that can enhance comprehension. By leveraging a database of tailored answers and images, the VL-RAG system can dynamically retrieve information aligned with specific questions, creating a more interactive and engaging experience that fosters active student participation. It allows students to explore concepts visually and verbally, promoting deeper understanding and reducing the need for constant human oversight while maintaining the flexibility to expand across different subjects and course material.

Confident Pseudo-labeled Diffusion Augmentation for Canine Cardiomegaly Detection

Jan 13, 2025

Abstract:Canine cardiomegaly, marked by an enlarged heart, poses serious health risks if undetected, requiring accurate diagnostic methods. Current detection models often rely on small, poorly annotated datasets and struggle to generalize across diverse imaging conditions, limiting their real-world applicability. To address these issues, we propose a Confident Pseudo-labeled Diffusion Augmentation (CDA) model for identifying canine cardiomegaly. Our approach addresses the challenge of limited high-quality training data by employing diffusion models to generate synthetic X-ray images and annotate Vertebral Heart Score key points, thereby expanding the dataset. We also employ a pseudo-labeling strategy with Monte Carlo Dropout to select high-confidence labels, refine the synthetic dataset, and improve accuracy. Iteratively incorporating these labels enhances the model's performance, overcoming the limitations of existing approaches. Experimental results show that the CDA model outperforms traditional methods, achieving state-of-the-art accuracy in canine cardiomegaly detection. The code implementation is available at https://github.com/Shira7z/CDA.
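The confidence-based selection step can be sketched as follows, assuming each synthetic image has been scored several times with dropout left active at inference. The abstract works with Vertebral Heart Score key points; this example simplifies to one scalar score per forward pass. The sample values, the `max_std` threshold, and the function name are all hypothetical.

```python
from statistics import mean, pstdev


def select_confident(samples_per_image, max_std):
    """Keep images whose Monte Carlo Dropout predictions agree closely.

    samples_per_image maps an image id to the predictions from several
    stochastic forward passes. The mean of the kept samples is the pseudo-label.
    """
    selected = {}
    for image_id, samples in samples_per_image.items():
        spread = pstdev(samples)  # disagreement across passes
        if spread <= max_std:
            selected[image_id] = mean(samples)
    return selected


# Hypothetical predictions from four stochastic passes per synthetic image.
mc_samples = {
    "synthetic_001": [9.8, 10.0, 10.2, 10.0],   # consistent, kept
    "synthetic_002": [8.0, 11.5, 9.0, 12.5],    # inconsistent, dropped
}
pseudo_labels = select_confident(mc_samples, max_std=0.5)
```

Repeating this selection after each retraining round gives the iterative refinement the abstract mentions.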

SST-EM: Advanced Metrics for Evaluating Semantic, Spatial and Temporal Aspects in Video Editing

Jan 13, 2025

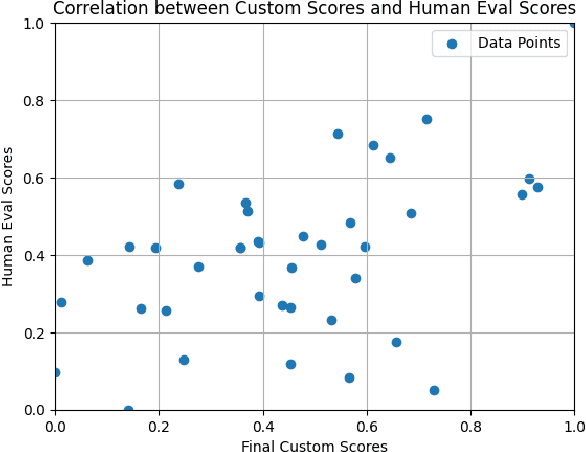

Abstract:Video editing models have advanced significantly, but evaluating their performance remains challenging. Traditional metrics, such as CLIP text and image scores, often fall short: text scores are limited by inadequate training data and hierarchical dependencies, while image scores fail to assess temporal consistency. We present SST-EM (Semantic, Spatial, and Temporal Evaluation Metric), a novel evaluation framework that leverages modern Vision-Language Models (VLMs), Object Detection, and Temporal Consistency checks. SST-EM comprises four components: (1) semantic extraction from frames using a VLM, (2) primary object tracking with Object Detection, (3) focused object refinement via an LLM agent, and (4) temporal consistency assessment using a Vision Transformer (ViT). These components are integrated into a unified metric with weights derived from human evaluations and regression analysis. The name SST-EM reflects its focus on Semantic, Spatial, and Temporal aspects of video evaluation. SST-EM provides a comprehensive evaluation of semantic fidelity and temporal smoothness in video editing. The source code is available at https://github.com/custommetrics-sst/SST_CustomEvaluationMetrics.git.
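A unified metric of this kind can be read as a weighted combination of the four component scores. The sketch below assumes a normalized weighted sum. The component names, scores, and weights are hypothetical placeholders, not the values fitted in the paper.

```python
def combine_scores(scores, weights):
    """Weighted average of component scores; weights need not sum to one."""
    total = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total


# Hypothetical weights; the paper derives its own from human ratings via regression.
weights = {"semantic": 0.4, "object": 0.2, "refined": 0.2, "temporal": 0.2}
scores = {"semantic": 0.8, "object": 0.6, "refined": 0.7, "temporal": 0.9}

unified = combine_scores(scores, weights)
```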

Leapfrog Latent Consistency Model (LLCM) for Medical Images Generation

Nov 22, 2024

Abstract:The scarcity of accessible medical image data poses a significant obstacle to effectively training deep learning models for medical diagnosis, as hospitals refrain from sharing their data due to privacy concerns. In response, we gathered a diverse dataset named MedImgs, which comprises over 250,127 images spanning 61 disease types and 159 classes of both humans and animals from open-source repositories. We propose a Leapfrog Latent Consistency Model (LLCM) that is distilled from a diffusion model retrained on the collected MedImgs dataset, which enables our model to generate high-resolution images in real time. We formulate the reverse diffusion process as a probability flow ordinary differential equation (PF-ODE) and solve it in latent space using the Leapfrog algorithm. This formulation enables rapid sampling without necessitating additional iterations. Our model demonstrates state-of-the-art performance in generating medical images. Furthermore, our model can be fine-tuned with any custom medical image dataset, facilitating the generation of a vast array of images. In our experiments, our model outperforms existing models on unseen dog cardiac X-ray images. Source code is available at https://github.com/lskdsjy/LeapfrogLCM.
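For readers unfamiliar with the Leapfrog algorithm, the following sketch shows the generic kick-drift-kick leapfrog integrator on a toy harmonic oscillator. It is a textbook illustration only: it is not the LLCM sampler and does not solve a PF-ODE in latent space, and the function name and test problem are assumptions for this example.

```python
def leapfrog(x, v, accel, dt, steps):
    """Integrate x'' = accel(x) with the kick-drift-kick leapfrog scheme."""
    for _ in range(steps):
        v_half = v + 0.5 * dt * accel(x)      # half-step velocity update (kick)
        x = x + dt * v_half                   # full-step position update (drift)
        v = v_half + 0.5 * dt * accel(x)      # second half-step velocity update
    return x, v


# Toy problem: unit harmonic oscillator x'' = -x, starting at x = 1, v = 0.
x, v = leapfrog(1.0, 0.0, lambda pos: -pos, dt=0.01, steps=1000)
energy = 0.5 * v * v + 0.5 * x * x
```

The scheme is second-order accurate and keeps the oscillator's energy close to its initial value of 0.5 over long runs, which is why it is a popular ODE solver.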

SparrowVQE: Visual Question Explanation for Course Content Understanding

Nov 12, 2024

Abstract:Visual Question Answering (VQA) research seeks to create AI systems that answer natural language questions about images, yet VQA methods often yield overly simplistic and short answers. This paper aims to advance the field by introducing Visual Question Explanation (VQE), which extends VQA to provide detailed explanations rather than brief responses and addresses the need for more complex interaction with visual content. We first created the MLVQE dataset from a 14-week streamed video machine learning course, including 885 slide images, 110,407 words of transcripts, and 9,416 designed question-answer (QA) pairs. Next, we propose SparrowVQE, a small multimodal model with 3 billion parameters. We trained our model with a three-stage training mechanism consisting of multimodal pre-training (slide images and transcripts feature alignment), instruction tuning (tuning the pre-trained model with transcripts and QA pairs), and domain fine-tuning (fine-tuning on slide images and QA pairs). As a result, SparrowVQE can understand visual information using the SigLIP model and connect it with transcripts using the Phi-2 language model through an MLP adapter. Experimental results demonstrate that SparrowVQE achieves better performance on our MLVQE dataset and outperforms state-of-the-art methods on five other benchmark VQA datasets. The source code is available at https://github.com/YoushanZhang/SparrowVQE.

KatzBot: Revolutionizing Academic Chatbot for Enhanced Communication

Oct 21, 2024

Abstract:Effective communication within universities is crucial for addressing the diverse information needs of students, alumni, and external stakeholders. However, existing chatbot systems often fail to deliver accurate, context-specific responses, resulting in poor user experiences. In this paper, we present KatzBot, an innovative chatbot powered by KatzGPT, a custom Large Language Model (LLM) fine-tuned on domain-specific academic data. KatzGPT is trained on two university-specific datasets: 6,280 sentence-completion pairs and 7,330 question-answer pairs. KatzBot outperforms established open-source LLMs, achieving higher accuracy and domain relevance. KatzBot offers a user-friendly interface, significantly enhancing user satisfaction in real-world applications. The source code is publicly available at https://github.com/AiAI-99/katzbot.

Complex Image-Generative Diffusion Transformer for Audio Denoising

Jun 13, 2024

Abstract:The audio denoising technique has captured widespread attention in the deep neural network field. Recently, the audio denoising problem has been converted into an image generation task, and deep learning-based approaches have been applied to tackle this problem. However, its performance is still limited, leaving room for further improvement. In order to enhance audio denoising performance, this paper introduces a complex image-generative diffusion transformer that captures more information from the complex Fourier domain. We explore a novel diffusion transformer by integrating the transformer with a diffusion model. Our proposed model demonstrates the scalability of the transformer and expands the receptive field of sparse attention using attention diffusion. Our work is among the first to utilize diffusion transformers to deal with the image generation task for audio denoising. Extensive experiments on two benchmark datasets demonstrate that our proposed model outperforms state-of-the-art methods.
