Yanting Yang

UniVLA: Learning to Act Anywhere with Task-centric Latent Actions

May 09, 2025

Abstract:A generalist robot should perform effectively across various environments. However, most existing approaches heavily rely on scaling action-annotated data to enhance their capabilities. Consequently, they are often limited to a single physical specification and struggle to learn transferable knowledge across different embodiments and environments. To confront these limitations, we propose UniVLA, a new framework for learning cross-embodiment vision-language-action (VLA) policies. Our key innovation is to derive task-centric action representations from videos with a latent action model. This enables us to exploit extensive data across a wide spectrum of embodiments and perspectives. To mitigate the effect of task-irrelevant dynamics, we incorporate language instructions and establish a latent action model within the DINO feature space. Learned from internet-scale videos, the generalist policy can be deployed to various robots through efficient latent action decoding. We obtain state-of-the-art results across multiple manipulation and navigation benchmarks, as well as real-robot deployments. UniVLA achieves superior performance over OpenVLA with less than 1/20 of pretraining compute and 1/10 of downstream data. Continuous performance improvements are observed as heterogeneous data, even including human videos, are incorporated into the training pipeline. The results underscore UniVLA's potential to facilitate scalable and efficient robot policy learning.

NeuroLIP: Interpretable and Fair Cross-Modal Alignment of fMRI and Phenotypic Text

Mar 27, 2025

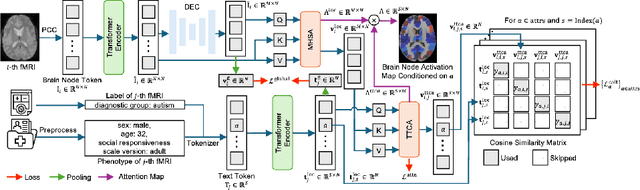

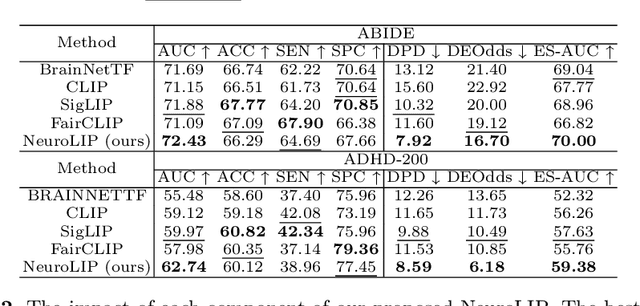

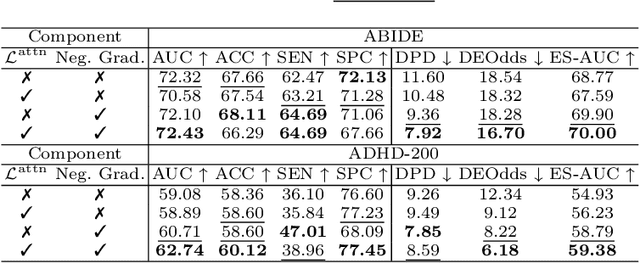

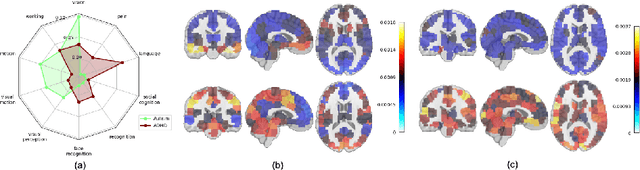

Abstract:Integrating functional magnetic resonance imaging (fMRI) connectivity data with phenotypic textual descriptors (e.g., disease label, demographic data) holds significant potential to advance our understanding of neurological conditions. However, existing cross-modal alignment methods often lack interpretability and risk introducing biases by encoding sensitive attributes together with diagnostic-related features. In this work, we propose NeuroLIP, a novel cross-modal contrastive learning framework. We introduce text token-conditioned attention (TTCA) and cross-modal alignment via localized tokens (CALT) to align the brain region-level embeddings with each disease-related phenotypic token. This improves interpretability via token-level attention maps, revealing brain region-disease associations. To mitigate bias, we propose a loss for sensitive attribute disentanglement that maximizes the attention distance between disease tokens and sensitive attribute tokens, reducing unintended correlations in downstream predictions. Additionally, we incorporate a negative gradient technique that reverses the sign of the CALT loss on sensitive attributes, further discouraging the alignment of these features. Experiments on neuroimaging datasets (ABIDE and ADHD-200) demonstrate NeuroLIP's superiority in terms of fairness metrics while maintaining the overall best standard metric performance. Qualitative visualization of attention maps highlights neuroanatomical patterns aligned with diagnostic characteristics, validated by the neuroscientific literature. Our work advances the development of transparent and equitable neuroimaging AI.

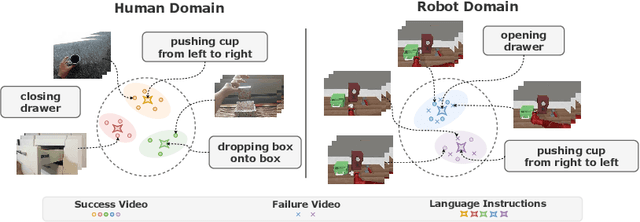

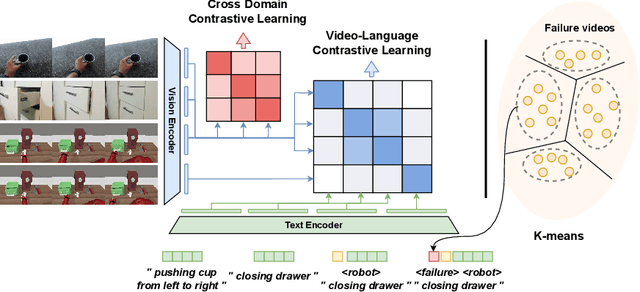

Adapt2Reward: Adapting Video-Language Models to Generalizable Robotic Rewards via Failure Prompts

Jul 20, 2024

Abstract:For a general-purpose robot to operate in reality, executing a broad range of instructions across various environments is imperative. Central to the reinforcement learning and planning for such robotic agents is a generalizable reward function. Recent advances in vision-language models, such as CLIP, have shown remarkable performance in the domain of deep learning, paving the way for open-domain visual recognition. However, collecting data on robots executing various language instructions across multiple environments remains a challenge. This paper aims to transfer video-language models with robust generalization into a generalizable language-conditioned reward function, utilizing only robot video data from a minimal number of tasks in a single environment. Unlike common robotic datasets used for training reward functions, human video-language datasets rarely contain trivial failure videos. To enhance the model's ability to distinguish between successful and failed robot executions, we cluster failure video features to enable the model to identify patterns within them. For each cluster, we integrate a newly trained failure prompt into the text encoder to represent the corresponding failure mode. Our language-conditioned reward function shows outstanding generalization to new environments and new instructions for robot planning and reinforcement learning.

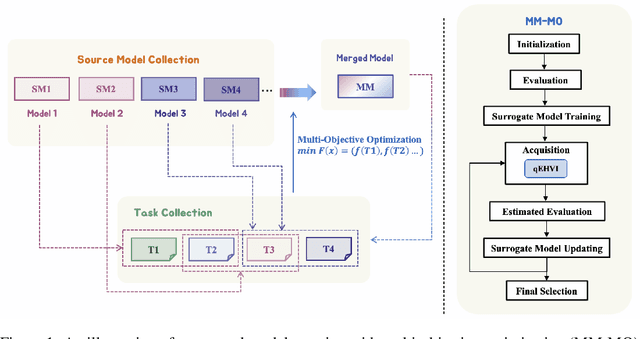

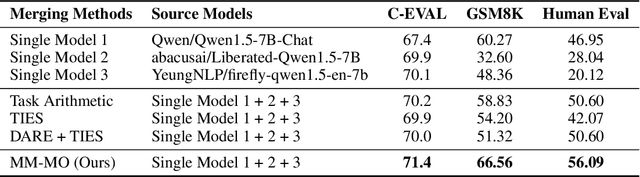

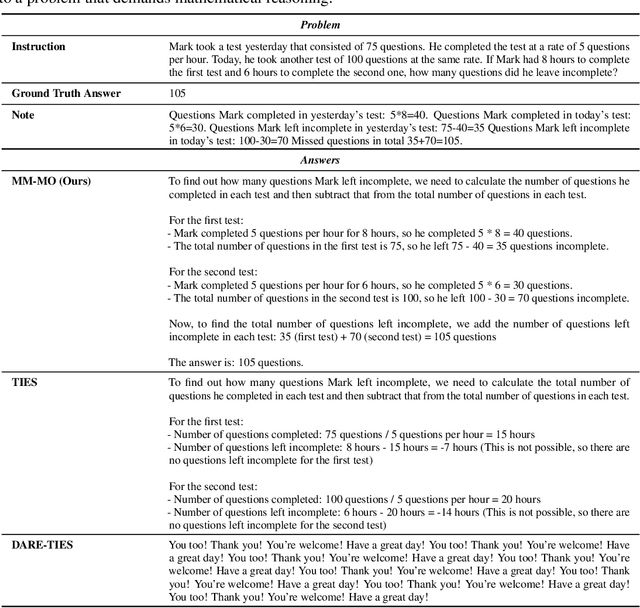

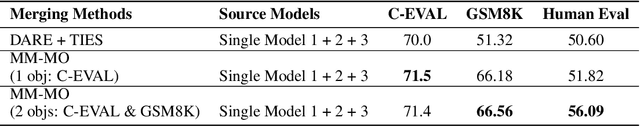

It's Morphing Time: Unleashing the Potential of Multiple LLMs via Multi-objective Optimization

Jun 29, 2024

Abstract:In this paper, we introduce a novel approach for large language model merging via black-box multi-objective optimization algorithms. The goal of model merging is to combine multiple models, each excelling in different tasks, into a single model that outperforms any of the individual source models. However, model merging faces two significant challenges: First, existing methods rely heavily on human intuition and customized strategies. Second, parameter conflicts often arise during merging, and while methods like DARE [1] can alleviate this issue, they tend to stochastically drop parameters, risking the loss of important delta parameters. To address these challenges, we propose the MM-MO method, which automates the search for optimal merging configurations using multi-objective optimization algorithms, eliminating the need for human intuition. During the configuration search, we use estimated performance across multiple diverse tasks as optimization objectives in order to alleviate parameter conflicts between different source models without losing crucial delta parameters. We conducted comparative experiments with other mainstream model merging methods, demonstrating that our method consistently outperforms them. Moreover, our experiments reveal that even task types not explicitly targeted as optimization objectives show performance improvements, indicating that our method enhances the overall potential of the model rather than merely overfitting to specific task types. This approach provides a significant advancement in model merging techniques, offering a robust and plug-and-play solution for integrating diverse models into a unified, high-performing model.
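The abstract mentions DARE only as a method that stochastically drops delta parameters. As a rough illustration of that idea (not of MM-MO itself), the sketch below applies drop-and-rescale to a flat list of weights, assuming that surviving deltas are rescaled by 1/(1 - p) so the expected delta is preserved. The function name `dare_delta_merge` and the list-of-floats representation are hypothetical choices made for this example.

```python
import random


def dare_delta_merge(base, finetuned, drop_rate, rng):
    """Randomly drop delta parameters and rescale the survivors.

    base, finetuned: equal-length lists of floats (hypothetical flat weights).
    drop_rate: probability p of zeroing each delta, with 0 <= p < 1.
    rng: a random.Random instance, passed in for reproducibility.
    """
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    keep_scale = 1.0 / (1.0 - drop_rate)
    merged = []
    for b, f in zip(base, finetuned):
        delta = f - b  # the task-specific change learned by fine-tuning
        if rng.random() < drop_rate:
            delta = 0.0  # dropped: this weight falls back to the base value
        else:
            delta *= keep_scale  # kept: rescaled to preserve the expected delta
        merged.append(b + delta)
    return merged


if __name__ == "__main__":
    rng = random.Random(0)
    print(dare_delta_merge([0.0, 0.0, 0.0], [1.0, 2.0, 3.0], 0.5, rng))
```

Because each delta is dropped independently of its magnitude, an important delta is as likely to be removed as an unimportant one, which is the risk the abstract points to.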

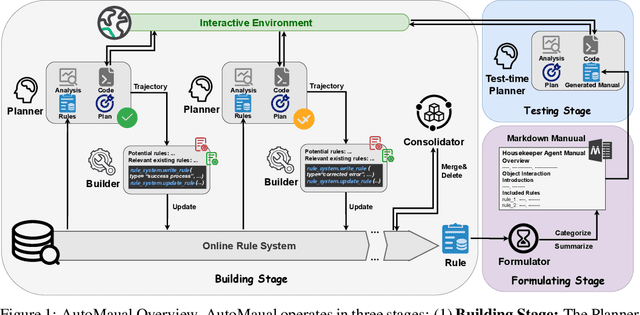

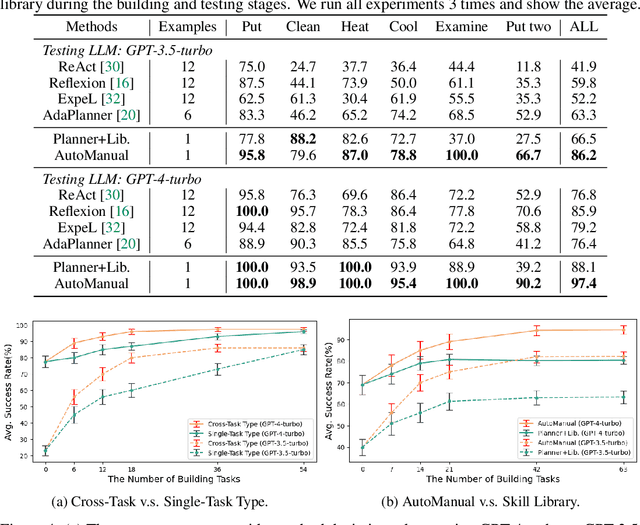

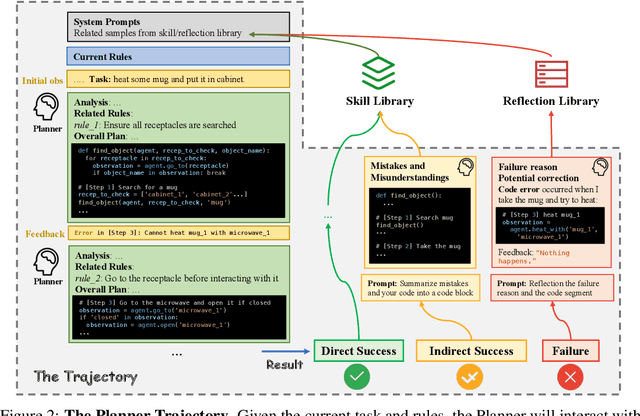

AutoManual: Generating Instruction Manuals by LLM Agents via Interactive Environmental Learning

May 25, 2024

Abstract:Large Language Model (LLM)-based agents have shown promise in autonomously completing tasks across various domains, e.g., robotics, games, and web navigation. However, these agents typically require elaborate design and expert prompts to solve tasks in specific domains, which limits their adaptability. We introduce AutoManual, a framework enabling LLM agents to autonomously build their understanding through interaction and adapt to new environments. AutoManual categorizes environmental knowledge into diverse rules and optimizes them in an online fashion by two agents: 1) The Planner codes actionable plans based on current rules for interacting with the environment. 2) The Builder updates the rules through a well-structured rule system that facilitates online rule management and essential detail retention. To mitigate hallucinations in managing rules, we introduce a case-conditioned prompting strategy for the Builder. Finally, the Formulator agent compiles these rules into a comprehensive manual. The self-generated manual can not only improve the adaptability but also guide the planning of smaller LLMs while being human-readable. Given only one simple demonstration, AutoManual significantly improves task success rates, achieving 97.4% with GPT-4-turbo and 86.2% with GPT-3.5-turbo on ALFWorld benchmark tasks. The source code will be available soon.

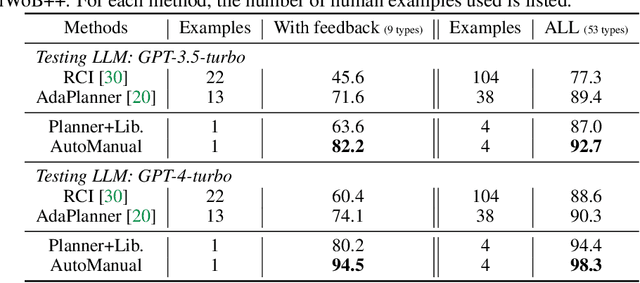

Multimodal Pretraining, Adaptation, and Generation for Recommendation: A Survey

Mar 31, 2024

Abstract:Personalized recommendation serves as a ubiquitous channel for users to discover information or items tailored to their interests. However, traditional recommendation models primarily rely on unique IDs and categorical features for user-item matching, potentially overlooking the nuanced essence of raw item contents across multiple modalities such as text, image, audio, and video. This underutilization of multimodal data poses a limitation to recommender systems, especially in multimedia services like news, music, and short-video platforms. The recent advancements in pretrained multimodal models offer new opportunities and challenges in developing content-aware recommender systems. This survey seeks to provide a comprehensive exploration of the latest advancements and future trajectories in multimodal pretraining, adaptation, and generation techniques, as well as their applications to recommender systems. Furthermore, we discuss open challenges and opportunities for future research in this domain. We hope that this survey, along with our tutorial materials, will inspire further research efforts to advance this evolving landscape.

Learnable Community-Aware Transformer for Brain Connectome Analysis with Token Clustering

Mar 13, 2024

Abstract:Neuroscientific research has revealed that the complex brain network can be organized into distinct functional communities, each characterized by a cohesive group of regions of interest (ROIs) with strong interconnections. These communities play a crucial role in comprehending the functional organization of the brain and its implications for neurological conditions, including Autism Spectrum Disorder (ASD), and for biological differences, such as gender. Traditional models have been constrained by the necessity of predefined community clusters, limiting their flexibility and adaptability in deciphering the brain's functional organization. Furthermore, these models were restricted by a fixed number of communities, hindering their ability to accurately represent the brain's dynamic nature. In this study, we present a token clustering brain transformer-based model (TC-BrainTF) for joint community clustering and classification. Our approach proposes a novel token clustering (TC) module based on the transformer architecture, which utilizes learnable prompt tokens with orthogonal loss where each ROI embedding is projected onto the prompt embedding space, effectively clustering ROIs into communities and reducing the dimensions of the node representation via merging with communities. Our results demonstrate that our learnable community-aware model TC-BrainTF offers improved accuracy in identifying ASD and classifying genders through rigorous testing on ABIDE and HCP datasets. Additionally, the qualitative analysis on TC-BrainTF has demonstrated the effectiveness of the designed TC module and its relevance to neuroscience interpretations.
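The token clustering idea described above (prompt tokens kept apart by an orthogonal loss, with each ROI embedding projected onto the prompt space) can be sketched in a few lines. This is only an illustrative guess at the general pattern, not the paper's formulation: the penalty used here is the sum of squared cosine similarities between distinct prompts, and each ROI is hard-assigned to its highest-scoring prompt. The names `orthogonal_penalty` and `assign_communities` are hypothetical.

```python
import math


def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def orthogonal_penalty(prompts):
    """Sum of squared cosine similarities over ordered pairs of distinct prompts.

    The value is zero exactly when all prompt tokens are mutually orthogonal.
    """
    unit = [normalize(p) for p in prompts]
    penalty = 0.0
    for i, p in enumerate(unit):
        for j, q in enumerate(unit):
            if i != j:
                penalty += dot(p, q) ** 2
    return penalty


def assign_communities(roi_embeddings, prompts):
    """Project each ROI embedding onto the prompts and take the best match."""
    unit = [normalize(p) for p in prompts]
    assignments = []
    for roi in roi_embeddings:
        scores = [dot(roi, p) for p in unit]
        assignments.append(scores.index(max(scores)))
    return assignments


if __name__ == "__main__":
    prompts = [[1.0, 0.0], [0.0, 1.0]]
    rois = [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]]
    print(orthogonal_penalty(prompts))
    print(assign_communities(rois, prompts))
```

In a trained model the prompts would be learnable parameters and the assignment would typically be soft (for example, via attention weights), so the hard argmax here is a simplification.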

Deep Learning-based MRI Reconstruction with Artificial Fourier Transform (AFT)-Net

Dec 18, 2023

Abstract:The deep complex-valued neural network provides a powerful way to leverage complex number operations and representations, which has succeeded in several phase-based applications. However, most previously published networks have not fully assessed the impact of complex-valued networks in the frequency domain. Here, we introduced a unified complex-valued deep learning framework - artificial Fourier transform network (AFT-Net) - which combined domain-manifold learning and complex-valued neural networks. The AFT-Net can be readily used to solve the image inverse problems in domain-transform, especially for accelerated magnetic resonance imaging (MRI) reconstruction and other applications. While conventional methods only accept magnitude images, the proposed method takes raw k-space data in the frequency domain as inputs, allowing a mapping between the k-space domain and the image domain to be determined through cross-domain learning. We show that AFT-Net achieves superior accelerated MRI reconstruction and is comparable to existing approaches. Also, our approach can be applied to different tasks like denoised MRS reconstruction and different datasets with various contrasts. The AFT-Net presented here is a valuable preprocessing component for different preclinical studies and provides an innovative alternative for solving inverse problems in imaging and spectroscopy.
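For context on the k-space to image mapping mentioned above: for fully sampled data, that mapping is the inverse discrete Fourier transform. The sketch below shows this fixed transform in one dimension using only the standard library. It is not AFT-Net; it illustrates the analytic mapping that a learned, complex-valued transform would presumably stand in for. The function names are hypothetical.

```python
import cmath


def dft(signal):
    """Forward DFT: image-domain samples to frequency-domain (k-space) samples."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(kspace):
    """Inverse DFT: complex k-space samples back to image-domain samples."""
    n = len(kspace)
    return [
        sum(kspace[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


if __name__ == "__main__":
    image_row = [1.0, 2.0, 0.0, -1.0]
    kspace_row = dft(image_row)           # complex-valued frequency samples
    recovered = inverse_dft(kspace_row)   # matches image_row up to rounding
    print([round(v.real, 6) for v in recovered])
```

Note that the k-space samples are complex. Keeping only their magnitude discards the phase, which is one reason a method that operates on raw complex k-space can use information that magnitude-only pipelines cannot.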

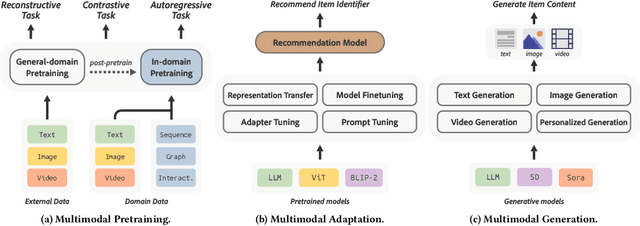

DisCover: Disentangled Music Representation Learning for Cover Song Identification

Jul 19, 2023

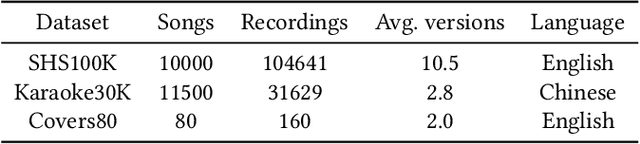

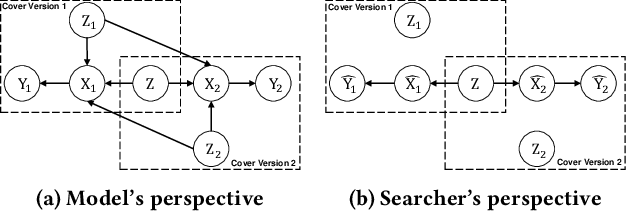

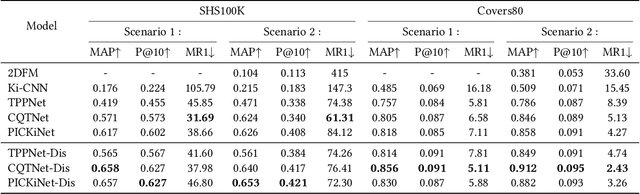

Abstract:In the field of music information retrieval (MIR), cover song identification (CSI) is a challenging task that aims to identify cover versions of a query song from a massive collection. Existing works still suffer from high intra-song variances and inter-song correlations, due to the entangled nature of version-specific and version-invariant factors in their modeling. In this work, we set the goal of disentangling version-specific and version-invariant factors, which could make it easier for the model to learn invariant music representations for unseen query songs. We analyze the CSI task in a disentanglement view with the causal graph technique, and identify the intra-version and inter-version effects biasing the invariant learning. To block these effects, we propose the disentangled music representation learning framework (DisCover) for CSI. DisCover consists of two critical components: (1) Knowledge-guided Disentanglement Module (KDM) and (2) Gradient-based Adversarial Disentanglement Module (GADM), which block intra-version and inter-version biased effects, respectively. KDM minimizes the mutual information between the learned representations and version-variant factors that are identified with prior domain knowledge. GADM identifies version-variant factors by simulating the representation transitions between intra-song versions, and exploits adversarial distillation for effect blocking. Extensive comparisons with best-performing methods and in-depth analysis demonstrate the effectiveness of DisCover and the necessity of disentanglement for CSI.

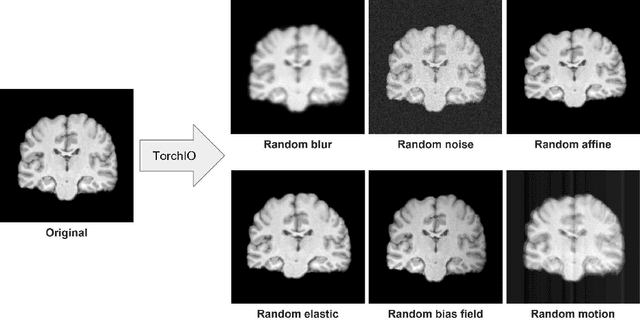

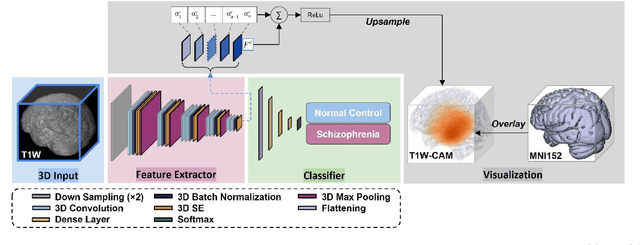

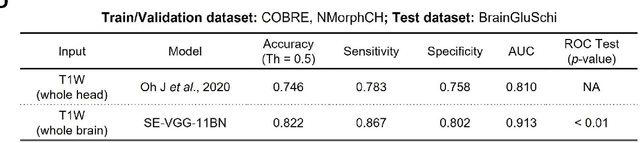

Detecting Schizophrenia with 3D Structural Brain MRI Using Deep Learning

Jul 07, 2022

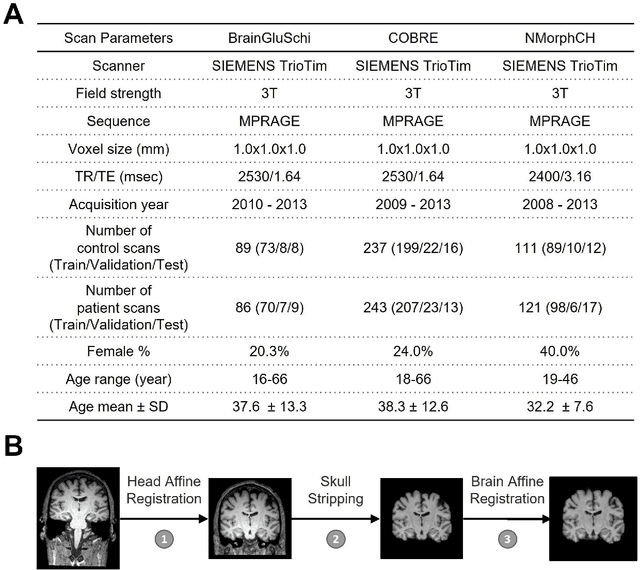

Abstract:Schizophrenia is a chronic neuropsychiatric disorder that causes distinct structural alterations within the brain. We hypothesize that deep learning applied to a structural neuroimaging dataset could detect disease-related alteration and improve classification and diagnostic accuracy. We tested this hypothesis using a single, widely available, and conventional T1-weighted MRI scan, from which we extracted the 3D whole-brain structure using standard post-processing methods. A deep learning model was then developed, optimized, and evaluated on three open datasets with T1-weighted MRI scans of patients with schizophrenia. Our proposed model outperformed the benchmark model, which was also trained with structural MR images using a 3D CNN architecture. Our model is capable of almost perfectly (area under the ROC curve = 0.987) distinguishing schizophrenia patients from healthy controls on unseen structural MRI scans. Regional analysis localized subcortical regions and ventricles as the most predictive brain regions. Subcortical structures serve a pivotal role in cognitive, affective, and social functions in humans, and structural abnormalities of these regions have been associated with schizophrenia. Our finding corroborates that schizophrenia is associated with widespread alterations in subcortical brain structure and the subcortical structural information provides prominent features in diagnostic classification. Together, these results further demonstrate the potential of deep learning to improve schizophrenia diagnosis and identify its structural neuroimaging signatures from a single, standard T1-weighted brain MRI.
