NeuroLIP: Interpretable and Fair Cross-Modal Alignment of fMRI and Phenotypic Text

Mar 27, 2025

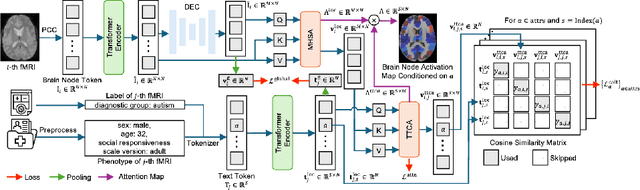

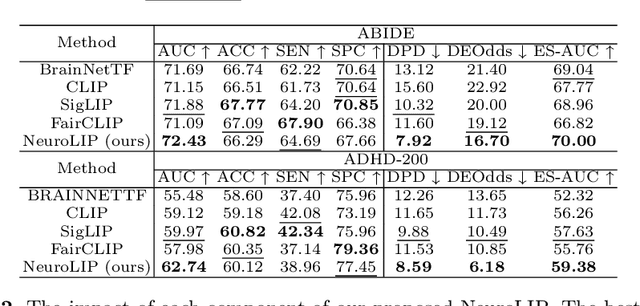

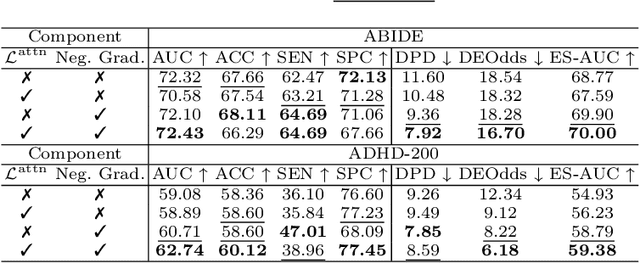

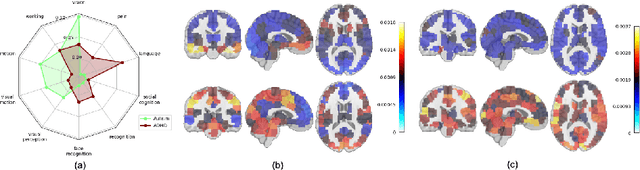

Integrating functional magnetic resonance imaging (fMRI) connectivity data with phenotypic textual descriptors (e.g., disease labels, demographic data) holds significant potential to advance our understanding of neurological conditions. However, existing cross-modal alignment methods often lack interpretability and risk introducing bias by encoding sensitive attributes together with diagnosis-related features. In this work, we propose NeuroLIP, a novel cross-modal contrastive learning framework. We introduce text token-conditioned attention (TTCA) and cross-modal alignment via localized tokens (CALT) to align brain region-level embeddings with each disease-related phenotypic token. This design improves interpretability through token-level attention maps that reveal brain region-disease associations. To mitigate bias, we propose a sensitive attribute disentanglement loss that maximizes the attention distance between disease tokens and sensitive attribute tokens, reducing unintended correlations in downstream predictions. Additionally, we incorporate a negative gradient technique that reverses the sign of the CALT loss on sensitive attributes, further discouraging the alignment of these features. Experiments on two neuroimaging datasets (ABIDE and ADHD-200) demonstrate NeuroLIP's superiority on fairness metrics while maintaining the best overall performance on standard metrics. Qualitative visualization of the attention maps highlights neuroanatomical patterns aligned with diagnostic characteristics, validated by the neuroscientific literature. Our work advances the development of transparent and equitable neuroimaging AI.
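The abstract names its loss terms but gives no formulas, so the following is only a minimal sketch of how the pieces might fit together, not the authors' implementation. It assumes scaled dot-product attention for TTCA, a cosine-based alignment term standing in for the CALT loss, a Euclidean attention distance for the disentanglement term, and hypothetical weights `lam` and `mu`. All function names and the toy vectors are illustrative.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def token_attention(regions, token):
    """Attention of one text token over brain regions (assumed scaled dot product)."""
    scale = math.sqrt(len(token))
    return softmax([dot(r, token) / scale for r in regions])

def attended_embedding(regions, attn):
    """Attention-weighted sum of region embeddings for one token."""
    dim = len(regions[0])
    return [sum(a * r[k] for a, r in zip(attn, regions)) for k in range(dim)]

def alignment_loss(regions, token, eps=1e-12):
    """Assumed stand-in for the CALT loss: 1 - cosine(attended regions, token)."""
    pooled = attended_embedding(regions, token_attention(regions, token))
    norm = math.sqrt(dot(pooled, pooled)) * math.sqrt(dot(token, token))
    return 1.0 - dot(pooled, token) / (norm + eps)

def attention_distance(regions, disease_token, sensitive_token):
    """Euclidean distance between two attention maps (assumed distance measure)."""
    a_d = token_attention(regions, disease_token)
    a_s = token_attention(regions, sensitive_token)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a_d, a_s)))

def total_loss(regions, disease_token, sensitive_token, lam=1.0, mu=1.0):
    """Align the disease token, reverse the sign of the alignment term for the
    sensitive token, and reward a large distance between the attention maps."""
    align_disease = alignment_loss(regions, disease_token)
    align_sensitive = alignment_loss(regions, sensitive_token)
    distance = attention_distance(regions, disease_token, sensitive_token)
    return align_disease - mu * align_sensitive - lam * distance

# Toy example: three brain regions with 2-d embeddings, one token of each kind.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
disease_token = [1.0, 0.0]
sensitive_token = [0.0, 1.0]

disease_attn = token_attention(regions, disease_token)
loss = total_loss(regions, disease_token, sensitive_token)
```

In this sketch, minimizing `total_loss` pulls the disease token toward the regions it attends to, pushes the sensitive token away from its attended regions, and separates the two attention maps. The per-token attention vector (`disease_attn` here) is the kind of region-level map the abstract describes visualizing.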
