Hong Qian

Victor

Surrogate-Assisted Evolutionary Reinforcement Learning Based on Autoencoder and Hyperbolic Neural Network

May 26, 2025

Abstract:Evolutionary Reinforcement Learning (ERL), which trains Reinforcement Learning (RL) policies with Evolutionary Algorithms (EAs), has demonstrated enhanced exploration capabilities and greater robustness than traditional policy gradient methods. However, ERL suffers from high computational costs and low search efficiency, as EAs require evaluating numerous candidate policies with expensive simulations, many of which are ineffective and do not contribute meaningfully to the training. One intuitive way to reduce the ineffective evaluations is to adopt surrogates. Unfortunately, existing ERL policies are often modeled as deep neural networks (DNNs) and thus naturally represented as high-dimensional vectors containing millions of weights, which makes building effective surrogates for ERL policies extremely challenging. This paper proposes a novel surrogate-assisted ERL that integrates Autoencoders (AE) and Hyperbolic Neural Networks (HNN). Specifically, AE compresses high-dimensional policies into low-dimensional representations while extracting key features as the inputs for the surrogate. HNN, functioning as a classification-based surrogate model, can learn complex nonlinear relationships from sampled data and enable more accurate pre-selection of the sampled policies without real evaluations. Experiments on 10 Atari and 4 Mujoco games have verified that the proposed method outperforms previous approaches significantly. The search trajectories guided by AE and HNN are also visually demonstrated to be more effective, in terms of both exploration and convergence. This paper not only presents the first learnable policy embedding and surrogate-modeling modules for high-dimensional ERL policies, but also empirically reveals when and why they can be successful.
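
The pre-selection idea described in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: a fixed random linear projection stands in for the trained autoencoder, an arbitrary scoring callable stands in for the hyperbolic neural network, and the names `make_random_projection`, `preselect`, and `surrogate_score` are hypothetical.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def make_random_projection(in_dim: int, out_dim: int, seed: int = 0) -> Callable[[Sequence[float]], Vector]:
    """Return a fixed random linear map (an assumed stand-in for a trained AE encoder)."""
    rng = random.Random(seed)
    weights = [
        [rng.gauss(0.0, 1.0 / in_dim ** 0.5) for _ in range(in_dim)]
        for _ in range(out_dim)
    ]

    def encode(policy: Sequence[float]) -> Vector:
        # Each output coordinate is a dot product of one weight row with the policy vector.
        return [sum(w * x for w, x in zip(row, policy)) for row in weights]

    return encode


def preselect(
    candidates: Sequence[Sequence[float]],
    encode: Callable[[Sequence[float]], Vector],
    surrogate_score: Callable[[Vector], float],
    keep: int,
) -> List[int]:
    """Rank candidates by surrogate score of their embeddings; return indices of the top `keep`."""
    scored = [(surrogate_score(encode(p)), i) for i, p in enumerate(candidates)]
    # Python's sort is stable, so tied scores keep their original relative order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [i for _, i in scored[:keep]]
```

Only the candidates whose indices are returned would then be passed to the expensive simulator, which is where the evaluation savings would come from under these assumptions.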

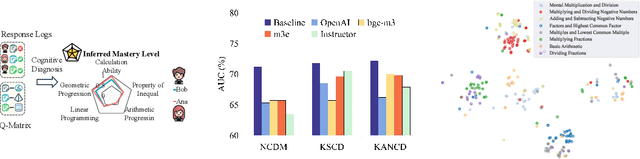

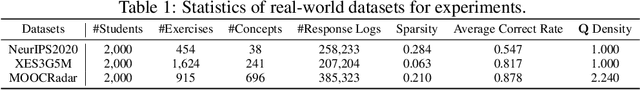

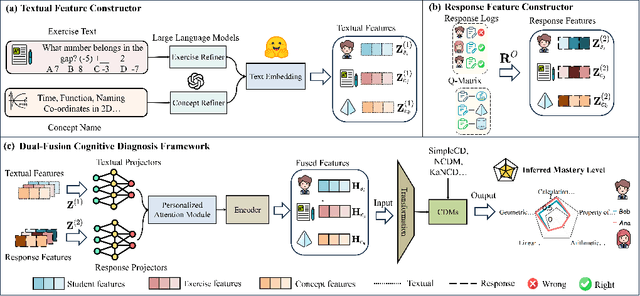

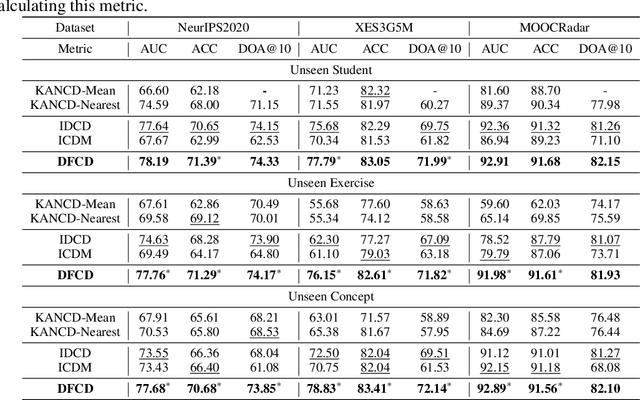

A Dual-Fusion Cognitive Diagnosis Framework for Open Student Learning Environments

Oct 19, 2024

Abstract:The cognitive diagnosis model (CDM) is a fundamental and upstream component in intelligent education. It aims to infer students' mastery levels based on historical response logs. However, existing CDMs usually follow the ID-based embedding paradigm, which often diminishes their effectiveness in open student learning environments. This is mainly because they can hardly infer new students' mastery levels directly or utilize new exercises or knowledge without retraining. Textual semantic information, due to its unified feature space and easy accessibility, can help alleviate this issue. Unfortunately, directly incorporating semantic information may not benefit CDMs, since it does not capture response-relevant features and thus discards the individual characteristics of each student. To this end, this paper proposes a dual-fusion cognitive diagnosis framework (DFCD) to address the challenge of aligning two different modalities, i.e., textual semantic features and response-relevant features. Specifically, in DFCD, we first propose the exercise-refiner and concept-refiner to make the exercises and knowledge concepts more coherent and reasonable via large language models. Then, DFCD encodes the refined features using text embedding models to obtain the semantic information. For response-related features, we propose a novel response matrix to fully incorporate the information within the response logs. Finally, DFCD designs a dual-fusion module to merge the two modal features. The resulting representations support inference in open student learning environments and can also be plugged into existing CDMs. Extensive experiments across real-world datasets show that DFCD achieves superior performance by integrating different modalities and adapts well to open student learning environments.

LLMOPT: Learning to Define and Solve General Optimization Problems from Scratch

Oct 17, 2024

Abstract:Optimization problems are prevalent across various scenarios. Formulating and then solving optimization problems described in natural language often requires highly specialized human expertise, which could block the widespread application of optimization-based decision making. To automate problem formulation and solving, leveraging large language models (LLMs) has emerged as a promising approach. However, this approach suffers from limited optimization generalization. Namely, the accuracy of most current LLM-based methods and the generality of optimization problem types that they can model are still limited. In this paper, we propose a unified learning-based framework called LLMOPT to boost optimization generalization. Starting from the natural language descriptions of optimization problems and a pre-trained LLM, LLMOPT constructs the introduced five-element formulation as a universal model for learning to define diverse optimization problem types. Then, LLMOPT employs multi-instruction tuning to enhance the accuracy and generality of both problem formalization and solver code generation. After that, to prevent hallucinations in LLMs, such as sacrificing solving accuracy to avoid execution errors, model alignment and a self-correction mechanism are adopted in LLMOPT. We evaluate the optimization generalization ability of LLMOPT and compared methods across six real-world datasets covering roughly 20 fields such as health, environment, energy and manufacturing. Extensive experimental results show that LLMOPT is able to model various optimization problem types such as linear/nonlinear programming, mixed integer programming and combinatorial optimization, and achieves a notable 11.08% average solving accuracy improvement compared with the state-of-the-art methods. The code is available at https://github.com/caigaojiang/LLMOPT.

Preference Diffusion for Recommendation

Oct 17, 2024

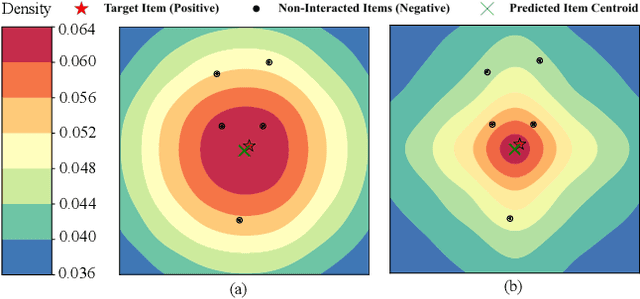

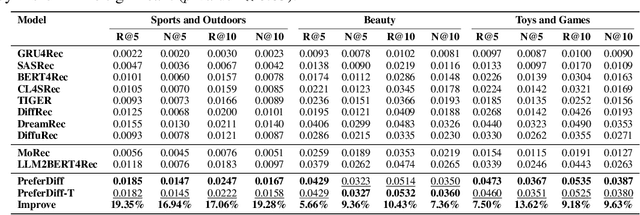

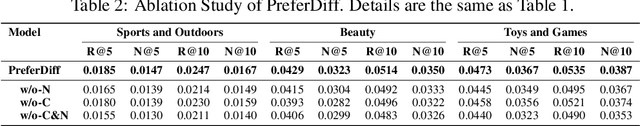

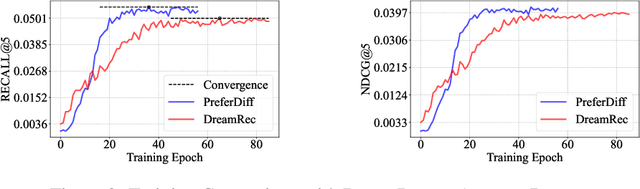

Abstract:Recommender systems predict personalized item rankings based on user preference distributions derived from historical behavior data. Recently, diffusion models (DMs) have gained attention in recommendation for their ability to model complex distributions, yet current DM-based recommenders often rely on traditional objectives like mean squared error (MSE) or recommendation objectives, which are not optimized for personalized ranking tasks or fail to fully leverage DM's generative potential. To address this, we propose PreferDiff, a tailored optimization objective for DM-based recommenders. PreferDiff transforms BPR into a log-likelihood ranking objective and integrates multiple negative samples to better capture user preferences. Specifically, we employ variational inference to handle the intractability by minimizing the variational upper bound, and we replace MSE with cosine error to improve alignment with recommendation tasks. Finally, we balance learning generation and preference to enhance the training stability of DMs. PreferDiff offers three key benefits: it is the first personalized ranking loss designed specifically for DM-based recommenders; it improves ranking performance and speeds up convergence by addressing hard negatives; and we prove that it is theoretically connected to Direct Preference Optimization, which indicates that it has the potential to align user preferences in DM-based recommenders via generative modeling. Extensive experiments across three benchmarks validate its superior recommendation performance and commendable general sequential recommendation capabilities. Our code is available at https://github.com/lswhim/PreferDiff.
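
As background for the BPR objective mentioned in this abstract, the standard pairwise BPR loss can be written as below. This is the textbook form, not PreferDiff's objective; the symbols $\hat{x}_{ui}$ (predicted score of user $u$ for item $i$), $i^{+}$ (an observed item), and $i^{-}$ (a sampled negative item) are notation assumed here for illustration.

```latex
\mathcal{L}_{\mathrm{BPR}}
  = -\log \sigma\!\left(\hat{x}_{u i^{+}} - \hat{x}_{u i^{-}}\right),
\qquad
\sigma(z) = \frac{1}{1 + e^{-z}}
```

The loss shrinks as the observed item is scored further above the negative one, which is the ranking behavior the abstract contrasts with a plain MSE objective.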

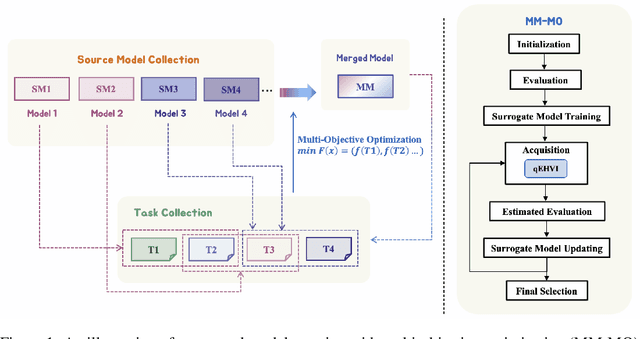

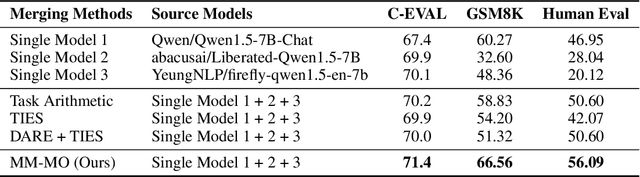

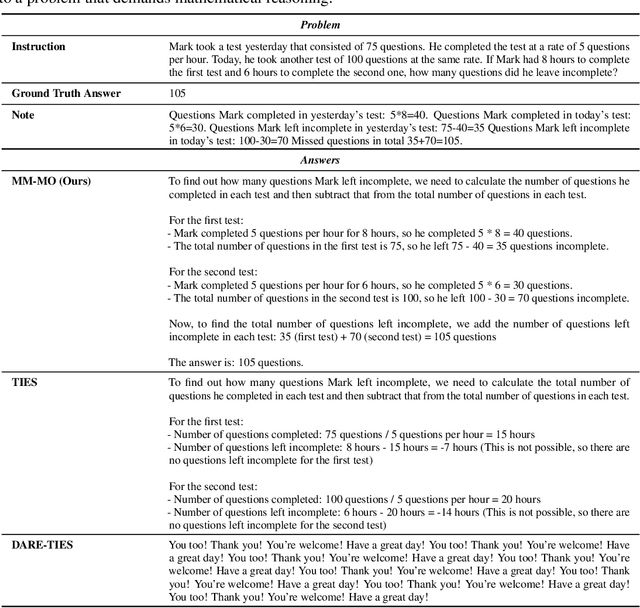

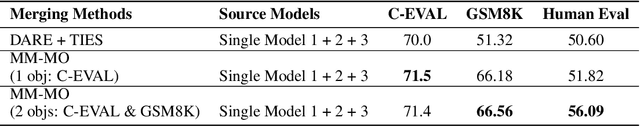

It's Morphing Time: Unleashing the Potential of Multiple LLMs via Multi-objective Optimization

Jun 29, 2024

Abstract:In this paper, we introduce a novel approach for large language model merging via black-box multi-objective optimization algorithms. The goal of model merging is to combine multiple models, each excelling in different tasks, into a single model that outperforms any of the individual source models. However, model merging faces two significant challenges: First, existing methods rely heavily on human intuition and customized strategies. Second, parameter conflicts often arise during merging, and while methods like DARE [1] can alleviate this issue, they tend to stochastically drop parameters, risking the loss of important delta parameters. To address these challenges, we propose the MM-MO method, which automates the search for optimal merging configurations using multi-objective optimization algorithms, eliminating the need for human intuition. During the configuration search, we use estimated performance across multiple diverse tasks as optimization objectives in order to alleviate parameter conflicts between different source models without losing crucial delta parameters. We conducted comparative experiments with other mainstream model merging methods, demonstrating that our method consistently outperforms them. Moreover, our experiments reveal that even task types not explicitly targeted as optimization objectives show performance improvements, indicating that our method enhances the overall potential of the model rather than merely overfitting to specific task types. This approach provides a significant advancement in model merging techniques, offering a robust and plug-and-play solution for integrating diverse models into a unified, high-performing model.
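
The stochastic dropping of delta parameters that this abstract attributes to DARE can be sketched as a drop-and-rescale step on flat parameter lists. This is a toy illustration under stated assumptions, not the MM-MO method and not any library's API: the function name `dare_merge_single`, the flat-list representation, and the seed handling are all hypothetical.

```python
import random
from typing import List, Sequence


def dare_merge_single(
    base: Sequence[float],
    finetuned: Sequence[float],
    drop_rate: float,
    seed: int = 0,
) -> List[float]:
    """Randomly drop delta parameters (finetuned - base) and rescale the survivors."""
    if not 0.0 <= drop_rate < 1.0:
        raise ValueError("drop_rate must be in [0, 1)")
    rng = random.Random(seed)
    # Rescaling by 1 / (1 - p) keeps the expected delta unchanged.
    scale = 1.0 / (1.0 - drop_rate)
    result = []
    for b, f in zip(base, finetuned):
        delta = f - b
        kept = 0.0 if rng.random() < drop_rate else delta * scale
        result.append(b + kept)
    return result
```

Because which deltas survive is random, an important delta can be zeroed out, which is the risk the abstract raises.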

Leveraging Pedagogical Theories to Understand Student Learning Process with Graph-based Reasonable Knowledge Tracing

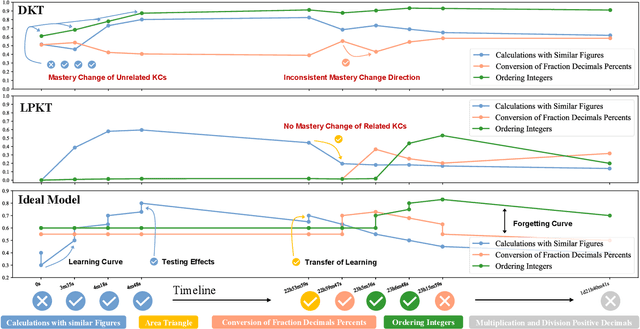

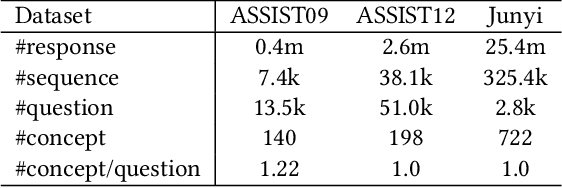

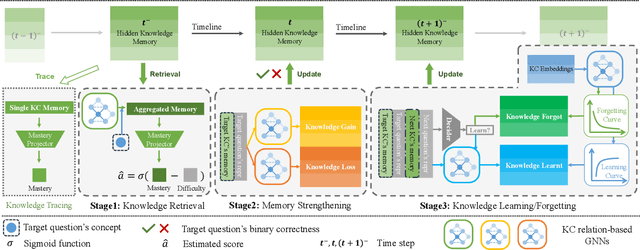

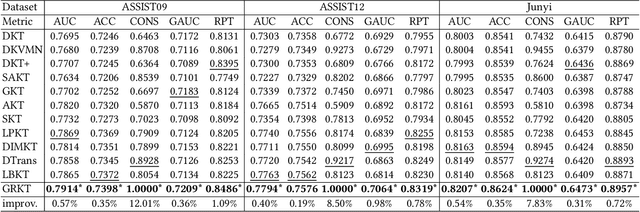

Jun 07, 2024

Abstract:Knowledge tracing (KT) is a crucial task in intelligent education, focusing on predicting students' performance on given questions to trace their evolving knowledge. The advancement of deep learning in this field has led to deep-learning knowledge tracing (DLKT) models that prioritize high predictive accuracy. However, many existing DLKT methods overlook the fundamental goal of tracking students' dynamic knowledge mastery. These models either do not explicitly model knowledge mastery tracing processes or yield unreasonable results that educators find difficult to comprehend and apply in real teaching scenarios. In response, our research conducts a preliminary analysis of mainstream KT approaches to highlight and explain such unreasonableness. We introduce GRKT, a graph-based reasonable knowledge tracing method, to address these issues. By leveraging graph neural networks, our approach delves into the mutual influences of knowledge concepts, offering a more accurate representation of how knowledge mastery evolves throughout the learning process. Additionally, we propose a fine-grained, psychologically motivated three-stage modeling process, consisting of knowledge retrieval, memory strengthening, and knowledge learning/forgetting, to conduct a more reasonable knowledge tracing process. Comprehensive experiments demonstrate that GRKT outperforms eleven baselines across three datasets, not only enhancing predictive accuracy but also generating more reasonable knowledge tracing results. This makes our model a promising advancement for practical implementation in educational settings. The source code is available at https://github.com/JJCui96/GRKT.

Expensive Multi-Objective Bayesian Optimization Based on Diffusion Models

May 14, 2024

Abstract:Multi-objective Bayesian optimization (MOBO) has shown promising performance on various expensive multi-objective optimization problems (EMOPs). However, effectively modeling complex distributions of the Pareto optimal solutions is difficult with limited function evaluations. Existing Pareto set learning algorithms may exhibit considerable instability in such expensive scenarios, leading to significant deviations between the obtained solution set and the Pareto set (PS). In this paper, we propose a novel Composite Diffusion Model based Pareto Set Learning algorithm, namely CDM-PSL, for expensive MOBO. CDM-PSL includes both unconditional and conditional diffusion models for generating high-quality samples. In addition, we introduce an information entropy based weighting method to balance the different objectives of EMOPs. This method is integrated with the guiding strategy, ensuring that all the objectives are appropriately balanced and given due consideration during the optimization process. Extensive experimental results on both synthetic benchmarks and real-world problems demonstrate that our proposed algorithm attains superior performance compared with various state-of-the-art MOBO algorithms.
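
For readers unfamiliar with the Pareto set referred to in this abstract, a minimal sketch of Pareto dominance and non-dominated filtering follows. It assumes all objectives are minimized and is unrelated to CDM-PSL itself; the function names `dominates` and `pareto_front` are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` is no worse than `b` in every objective and strictly better in one (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: Sequence[Point]) -> List[Point]:
    """Keep the points that no other point dominates (quadratic-time brute force)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


objective_values = [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0), (3.0, 3.0), (2.0, 5.0)]
print(pareto_front(objective_values))  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

Here `(3.0, 3.0)` is dominated by `(2.0, 2.0)` and `(2.0, 5.0)` by `(1.0, 4.0)`, so only three points remain.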

Towards Geometry-Aware Pareto Set Learning for Neural Multi-Objective Combinatorial Optimization

May 14, 2024

Abstract:Multi-objective combinatorial optimization (MOCO) problems are prevalent in various real-world applications. Most existing neural methods for MOCO problems rely solely on decomposition and utilize precise hypervolume to enhance diversity. However, these methods often approximate only limited regions of the Pareto front and spend excessive time on diversity enhancement because of ambiguous decomposition and time-consuming hypervolume calculation. To address these limitations, we design a Geometry-Aware Pareto set Learning algorithm named GAPL, which provides a novel geometric perspective for neural MOCO via a Pareto attention model based on hypervolume expectation maximization. In addition, we propose a hypervolume residual update strategy to enable the Pareto attention model to capture both local and non-local information of the Pareto set/front. We also design a novel inference approach to further improve the quality of the solution set and speed up hypervolume calculation and local subset selection. Experimental results on three classic MOCO problems demonstrate that our GAPL outperforms state-of-the-art neural baselines via superior decomposition and efficient diversity enhancement.
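
The hypervolume indicator this abstract relies on can be illustrated for the simplest case: two minimized objectives and a fixed reference point. The sketch below is an exact sweep for that 2-D case only and does not reflect GAPL's hypervolume expectation maximization; the function name `hypervolume_2d` and the reference-point convention are assumptions.

```python
from typing import Sequence, Tuple


def hypervolume_2d(points: Sequence[Tuple[float, float]], ref: Tuple[float, float]) -> float:
    """Area dominated by `points` and bounded by `ref`, with both objectives minimized."""
    # Only points strictly better than the reference in both objectives contribute.
    inside = sorted(p for p in points if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_f2 = ref[1]
    for f1, f2 in inside:
        # Sweeping by increasing f1, a point adds area only if it improves on the best f2 so far.
        if f2 < best_f2:
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


print(hypervolume_2d([(1.0, 4.0), (2.0, 2.0), (4.0, 1.0), (3.0, 3.0)], (5.0, 5.0)))  # 11.0
```

The dominated point `(3.0, 3.0)` contributes nothing, so the result equals the area covered by the three non-dominated points. Exact computation becomes much more expensive as the number of objectives grows, which is the cost the abstract refers to.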

Inductive Cognitive Diagnosis for Fast Student Learning in Web-Based Online Intelligent Education Systems

Apr 17, 2024

Abstract:Cognitive diagnosis aims to gauge students' mastery levels based on their response logs. Serving as a pivotal module in web-based online intelligent education systems (WOIESs), it plays an upstream and fundamental role in downstream tasks like learning item recommendation and computerized adaptive testing. WOIESs are open learning environments where numerous new students constantly register and complete exercises. In WOIESs, efficient cognitive diagnosis is crucial to fast feedback and accelerating student learning. However, existing cognitive diagnosis methods always employ intrinsically transductive student-specific embeddings, which become slow and costly due to retraining when dealing with new students who are unseen during training. To this end, this paper proposes an inductive cognitive diagnosis model (ICDM) for fast inference of new students' mastery levels in WOIESs. Specifically, in ICDM, we propose a novel student-centered graph (SCG). Rather than inferring mastery levels through updating student-specific embeddings, we derive the inductive mastery levels as the aggregated outcomes of students' neighbors in SCG. Namely, SCG shifts the task from finding the most suitable student-specific embedding that fits the response logs to finding the most suitable representations for different node types in SCG, and the latter is more efficient since it no longer requires retraining. To obtain this representation, ICDM uses a construction-aggregation-generation-transformation process to learn the final representations of students, exercises and concepts. Extensive experiments across real-world datasets show that, compared with existing cognitive diagnosis methods, which are always transductive, ICDM is much faster while maintaining competitive inference performance for new students.

On the Opportunities of Green Computing: A Survey

Nov 09, 2023

Abstract:Artificial Intelligence (AI) has achieved significant advancements in technology and research over several decades of development, and is widely used in many areas including computer vision, natural language processing, time-series analysis, speech synthesis, etc. During the age of deep learning, especially with the rise of Large Language Models, much of researchers' attention has gone to pursuing new state-of-the-art (SOTA) results, resulting in ever-increasing model size and computational complexity. The need for high computing power brings higher carbon emissions and undermines research fairness by preventing small or medium-sized research institutions and companies with limited funding from participating in research. To tackle the challenges of computing resources and the environmental impact of AI, Green Computing has become a hot research topic. In this survey, we give a systematic overview of the technologies used in Green Computing. We propose a framework of Green Computing and divide it into four key components: (1) Measures of Greenness, (2) Energy-Efficient AI, (3) Energy-Efficient Computing Systems and (4) AI Use Cases for Sustainability. For each component, we discuss the research progress made and the commonly used techniques to optimize AI efficiency. We conclude that this new research direction has the potential to address the conflicts between resource constraints and AI development. We encourage more researchers to pay attention to this direction and make AI more environmentally friendly.
