Jia Guo

A Survey of Pinching-Antenna Systems (PASS)

Jan 26, 2026

Abstract:The pinching-antenna system (PASS), recently proposed as a flexible-antenna technology, has been regarded as a promising solution for several challenges in next-generation wireless networks. It provides large-scale antenna reconfiguration, establishes stable line-of-sight links, mitigates signal blockage, and exploits near-field advantages through its distinctive architecture. This article aims to present a comprehensive overview of the state of the art in PASS. The fundamental principles of PASS are first discussed, including its hardware architecture, circuit and physical models, and signal models. Several emerging PASS designs, such as segmented PASS (S-PASS), center-fed PASS (C-PASS), and multi-mode PASS (M-PASS), are subsequently introduced, and their design features are discussed. In addition, the properties and promising applications of PASS for wireless sensing are reviewed. On this basis, recent progress in the performance analysis of PASS for both communications and sensing is surveyed, and the performance gains achieved by PASS are highlighted. Existing research contributions in optimization and machine learning are also summarized, with the practical challenges of beamforming and resource allocation being identified in relation to the unique transmission structure and propagation characteristics of PASS. Finally, several variants of PASS are presented, and key implementation challenges that remain open for future study are discussed.

Native Intelligence Emerges from Large-Scale Clinical Practice: A Retinal Foundation Model with Deployment Efficiency

Dec 16, 2025

Abstract:Current retinal foundation models remain constrained by curated research datasets that lack authentic clinical context, and require extensive task-specific optimization for each application, limiting their deployment efficiency in low-resource settings. Here, we show that these barriers can be overcome by building clinical native intelligence directly from real-world medical practice. Our key insight is that large-scale telemedicine programs, where expert centers provide remote consultations across distributed facilities, represent a natural reservoir for learning clinical image interpretation. We present ReVision, a retinal foundation model that learns from the natural alignment between 485,980 color fundus photographs and their corresponding diagnostic reports, accumulated through a decade-long telemedicine program spanning 162 medical institutions across China. Through extensive evaluation across 27 ophthalmic benchmarks, we demonstrate that ReVision enables deployment efficiency with minimal local resources. Without any task-specific training, ReVision achieves zero-shot disease detection with an average AUROC of 0.946 across 12 public benchmarks and 0.952 on 3 independent clinical cohorts. When minimal adaptation is feasible, ReVision matches extensively fine-tuned alternatives while requiring orders of magnitude fewer trainable parameters and labeled examples. The learned representations also transfer effectively to new clinical sites, imaging domains, imaging modalities, and systemic health prediction tasks. In a prospective reader study with 33 ophthalmologists, ReVision's zero-shot assistance improved diagnostic accuracy by 14.8% across all experience levels. These results demonstrate that clinical native intelligence can be directly extracted from clinical archives without any further annotation to build medical AI systems suited to various low-resource settings.
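
For readers less familiar with the AUROC metric quoted in this abstract, the following is a minimal sketch of how it can be computed from detection scores. It uses the standard pairwise-ranking definition (the fraction of positive/negative pairs in which the positive case receives the higher score, with ties counted as half). The function name `auroc` and the toy scores are illustrative assumptions and are not taken from the paper or its evaluation code.

```python
def auroc(scores, labels):
    """Area under the ROC curve via pairwise ranking.

    scores: detection scores, higher means "more likely diseased".
    labels: 1 for a positive (diseased) case, 0 for a negative case.
    Illustrative helper only; not the paper's evaluation code.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")

    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Toy example: one of the four positive/negative pairs is mis-ranked.
    print(auroc([0.9, 0.2, 0.8, 0.1], [1, 1, 0, 0]))
```

The quadratic loop is fine for small cohorts; for large benchmarks a rank-based computation would usually be preferred.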

Collaborative Reconstruction and Repair for Multi-class Industrial Anomaly Detection

Dec 12, 2025

Abstract:Industrial anomaly detection is a challenging open-set task that aims to identify unknown anomalous patterns deviating from the normal data distribution. To avoid the significant memory consumption and limited generalizability brought by building separate models per class, we focus on developing a unified framework for multi-class anomaly detection. However, under this challenging setting, conventional reconstruction-based networks often suffer from an identity mapping problem, where they directly replicate input features regardless of whether they are normal or anomalous, resulting in detection failures. To address this issue, this study proposes a novel framework termed Collaborative Reconstruction and Repair (CRR), which recasts reconstruction as repair. First, we optimize the decoder to reconstruct normal samples while repairing synthesized anomalies. Consequently, it generates distinct representations for anomalous regions and similar representations for normal areas compared to the encoder's output. Second, we implement feature-level random masking to ensure that the representations from the decoder contain sufficient local information. Finally, to minimize detection errors arising from the discrepancies between feature representations from the encoder and decoder, we train a segmentation network supervised by synthetic anomaly masks, thereby enhancing localization performance. Extensive experiments on industrial datasets demonstrate that CRR effectively mitigates the identity mapping issue and achieves state-of-the-art performance in multi-class industrial anomaly detection.

Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

Oct 21, 2025

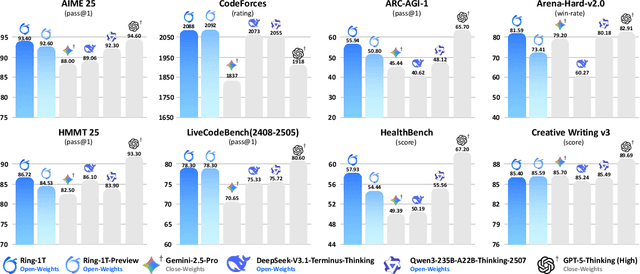

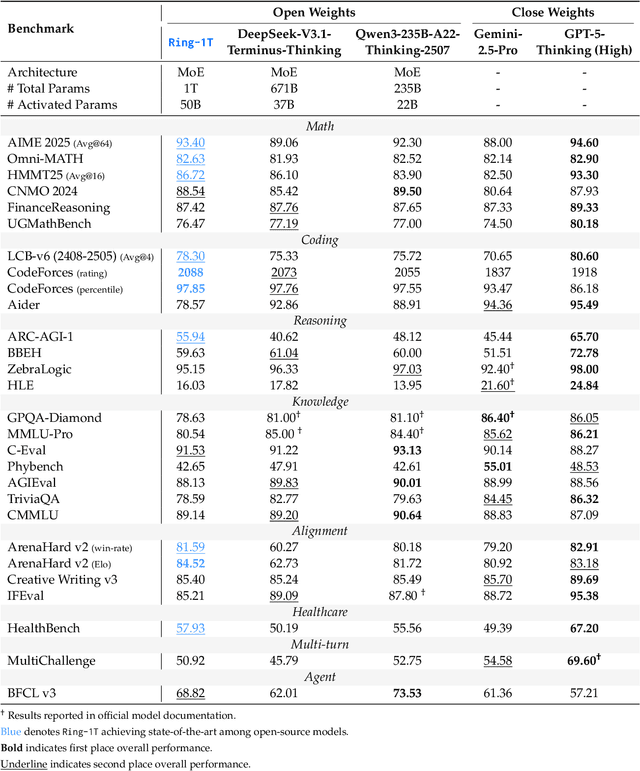

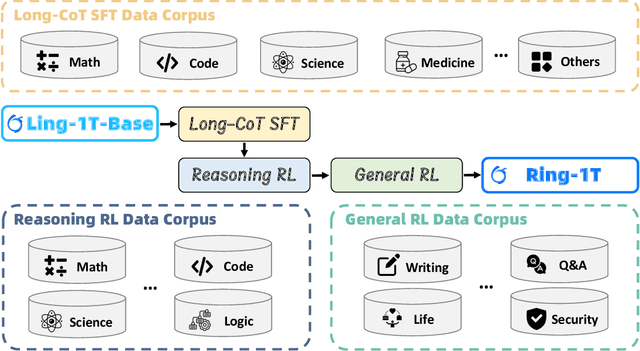

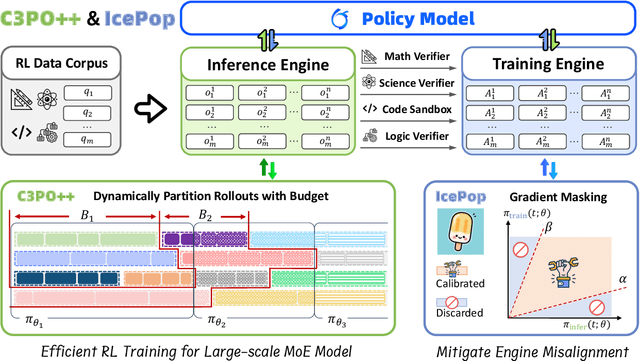

Abstract:We present Ring-1T, the first open-source, state-of-the-art thinking model with trillion-scale parameters. It features 1 trillion total parameters and activates approximately 50 billion per token. Training such models at a trillion-parameter scale introduces unprecedented challenges, including training-inference misalignment, inefficiencies in rollout processing, and bottlenecks in the RL system. To address these, we pioneer three interconnected innovations: (1) IcePop stabilizes RL training via token-level discrepancy masking and clipping, resolving instability from training-inference mismatches; (2) C3PO++ improves resource utilization for long rollouts under a token budget by dynamically partitioning them, thereby obtaining high time efficiency; and (3) ASystem, a high-performance RL framework designed to overcome the systemic bottlenecks that impede trillion-parameter model training. Ring-1T delivers breakthrough results across critical benchmarks: 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, and 55.94 on ARC-AGI-v1. Notably, it attains a silver medal-level result on the IMO-2025, underscoring its exceptional reasoning capabilities. By releasing the complete 1T-parameter MoE model, we provide the research community with direct access to cutting-edge reasoning capabilities. This contribution marks a significant milestone in democratizing large-scale reasoning intelligence and establishes a new baseline for open-source model performance.

Pinching-Antenna Systems (PASS): A Tutorial

Aug 11, 2025

Abstract:Pinching antenna systems (PASS) present a breakthrough among the flexible-antenna technologies, and distinguish themselves by facilitating large-scale antenna reconfiguration, line-of-sight creation, scalable implementation, and near-field benefits, thus bringing wireless communications from the last mile to the last meter. A comprehensive tutorial is presented in this paper. First, the fundamentals of PASS are discussed, including PASS signal models, hardware models, power radiation models, and pinching antenna activation methods. Building upon this, the information-theoretic capacity limits achieved by PASS are characterized, and several typical performance metrics of PASS-based communications are analyzed to demonstrate its superiority over conventional antenna technologies. Next, the pinching beamforming design is investigated. The corresponding power scaling law is first characterized. For the joint transmit and pinching design in the general multiple-waveguide case, 1) a pair of transmission strategies is proposed for PASS-based single-user communications to validate the superiority of PASS, namely sub-connected and fully connected structures; and 2) three practical protocols are proposed for facilitating PASS-based multi-user communications, namely waveguide switching, waveguide division, and waveguide multiplexing. A possible implementation of PASS in wideband communications is further highlighted. Moreover, the channel state information acquisition in PASS is elaborated with a pair of promising solutions. To overcome the high complexity and suboptimality inherent in conventional convex-optimization-based approaches, machine-learning-based methods for operating PASS are also explored, focusing on selected deep neural network architectures and training algorithms. Finally, several promising applications of PASS in next-generation wireless networks are highlighted.

When Attention is Beneficial for Learning Wireless Resource Allocation Efficiently?

Jul 03, 2025

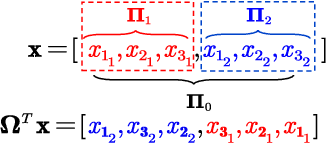

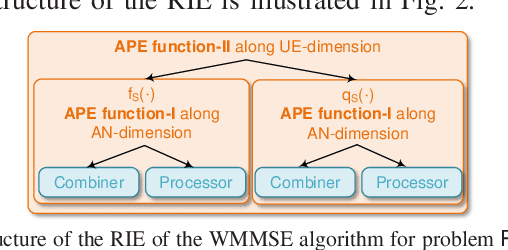

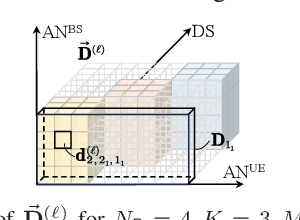

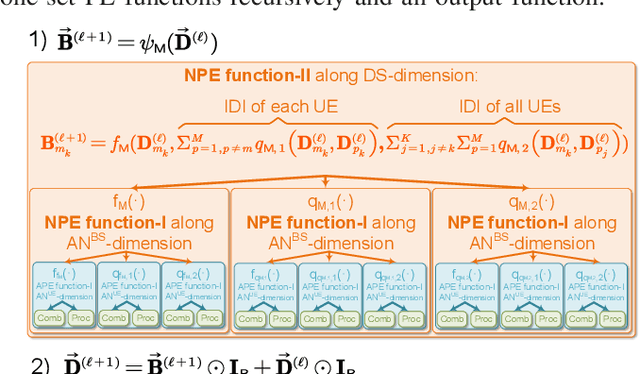

Abstract:Owing to the use of the attention mechanism to leverage the dependency across tokens, Transformers are efficient for natural language processing. By harnessing the permutation properties that broadly exist in resource allocation policies, each mapping measurable environmental parameters (e.g., channel matrix) to optimized variables (e.g., precoding matrix), graph neural networks (GNNs) are promising for learning these policies efficiently in terms of scalability and generalizability. To reap the benefits of both architectures, there is a recent trend of incorporating the attention mechanism into GNNs for learning wireless policies. Nevertheless, is the attention mechanism really needed for resource allocation? In this paper, we strive to answer this question by analyzing the structures of functions defined on sets and numerical algorithms, given that the permutation properties of wireless policies are induced by the involved sets (say user set). In particular, we prove that the permutation equivariant functions on a single set can be recursively expressed by two types of functions: one involves attention, and the other does not. We proceed to re-express the numerical algorithms for optimizing several representative resource allocation problems in recursive forms. We find that when interference (say multi-user or inter-data stream interference) is not reflected in the measurable parameters of a policy, attention needs to be used to model the interference. With this insight, we establish a framework for designing GNNs by aligning them with these structures. By taking reconfigurable intelligent surface-aided hybrid precoding as an example, the learning efficiency of the proposed GNN is validated via simulations.
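
To make the notion of permutation equivariance on a single set concrete, here is a minimal sketch of two one-layer functions, one that aggregates the set with a uniform mean (no attention) and one that aggregates with softmax attention weights. It is not the recursive construction proved in the paper; the layer forms, the coefficients `a` and `b`, and all function names are assumptions chosen for illustration. The check at the end verifies that permuting the input set and then applying a layer gives the same result as applying the layer and then permuting its output.

```python
import math
import random


def mean_pool_layer(xs, a=0.7, b=0.3):
    """No attention: each output mixes its own input with a uniform mean over the set."""
    dim = len(xs[0])
    mean = [sum(x[d] for x in xs) / len(xs) for d in range(dim)]
    return [[a * x[d] + b * mean[d] for d in range(dim)] for x in xs]


def attention_layer(xs):
    """With attention: each output is a softmax-weighted sum over the set."""
    out = []
    for q in xs:
        scores = [sum(qd * kd for qd, kd in zip(q, k)) for k in xs]
        top = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - top) for s in scores]
        total = sum(weights)
        out.append([
            sum(w * k[d] for w, k in zip(weights, xs)) / total
            for d in range(len(q))
        ])
    return out


def is_equivariant(layer, xs, perm, tol=1e-9):
    """Check layer(permute(xs)) == permute(layer(xs)) up to rounding error."""
    permute_then_layer = layer([xs[i] for i in perm])
    out = layer(xs)
    layer_then_permute = [out[i] for i in perm]
    return all(
        abs(u - v) <= tol
        for r1, r2 in zip(permute_then_layer, layer_then_permute)
        for u, v in zip(r1, r2)
    )


if __name__ == "__main__":
    rng = random.Random(0)
    users = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(5)]  # e.g. 5 users
    perm = [2, 0, 4, 1, 3]
    print(is_equivariant(mean_pool_layer, users, perm))
    print(is_equivariant(attention_layer, users, perm))
```

Both layers pass the check; the difference is that the attention layer weights each other element by its relation to the current one, which is the kind of pairwise dependence the abstract associates with modeling interference.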

Ring-lite: Scalable Reasoning via C3PO-Stabilized Reinforcement Learning for LLMs

Jun 18, 2025

Abstract:We present Ring-lite, a Mixture-of-Experts (MoE)-based large language model optimized via reinforcement learning (RL) to achieve efficient and robust reasoning capabilities. Built upon the publicly available Ling-lite model, a 16.8 billion parameter model with 2.75 billion activated parameters, our approach matches the performance of state-of-the-art (SOTA) small-scale reasoning models on challenging benchmarks (e.g., AIME, LiveCodeBench, GPQA-Diamond) while activating only one-third of the parameters required by comparable models. To accomplish this, we introduce a joint training pipeline integrating distillation with RL, revealing undocumented challenges in MoE RL training. First, we identify optimization instability during RL training, and we propose Constrained Contextual Computation Policy Optimization (C3PO), a novel approach that enhances training stability and improves computational throughput via an algorithm-system co-design methodology. Second, we empirically demonstrate that selecting distillation checkpoints based on entropy loss for RL training, rather than validation metrics, yields superior performance-efficiency trade-offs in subsequent RL training. Finally, we develop a two-stage training paradigm to harmonize multi-domain data integration, addressing domain conflicts that arise in training with mixed datasets. We will release the model, dataset, and code.

Search is All You Need for Few-shot Anomaly Detection

Apr 16, 2025

Abstract:Few-shot anomaly detection (FSAD) has emerged as a crucial yet challenging task in industrial inspection, where normal distribution modeling must be accomplished with only a few normal images. While existing approaches typically employ multi-modal foundation models combining language and vision modalities for prompt-guided anomaly detection, these methods often demand sophisticated prompt engineering and extensive manual tuning. In this paper, we demonstrate that a straightforward nearest-neighbor search framework can surpass state-of-the-art performance in both single-class and multi-class FSAD scenarios. Our proposed method, VisionAD, consists of four simple yet essential components: (1) scalable vision foundation models that extract universal and discriminative features; (2) dual augmentation strategies: support augmentation to enhance feature matching adaptability and query augmentation to address the oversights of single-view prediction; (3) multi-layer feature integration that captures both low-frequency global context and high-frequency local details with minimal computational overhead; and (4) a class-aware visual memory bank enabling efficient one-for-all multi-class detection. Extensive evaluations across MVTec-AD, VisA, and Real-IAD benchmarks demonstrate VisionAD's exceptional performance. Using only 1 normal image as support, our method achieves remarkable image-level AUROC scores of 97.4%, 94.8%, and 70.8% respectively, outperforming current state-of-the-art approaches by significant margins (+1.6%, +3.2%, and +1.4%). The training-free nature and superior few-shot capabilities of VisionAD make it particularly appealing for real-world applications where samples are scarce or expensive to obtain. Code is available at https://github.com/Qiqigeww/VisionAD.
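
The nearest-neighbor idea described in this abstract can be illustrated with a minimal sketch. It is not the VisionAD implementation (see the repository linked above for that): feature extraction, augmentation, and multi-layer integration are omitted, patch features are plain Python lists, the class of the query is assumed to be known, and the names `build_memory_bank`, `patch_scores`, and `image_score` are hypothetical. Each query patch is scored by its cosine distance to the closest normal patch stored for its class, and the image-level score is taken as the maximum patch score.

```python
import math


def l2_normalize(v):
    """Scale a feature vector to unit length (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def cosine_distance(u, v):
    """1 - cosine similarity for two unit-length vectors."""
    return 1.0 - sum(a * b for a, b in zip(u, v))


def build_memory_bank(support_features_by_class):
    """Store normalized patch features of the few normal support images, keyed by class."""
    return {
        cls: [l2_normalize(f) for f in feats]
        for cls, feats in support_features_by_class.items()
    }


def patch_scores(query_patches, bank, cls):
    """Anomaly score per patch: distance to the nearest normal patch of the same class."""
    memory = bank[cls]
    return [
        min(cosine_distance(l2_normalize(q), m) for m in memory)
        for q in query_patches
    ]


def image_score(query_patches, bank, cls):
    """Image-level score: the most anomalous patch decides (an assumed pooling choice)."""
    return max(patch_scores(query_patches, bank, cls))


if __name__ == "__main__":
    # Toy 2-D "features": two normal patch directions for a hypothetical class.
    bank = build_memory_bank({"bottle": [[1.0, 0.0], [0.0, 1.0]]})
    print(image_score([[2.0, 0.0], [0.0, 3.0]], bank, "bottle"))  # matches normal patches
    print(image_score([[1.0, 1.0]], bank, "bottle"))              # lies between them
```

No training step is involved: adding a class only requires inserting its support features into the bank, which is consistent with the training-free, one-for-all setting the abstract describes.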

Holistic Capability Preservation: Towards Compact Yet Comprehensive Reasoning Models

Apr 09, 2025

Abstract:This technical report presents Ring-Lite-Distill, a lightweight reasoning model derived from our open-source Mixture-of-Experts (MoE) Large Language Model (LLM) Ling-Lite. This study demonstrates that through meticulous high-quality data curation and ingenious training paradigms, the compact MoE model Ling-Lite can be further trained to achieve exceptional reasoning capabilities, while maintaining its parameter-efficient architecture with only 2.75 billion activated parameters, establishing an efficient lightweight reasoning architecture. In particular, in constructing this model, we have not merely focused on enhancing advanced reasoning capabilities, exemplified by high-difficulty mathematical problem solving, but rather aimed to develop a reasoning model with more comprehensive competency coverage. Our approach ensures coverage across reasoning tasks of varying difficulty levels while preserving generic capabilities, such as instruction following, tool use, and knowledge retention. We show that Ring-Lite-Distill's reasoning ability reaches a level comparable to DeepSeek-R1-Distill-Qwen-7B, while its general capabilities significantly surpass those of DeepSeek-R1-Distill-Qwen-7B. The models are accessible at https://huggingface.co/inclusionAI

Learning Precoding in Multi-user Multi-antenna Systems: Transformer or Graph Transformer?

Mar 04, 2025

Abstract:Transformers have been designed for channel acquisition tasks such as channel prediction and other tasks such as precoding, while graph neural networks (GNNs) have been demonstrated to be efficient for learning a multitude of communication tasks. Nonetheless, whether or not Transformers are efficient for tasks other than channel acquisition and how to reap the benefits of both architectures are less understood. In this paper, we take learning precoding policies in multi-user multi-antenna systems as an example to answer the questions. We notice that a Transformer tailored for precoding can reflect multiuser interference, which is essential for its generalizability to the number of users. Yet the tailored Transformer can leverage only part of the permutation property of precoding policies and hence is not generalizable to the number of antennas, the same as a GNN learning over a homogeneous graph. To provide useful insight, we establish the relation between Transformers and the GNNs that learn over heterogeneous graphs. Based on the relation, we propose Graph Transformers, namely 2D- and 3D-Gformers, for exploiting the permutation properties of baseband precoding and hybrid precoding policies. The learning performance, inference and training complexity, and size-generalizability of the Gformers are evaluated and compared with Transformers and GNNs via simulations.
