Xiaoxia Xu

Multi-Mode Pinching Antenna Systems Enabled Multi-User Communications

Jan 28, 2026

Abstract: This paper proposes a novel multi-mode pinching-antenna systems (PASS) framework. Multiple data streams can be transmitted within a single waveguide through multiple guided modes, thus facilitating efficient multi-user communications through mode-domain multiplexing. A physics model is derived, which reveals the mode-selective power radiation feature of pinching antennas (PAs). A two-mode PASS enabled two-user downlink communication system is investigated. Considering the mode selectivity of PA power radiation, a practical PA grouping scheme is proposed, where each PA group is matched with one specific guided mode and mainly radiates its signal sequentially. Depending on whether a guided mode leaks power to unmatched PAs, the proposed PA grouping scheme operates in either the non-leakage or the weak-leakage regime. Based on this, the baseband beamforming and PA locations are jointly optimized to maximize the sum rate, subject to each user's minimum rate requirement. 1) A simple two-PA case in the non-leakage regime is first considered. To solve the formulated problem, a channel-orthogonality-based solution is proposed. The channel orthogonality is ensured by large-scale and wavelength-scale equality constraints on PA locations. Thus, the optimal beamforming reduces to maximum-ratio transmission (MRT). Moreover, the optimal PA locations are obtained via a Newton-based one-dimensional search algorithm that enforces the two-scale PA-location constraints by Newton's method. 2) A general multi-PA case in both the non-leakage and weak-leakage regimes is further considered. A low-complexity particle-swarm optimization with zero-forcing beamforming (PSO-ZF) algorithm is developed to effectively tackle the highly oscillatory and strongly coupled problem. Simulation results demonstrate the superiority of the proposed multi-mode PASS over conventional single-mode PASS and fixed-antenna structures.
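Why channel orthogonality makes MRT optimal can be seen from a generic linear-precoding sketch. This assumes a narrowband multi-user downlink with an effective baseband channel matrix `H` (row k is user k's channel); it does not reproduce the paper's multi-mode channel model, and the function names are illustrative. When the rows of `H` are orthogonal, the zero-forcing (ZF) pseudo-inverse directions coincide with the MRT directions, so MRT is already interference-free.

```python
import numpy as np

def mrt_beamformer(H, powers):
    """MRT: user k's beam is the normalized conjugate of its own channel.
    H: (K, N) complex matrix, row k is user k's channel h_k^T.
    powers: length-K per-user transmit powers."""
    W = H.conj().T                                    # (N, K), column k is h_k^*
    W = W / np.linalg.norm(W, axis=0, keepdims=True)  # unit-norm columns
    return W * np.sqrt(powers)                        # scale column k by sqrt(p_k)

def zf_beamformer(H, powers):
    """ZF: pseudo-inverse columns null inter-user interference (needs K <= N)."""
    W = np.linalg.pinv(H)                             # (N, K), H @ W = I for full row rank H
    W = W / np.linalg.norm(W, axis=0, keepdims=True)
    return W * np.sqrt(powers)

def sum_rate(H, W, noise_power):
    """Sum rate (bit/s/Hz) with single-user decoding and interference treated as noise."""
    G = np.abs(H @ W) ** 2                            # G[k, j]: power of beam j at user k
    signal = np.diag(G)
    interference = G.sum(axis=1) - signal
    return float(np.sum(np.log2(1 + signal / (interference + noise_power))))
```

For a two-user channel with orthogonal rows, such as `H = np.array([[1, 1j], [1, -1j]]) / np.sqrt(2)`, both beamformers return the same matrix and the cross terms of `H @ W` vanish.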

A Survey of Pinching-Antenna Systems (PASS)

Jan 26, 2026

Abstract: The pinching-antenna system (PASS), recently proposed as a flexible-antenna technology, has been regarded as a promising solution to several challenges in next-generation wireless networks. It provides large-scale antenna reconfiguration, establishes stable line-of-sight links, mitigates signal blockage, and exploits near-field advantages through its distinctive architecture. This article aims to present a comprehensive overview of the state of the art in PASS. The fundamental principles of PASS are first discussed, including its hardware architecture, circuit and physical models, and signal models. Several emerging PASS designs, such as segmented PASS (S-PASS), center-fed PASS (C-PASS), and multi-mode PASS (M-PASS), are subsequently introduced, and their design features are discussed. In addition, the properties and promising applications of PASS for wireless sensing are reviewed. On this basis, recent progress in the performance analysis of PASS for both communications and sensing is surveyed, and the performance gains achieved by PASS are highlighted. Existing research contributions in optimization and machine learning are also summarized, and the practical challenges of beamforming and resource allocation arising from the unique transmission structure and propagation characteristics of PASS are identified. Finally, several variants of PASS are presented, and key implementation challenges that remain open for future study are discussed.

User Localization and Channel Estimation for Pinching-Antenna Systems (PASS)

Dec 16, 2025

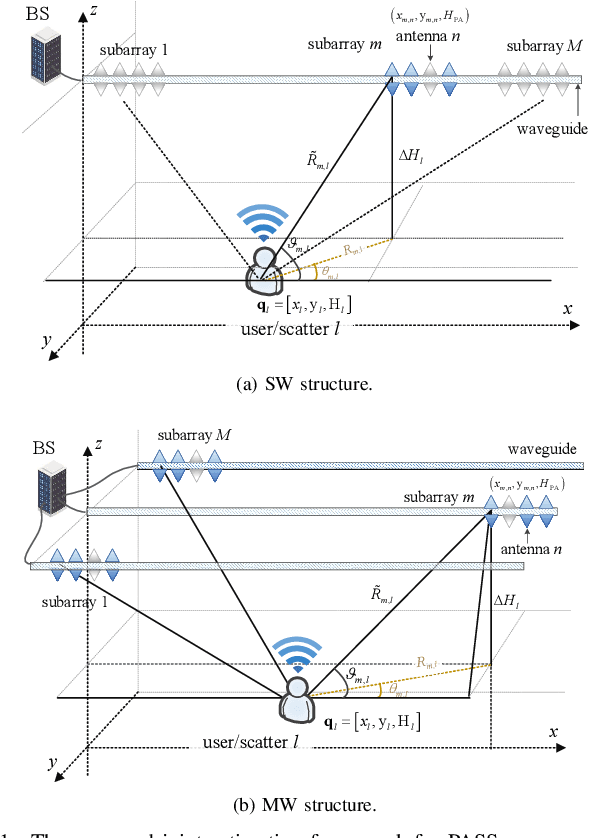

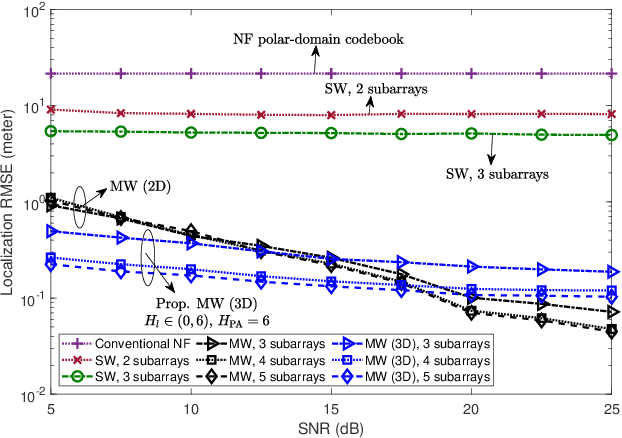

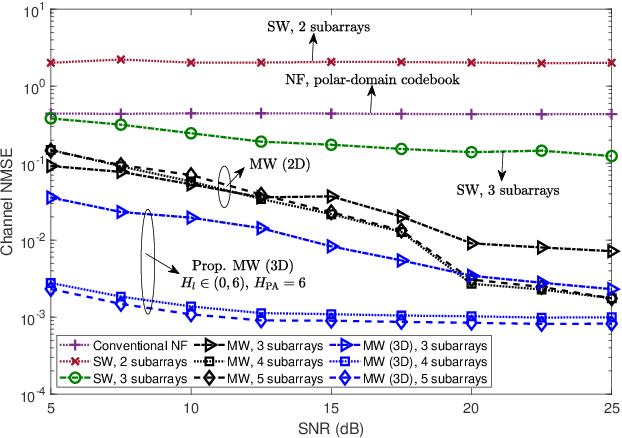

Abstract: This letter proposes a novel user localization and channel estimation framework for pinching-antenna systems (PASS), where pinching antennas are grouped into subarrays on each waveguide to cooperatively estimate user/scatterer locations and thereby reconstruct the channels. Both single-waveguide (SW) and multi-waveguide (MW) structures are considered. SW consists of multiple alternately activated subarrays, while MW deploys one subarray on each waveguide to enable concurrent subarray measurements. For 2D scenarios with a fixed user/scatterer height, an orthogonal matching pursuit-based geometry-consistent localization (OMP-GCL) algorithm is proposed, which leverages inter-subarray geometric relationships and compressed sensing for precise estimation. Theoretical analysis of the Cramér-Rao lower bound (CRLB) demonstrates that: 1) the estimation accuracy can be improved by increasing geometric diversity through multi-subarray deployment; and 2) SW provides limited geometric diversity within a 180° half space and leads to angle ambiguity, while MW enables full-space observations and reduces overhead. The OMP-GCL algorithm is further extended to 3D scenarios, where user and scatterer heights are also estimated. Numerical results validate the theoretical analysis and verify that MW achieves centimeter-level and decimeter-level localization accuracy in 2D and 3D scenarios, respectively, with only three waveguides.
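The compressed-sensing core that OMP-GCL builds on is plain orthogonal matching pursuit. The sketch below is generic OMP only, assuming a dictionary `A` whose columns are hypothetical array responses for a grid of candidate user/scatterer locations; the letter's geometry-consistency step across subarrays is not reproduced here.

```python
import numpy as np

def omp(A, y, sparsity):
    """Orthogonal matching pursuit: greedily pick `sparsity` columns of A to explain y.
    A: (M, G) dictionary, e.g. responses for G candidate grid locations (assumed).
    y: length-M measurement vector.
    Returns the selected column indices and their least-squares coefficients."""
    residual = y.copy()
    support = []
    coef = np.zeros(0, dtype=complex)
    for _ in range(sparsity):
        # Column most correlated with what is still unexplained
        scores = np.abs(A.conj().T @ residual)
        if support:
            scores[support] = 0.0  # never reselect a column
        support.append(int(np.argmax(scores)))
        # Jointly re-fit all selected columns (the "orthogonal" step)
        As = A[:, support]
        coef, *_ = np.linalg.lstsq(As, y, rcond=None)
        residual = y - As @ coef
    return support, coef
```

Each selected index maps back to a grid location, so in a localization setting the support plays the role of the coarse position estimates that a geometry-aware step would then refine.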

Enabling Wireless Power Transfer (WPT) in Pinching Antenna Systems (PASS)

Nov 14, 2025

Abstract: A novel pinching antenna system (PASS) enabled wireless power transfer (WPT) framework is proposed, where energy harvesting receivers (EHRs) and information decoding receivers (IDRs) coexist. By activating pinching antennas (PAs) near both types of receivers and flexibly adjusting the PAs' power radiation ratios, both energy harvesting efficiency and communication quality can be enhanced. A bi-level optimization problem is formulated to overcome the strong coupling between optimization variables. The upper level jointly optimizes the transmit beamforming, PA positions, and the feasible interval of power radiation ratios to maximize power conversion efficiency (PCE) under rate requirements, while the lower level refines the power radiation ratios to maximize the sum rate. Efficient solutions are developed for both two-user and multi-user scenarios. 1) For the two-user case, where an EHR and an IDR coexist, alternating optimization (AO)-based and weighted minimum mean square error (WMMSE)-based algorithms are developed to obtain stationary solutions for the transmit beamforming, PA positions, and power radiation ratios. 2) For the multi-user case, a quadratic transform-Lagrangian dual transform (QT-LDT) algorithm is proposed to iteratively update the PCE and sum rate by optimizing PA positions and power radiation ratios individually. Closed-form solutions are derived for both maximization problems. Numerical simulation results demonstrate that the proposed PASS-WPT framework significantly outperforms conventional MIMO and the baseline PASS with fixed power radiation: i) compared with conventional MIMO and the baseline PASS, it improves the PCE of EHRs by 81.45% and 43.19%, respectively; and ii) it increases the sum rate of IDRs by 77.81% and 31.91%, respectively.

LLM Enabled Beam Training for Pinching Antenna Systems (PASS)

Nov 12, 2025

Abstract: To enable intelligent beam training, a large language model (LLM)-enabled beam training framework is proposed for the pinching antenna system (PASS) in downlink multi-user multiple-input multiple-output (MIMO) communications. A novel LLM-based supervised learning mechanism for beam training is developed, allowing context-aware and environment-adaptive probing for PASS to reduce overhead. Both single-user and multi-user cases are considered. 1) For the single-user case, the LLM-based pinching beamforming codebook generation problem is formulated to maximize the beamforming gain. Then, the optimal transmit beamforming is obtained by maximum ratio transmission (MRT). 2) For the multi-user case, a joint codebook generation and beam selection problem is formulated based on the system sum rate under minimum mean square error (MMSE) transmit beamforming. The training labels for pinching beamforming are constructed by selecting, from each user's Top-S candidate beams, the beam combination that maximizes system performance. Based on pretrained Generative Pre-trained Transformers (GPTs), the LLM is trained in an end-to-end fashion to minimize the cross-entropy loss. Simulation results demonstrate that: i) for the single-user case, the proposed LLM-enabled PASS attains over 95% Top-1 accuracy in beam selection and achieves a 51.92% improvement in beamforming gain compared with the conventional method; and ii) for the multi-user case, the proposed LLM-enabled PASS framework significantly outperforms both the LLM-based massive MIMO and conventional PASS beam training, achieving up to 57.14% and 33.33% improvements in sum rate, respectively.
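The label-construction step described above (pick the best combination from each user's Top-S candidates) amounts to an exhaustive search over S^K combinations for K users. The sketch below assumes a hypothetical `sum_rate` callback standing in for the MMSE-precoded sum-rate evaluation, which the abstract does not specify; `top_s` and `best_beam_combination` are illustrative names.

```python
import itertools
from typing import Callable, List, Sequence, Tuple

def top_s(gains: Sequence[float], s: int) -> List[int]:
    """Indices of the s codebook beams with the largest gain for one user."""
    return sorted(range(len(gains)), key=lambda i: gains[i], reverse=True)[:s]

def best_beam_combination(
    candidates: Sequence[Sequence[int]],
    sum_rate: Callable[[Tuple[int, ...]], float],
) -> Tuple[Tuple[int, ...], float]:
    """Score every combination that takes one beam from each user's Top-S list
    and return the best one, which serves as the training label.
    candidates[k]: user k's Top-S codebook indices.
    sum_rate: hypothetical evaluator of a beam combination (e.g. MMSE sum rate)."""
    best_combo, best_val = None, float("-inf")
    for combo in itertools.product(*candidates):  # S**K combinations in total
        val = sum_rate(combo)
        if val > best_val:
            best_combo, best_val = combo, val
    return best_combo, best_val
```

Restricting each user to its Top-S beams keeps the search at S^K evaluations instead of |codebook|^K, which is what makes offline label generation tractable.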

Pinching-Antenna Systems (PASS): A Tutorial

Aug 11, 2025

Abstract: Pinching antenna systems (PASS) represent a breakthrough among flexible-antenna technologies and distinguish themselves by facilitating large-scale antenna reconfiguration, line-of-sight creation, scalable implementation, and near-field benefits, thus bringing wireless communications from the last mile to the last meter. A comprehensive tutorial is presented in this paper. First, the fundamentals of PASS are discussed, including PASS signal models, hardware models, power radiation models, and pinching antenna activation methods. Building upon this, the information-theoretic capacity limits achieved by PASS are characterized, and several typical performance metrics of PASS-based communications are analyzed to demonstrate its superiority over conventional antenna technologies. Next, pinching beamforming design is investigated, and the corresponding power scaling law is first characterized. For the joint transmit and pinching design in the general multiple-waveguide case, 1) a pair of transmission strategies, namely sub-connected and fully connected structures, is proposed for PASS-based single-user communications to validate the superiority of PASS; and 2) three practical protocols, namely waveguide switching, waveguide division, and waveguide multiplexing, are proposed to facilitate PASS-based multi-user communications. A possible implementation of PASS in wideband communications is further highlighted. Moreover, channel state information acquisition in PASS is elaborated with a pair of promising solutions. To overcome the high complexity and suboptimality inherent in conventional convex-optimization-based approaches, machine-learning-based methods for operating PASS are also explored, focusing on selected deep neural network architectures and training algorithms. Finally, several promising applications of PASS in next-generation wireless networks are highlighted.

Pinching-Antenna Systems (PASS): Power Radiation Model and Optimal Beamforming Design

Apr 30, 2025

Abstract: Pinching-antenna systems (PASS) improve wireless links by configuring the locations of activated pinching antennas along dielectric waveguides, namely pinching beamforming. In this paper, a novel adjustable power radiation model is proposed for PASS, where the power radiation ratios of pinching antennas can be flexibly controlled by tuning the spacing between the pinching antennas and the waveguides. A closed-form pinching antenna spacing arrangement strategy is derived to achieve the commonly assumed equal-power radiation. Based on this, a practical PASS framework relying on discrete activation is considered, where pinching antennas can only be activated at a set of predefined locations. A transmit power minimization problem is formulated, which jointly optimizes the transmit beamforming, pinching beamforming, and the number of activated pinching antennas, subject to each user's minimum rate requirement. (1) To solve the resulting highly coupled mixed-integer nonlinear programming (MINLP) problem, branch-and-bound (BnB)-based algorithms are proposed for both single-user and multi-user scenarios, which are guaranteed to converge to globally optimal solutions. (2) A low-complexity many-to-many matching algorithm is further developed. Combined with Karush-Kuhn-Tucker (KKT) theory, locally optimal and pairwise-stable solutions are obtained with polynomial-time complexity. Simulation results demonstrate that: (i) PASS significantly outperforms conventional multi-antenna architectures, particularly as the number of users and the spatial range increase; and (ii) the proposed matching-based algorithm achieves near-optimal performance, incurring only a slight performance loss while significantly reducing computational overhead. Code is available at https://github.com/xiaoxiaxusummer/PASS_Discrete
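The ratio arithmetic behind equal-power radiation can be worked out independently of the spacing formula. Assuming a lossless waveguide in which PA n (counted from the feed) radiates a fraction of the power still guided when the signal reaches it, equal power P/N per PA requires PA n to radiate 1/(N - n + 1) of the remaining power, because P(N - n + 1)/N is still in the guide at that point. The paper's closed-form spacing that realizes these ratios depends on coupling physics not given in the abstract and is not reproduced; the function names are illustrative.

```python
def equal_power_ratios(n_pas):
    """Fraction of the still-guided power that PA n (1-indexed, in feed order)
    must radiate so that all n_pas PAs radiate equal power.
    Assumes a lossless waveguide; the last PA radiates everything left."""
    return [1.0 / (n_pas - n + 1) for n in range(1, n_pas + 1)]

def radiated_powers(ratios, p_in=1.0):
    """Propagate p_in along the waveguide: each PA radiates ratio * (remaining power).
    Returns the per-PA radiated powers and the power left in the guide."""
    powers = []
    remaining = p_in
    for delta in ratios:
        radiated = remaining * delta
        powers.append(radiated)
        remaining -= radiated
    return powers, remaining
```

For four PAs the ratios are 1/4, 1/3, 1/2, and 1, and each PA radiates a quarter of the input power with nothing left in the guide.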

Joint Transmit and Pinching Beamforming for PASS: Optimization-Based or Learning-Based?

Feb 12, 2025

Abstract: A novel pinching antenna system (PASS)-enabled downlink multi-user multiple-input single-output (MISO) framework is proposed. PASS consists of multiple waveguides spanning thousands of wavelengths, which are equipped with numerous low-cost dielectric particles, named pinching antennas (PAs), to radiate signals into free space. The positions of PAs can be reconfigured to change both the large-scale path losses and the phases of signals, thus facilitating the novel pinching beamforming design. A sum rate maximization problem is formulated, which jointly optimizes the transmit and pinching beamforming to adaptively achieve constructive signal enhancement and destructive interference mitigation. To solve this highly coupled and nonconvex problem, both optimization-based and learning-based methods are proposed. 1) For the optimization-based method, a majorization-minimization and penalty dual decomposition (MM-PDD) algorithm is developed, which handles the nonconvex complex exponential component using a Lipschitz surrogate function and then invokes PDD for problem decoupling. 2) For the learning-based method, a novel Karush-Kuhn-Tucker (KKT)-guided dual learning (KDL) approach is proposed, which enables KKT solutions to be reconstructed in a data-driven manner by learning dual variables. Following this idea, a KDL-Transformer algorithm is developed, which captures both inter-PA/inter-user dependencies and channel-state-information (CSI)-beamforming dependencies through attention mechanisms. Simulation results demonstrate that: i) the proposed PASS framework significantly outperforms the conventional massive multiple-input multiple-output (MIMO) system even with a few PAs; and ii) the proposed KDL-Transformer improves system performance by over 30% compared with the MM-PDD algorithm, while achieving millisecond-level response on modern GPUs.
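How PA positions shape both path loss and phase can be illustrated with the free-space line-of-sight model commonly used in the PASS literature. This is a sketch under stated assumptions: the waveguide runs along the x-axis at height `height` and lateral offset `wg_y`, it is fed at x = 0, each of the N PAs radiates an equal 1/N share of the power, and the carrier frequency and effective refractive index `n_eff` are illustrative values, not taken from the paper.

```python
import numpy as np

C = 3e8  # speed of light (m/s)

def pass_effective_channel(pa_x, user, fc=28e9, height=3.0, wg_y=0.0, n_eff=1.4):
    """Effective scalar channel from one waveguide's feed to a single-antenna user.
    pa_x: x-coordinates of the activated PAs on the waveguide (m).
    user: (x, y) ground position of the user (m), assumed at z = 0.
    Assumed model: lossless guide fed at x = 0, equal power split, free-space LoS."""
    pa_x = np.asarray(pa_x, dtype=float)
    lam = C / fc
    k0 = 2 * np.pi / lam           # free-space wavenumber
    kg = k0 * n_eff                # guided wavenumber (guide wavelength lam / n_eff)
    eta = lam**2 / (16 * np.pi**2) # free-space path-loss constant
    xu, yu = user
    r = np.sqrt((pa_x - xu) ** 2 + (wg_y - yu) ** 2 + height**2)  # PA-to-user distances
    in_guide = np.exp(-1j * kg * pa_x)                            # phase accrued in the guide
    free_space = np.sqrt(eta) * np.exp(-1j * k0 * r) / r          # loss and phase in air
    return np.sum(in_guide * free_space) / np.sqrt(len(pa_x))
```

Moving a PA closer to the user shortens `r` (lower path loss), while shifting it by a fraction of a wavelength changes the combined phase `kg * x + k0 * r`; pinching beamforming chooses positions so these phases add constructively at intended users.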

Diffusion Model for Multiple Antenna Communications

Feb 03, 2025

Abstract: The potential of applying diffusion models (DMs) to multiple antenna communications is discussed. A unified framework for applying DMs to multiple antenna tasks is first proposed. Then, the tasks are innovatively divided into two categories, i.e., decision-making tasks and generation tasks, depending on whether an optimization of system parameters is involved. For each category, it is explained 1) how the framework can be used for each task and 2) why DMs are superior to traditional artificial intelligence (TAI) and conventional optimization methods. It is highlighted that DMs are well-suited to scenarios with strong interference and noise, excelling at modeling complex data distributions and exploring better actions. A case study of learning beamforming with a DM is then provided to demonstrate the superiority of DMs through simulation results. Finally, the applications of DMs to emerging multiple antenna technologies and promising research directions are discussed.
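For readers new to DMs, the piece every such framework shares is the forward noising process that the model learns to reverse. The sketch below is the standard DDPM closed-form forward step with a linear noise schedule; the schedule values are common defaults chosen here as assumptions, and nothing in it is specific to the article's beamforming case study. A complex beamforming vector would be fed in as stacked real and imaginary parts.

```python
import numpy as np

def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_1..beta_T (a common DDPM default, assumed)."""
    return np.linspace(beta_start, beta_end, T)

def forward_diffuse(x0, t, betas, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) x_0, (1 - abar_t) I) in one shot.
    t is a 0-based step index into betas; abar_t is the cumulative product of (1 - beta).
    Returns x_t and the noise used, which is the usual denoiser training target."""
    alpha_bar = np.cumprod(1.0 - betas)[t]
    noise = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar) * x0 + np.sqrt(1.0 - alpha_bar) * noise
    return xt, noise
```

At small t the sample stays close to the clean input, while at the last step it is almost pure Gaussian noise; a trained denoiser, conditioned for example on CSI, walks this chain backwards to generate a solution.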

Pinching Antenna Systems (PASS): Architecture Designs, Opportunities, and Outlook

Jan 30, 2025

Abstract: This article proposes a novel design for Pinching Antenna Systems (PASS) and advocates simple yet efficient wireless communications over the "last meter". First, the potential benefits of PASS are discussed by reviewing an existing prototype. Then, the fundamentals of PASS are introduced, including physical principles, signal models, and communication designs. In contrast to existing multi-antenna systems, PASS brings a novel concept termed Pinching Beamforming, which is achieved by dynamically adjusting the positions of pinching antennas (PAs). Based on this concept, a couple of practical transmission architectures are proposed for employing PASS, namely non-multiplexing and multiplexing architectures. More particularly, 1) the non-multiplexing architecture features simple baseband signal processing and relies only on pinching beamforming; while 2) the multiplexing architecture provides enhanced signal manipulation capabilities with joint baseband and pinching beamforming, and is further divided into sub-connected, fully-connected, and phase-shifter-based fully-connected schemes. Furthermore, several emerging scenarios are put forward for integrating PASS into future wireless networks. As a further advance, a few numerical case studies reveal the significant performance gain of PASS compared with conventional multi-antenna systems. Finally, several research opportunities and open problems of PASS are highlighted.
