Qimei Chen

Federated Learning with Integrated Sensing, Communication, and Computation: Frameworks and Performance Analysis

Sep 17, 2024

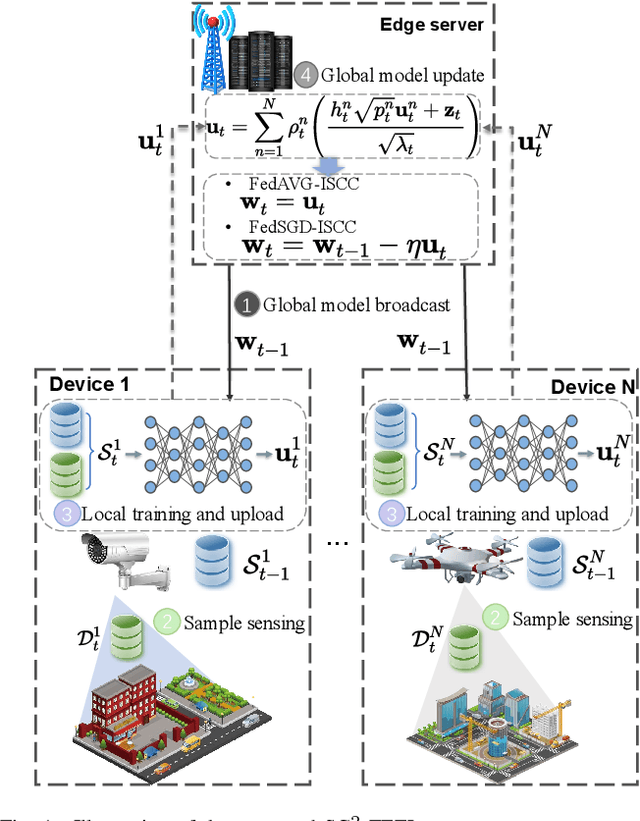

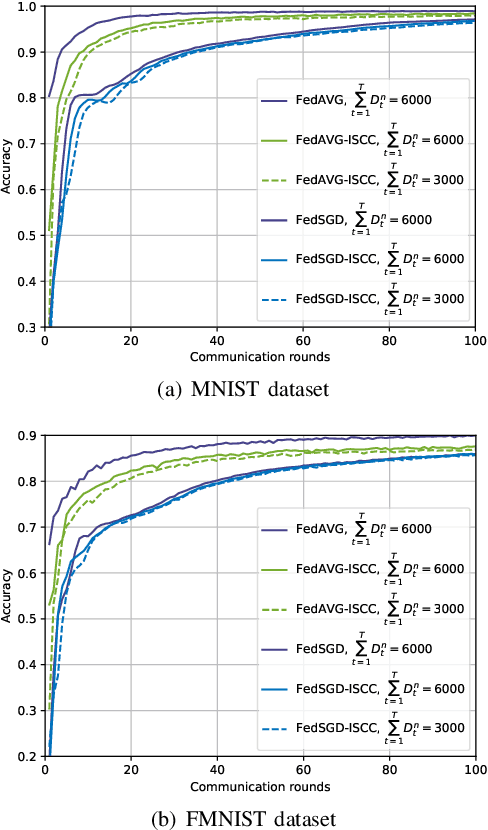

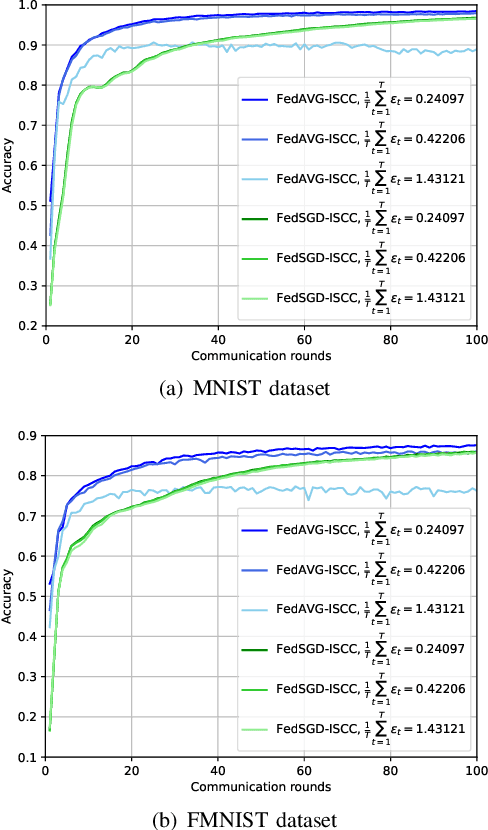

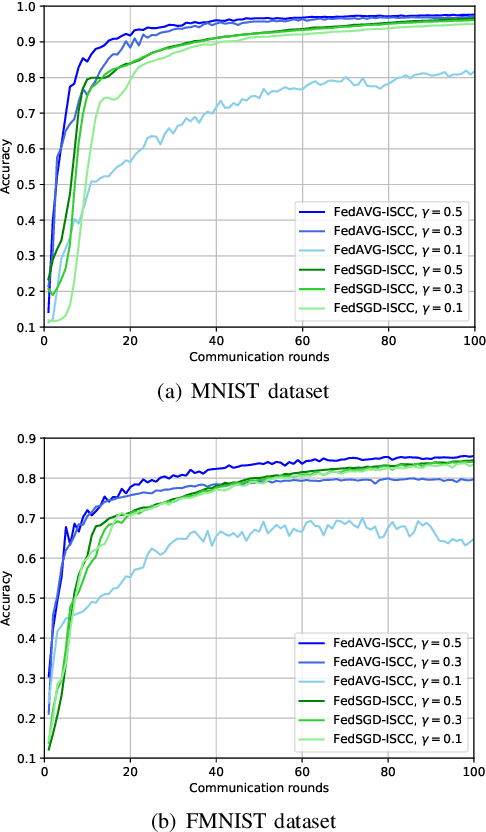

Abstract: With the emergence of integrated sensing, communication, and computation (ISCC) in the upcoming 6G era, federated learning with ISCC (FL-ISCC), integrating sample collection, local training, and parameter exchange and aggregation, has garnered increasing interest for enhancing training efficiency. Currently, FL-ISCC primarily includes two algorithms: FedAVG-ISCC and FedSGD-ISCC. However, the theoretical understanding of the performance and advantages of these algorithms remains limited. To address this gap, we investigate a general FL-ISCC framework, implementing both FedAVG-ISCC and FedSGD-ISCC. We experimentally demonstrate the substantial potential of the ISCC framework in reducing latency and energy consumption in FL. Furthermore, we provide a theoretical analysis and comparison. The results reveal that: 1) Both sample collection and communication errors negatively impact algorithm performance, highlighting the need for careful design to optimize FL-ISCC applications. 2) FedAVG-ISCC performs better than FedSGD-ISCC under IID data due to its advantage with multiple local updates. 3) FedSGD-ISCC is more robust than FedAVG-ISCC under non-IID data, where the multiple local updates in FedAVG-ISCC worsen performance as non-IID data increases. FedSGD-ISCC maintains performance levels similar to IID conditions. 4) FedSGD-ISCC is more resilient to communication errors than FedAVG-ISCC, which suffers from significant performance degradation as communication errors increase. Extensive simulations confirm the effectiveness of the FL-ISCC framework and validate our theoretical analysis.
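
The difference between the two aggregation styles named in this abstract can be illustrated with a toy example. The sketch below is not the paper's FL-ISCC implementation: it assumes a scalar model, a quadratic loss per client, equal-sized clients, and no sensing or communication errors, and all function names are hypothetical. It only shows that FedSGD averages one gradient per round, while FedAVG averages models after several local steps.

```python
def local_gradient(w, samples):
    """Gradient of 0.5 * mean((w - x)^2) over one client's samples."""
    return sum(w - x for x in samples) / len(samples)


def fedsgd_round(w, clients, lr):
    """FedSGD: each client sends one gradient; the server averages and steps."""
    grads = [local_gradient(w, c) for c in clients]
    return w - lr * sum(grads) / len(grads)


def fedavg_round(w, clients, lr, local_steps):
    """FedAVG: each client runs several local steps; the server averages models."""
    local_models = []
    for c in clients:
        w_local = w
        for _ in range(local_steps):
            w_local -= lr * local_gradient(w_local, c)
        local_models.append(w_local)
    return sum(local_models) / len(local_models)


def train(round_fn, rounds, w0=0.0, **kwargs):
    """Run a fixed number of communication rounds from the initial model w0."""
    w = w0
    for _ in range(rounds):
        w = round_fn(w, **kwargs)
    return w


# Toy data (assumed): three equal-sized clients whose overall mean is 5.0.
clients = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
target = 5.0

w_sgd = train(fedsgd_round, rounds=5, clients=clients, lr=0.1)
w_avg = train(fedavg_round, rounds=5, clients=clients, lr=0.1, local_steps=5)
print(abs(w_sgd - target), abs(w_avg - target))
```

In this idealized setting FedAVG gets closer to the optimum in the same number of rounds because it takes more gradient steps per round. The toy model has identical curvature on every client, so it does not reproduce the non-IID or communication-error effects the abstract analyzes.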

CrossFi: A Cross Domain Wi-Fi Sensing Framework Based on Siamese Network

Aug 21, 2024

Abstract: In recent years, Wi-Fi sensing has garnered significant attention due to its numerous benefits, such as privacy protection, low cost, and penetration ability. Extensive research has been conducted in this field, focusing on areas such as gesture recognition, people identification, and fall detection. However, many data-driven methods encounter challenges related to domain shift, where the model fails to perform well in environments different from the training data. One major factor contributing to this issue is the limited availability of Wi-Fi sensing datasets, which makes models learn excessive irrelevant information and over-fit to the training set. Unfortunately, collecting large-scale Wi-Fi sensing datasets across diverse scenarios is a challenging task. To address this problem, we propose CrossFi, a siamese network-based approach that excels in both in-domain and cross-domain scenarios, including few-shot and zero-shot settings, and even works in the few-shot new-class scenario, where the testing set contains new categories. The core component of CrossFi is a sample-similarity calculation network called CSi-Net, which improves the structure of the siamese network by using an attention mechanism to capture similarity information, instead of simply calculating the distance or cosine similarity. Based on it, we develop an extra Weight-Net that can generate a template for each class, so that our CrossFi can work in different scenarios. Experimental results demonstrate that our CrossFi achieves state-of-the-art performance across various scenarios. In the gesture recognition task, our CrossFi achieves an accuracy of 98.17% in the in-domain scenario, 91.72% in the one-shot cross-domain scenario, 64.81% in the zero-shot cross-domain scenario, and 84.75% in the one-shot new-class scenario. To facilitate future research, we will release the code for our model upon publication.

Collaborative Edge AI Inference over Cloud-RAN

Apr 09, 2024

Abstract: In this paper, a cloud radio access network (Cloud-RAN) based collaborative edge AI inference architecture is proposed. Specifically, geographically distributed devices capture real-time noise-corrupted sensory data samples and extract the noisy local feature vectors, which are then aggregated at each remote radio head (RRH) to suppress sensing noise. To realize efficient uplink feature aggregation, we allow each RRH to receive local feature vectors from all devices over the same resource blocks simultaneously by leveraging an over-the-air computation (AirComp) technique. Thereafter, these aggregated feature vectors are quantized and transmitted to a central processor (CP) for further aggregation and downstream inference tasks. Our aim in this work is to maximize the inference accuracy via a surrogate accuracy metric called discriminant gain, which measures the discernibility of different classes in the feature space. The key challenges lie in simultaneously suppressing the coupled sensing noise, the AirComp distortion caused by hostile wireless channels, and the quantization error resulting from the limited capacity of fronthaul links. To address these challenges, this work proposes a joint transmit precoding, receive beamforming, and quantization error control scheme to enhance the inference accuracy. Extensive numerical experiments demonstrate the effectiveness and superiority of our proposed optimization algorithm compared to various baselines.
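
The idea of AirComp-based feature aggregation can be sketched with a heavily simplified model. The example below is an assumption-laden illustration, not the paper's scheme: it uses real-valued scalar channels known perfectly at each device, a channel-inversion precoder, a single receiver, and no quantization. The function name `aircomp_average` and its parameters are hypothetical.

```python
import random


def aircomp_average(features, channels, noise_std=0.0, rng=None):
    """Average feature vectors that superpose over a shared channel.

    features:  one feature vector (list of floats) per device
    channels:  one nonzero real channel gain per device (assumed known)
    noise_std: standard deviation of additive receiver noise
    """
    rng = rng or random.Random(0)
    dim = len(features[0])
    received = [0.0] * dim
    for x, h in zip(features, channels):
        precoder = 1.0 / h  # channel inversion at the device
        for d in range(dim):
            # All devices transmit at once; the channel adds their signals.
            received[d] += h * precoder * x[d]
    # Additive receiver noise, then scaling by the number of devices.
    received = [r + rng.gauss(0.0, noise_std) for r in received]
    return [r / len(features) for r in received]


features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
channels = [0.5, 1.2, 0.8]

clean = aircomp_average(features, channels)
noisy = aircomp_average(features, channels, noise_std=0.3)
print(clean, noisy)
```

Without noise the receiver recovers the exact feature average in a single channel use. With noise the result is distorted, which is the kind of error that the joint precoding and beamforming design in the abstract aims to control.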

Artificial Intelligence Enabled NOMA Towards Next Generation Multiple Access

Jun 10, 2022

Abstract: This article focuses on the application of artificial intelligence (AI) in non-orthogonal multiple-access (NOMA), which aims to achieve automated, adaptive, and high-efficiency multi-user communications towards next generation multiple access (NGMA). First, the limitations of current scenario-specific multi-antenna NOMA schemes are discussed, and the importance of AI for NGMA is highlighted. Then, to achieve the vision of NGMA, a novel cluster-free NOMA framework is proposed for providing scenario-adaptive NOMA communications, and several promising machine learning solutions are identified. To elaborate further, novel centralized and distributed machine learning paradigms are conceived for efficiently employing the proposed cluster-free NOMA framework in single-cell and multi-cell networks, where numerical results are provided to demonstrate the effectiveness. Furthermore, the interplays between the proposed cluster-free NOMA and emerging wireless techniques are presented. Finally, several open research issues of AI-enabled NGMA are discussed.

Distributed Auto-Learning GNN for Multi-Cell Cluster-Free NOMA Communications

Apr 28, 2022

Abstract: A multi-cell cluster-free NOMA framework is proposed, where both intra-cell and inter-cell interference are jointly mitigated via flexible cluster-free successive interference cancellation (SIC) and coordinated beamforming design, respectively. The joint design problem is formulated to maximize the system sum rate while satisfying the SIC decoding requirements and users' data rate constraints. To address this highly complex and coupled non-convex mixed-integer nonlinear programming (MINLP) problem, a novel distributed auto-learning graph neural network (AutoGNN) architecture is proposed to alleviate the overwhelming information exchange burdens among base stations (BSs). The proposed AutoGNN can train the GNN model weights whilst automatically learning the optimal GNN architecture, namely the GNN network depth and message embedding sizes, to achieve communication-efficient distributed scheduling. Based on the proposed architecture, a bi-level AutoGNN learning algorithm is further developed to efficiently approximate the hypergradient in model training. It is theoretically proved that the proposed bi-level AutoGNN learning algorithm can converge to a stationary point. Numerical results reveal that: 1) the proposed cluster-free NOMA framework outperforms the conventional cluster-based NOMA framework in the multi-cell scenario; and 2) the proposed AutoGNN architecture significantly reduces the computation and communication overheads compared to the conventional convex optimization-based methods and the conventional GNN with a fixed architecture.

A Generalized Cluster-Free NOMA Framework Towards Next-Generation Multiple Access

Mar 29, 2022

Abstract: A generalized downlink multi-antenna non-orthogonal multiple access (NOMA) transmission framework is proposed with the novel concept of cluster-free successive interference cancellation (SIC). In contrast to conventional NOMA approaches, where SIC is successively carried out within the same cluster, the key idea is that the SIC can be flexibly implemented between any arbitrary users to achieve efficient interference elimination. Based on the proposed framework, a sum rate maximization problem is formulated for jointly optimizing the transmit beamforming and the SIC operations between users, subject to the SIC decoding conditions and users' minimal data rate requirements. To tackle this highly-coupled mixed-integer nonlinear programming problem, an alternating direction method of multipliers-successive convex approximation (ADMM-SCA) algorithm is developed. The original problem is first reformulated into a tractable biconvex augmented Lagrangian (AL) problem by handling the non-convex terms via SCA. Then, this AL problem is decomposed into two subproblems that are iteratively solved by the ADMM to obtain the stationary solution. Moreover, to reduce the computational complexity and alleviate the parameter initialization sensitivity of ADMM-SCA, a Matching-SCA algorithm is proposed. The intractable binary SIC operations are solved through an extended many-to-many matching, which is combined with an SCA process to optimize the transmit beamforming. The proposed Matching-SCA can converge to an enhanced exchange-stable matching that guarantees the local optimality. Numerical results demonstrate that: i) the proposed Matching-SCA algorithm achieves comparable performance and faster convergence compared to ADMM-SCA; ii) the proposed generalized framework realizes scenario-adaptive communications and outperforms traditional multi-antenna NOMA approaches in various communication regimes.

Semi-asynchronous Hierarchical Federated Learning for Cooperative Intelligent Transportation Systems

Oct 18, 2021

Abstract: Cooperative Intelligent Transport System (C-ITS) is a promising network to provide safe, efficient, sustainable, and comfortable services for automated vehicles and road infrastructures by taking advantage of its participants. However, the components of C-ITS usually generate large amounts of data, which makes it difficult to explore data science. Currently, federated learning has been proposed as an appealing approach to allow users to cooperatively reap the benefits from trained participants. Therefore, in this paper, we propose a novel Semi-asynchronous Hierarchical Federated Learning (SHFL) framework for C-ITS that enables elastic edge-to-cloud model aggregation from data sensing. We further formulate a joint edge node association and resource allocation problem under the proposed SHFL framework to mitigate the effects of heterogeneity among road vehicles and achieve communication efficiency. To solve the resulting mixed-integer nonlinear programming (MINLP) problem, we introduce a distributed Alternating Direction Method of Multipliers (ADMM)-Block Coordinate Update (BCU) algorithm. With this algorithm, a tradeoff between training accuracy and transmission latency has been derived. Numerical results demonstrate the advantages of the proposed algorithm in terms of training overhead and model performance.

URLLC and eMBB Coexistence in MIMO Non-orthogonal Multiple Access Systems

Sep 13, 2021

Abstract: Enhanced mobile broadband (eMBB) and ultra-reliable and low-latency communications (URLLC) are two major expected services in fifth-generation (5G) mobile communication systems. Specifically, eMBB applications support extremely high data rate communications, while URLLC services aim to provide highly reliable communications under stringent latency constraints. Due to their differentiated quality-of-service (QoS) requirements, the spectrum sharing between URLLC and eMBB services becomes a challenging scheduling issue. In this paper, we aim to investigate the URLLC and eMBB coscheduling/coexistence problem under a puncturing technique in multiple-input multiple-output (MIMO) non-orthogonal multiple access (NOMA) systems. The objective function is formulated to maximize the data rate of eMBB users while satisfying the latency requirements of URLLC users through joint user selection and power allocation scheduling. To solve this problem, we first introduce an eMBB user clustering mechanism to balance the system performance and computational complexity. Thereafter, we decompose the original problem into two subproblems, namely user selection and power allocation. We use Gale-Shapley (GS) theory to solve the user selection problem, and successive convex approximation (SCA) together with difference of convex (D.C.) programming to solve the power allocation problem. Finally, an iterative algorithm is utilized to find the global solution with low computational complexity. Numerical results show the effectiveness of the proposed algorithms and verify that the proposed approach outperforms other baseline methods.
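
The Gale-Shapley step mentioned in this abstract can be illustrated with the textbook deferred-acceptance procedure. The sketch below assumes a one-to-one matching between URLLC users and eMBB clusters with complete preference lists of equal size. The abstract does not specify the matching structure or how preferences are derived, so the names and preference lists here are hypothetical.

```python
def gale_shapley(proposer_prefs, receiver_prefs):
    """Return a stable one-to-one matching as {proposer: receiver}.

    Both arguments map each agent to a complete, ordered preference list
    over the other side (most preferred first). Sides have equal size.
    """
    # rank[r][p] is the position of proposer p in receiver r's list.
    rank = {
        r: {p: i for i, p in enumerate(prefs)}
        for r, prefs in receiver_prefs.items()
    }
    next_choice = {p: 0 for p in proposer_prefs}  # next receiver to try
    engaged = {}  # receiver -> currently accepted proposer
    free = list(proposer_prefs)

    while free:
        p = free.pop()
        r = proposer_prefs[p][next_choice[p]]
        next_choice[p] += 1
        if r not in engaged:
            engaged[r] = p  # receiver was unmatched: accept tentatively
        elif rank[r][p] < rank[r][engaged[r]]:
            free.append(engaged[r])  # receiver trades up; old partner is free
            engaged[r] = p
        else:
            free.append(p)  # proposal rejected; p tries its next choice
    return {p: r for r, p in engaged.items()}


# Hypothetical preferences: URLLC users propose to eMBB clusters.
urllc_prefs = {
    "u1": ["c1", "c2", "c3"],
    "u2": ["c1", "c3", "c2"],
    "u3": ["c2", "c1", "c3"],
}
cluster_prefs = {
    "c1": ["u2", "u1", "u3"],
    "c2": ["u1", "u3", "u2"],
    "c3": ["u3", "u2", "u1"],
}

matching = gale_shapley(urllc_prefs, cluster_prefs)
print(matching)
```

The result is stable in the sense that no user and cluster both prefer each other over their assigned partners. In a scheduling context the preference lists would typically be built from channel or rate metrics, which this sketch leaves out.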

Millimeter-Wave NR-U and WiGig Coexistence: Joint User Grouping, Beam Coordination and Power Control

Aug 11, 2021

Abstract: Millimeter wave (mmWave) communication is a promising New Radio in Unlicensed (NR-U) technology to meet the ever-increasing data rate and connectivity requirements in future wireless networks. However, the development of NR-U networks should consider the coexistence with the incumbent Wireless Gigabit (WiGig) networks. In this paper, we introduce a novel multiple-input multiple-output non-orthogonal multiple access (MIMO-NOMA) based mmWave NR-U and WiGig coexistence network for uplink transmission. Our aim for the proposed coexistence network is to maximize the spectral efficiency while ensuring the strict NR-U delay requirement and the WiGig transmission performance in real-time environments. A joint user grouping, hybrid beam coordination and power control strategy is proposed, which is formulated as a Lyapunov optimization based mixed-integer nonlinear programming (MINLP) problem with unit-modulus and nonconvex coupling constraints. Hence, we introduce a penalty dual decomposition (PDD) framework, which first transforms the formulated MINLP into a tractable augmented Lagrangian (AL) problem. Thereafter, we integrate both convex-concave procedure (CCCP) and inexact block coordinate update (BCU) methods to approximately decompose the AL problem into multiple nested convex subproblems, which can be iteratively solved under the PDD framework. Numerical results illustrate the performance gains of the proposed strategy and demonstrate its effectiveness in guaranteeing the NR-U traffic delay and WiGig network performance.

Graph-Embedded Multi-Agent Learning for Smart Reconfigurable THz MIMO-NOMA Networks

Jul 15, 2021

Abstract: With the accelerated development of immersive applications and the explosive growth of internet-of-things (IoT) terminals, 6G would introduce terahertz (THz) massive multiple-input multiple-output non-orthogonal multiple access (MIMO-NOMA) technologies to meet the ultra-high-speed transmission and massive connectivity requirements. Nevertheless, the unreliability of THz transmissions and the extreme heterogeneity of device requirements pose critical challenges for practical applications. To address these challenges, we propose a novel smart reconfigurable THz MIMO-NOMA framework, which can realize customizable and intelligent communications by flexibly and coordinately reconfiguring hybrid beams through the cooperation between access points (APs) and reconfigurable intelligent surfaces (RISs). The optimization problem is formulated as a decentralized partially-observable Markov decision process (Dec-POMDP) to maximize the network energy efficiency, while guaranteeing the diversified users' performance, via a joint RIS element selection, coordinated discrete phase-shift control, and power allocation strategy. To solve the above non-convex, strongly coupled, and highly complex mixed-integer nonlinear programming (MINLP) problem, we propose a novel multi-agent deep reinforcement learning (MADRL) algorithm, namely graph-embedded value-decomposition actor-critic (GE-VDAC), that embeds the interaction information of agents and learns a locally optimal solution through a distributed policy. Numerical results demonstrate that the proposed algorithm achieves highly customized communications and outperforms traditional MADRL algorithms.
