Tingwei Chen

Perceive Anything: Recognize, Explain, Caption, and Segment Anything in Images and Videos

Jun 05, 2025

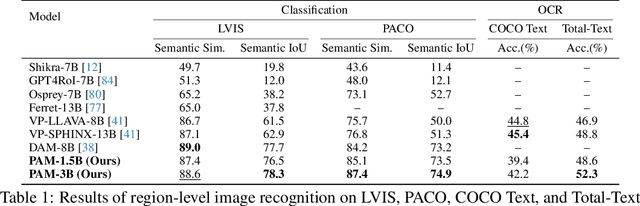

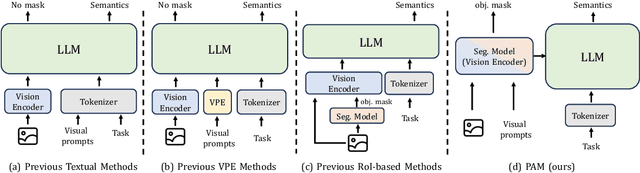

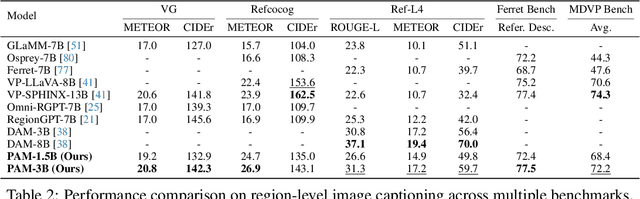

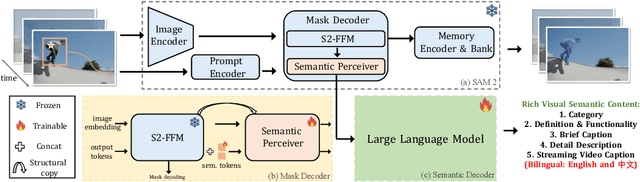

Abstract: We present Perceive Anything Model (PAM), a conceptually straightforward and efficient framework for comprehensive region-level visual understanding in images and videos. Our approach extends the powerful segmentation model SAM 2 by integrating Large Language Models (LLMs), enabling simultaneous object segmentation and generation of diverse, region-specific semantic outputs, including categories, label definitions, functional explanations, and detailed captions. A key component, the Semantic Perceiver, is introduced to efficiently transform SAM 2's rich visual features, which inherently carry general vision, localization, and semantic priors, into multi-modal tokens for LLM comprehension. To support robust multi-granularity understanding, we also develop a dedicated data refinement and augmentation pipeline, yielding a high-quality dataset of 1.5M image and 0.6M video region-semantic annotations, including novel region-level streaming video caption data. PAM is designed to be lightweight and efficient, while also demonstrating strong performance across a diverse range of region understanding tasks. It runs 1.2-2.4x faster and consumes less GPU memory than prior approaches, offering a practical solution for real-world applications. We believe that our effective approach will serve as a strong baseline for future research in region-level visual understanding.

First Token Probability Guided RAG for Telecom Question Answering

Jan 11, 2025

Abstract: Large Language Models (LLMs) have garnered significant attention for their impressive general-purpose capabilities. For applications requiring intricate domain knowledge, Retrieval-Augmented Generation (RAG) has shown a distinct advantage in incorporating domain-specific information into LLMs. However, existing RAG research has not fully addressed the challenges of Multiple Choice Question Answering (MCQA) in telecommunications, particularly in terms of improving retrieval quality and mitigating hallucinations. To tackle these challenges, we propose a novel first token probability guided RAG framework. This framework leverages confidence scores to optimize key hyperparameters, such as chunk number and chunk window size, while dynamically adjusting the context. Our method starts by retrieving the most relevant chunks and generating a single token as the potential answer. The probabilities of all options are then normalized to serve as confidence scores, which guide the dynamic adjustment of the context. By iteratively optimizing the hyperparameters based on these confidence scores, we can continuously improve RAG performance. We conducted experiments to validate the effectiveness of our framework, demonstrating its potential to enhance accuracy in domain-specific MCQA tasks.
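As a rough illustration of the normalization step described in this abstract, the sketch below turns the first-token scores of the answer options into confidence scores. It is a minimal sketch under stated assumptions, not the authors' implementation: the input dictionary `first_token_logits`, the option labels, and the `threshold` used to decide whether the context should be adjusted are all hypothetical.

```python
import math

def option_confidences(first_token_logits, options=("A", "B", "C", "D")):
    """Normalize first-token scores of the answer options into confidences.

    first_token_logits is a hypothetical dict mapping candidate first tokens
    to their logits; tokens that are not answer options are ignored.
    """
    scores = [first_token_logits[o] for o in options]
    # Softmax restricted to the option tokens (shifted by the max for stability).
    # This equals dividing each option probability by the sum over all options.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return {o: e / total for o, e in zip(options, exps)}

def needs_more_context(confidences, threshold=0.6):
    """Hypothetical rule: adjust the context when the top option is not confident."""
    return max(confidences.values()) < threshold

# Example with made-up logits; "the" is a non-option token and is ignored.
conf = option_confidences({"A": 2.0, "B": 0.0, "C": 0.0, "D": 0.0, "the": 5.0})
best = max(conf, key=conf.get)
```

A low top confidence could then trigger retrieval with a different chunk number or window size, which is one plausible reading of the "dynamic adjustment" the abstract mentions.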

KNN-MMD: Cross Domain Wi-Fi Sensing Based on Local Distribution Alignment

Dec 06, 2024

Abstract: As a key technology in Integrated Sensing and Communications (ISAC), Wi-Fi sensing has gained widespread application in various settings such as homes, offices, and public spaces. By analyzing the patterns of Channel State Information (CSI), we can obtain information about people's actions for tasks like person identification, gesture recognition, and fall detection. However, the CSI is heavily influenced by the environment, such that even minor environmental changes can significantly alter the CSI patterns. This causes performance deterioration, or even failure, when a Wi-Fi sensing model trained in one environment is applied to another. To address this problem, we introduce a K-Nearest Neighbors Maximum Mean Discrepancy (KNN-MMD) model, a few-shot method for cross-domain Wi-Fi sensing. We propose a local distribution alignment method within each category, which outperforms traditional Domain Adaptation (DA) methods based on global alignment. In addition, our method can determine when to stop training, which most DA methods cannot. As a result, our method is more stable and better suited to practical use. The effectiveness of our method is evaluated on several cross-domain Wi-Fi sensing tasks, including gesture recognition, person identification, fall detection, and action recognition, using both a public dataset and a self-collected dataset. In the one-shot scenario, our method achieves accuracies of 93.26%, 81.84%, 77.62%, and 75.30% on the four tasks, respectively. To facilitate future research, we will make our code and dataset publicly available upon publication.
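To make the contrast between global and local (per-category) alignment concrete, here is a minimal sketch of a Maximum Mean Discrepancy estimate computed once over all samples and once within each class. It assumes a Gaussian kernel, the biased MMD estimator, and that target labels are already available (for instance from the few labelled shots); the exact loss and the KNN step of the paper are not specified in the abstract and are not reproduced here. All function names are hypothetical.

```python
import math

def rbf(x, y, gamma=1.0):
    """Gaussian kernel between two feature vectors (lists of floats)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)

def mmd2(xs, ys, gamma=1.0):
    """Biased estimate of squared MMD between two sample sets."""
    def mean_k(a, b):
        return sum(rbf(u, v, gamma) for u in a for v in b) / (len(a) * len(b))
    return mean_k(xs, xs) + mean_k(ys, ys) - 2.0 * mean_k(xs, ys)

def local_mmd2(src, src_labels, tgt, tgt_labels, gamma=1.0):
    """Average squared MMD computed separately within each shared class."""
    classes = sorted(set(src_labels) & set(tgt_labels))
    if not classes:
        raise ValueError("source and target share no classes")
    per_class = []
    for c in classes:
        s = [x for x, lab in zip(src, src_labels) if lab == c]
        t = [x for x, lab in zip(tgt, tgt_labels) if lab == c]
        per_class.append(mmd2(s, t, gamma))
    return sum(per_class) / len(per_class)

# Toy case: the two domains contain the same points, but the classes are swapped.
src, src_labels = [[0.0], [5.0]], [0, 1]
tgt, tgt_labels = [[5.0], [0.0]], [0, 1]
global_gap = mmd2(src, tgt)                               # close to zero
local_gap = local_mmd2(src, src_labels, tgt, tgt_labels)  # clearly positive
```

In the toy case a global criterion sees no gap at all, while the per-class version exposes the mismatch, which is the kind of situation where within-category alignment is expected to help.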

LoFi: Vision-Aided Label Generator for Wi-Fi Localization and Tracking

Dec 06, 2024

Abstract: Wi-Fi localization and tracking have shown immense potential due to their privacy-friendliness, wide coverage, permeability, independence from lighting conditions, and low cost. Current methods can be broadly categorized as model-based or data-driven. Data-driven methods perform better and depend less on specialized devices, but struggle with limited training datasets. Due to limitations in current data collection methods, most datasets provide only coarse-grained ground truth (GT) or a limited number of labeled points, which greatly hinders the development of data-driven methods. Although lidar can provide accurate GT, its high cost makes it inaccessible to many users. To address these challenges, we propose LoFi, a vision-aided label generator for Wi-Fi localization and tracking, which can generate ground truth position coordinates based solely on 2D images. This quick and easy data collection method also helps deploy data-driven methods in practice: because Wi-Fi is a low-generalization modality, such methods always require fine-tuning the model on newly collected data. Based on our method, we also collect a Wi-Fi tracking and localization dataset using an ESP32-S3 and a webcam. To facilitate future research, we will make our code and dataset publicly available upon publication.

CrossFi: A Cross Domain Wi-Fi Sensing Framework Based on Siamese Network

Aug 21, 2024

Abstract: In recent years, Wi-Fi sensing has garnered significant attention due to its numerous benefits, such as privacy protection, low cost, and penetration ability. Extensive research has been conducted in this field, focusing on areas such as gesture recognition, people identification, and fall detection. However, many data-driven methods encounter challenges related to domain shift, where the model fails to perform well in environments different from those of the training data. One major factor contributing to this issue is the limited availability of Wi-Fi sensing datasets, which makes models learn excessive irrelevant information and over-fit to the training set. Unfortunately, collecting large-scale Wi-Fi sensing datasets across diverse scenarios is a challenging task. To address this problem, we propose CrossFi, a siamese network-based approach that excels in both in-domain and cross-domain scenarios, including few-shot and zero-shot settings, and even works in the few-shot new-class scenario, where the test set contains new categories. The core component of CrossFi is a sample-similarity calculation network called CSi-Net, which improves the structure of the siamese network by using an attention mechanism to capture similarity information, instead of simply calculating the distance or cosine similarity. Building on CSi-Net, we develop an additional Weight-Net that generates a template for each class, so that CrossFi can work in different scenarios. Experimental results demonstrate that CrossFi achieves state-of-the-art performance across various scenarios. In the gesture recognition task, CrossFi achieves an accuracy of 98.17% in the in-domain scenario, 91.72% in the one-shot cross-domain scenario, 64.81% in the zero-shot cross-domain scenario, and 84.75% in the one-shot new-class scenario. To facilitate future research, we will release the code for our model upon publication.

Modelling the 5G Energy Consumption using Real-world Data: Energy Fingerprint is All You Need

Jun 13, 2024

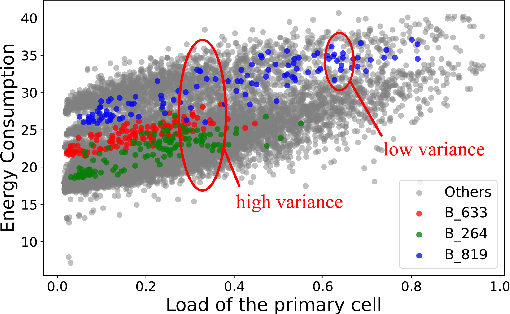

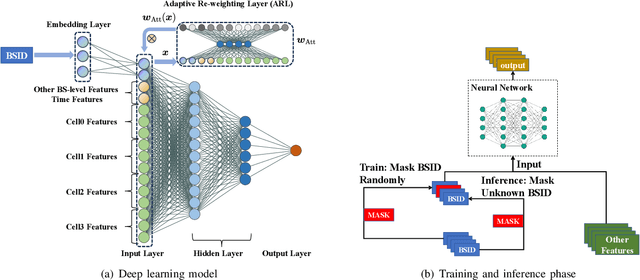

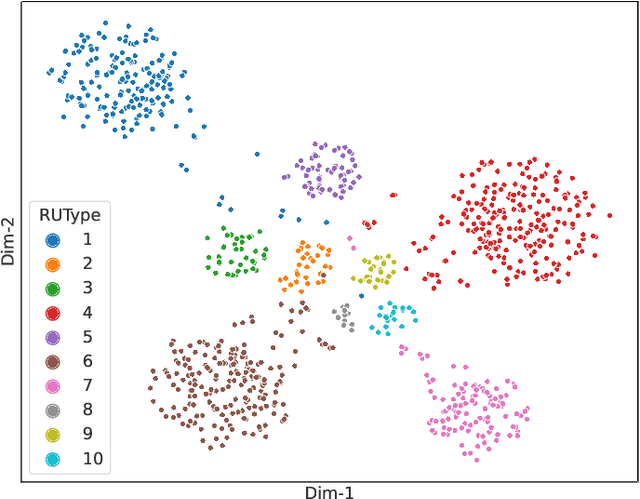

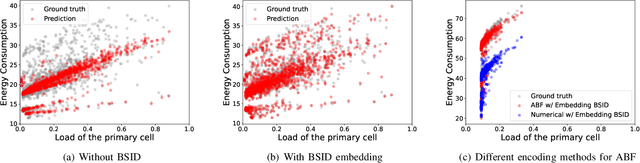

Abstract: The introduction of fifth-generation (5G) radio technology has revolutionized communications, bringing unprecedented automation, capacity, connectivity, and ultra-fast, reliable communications. However, this technological leap comes with a substantial increase in energy consumption, presenting a significant challenge. To improve the energy efficiency of 5G networks, it is imperative to develop sophisticated models that accurately reflect the influence of base station (BS) attributes and operational conditions on energy usage. Importantly, addressing the complexity and interdependencies of these diverse features is particularly challenging, both in terms of data processing and model architecture design. This paper proposes a novel 5G base station energy consumption modelling method by learning from a real-world dataset used in the ITU 5G Base Station Energy Consumption Modelling Challenge, in which our model ranked second. Unlike existing methods that omit the Base Station Identifier (BSID) information and thus fail to capture the unique energy fingerprint of different base stations, we incorporate the BSID into the input features and encode it with an embedding layer for precise representation. Additionally, we introduce a novel masked training method alongside an attention mechanism to further boost the model's generalization capabilities and accuracy. In our evaluation, our method demonstrates significant improvements over existing models, reducing Mean Absolute Percentage Error (MAPE) from 12.75% to 4.98%, a relative improvement of more than 60%.
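The "more than 60%" figure follows from the two reported MAPE values. The short sketch below shows the standard MAPE definition and the relative-reduction arithmetic; the function names and the toy energy values are illustrative only and are not taken from the paper.

```python
def mape(y_true, y_pred):
    """Mean Absolute Percentage Error, in percent (y_true must be non-zero)."""
    errors = [abs((t - p) / t) for t, p in zip(y_true, y_pred)]
    return 100.0 * sum(errors) / len(errors)

def relative_reduction(before, after):
    """Relative reduction of an error metric, in percent."""
    return 100.0 * (before - after) / before

# Toy energy readings: each prediction is off by 10% of the true value.
toy_mape = mape([100.0, 200.0], [90.0, 220.0])

# Reported values from the abstract: (12.75 - 4.98) / 12.75 is about 0.6094.
gain = relative_reduction(12.75, 4.98)
```

So the drop from 12.75% to 4.98% corresponds to a relative reduction of roughly 60.9%, consistent with the abstract's claim.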

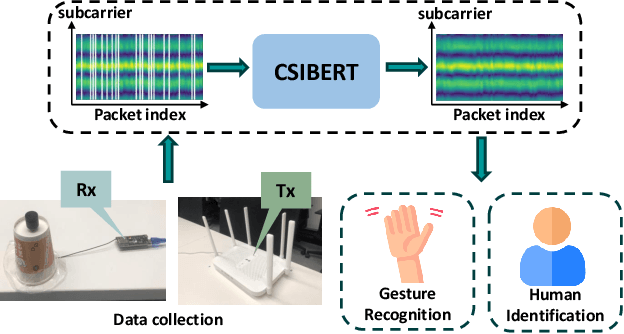

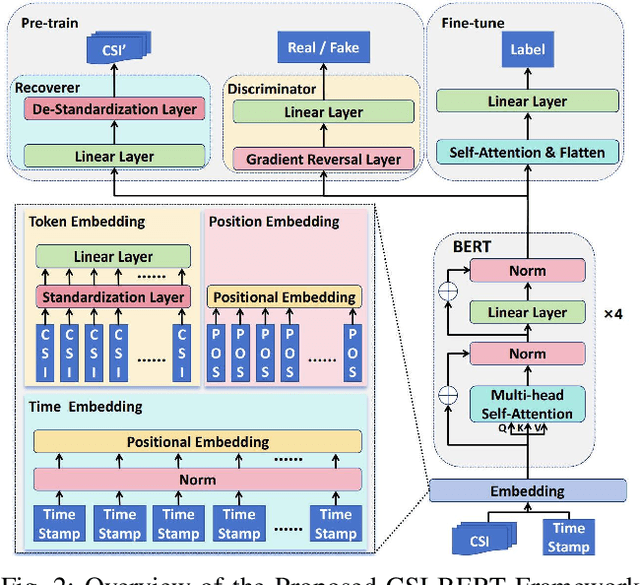

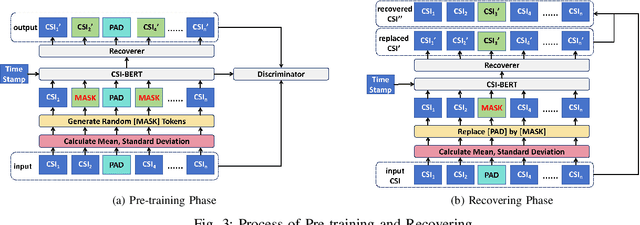

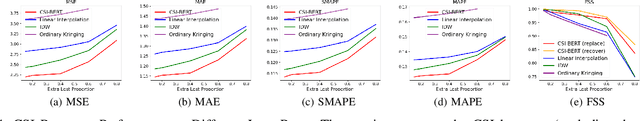

Finding the Missing Data: A BERT-inspired Approach Against Package Loss in Wireless Sensing

Mar 19, 2024

Abstract: Despite the development of various deep learning methods for Wi-Fi sensing, package loss often results in noncontinuous estimation of the Channel State Information (CSI), which negatively impacts the performance of the learning models. To overcome this challenge, we propose a deep learning model based on Bidirectional Encoder Representations from Transformers (BERT) for CSI recovery, named CSI-BERT. CSI-BERT can be trained in a self-supervised manner on the target dataset without the need for additional data. Furthermore, unlike traditional interpolation methods that focus on one subcarrier at a time, CSI-BERT captures the sequential relationships across different subcarriers. Experimental results demonstrate that CSI-BERT achieves lower error rates and faster speed than traditional interpolation methods, even at high loss rates. Moreover, by using the CSI recovered by CSI-BERT, other deep learning models such as Residual Network and Recurrent Neural Network can achieve an average accuracy increase of approximately 15% in Wi-Fi sensing tasks. The collected dataset WiGesture and code for our model are publicly available at https://github.com/RS2002/CSI-BERT.
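For context, the kind of per-subcarrier baseline the abstract contrasts with can be sketched as plain linear interpolation over time. This is only an illustration of the traditional approach, not CSI-BERT and not the specific baselines evaluated in the paper; the data layout (one list of amplitudes per subcarrier, with `None` marking lost packets) and the function names are assumptions.

```python
def interpolate_subcarrier(series):
    """Linearly fill None entries (lost packets) in one subcarrier's time series."""
    known = [i for i, v in enumerate(series) if v is not None]
    if not known:
        raise ValueError("no received packets to interpolate from")
    filled = list(series)
    for i, v in enumerate(series):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = series[right]   # gap at the start: copy the next value
        elif right is None:
            filled[i] = series[left]    # gap at the end: copy the previous value
        else:
            w = (i - left) / (right - left)
            filled[i] = (1 - w) * series[left] + w * series[right]
    return filled

def interpolate_csi(csi):
    """Apply the fill to each subcarrier independently (csi[k] is subcarrier k)."""
    return [interpolate_subcarrier(s) for s in csi]

# Two subcarriers, three time steps, with the middle packet lost.
recovered = interpolate_csi([[1.0, None, 3.0], [4.0, None, 8.0]])
```

Because each subcarrier is filled on its own, this baseline cannot exploit relationships across subcarriers, which is the limitation the abstract says CSI-BERT addresses.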

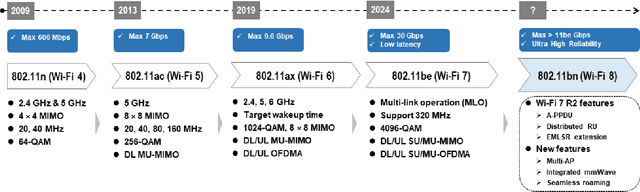

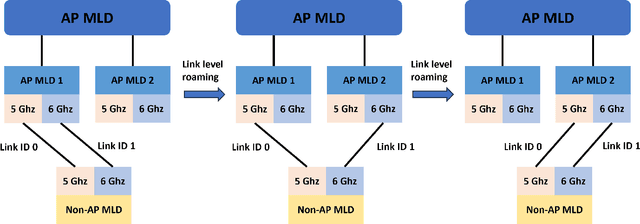

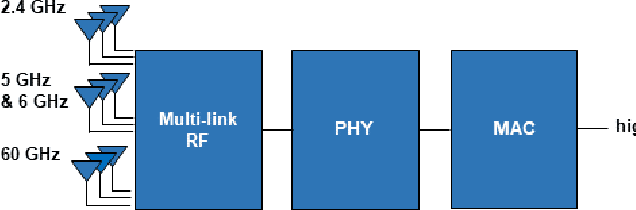

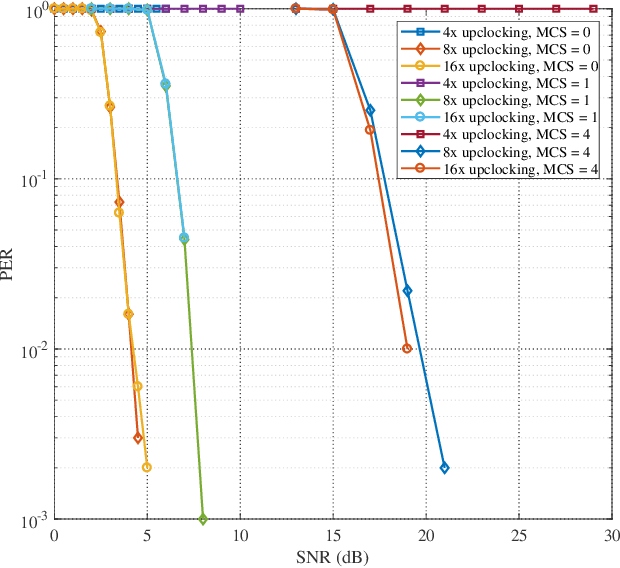

Wi-Fi 8: Embracing the Millimeter-Wave Era

Sep 28, 2023

Abstract: With increasing communication demands, Wi-Fi technology is advancing towards its next generation. Building on the foundation of Wi-Fi 7, millimeter-wave technology is anticipated to converge with Wi-Fi 8 in the near future. In this paper, we examine millimeter-wave technology and other potentially feasible features, providing a comprehensive perspective on the future of Wi-Fi 8. Our simulation results demonstrate that significant performance gains can be achieved, even in the presence of hardware impairments.
