Guangxu Zhu

DK-Root: A Joint Data-and-Knowledge-Driven Framework for Root Cause Analysis of QoE Degradations in Mobile Networks

Nov 13, 2025

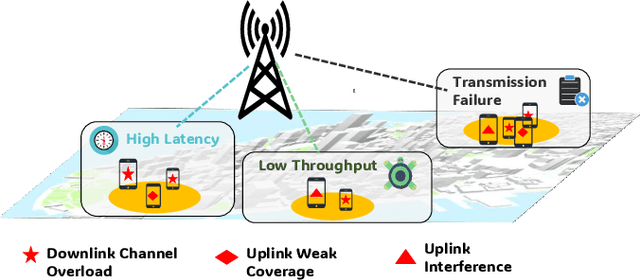

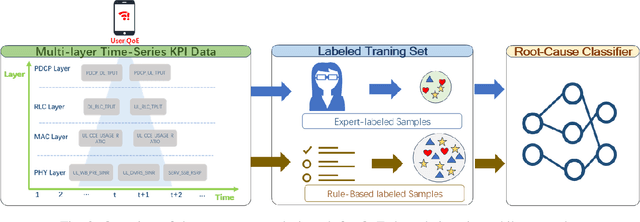

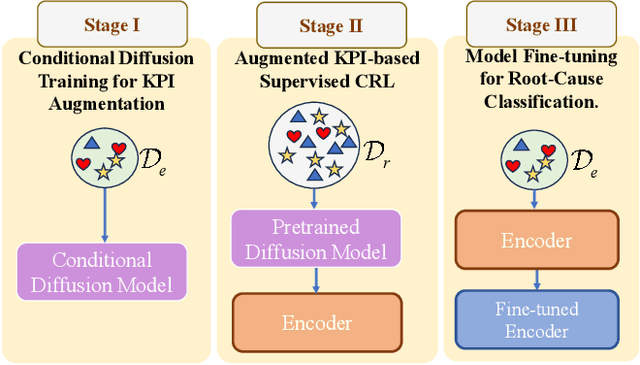

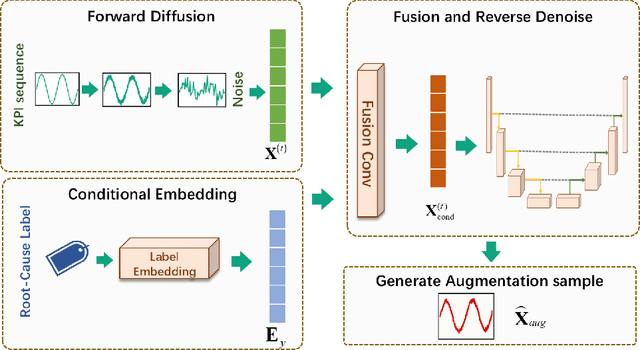

Abstract: Diagnosing the root causes of Quality of Experience (QoE) degradations in operational mobile networks is challenging due to complex cross-layer interactions among key performance indicators (KPIs) and the scarcity of reliable expert annotations. Although rule-based heuristics can generate labels at scale, they are noisy and coarse-grained, limiting the accuracy of purely data-driven approaches. To address this, we propose DK-Root, a joint data-and-knowledge-driven framework that unifies scalable weak supervision with precise expert guidance for robust root-cause analysis. DK-Root first pretrains an encoder via contrastive representation learning using abundant rule-based labels while explicitly suppressing their noise through a supervised contrastive objective. To supply task-faithful data augmentation, we introduce a class-conditional diffusion model that generates KPI sequences preserving root-cause semantics; by controlling the number of reverse diffusion steps, it produces weak and strong augmentations that improve intra-class compactness and inter-class separability. Finally, the encoder and a lightweight classifier are jointly fine-tuned with scarce expert-verified labels to sharpen decision boundaries. Extensive experiments on a real-world, operator-grade dataset demonstrate state-of-the-art accuracy, with DK-Root surpassing traditional ML and recent semi-supervised time-series methods. Ablations confirm the necessity of the conditional diffusion augmentation and the pretrain-finetune design, validating both representation quality and classification gains.
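As a rough illustration of the supervised contrastive objective mentioned in the abstract, the following sketch computes a standard supervised contrastive loss in plain Python. It is not DK-Root's implementation: the function name `supcon_loss`, the temperature value, and the toy embeddings are assumptions made for this example, and the encoder, the rule-based labels, and the diffusion-based augmentations are all omitted.

```python
import math

def supcon_loss(embeddings, labels, temperature=0.5):
    """Standard supervised contrastive loss (illustrative, pure Python).

    Assumes every embedding is a non-zero vector. Anchors without any
    same-label partner are skipped.
    """
    def normalise(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    z = [normalise(v) for v in embeddings]
    n = len(z)
    losses = []
    for i in range(n):
        positives = [p for p in range(n) if p != i and labels[p] == labels[i]]
        if not positives:
            continue
        # Scaled cosine similarity between anchor i and every other sample.
        sims = {a: sum(x * y for x, y in zip(z[i], z[a])) / temperature
                for a in range(n) if a != i}
        log_denom = math.log(sum(math.exp(s) for s in sims.values()))
        # Average log-probability of the positives, negated.
        losses.append(-sum(sims[p] - log_denom for p in positives) / len(positives))
    return sum(losses) / len(losses) if losses else 0.0

# Toy data: two tight clusters along the two axes.
toy_embeddings = [[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [0.0, 3.0]]
clean_loss = supcon_loss(toy_embeddings, [0, 0, 1, 1])   # labels match clusters
noisy_loss = supcon_loss(toy_embeddings, [0, 1, 0, 1])   # labels contradict clusters
```

In this toy setting the loss is lower when the labels agree with the geometry of the embeddings. Minimising it pulls same-label samples together and pushes different-label samples apart.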

STM3: Mixture of Multiscale Mamba for Long-Term Spatio-Temporal Time-Series Prediction

Aug 17, 2025

Abstract: Recently, spatio-temporal time-series prediction has developed rapidly, yet existing deep learning methods struggle to learn complex long-term spatio-temporal dependencies efficiently. Learning long-term spatio-temporal dependencies brings two new challenges: 1) a long-term temporal sequence naturally contains multiscale information that is hard to extract efficiently; 2) the multiscale temporal information from different nodes is highly correlated and hard to model. To address these challenges, we propose an efficient Spatio-Temporal Multiscale Mamba (STM2) that includes a multiscale Mamba architecture to capture the multiscale information efficiently and simultaneously, and an adaptive graph causal convolution network to learn the complex multiscale spatio-temporal dependency. STM2 includes hierarchical information aggregation for different-scale information that guarantees their distinguishability. To capture diverse temporal dynamics across all spatial nodes more efficiently, we further propose an enhanced version termed Spatio-Temporal Mixture of Multiscale Mamba (STM3) that employs a special Mixture-of-Experts architecture, including a more stable routing strategy and a causal contrastive learning strategy to enhance the scale distinguishability. We prove that STM3 has much better routing smoothness and guarantees pattern disentanglement for each expert. Extensive experiments on real-world benchmarks demonstrate STM2/STM3's superior performance, achieving state-of-the-art results in long-term spatio-temporal time-series prediction.

Rethinking Multi-User Communication in Semantic Domain: Enhanced OMDMA by Shuffle-Based Orthogonalization and Diffusion Denoising

Jul 28, 2025

Abstract: Inter-user interference remains a critical bottleneck in wireless communication systems, particularly in the emerging paradigm of semantic communication (SemCom). Compared to traditional systems, inter-user interference in SemCom severely degrades key semantic information, often causing worse performance than Gaussian noise at the same power level. To address this challenge, inspired by the recently proposed concept of Orthogonal Model Division Multiple Access (OMDMA), which leverages semantic orthogonality rooted in personalized joint source and channel coding (JSCC) models to distinguish users, we propose a novel, scalable framework that eliminates the need for the user-specific JSCC models required by the original OMDMA. Our key innovation lies in shuffle-based orthogonalization, where randomly permuting the positions of JSCC feature vectors transforms inter-user interference into Gaussian-like noise. By assigning each user a unique shuffling pattern, the interference is treated as channel noise, enabling effective mitigation using diffusion models (DMs). This approach not only simplifies system design by requiring a single universal JSCC model but also enhances privacy, as shuffling patterns act as implicit private keys. Additionally, we extend the framework to scenarios involving semantically correlated data. By grouping users based on semantic similarity, a cooperative beamforming strategy is introduced to exploit redundancy in correlated data, further improving system performance. Extensive simulations demonstrate that the proposed method outperforms state-of-the-art multi-user SemCom frameworks, achieving superior semantic fidelity, robustness to interference, and scalability, all without requiring additional training overhead.
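The permutation bookkeeping behind shuffle-based orthogonalization can be sketched in a few lines. This is only a toy illustration under strong assumptions: the "features" are plain lists of numbers rather than JSCC outputs, the channel is an ideal noiseless sum, and no diffusion denoiser is involved. The helper names (`make_pattern`, `shuffle`, `unshuffle`) and the seeds are hypothetical.

```python
import random

def make_pattern(n, seed):
    """Per-user shuffling pattern: a seeded random permutation of range(n)."""
    rng = random.Random(seed)
    perm = list(range(n))
    rng.shuffle(perm)
    return perm

def shuffle(features, perm):
    """Transmit side: position i carries features[perm[i]]."""
    return [features[p] for p in perm]

def unshuffle(shuffled, perm):
    """Receive side: invert the user's own pattern."""
    out = [None] * len(perm)
    for i, p in enumerate(perm):
        out[p] = shuffled[i]
    return out

n = 8
user_a = [float(i) for i in range(n)]          # toy feature vector of user A
user_b = [10.0 + i for i in range(n)]          # toy feature vector of user B
perm_a = make_pattern(n, seed=1)
perm_b = make_pattern(n, seed=2)

# Both users transmit at once; the receiver sees the superposition.
received = [a + b for a, b in zip(shuffle(user_a, perm_a), shuffle(user_b, perm_b))]

# Receiver A undoes its own pattern: its features realign, B's stay scrambled.
decoded_a = unshuffle(received, perm_a)
residual = [d - a for d, a in zip(decoded_a, user_a)]
```

After unshuffling, user A's own features are back in their original positions, while the residual consists of user B's values in an order determined by both patterns. In the framework described above, this scrambled residual is what gets treated as noise-like interference and handed to the denoiser.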

Low-Complexity Hybrid Beamforming for Multi-Cell mmWave Massive MIMO: A Primitive Kronecker Decomposition Approach

May 15, 2025

Abstract: To circumvent the high path loss of mmWave propagation and reduce the hardware cost of massive multiple-input multiple-output antenna systems, full-dimensional hybrid beamforming is critical in 5G and beyond wireless communications. Concerning an uplink multi-cell system with a large-scale uniform planar antenna array, this paper designs an efficient hybrid beamformer using primitive Kronecker decomposition and dynamic factor allocation, where the analog beamformer nulls the inter-cell interference while enhancing the desired signals, whereas the digital beamformer mitigates the intra-cell interference using the minimum mean square error (MMSE) criterion. Then, due to the low accuracy of phase shifters inherent in the analog beamformer, a low-complexity hybrid beamformer is developed to slow its adjustment speed. Next, an optimality analysis from a subspace perspective is performed, and a sufficient condition for optimal antenna configuration is established. Finally, simulation results demonstrate that the achievable sum rate of the proposed beamformer approaches that of the optimal pure digital MMSE scheme, yet with much lower computational complexity and hardware cost.

FedEMA: Federated Exponential Moving Averaging with Negative Entropy Regularizer in Autonomous Driving

May 01, 2025

Abstract: Street Scene Semantic Understanding (denoted as S3U) is a crucial but complex task for autonomous driving (AD) vehicles. Their inference models typically generalize poorly due to domain shift. Federated Learning (FL) has emerged as a promising paradigm for enhancing the generalization of AD models through privacy-preserving distributed learning. However, these FL AD models face significant temporal catastrophic forgetting when deployed in dynamically evolving environments, where continuous adaptation causes abrupt erosion of historical knowledge. This paper proposes Federated Exponential Moving Average (FedEMA), a novel framework that addresses this challenge through two integral innovations: (I) server-side preservation of the model's historical fitting capability by fusing the current FL round's aggregated model with a proposed exponential moving average (EMA) model from the previous FL round; (II) vehicle-side negative entropy regularization to prevent FL models from overfitting to EMA-introduced temporal patterns. Together, these two strategies give FedEMA a dual-objective optimization that balances model generalization and adaptability. In addition, we conduct a theoretical convergence analysis for the proposed FedEMA. Extensive experiments on both the Cityscapes and CamVid datasets demonstrate FedEMA's superiority over existing approaches, showing 7.12% higher mean Intersection-over-Union (mIoU).
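The server-side idea of keeping an EMA model and fusing it with the current round's aggregate can be sketched as below. The abstract does not give the exact update or fusion rule, so both the convex-combination fusion and the parameter names (`decay`, `fusion_weight`) are assumptions for illustration. Model weights are represented as flat lists of floats.

```python
def ema_update(ema_weights, aggregated_weights, decay):
    """Generic exponential moving average of model weights across FL rounds."""
    return [decay * e + (1.0 - decay) * a
            for e, a in zip(ema_weights, aggregated_weights)]

def fuse(aggregated_weights, ema_weights, fusion_weight):
    """Assumed fusion rule: convex combination of current aggregate and EMA model."""
    return [fusion_weight * a + (1.0 - fusion_weight) * e
            for a, e in zip(aggregated_weights, ema_weights)]

# Toy run over three rounds with two-parameter "models".
ema = [0.0, 0.0]
global_model = None
for aggregated in ([1.0, 2.0], [1.0, 2.0], [3.0, 0.0]):
    # Fuse with the EMA of *previous* rounds, then refresh the EMA.
    global_model = fuse(aggregated, ema, fusion_weight=0.5)
    ema = ema_update(ema, aggregated, decay=0.5)
```

In this sketch, a sudden change in the aggregate (the third round) moves the fused model only part of the way, because the EMA term still carries the earlier rounds.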

iMacSR: Intermediate Multi-Access Supervision and Regularization in Training Autonomous Driving Models

May 01, 2025

Abstract: Deep Learning (DL)-based street scene semantic understanding has become a cornerstone of autonomous driving (AD). DL model performance relies heavily on network depth: deeper DL architectures yield better segmentation performance. However, as models grow deeper, traditional one-point supervision at the final layer struggles to optimize intermediate feature representations, leading to subpar training outcomes. To address this, we propose an intermediate Multi-access Supervision and Regularization (iMacSR) strategy. The proposed iMacSR introduces two novel components: (I) mutual information between latent features and ground truth, used as an intermediate supervision loss, ensures robust feature alignment at multiple network depths; and (II) negative entropy regularization on hidden features discourages overconfident predictions and mitigates overfitting. These intermediate terms are combined with the original final-layer training loss to form a unified optimization objective, enabling comprehensive optimization across the network hierarchy. The proposed iMacSR provides a robust framework for training deep AD architectures, advancing the performance of perception systems in real-world driving scenarios. In addition, we conduct a theoretical convergence analysis for the proposed iMacSR. Extensive experiments on AD benchmarks (i.e., the Cityscapes, CamVid, and SynthiaSF datasets) demonstrate that iMacSR outperforms the conventional final-layer single-point supervision method by up to 9.19% in mean Intersection over Union (mIoU).
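A negative entropy regularizer of the kind mentioned in component (II) can be sketched as follows. This is a generic formulation, not the paper's: it assumes the hidden features are turned into a probability vector with a softmax, and the regularization weight and function names are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def negative_entropy(probs, eps=1e-12):
    """sum(p * log p): close to 0 for peaked distributions, -log(K) for uniform."""
    return sum(p * math.log(p + eps) for p in probs)

def regularised_loss(task_loss, hidden_logits, weight=0.1):
    """Task loss plus a weighted negative-entropy penalty (weight is an assumption)."""
    return task_loss + weight * negative_entropy(softmax(hidden_logits))

uniform_penalty = negative_entropy(softmax([0.0, 0.0, 0.0, 0.0]))
peaked_penalty = negative_entropy(softmax([10.0, 0.0, 0.0, 0.0]))
```

Because the penalty is larger for peaked distributions than for flat ones, minimizing the combined loss nudges the model away from overconfident outputs.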

Opportunistic Collaborative Planning with Large Vision Model Guided Control and Joint Query-Service Optimization

Apr 25, 2025

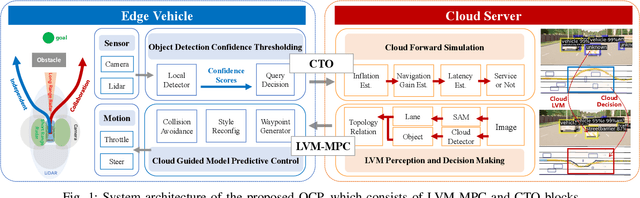

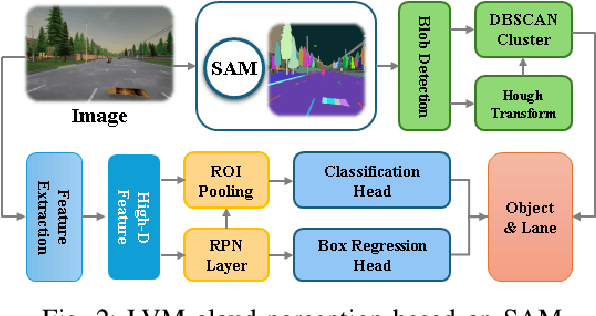

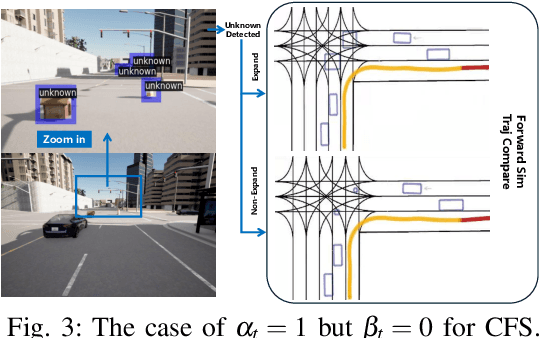

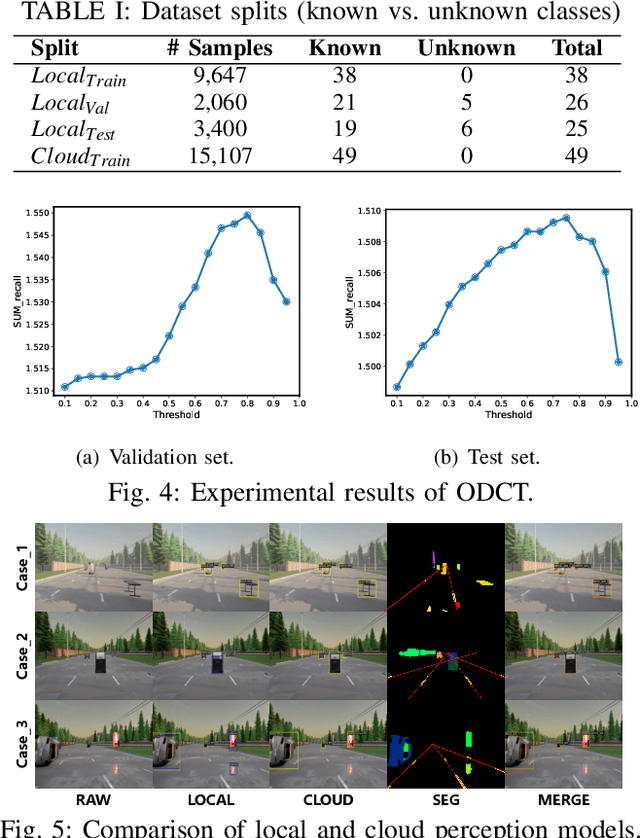

Abstract: Navigating autonomous vehicles in open scenarios is challenging due to the difficulty of handling unseen objects. Existing solutions rely either on small models that struggle with generalization or on large models that are resource-intensive. While collaboration between the two offers a promising solution, the key challenge is deciding when and how to engage the large model. To address this issue, this paper proposes opportunistic collaborative planning (OCP), which seamlessly integrates efficient local models with powerful cloud models through two key innovations. First, we propose large vision model guided model predictive control (LVM-MPC), which leverages the cloud for LVM perception and decision making. The cloud output serves as global guidance for a local MPC, thereby forming a closed-loop perception-to-control system. Second, to determine the best timing for large model query and service, we propose collaboration timing optimization (CTO), including object detection confidence thresholding (ODCT) and cloud forward simulation (CFS), to decide when to seek cloud assistance and when to offer cloud service. Extensive experiments show that the proposed OCP outperforms existing methods in terms of both navigation time and success rate.

Label Anything: An Interpretable, High-Fidelity and Prompt-Free Annotator

Feb 05, 2025

Abstract: Learning-based street scene semantic understanding in autonomous driving (AD) has advanced significantly in recent years, but the performance of an AD model depends heavily on the quantity and quality of the annotated training data. Traditional manual labeling, however, is costly given the vast amount of data required to train a robust model. To mitigate this cost, we propose a Label Anything Model (denoted as LAM), serving as an interpretable, high-fidelity, and prompt-free data annotator. Specifically, we first incorporate a pretrained Vision Transformer (ViT) to extract the latent features. On top of ViT, we propose a semantic class adapter (SCA) and an optimization-oriented unrolling algorithm (OptOU), both with a small number of trainable parameters. SCA is proposed to fuse ViT-extracted features to consolidate the basis of the subsequent automatic annotation. OptOU consists of multiple cascading layers, each containing an optimization formulation that aligns its output with the ground truth as closely as possible, which makes OptOU interpretable rather than a learning-based black box. In addition, training SCA and OptOU requires only a single pre-annotated RGB seed image, owing to their small number of learnable parameters. Extensive experiments clearly demonstrate that the proposed LAM can generate high-fidelity annotations (almost 100% in mIoU) for multiple real-world datasets (i.e., CamVid, Cityscapes, and Apolloscapes) and a CARLA simulation dataset.

First Token Probability Guided RAG for Telecom Question Answering

Jan 11, 2025

Abstract: Large Language Models (LLMs) have garnered significant attention for their impressive general-purpose capabilities. For applications requiring intricate domain knowledge, Retrieval-Augmented Generation (RAG) has shown a distinct advantage in incorporating domain-specific information into LLMs. However, existing RAG research has not fully addressed the challenges of Multiple Choice Question Answering (MCQA) in telecommunications, particularly in terms of retrieval quality and mitigating hallucinations. To tackle these challenges, we propose a novel first token probability guided RAG framework. This framework leverages confidence scores to optimize key hyperparameters, such as chunk number and chunk window size, while dynamically adjusting the context. Our method starts by retrieving the most relevant chunks and generates a single token as the potential answer. The probabilities of all options are then normalized to serve as confidence scores, which guide the dynamic adjustment of the context. By iteratively optimizing the hyperparameters based on these confidence scores, we can continuously improve RAG performance. We conducted experiments to validate the effectiveness of our framework, demonstrating its potential to enhance accuracy in domain-specific MCQA tasks.
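The normalization step described in the abstract, turning first-token probabilities of the answer options into confidence scores, can be sketched as follows. The input format (a dictionary of token log-probabilities), the option labels, the threshold, and the function names are all assumptions for illustration. No real LLM or retriever API is called.

```python
import math

def option_confidences(first_token_logprobs, options=("A", "B", "C", "D")):
    """Renormalise first-token probabilities over the answer options only."""
    probs = {o: math.exp(first_token_logprobs.get(o, float("-inf"))) for o in options}
    total = sum(probs.values())
    if total == 0.0:
        # No option token received any probability mass: fall back to uniform.
        return {o: 1.0 / len(options) for o in options}
    return {o: p / total for o, p in probs.items()}

def needs_more_context(confidences, threshold=0.6):
    """Hypothetical rule: adjust the retrieved context if the top option is not confident."""
    return max(confidences.values()) < threshold

# Toy first-token distribution: 20% of the mass sits on a non-option token.
logprobs = {"A": math.log(0.4), "B": math.log(0.2), "C": math.log(0.1),
            "D": math.log(0.1), "The": math.log(0.2)}
conf = option_confidences(logprobs)
best = max(conf, key=conf.get)
```

In a full pipeline, a low top confidence would trigger another retrieval pass with a different chunk number or window size. Here that decision is reduced to the single `needs_more_context` check.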

Enhancing Large Vision Model in Street Scene Semantic Understanding through Leveraging Posterior Optimization Trajectory

Jan 03, 2025

Abstract: To improve the generalization of the autonomous driving (AD) perception model, vehicles need to update the model over time based on continuously collected data. As time progresses, the amount of data fitted by the AD model expands, which helps to improve the AD model's generalization substantially. However, such ever-expanding data is a double-edged sword for the AD model. Specifically, as the fitted data volume grows to exceed the AD model's fitting capacity, the AD model becomes prone to under-fitting. To address this issue, we propose to use pretrained Large Vision Models (LVMs) as the backbone, coupled with a downstream perception head, to understand AD semantic information. This design not only overcomes the aforementioned under-fitting problem thanks to LVMs' powerful fitting capabilities, but also enhances perception generalization thanks to LVMs' vast and diverse training data. In addition, to reduce the computational burden on vehicles of training the perception head while running the LVM backbone, we introduce a Posterior Optimization Trajectory (POT)-Guided optimization scheme (POTGui) to accelerate convergence. Concretely, we propose a POT Generator (POTGen) to generate the posterior (future) optimization direction in advance to guide the current optimization iteration, through which the model can generally converge within 10 epochs. Extensive experiments demonstrate that the proposed method improves performance by over 66.48% and converges more than 6 times faster than the existing state-of-the-art approach.
