Yik-Chung Wu

HPTune: Hierarchical Proactive Tuning for Collision-Free Model Predictive Control

Jan 29, 2026

Abstract: Parameter tuning is a powerful approach to enhance adaptability in model predictive control (MPC) motion planners. However, existing methods typically operate in a myopic fashion that only evaluates executed actions, leading to inefficient parameter updates due to the sparsity of failure events (e.g., obstacle proximity or collision). To cope with this issue, we propose to extend evaluation from executed to non-executed actions, yielding a hierarchical proactive tuning (HPTune) framework that combines fast-level tuning and slow-level tuning. The fast level adopts risk indicators of predictive closing speed and predictive proximity distance, and the slow level leverages an extended evaluation loss for closed-loop backpropagation. Additionally, we integrate HPTune with Doppler LiDAR, which provides obstacle velocities in addition to position-only measurements for enhanced motion prediction, thus facilitating the implementation of HPTune. Extensive experiments on a high-fidelity simulator demonstrate that HPTune achieves efficient MPC tuning and outperforms various baseline schemes in complex environments. It is found that HPTune enables situation-tailored motion planning by formulating a safe, agile collision avoidance strategy.

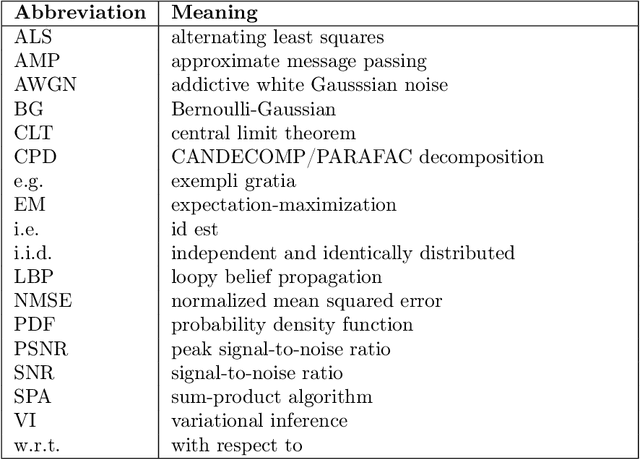

When Bayesian Tensor Completion Meets Multioutput Gaussian Processes: Functional Universality and Rank Learning

Dec 25, 2025

Abstract: Functional tensor decomposition can analyze multi-dimensional data with real-valued indices, paving the way for applications in machine learning and signal processing. A limitation of existing approaches is the assumption that the tensor rank, a critical parameter governing model complexity, is known. However, determining the optimal rank is a non-deterministic polynomial-time hard (NP-hard) task, and there is limited understanding of the expressive power of functional low-rank tensor models for continuous signals. We propose a rank-revealing functional Bayesian tensor completion (RR-FBTC) method. Modeling the latent functions through carefully designed multioutput Gaussian processes, RR-FBTC handles tensors with real-valued indices while enabling automatic tensor rank determination during the inference process. We establish the universal approximation property of the model for continuous multi-dimensional signals, demonstrating its expressive power in a concise format. To learn this model, we employ the variational inference framework and derive an efficient algorithm with closed-form updates. Experiments on both synthetic and real-world datasets demonstrate the effectiveness and superiority of RR-FBTC over state-of-the-art approaches. The code is available at https://github.com/OceanSTARLab/RR-FBTC.

A Unified Distributed Algorithm for Hybrid Near-Far Field Activity Detection in Cell-Free Massive MIMO

Sep 18, 2025

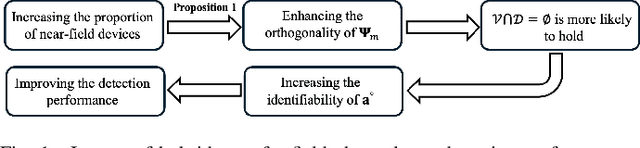

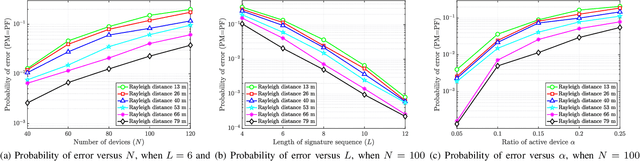

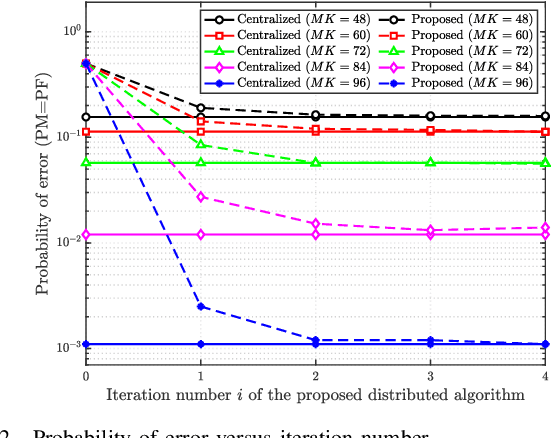

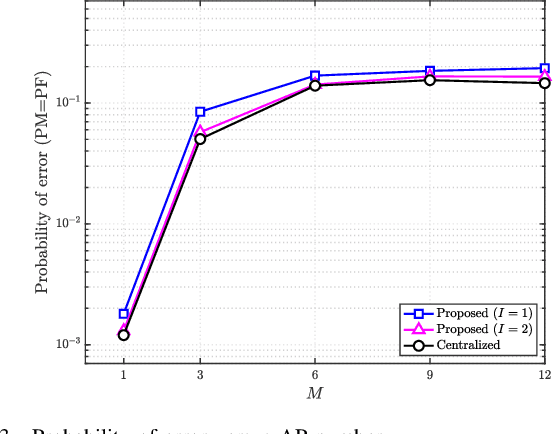

Abstract: Considerable effort has recently been devoted to activity detection for massive machine-type communications in cell-free multiple-input multiple-output (MIMO) systems. However, as the number of antennas at the access points (APs) increases, the Rayleigh distance that separates the near-field and far-field regions also expands, rendering the conventional assumption of far-field propagation alone impractical. To address this challenge, this paper establishes a covariance-based formulation that can effectively capture the statistical properties of hybrid near-far field channels. Based on this formulation, we theoretically reveal that increasing the proportion of near-field channels enhances the detection performance. Furthermore, we propose a distributed algorithm in which each AP performs local activity detection and sends only its detection results to the central processing unit, thus significantly reducing the computational complexity and the communication overhead. Besides having a convergence guarantee, the proposed algorithm is unified in the sense that it can handle single-cell or cell-free systems with either near-field or far-field devices as special cases. Simulation results validate the theoretical analyses and demonstrate the superior performance of the proposed approach compared with existing methods.

Aligning Effective Tokens with Video Anomaly in Large Language Models

Aug 08, 2025

Abstract: Understanding abnormal events in videos is a vital and challenging task that has garnered significant attention in a wide range of applications. Although current video understanding Multi-modal Large Language Models (MLLMs) are capable of analyzing general videos, they often struggle to handle anomalies due to the spatial and temporal sparsity of abnormal events, where the redundant information always leads to suboptimal outcomes. To address these challenges, exploiting the representation and generalization capabilities of Vision Language Models (VLMs) and Large Language Models (LLMs), we propose VA-GPT, a novel MLLM designed for summarizing and localizing abnormal events in various videos. Our approach efficiently aligns effective tokens between visual encoders and LLMs through two key proposed modules: Spatial Effective Token Selection (SETS) and Temporal Effective Token Generation (TETG). These modules enable our model to effectively capture and analyze both spatial and temporal information associated with abnormal events, resulting in more accurate responses and interactions. Furthermore, we construct an instruction-following dataset specifically for fine-tuning video-anomaly-aware MLLMs, and introduce a cross-domain evaluation benchmark based on the XD-Violence dataset. Our proposed method outperforms existing state-of-the-art methods on various benchmarks.

Distributed Activity Detection for Cell-Free Hybrid Near-Far Field Communications

Jun 17, 2025

Abstract: Considerable effort has recently been devoted to activity detection for massive machine-type communications in cell-free massive MIMO. However, in practice, as the number of antennas at the access points (APs) increases, the Rayleigh distance that separates the near-field and far-field regions also expands, rendering the conventional assumption of far-field propagation alone impractical. To address this challenge, this paper considers hybrid near-far field activity detection in cell-free massive MIMO and establishes a covariance-based formulation, which facilitates the development of a distributed algorithm to alleviate the computational burden at the central processing unit (CPU). Specifically, each AP performs local activity detection for the devices and then transmits the detection result to the CPU for further processing. In particular, a novel coordinate descent algorithm based on the Sherman-Morrison-Woodbury update with Taylor expansion is proposed to handle the local detection problem at each AP. Moreover, we theoretically analyze how the hybrid near-far field channels affect the detection performance. Simulation results validate the theoretical analysis and demonstrate the superior performance of the proposed approach compared with existing approaches.
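
As a rough illustration of the Sherman-Morrison-Woodbury idea mentioned in the abstract above, the sketch below applies the rank-one special case: given the inverse of a symmetric matrix A, it returns the inverse of A + delta * u u^T without a fresh inversion. This is not the paper's algorithm (which also involves a Taylor expansion and complex-valued channels); the function names, the real symmetric simplification, and the example numbers are assumptions made for illustration.

```python
def mat_vec(matrix, vector):
    """Multiply a square matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def sherman_morrison_update(a_inv, u, delta):
    """Return the inverse of (A + delta * u u^T) given a_inv = A^{-1}.

    Assumes A (and hence a_inv) is real and symmetric, so that
    A^{-1} u u^T A^{-1} equals the outer product w w^T with w = A^{-1} u.
    """
    w = mat_vec(a_inv, u)  # w = A^{-1} u
    denom = 1.0 + delta * sum(ui * wi for ui, wi in zip(u, w))
    if abs(denom) < 1e-12:
        raise ValueError("the rank-one update makes the matrix singular")
    scale = delta / denom
    n = len(u)
    return [[a_inv[i][j] - scale * w[i] * w[j] for j in range(n)]
            for i in range(n)]


# Hypothetical example: start from the identity and add 0.5 * u u^T.
identity_inv = [[1.0, 0.0], [0.0, 1.0]]
updated_inv = sherman_morrison_update(identity_inv, [1.0, 2.0], 0.5)
```

In a coordinate descent loop that changes one coefficient at a time, each step perturbs the covariance by one such rank-one term, so an update of this kind costs O(n^2) per step instead of the O(n^3) of a full inversion.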

FieldFormer: Self-supervised Reconstruction of Physical Fields via Tensor Attention Prior

Jun 13, 2025

Abstract: Reconstructing physical field tensors from in situ observations, such as radio maps and ocean sound speed fields, is crucial for enabling environment-aware decision making in various applications, e.g., wireless communications and underwater acoustics. Field data reconstruction is often challenging, due to the limited and noisy nature of the observations, necessitating the incorporation of prior information to aid the reconstruction process. Deep neural network-based data-driven structural constraints (e.g., "deeply learned priors") have shown promising performance. However, this family of techniques faces challenges such as model mismatches between training and testing phases. This work introduces FieldFormer, a self-supervised neural prior learned solely from the limited in situ observations without the need for offline training. Specifically, the proposed framework starts with modeling the fields of interest using the tensor Tucker model of a high multilinear rank, which ensures a universal approximation property for all fields. In the sequel, an attention mechanism is incorporated to learn the sparsity pattern that underlies the core tensor in order to reduce the solution space. In this way, a "complexity-adaptive" neural representation, grounded in the Tucker decomposition, is obtained that can flexibly represent various types of fields. A theoretical analysis is provided to support the recoverability of the proposed design. Moreover, extensive experiments, using various physical field tensors, demonstrate the superiority of the proposed approach compared to state-of-the-art baselines.

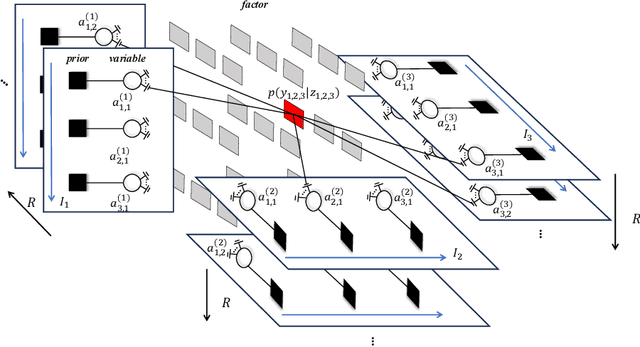

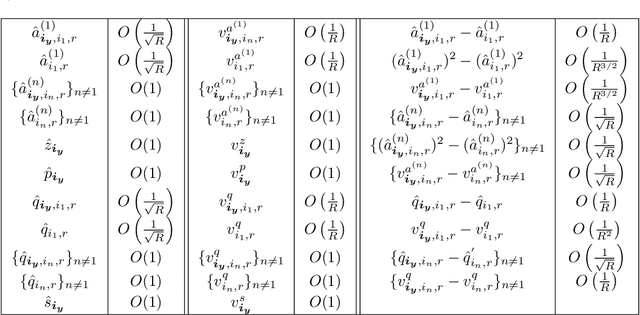

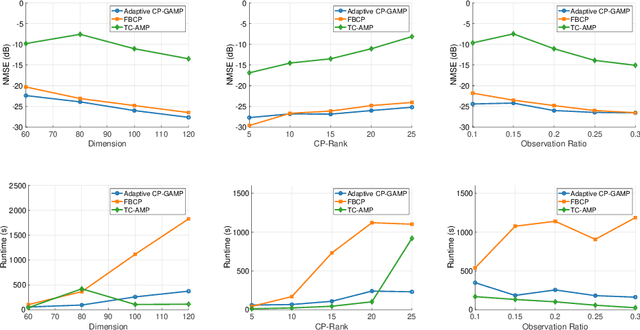

Large-Scale Bayesian Tensor Reconstruction: An Approximate Message Passing Solution

May 22, 2025

Abstract: Tensor CANDECOMP/PARAFAC decomposition (CPD) is a fundamental model for tensor reconstruction. Although the Bayesian framework allows for principled uncertainty quantification and automatic hyperparameter learning, existing methods do not scale well for large tensors because of high-dimensional matrix inversions. To this end, we introduce CP-GAMP, a scalable Bayesian CPD algorithm. This algorithm leverages generalized approximate message passing (GAMP) to avoid matrix inversions and incorporates an expectation-maximization routine to jointly infer the tensor rank and noise power. In experiments on synthetic 100×100×100 rank-20 tensors with only 20% of the elements observed, the proposed algorithm reduces runtime by 82.7% compared to the state-of-the-art variational Bayesian CPD method, while maintaining comparable reconstruction accuracy.
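
To make the CPD model in the abstract above concrete, the sketch below rebuilds a third-order tensor from three factor matrices, with each entry being a sum over rank-one components, and counts the free parameters of the model. It shows only the CPD forward model, not CP-GAMP or any Bayesian inference; the function names and the toy factors are assumptions for illustration.

```python
def cp_reconstruct(a, b, c):
    """Build X[i][j][k] = sum_r a[i][r] * b[j][r] * c[k][r].

    a, b, c are factor matrices (lists of rows) sharing the same number
    of columns, which is the CP rank.
    """
    rank = len(a[0])
    return [[[sum(a[i][r] * b[j][r] * c[k][r] for r in range(rank))
              for k in range(len(c))]
             for j in range(len(b))]
            for i in range(len(a))]


def cp_parameter_count(dims, rank):
    """Number of factor-matrix entries for a tensor of the given dimensions."""
    return rank * sum(dims)


# Toy rank-2 example with hypothetical factors (a 2 x 2 x 1 tensor).
factor_a = [[1.0, 0.0], [0.0, 1.0]]
factor_b = [[1.0, 2.0], [3.0, 4.0]]
factor_c = [[1.0, 1.0]]
tensor = cp_reconstruct(factor_a, factor_b, factor_c)

# Setting from the abstract: 100 x 100 x 100 tensor, rank 20.
params = cp_parameter_count((100, 100, 100), 20)
```

For the 100×100×100 rank-20 setting, the factors hold 6,000 numbers while the tensor has 1,000,000 entries, which is why reconstruction from 20% of the entries (200,000 observations) is plausible under a low-rank model.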

Nonparametric Teaching for Graph Property Learners

May 21, 2025

Abstract: Inferring properties of graph-structured data, e.g., the solubility of molecules, essentially involves learning the implicit mapping from graphs to their properties. This learning process is often costly for graph property learners like Graph Convolutional Networks (GCNs). To address this, we propose a paradigm called Graph Neural Teaching (GraNT) that reinterprets the learning process through a novel nonparametric teaching perspective. Specifically, the latter offers a theoretical framework for teaching implicitly defined (i.e., nonparametric) mappings via example selection. Such an implicit mapping is realized by a dense set of graph-property pairs, with the GraNT teacher selecting a subset of them to promote faster convergence in GCN training. By analytically examining the impact of graph structure on parameter-based gradient descent during training, and recasting the evolution of GCNs, shaped by parameter updates, through functional gradient descent in nonparametric teaching, we show for the first time that teaching graph property learners (i.e., GCNs) is consistent with teaching structure-aware nonparametric learners. These new findings readily commit GraNT to enhancing learning efficiency of the graph property learner, showing significant reductions in training time for graph-level regression (-36.62%), graph-level classification (-38.19%), node-level regression (-30.97%) and node-level classification (-47.30%), all while maintaining its generalization performance.

Deep Unfolding with Kernel-based Quantization in MIMO Detection

May 19, 2025

Abstract: The development of edge computing places critical demands on energy-efficient model deployment for multiple-input multiple-output (MIMO) detection tasks. Deploying deep unfolding models such as PGD-Nets and ADMM-Nets onto resource-constrained edge devices using quantization methods is challenging. Existing quantization methods based on quantization aware training (QAT) suffer from performance degradation due to their reliance on parametric distribution assumptions for activations and on static quantization step sizes. To address these challenges, this paper proposes a novel kernel-based adaptive quantization (KAQ) framework for deep unfolding networks. By utilizing a joint kernel density estimation (KDE) and maximum mean discrepancy (MMD) approach to align activation distributions between full-precision and quantized models, the need for prior distribution assumptions is eliminated. Additionally, a dynamic step size updating method is introduced to adjust the quantization step size based on the channel conditions of wireless networks. Extensive simulations demonstrate that the proposed KAQ framework outperforms traditional methods in accuracy and reduces the model's inference latency.
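
The abstract above describes aligning activation distributions with MMD; the sketch below shows only that ingredient, as a biased Gaussian-kernel estimate of squared MMD between full-precision samples and their uniformly quantized versions. It is not the KAQ framework itself: the KDE part and the channel-dependent step size update are omitted, and the function names, bandwidth, step sizes, and sample values are assumptions for illustration.

```python
import math


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two scalars."""
    return math.exp(-((x - y) ** 2) / (2.0 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased (V-statistic) estimate of squared MMD between two 1-D samples."""
    def mean_kernel(a, b):
        total = sum(gaussian_kernel(p, q, bandwidth) for p in a for q in b)
        return total / (len(a) * len(b))
    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)


def quantize(values, step):
    """Uniform quantization: snap each value to the nearest multiple of step."""
    return [step * round(v / step) for v in values]


# Hypothetical activations and two candidate step sizes.
activations = [-1.3, -0.4, 0.1, 0.7, 1.9]
mmd_fine = mmd_squared(activations, quantize(activations, 0.1))
mmd_coarse = mmd_squared(activations, quantize(activations, 2.0))
```

A smaller discrepancy means the quantized activations stay closer in distribution to the full-precision ones, so a quantity of this kind can serve as a training signal without assuming a parametric form for the activation distribution.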

iMacSR: Intermediate Multi-Access Supervision and Regularization in Training Autonomous Driving Models

May 01, 2025

Abstract: Deep Learning (DL)-based street scene semantic understanding has become a cornerstone of autonomous driving (AD). DL model performance heavily relies on network depth. Specifically, deeper DL architectures yield better segmentation performance. However, as models grow deeper, traditional one-point supervision at the final layer struggles to optimize intermediate feature representations, leading to subpar training outcomes. To address this, we propose an intermediate Multi-access Supervision and Regularization (iMacSR) strategy. The proposed iMacSR introduces two novel components: (I) mutual information between latent features and ground truth as an intermediate supervision loss, which ensures robust feature alignment at multiple network depths; and (II) negative entropy regularization on hidden features, which discourages overconfident predictions and mitigates overfitting. These intermediate terms are combined with the original final-layer training loss to form a unified optimization objective, enabling comprehensive optimization across the network hierarchy. The proposed iMacSR provides a robust framework for training deep AD architectures, advancing the performance of perception systems in real-world driving scenarios. In addition, we conduct a theoretical convergence analysis for the proposed iMacSR. Extensive experiments on AD benchmarks (i.e., Cityscapes, CamVid, and SynthiaSF datasets) demonstrate that iMacSR outperforms the conventional final-layer single-point supervision method by up to 9.19% in mean Intersection over Union (mIoU).
