Bo Ai

Sherman

Statistics Approximation-Enabled Distributed Beamforming for Cell-Free Massive MIMO

Feb 03, 2026

Abstract: We study a distributed beamforming approach for cell-free massive multiple-input multiple-output networks, referred to as Global Statistics & Local Instantaneous information-based minimum mean-square error (GSLI-MMSE). The scenario with multi-antenna access points (APs) is considered over three different channel models: correlated Rician fading with fixed or random line-of-sight (LoS) phase-shifts, and correlated Rayleigh fading. With the aid of matrix inversion derivations, we construct the conventional MMSE combining from the perspective of each AP, where global instantaneous information is involved. Then, for an arbitrary AP, we apply the statistics approximation methodology to approximate instantaneous terms related to other APs by channel statistics, which yields a distributed combining scheme at each AP that uses local instantaneous information and global statistics. With the aid of uplink-downlink duality, we derive the respective GSLI-MMSE precoding schemes. Numerical results show that the proposed GSLI-MMSE scheme achieves performance comparable to the optimal centralized MMSE scheme under stable LoS conditions, e.g., with static users having Rician fading with a fixed LoS path.
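
For orientation, the textbook centralized MMSE combining vector for user k under perfect channel knowledge can be written as below. This is a simplified sketch rather than the paper's own expression: the symbols are assumptions introduced here (h_i is the collective channel of user i across all APs, p_i its transmit power, sigma^2 the noise power, K the number of users, M the total number of antennas), and the paper's version presumably also involves channel estimates and estimation-error statistics.

```latex
\mathbf{v}_k = p_k \left( \sum_{i=1}^{K} p_i \mathbf{h}_i \mathbf{h}_i^{\mathsf{H}} + \sigma^2 \mathbf{I}_M \right)^{-1} \mathbf{h}_k
```

The matrix inverse couples the instantaneous channels seen at every AP, which is the kind of global instantaneous information that the abstract describes replacing with channel statistics for the other APs.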

Low-Complexity Distributed Combining Design for Near-Field Cell-Free XL-MIMO Systems

Feb 03, 2026

Abstract: In this paper, we investigate the low-complexity distributed combining scheme design for near-field cell-free extremely large-scale multiple-input multiple-output (CF XL-MIMO) systems. Firstly, we construct the uplink spectral efficiency (SE) performance analysis framework for CF XL-MIMO systems over centralized and distributed processing schemes. Notably, we derive the centralized minimum mean-square error (CMMSE) and local minimum mean-square error (LMMSE) combining schemes over arbitrary channel estimators. Then, focusing on the CMMSE and LMMSE combining schemes, we propose five low-complexity distributed combining schemes based on the matrix approximation methodology or the symmetric successive over-relaxation (SSOR) algorithm. More specifically, we propose two matrix approximation methodology-aided combining schemes: Global Statistics & Local Instantaneous information-based MMSE (GSLI-MMSE) and Statistics matrix Inversion-based LMMSE (SI-LMMSE). These two schemes are derived by approximating the global instantaneous information in the CMMSE combining and the local instantaneous information in the LMMSE combining with the global and local statistics information by asymptotic analysis and matrix expectation approximation, respectively. Moreover, by applying the low-complexity SSOR algorithm to iteratively solve the matrix inversion in the LMMSE combining, we derive three distributed SSOR-based LMMSE combining schemes, distinguished by the information used and the initial values.
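
To illustrate the SSOR idea mentioned above, here is a minimal sketch of a generic SSOR iteration that solves A x = b without forming the inverse of A. It is not the paper's combining scheme: the function name `ssor_solve`, the relaxation factor `omega`, the iteration count, and the small real-valued test matrix are all assumptions chosen for illustration. The optional `x0` argument mirrors the abstract's remark that the schemes differ in their initial values.

```python
def ssor_solve(A, b, omega=1.0, iters=50, x0=None):
    """Approximate the solution of A x = b with SSOR sweeps.

    A is a square matrix given as a list of rows and is assumed to be
    symmetric positive definite; omega must lie in (0, 2).
    """
    n = len(b)
    x = list(x0) if x0 is not None else [0.0] * n
    for _ in range(iters):
        # Forward SOR sweep: update entries from first to last.
        for i in range(n):
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (1 - omega) * x[i] + omega * (b[i] - off_diag) / A[i][i]
        # Backward SOR sweep: update entries from last to first.
        for i in reversed(range(n)):
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (1 - omega) * x[i] + omega * (b[i] - off_diag) / A[i][i]
    return x


# Hypothetical 3x3 symmetric positive definite system.
A = [[4.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 2.0]]
b = [1.0, 2.0, 3.0]
x = ssor_solve(A, b)
residual = max(abs(sum(A[i][j] * x[j] for j in range(3)) - b[i]) for i in range(3))
```

Each sweep costs on the order of n^2 operations, so a few iterations can be cheaper than an explicit inversion when n is large, and a good starting point `x0` reduces the number of sweeps needed.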

Low-Complexity Multi-Agent Continual Learning for Stacked Intelligent Metasurface-Assisted Secure Communications

Feb 02, 2026

Abstract: Stacked intelligent metasurfaces (SIMs), composed of multiple layers of reconfigurable transmissive metasurfaces, are gaining prominence as a transformative technology for future wireless communication security. This paper investigates the integration of SIM into multi-user multiple-input multiple-output (MIMO) systems to enhance physical layer security. A novel system architecture is proposed, wherein each base station (BS) antenna transmits a dedicated single-user stream, while a multi-layer SIM executes wave-based beamforming in the electromagnetic domain, thereby avoiding the need for complex baseband digital precoding and significantly reducing hardware overhead. To maximize the weighted sum secrecy rate (WSSR), we formulate a joint precoding optimization problem over BS power allocation and SIM phase shifts, which is high-dimensional and non-convex due to the complexity of the objective function and the coupling among optimization variables. To address this, we propose a manifold-enhanced heterogeneous multi-agent continual learning (MHACL) framework that incorporates gradient representation and dual-scale policy optimization to achieve robust performance in dynamic environments with high demands for secure communication. Furthermore, we develop SIM-MHACL (SIMHACL), a low-complexity learning template that embeds phase coordination into a product manifold structure, reducing the exponential search space to linear complexity while maintaining physical feasibility. Simulation results validate that the proposed framework achieves millisecond-level per-iteration training in SIM-assisted systems, significantly outperforming various baseline schemes, with SIMHACL achieving comparable WSSR to MHACL while reducing computation time by 30%.
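
As a rough illustration of the objective named above, the sketch below evaluates a weighted sum secrecy rate using the standard definition of secrecy rate as the non-negative gap between the legitimate user's rate and the eavesdropper's rate. The function names and the use of plain SNR inputs are assumptions made here; the paper's exact signal model (for example, interference terms or multiple eavesdroppers) is not given in the abstract.

```python
import math


def secrecy_rate(snr_user, snr_eve):
    """Secrecy rate in bit/s/Hz: user rate minus eavesdropper rate, floored at zero."""
    return max(0.0, math.log2(1 + snr_user) - math.log2(1 + snr_eve))


def weighted_sum_secrecy_rate(weights, snr_users, snr_eves):
    """Weighted sum of per-user secrecy rates (linear-scale SNR inputs)."""
    return sum(w * secrecy_rate(u, e)
               for w, u, e in zip(weights, snr_users, snr_eves))


# Hypothetical two-user example with linear-scale SNR values.
wssr = weighted_sum_secrecy_rate([0.5, 0.5], [3.0, 1.0], [1.0, 3.0])
```

In this example the second user contributes nothing because its eavesdropper link is stronger than its own link, which is why the floor at zero matters.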

Artificial Intelligence Empowered Channel Prediction: A New Paradigm for Propagation Channel Modeling

Jan 14, 2026

Abstract: This paper proposes a novel paradigm centered on Artificial Intelligence (AI)-empowered propagation channel prediction to address the limitations of traditional channel modeling. We present a comprehensive framework that deeply integrates heterogeneous environmental data and physical propagation knowledge into AI models for site-specific channel prediction, which is referred to as channel inference. By leveraging AI to infer site-specific wireless channel states, the proposed paradigm enables accurate prediction of channel characteristics at both link and area levels, capturing the spatio-temporal evolution of radio propagation. Some novel strategies to realize the paradigm are introduced and discussed, including AI-native and AI-hybrid inference approaches. This paper also investigates how to enhance model generalization through transfer learning and improve interpretability via explainable AI techniques. Our approach demonstrates significant practical efficacy, achieving an average path loss prediction root mean square error (RMSE) of about 4 dB and reducing training time by 60%-75%. This new modeling paradigm provides a foundational pathway toward high-fidelity, generalizable, and physically consistent propagation channel prediction for future communication networks.
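
The RMSE figure quoted above is a standard error metric computed on path loss values expressed in dB. A minimal sketch of that computation follows; the function name and the sample values are hypothetical and are not taken from the paper.

```python
import math


def rmse_db(predicted_db, measured_db):
    """Root mean square error between predicted and measured path loss, in dB."""
    if not predicted_db or len(predicted_db) != len(measured_db):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - m) ** 2 for p, m in zip(predicted_db, measured_db)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical path loss samples in dB.
error = rmse_db([100.0, 110.0], [103.0, 106.0])
```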

Hierarchical Online-Scheduling for Energy-Efficient Split Inference with Progressive Transmission

Jan 13, 2026

Abstract: Device-edge collaborative inference with Deep Neural Networks (DNNs) faces fundamental trade-offs among accuracy, latency and energy consumption. Current scheduling exhibits two drawbacks: a granularity mismatch between coarse, task-level decisions and fine-grained, packet-level channel dynamics, and insufficient awareness of per-task complexity. Consequently, scheduling solely at the task level leads to inefficient resource utilization. This paper proposes a novel ENergy-ACcuracy Hierarchical optimization framework for split Inference, named ENACHI, that jointly optimizes task- and packet-level scheduling to maximize accuracy under energy and delay constraints. A two-tier Lyapunov-based framework is developed for ENACHI, with a progressive transmission technique further integrated to enhance adaptivity. At the task level, an outer drift-plus-penalty loop makes online decisions for DNN partitioning and bandwidth allocation, and establishes a reference power budget to manage the long-term energy-accuracy trade-off. At the packet level, an uncertainty-aware progressive transmission mechanism is employed to adaptively manage per-sample task complexity. This is integrated with a nested inner control loop implementing a novel reference-tracking policy, which dynamically adjusts per-slot transmit power to adapt to fluctuating channel conditions. Experiments on the ImageNet dataset demonstrate that ENACHI outperforms state-of-the-art benchmarks under varying deadlines and bandwidths, achieving a 43.12% gain in inference accuracy with a 62.13% reduction in energy consumption under stringent deadlines, and exhibits high scalability by maintaining stable energy consumption in congested multi-user scenarios.
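
The drift-plus-penalty loop mentioned above can be illustrated with a generic sketch: a virtual queue tracks how far cumulative power use exceeds a long-term budget, and each slot picks the action that best trades utility against the queue-weighted cost. This is not ENACHI itself. The utility used here (a log2 rate), the discrete power levels, the parameter `v`, and all function names are assumptions chosen to keep the example self-contained.

```python
import math


def choose_power(queue, gain, powers, v):
    """Pick the power level maximizing v * utility - queue * power for one slot."""
    return max(powers, key=lambda p: v * math.log2(1 + gain * p) - queue * p)


def run_drift_plus_penalty(gains, powers, budget, v):
    """Run the per-slot rule over a sequence of channel gains.

    Returns the chosen powers and the final virtual queue backlog.
    """
    queue = 0.0
    used = []
    for gain in gains:
        p = choose_power(queue, gain, powers, v)
        used.append(p)
        # Virtual queue grows when the slot spends more than the budget.
        queue = max(queue + p - budget, 0.0)
    return used, queue


# Hypothetical run: constant channel gain, three power levels, budget of 0.5 per slot.
used, backlog = run_drift_plus_penalty([1.0] * 200, [0.0, 0.5, 1.0], 0.5, 5.0)
average_power = sum(used) / len(used)
```

A larger `v` favors utility and lets the queue grow further before power is cut back; in every case the average power exceeds the budget by at most the final backlog divided by the number of slots.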

A Novel Deep Learning-Based Coarse-to-Fine Frame Synchronization Method for OTFS Systems

Jan 09, 2026

Abstract: Orthogonal time frequency space (OTFS) modulation is a robust candidate waveform for future wireless systems, particularly in high-mobility scenarios, as it effectively mitigates the impact of rapidly time-varying channels by mapping symbols in the delay-Doppler (DD) domain. However, accurate frame synchronization in OTFS systems remains a challenge due to the performance limitations of conventional algorithms. To address this, we propose a low-complexity synchronization method based on a coarse-to-fine deep residual network (ResNet) architecture. Unlike traditional approaches relying on high-overhead preamble structures, our method exploits the intrinsic periodic features of OTFS pilots in the delay-time (DT) domain to formulate synchronization as a hierarchical classification problem. Specifically, the proposed architecture employs a two-stage strategy to first narrow the search space and then pinpoint the precise symbol timing offset (STO), thereby significantly reducing computational complexity while maintaining high estimation accuracy. We construct a comprehensive simulation dataset incorporating diverse channel models and randomized STO to validate the method. Extensive simulation results demonstrate that the proposed method achieves robust signal start detection and superior accuracy compared to conventional benchmarks, particularly in low signal-to-noise ratio (SNR) regimes and high-mobility scenarios.

Timeliness-Oriented Scheduling and Resource Allocation in Multi-Region Collaborative Perception

Jan 08, 2026

Abstract: Collaborative perception (CP) is a critical technology in applications like autonomous driving and smart cities. It involves the sharing and fusion of information among sensors to overcome the limitations of individual perception, such as blind spots and range limitations. However, CP faces two primary challenges. First, due to the dynamic nature of the environment, the timeliness of the transmitted information is critical to perception performance. Second, with limited computational power at the sensors and constrained wireless bandwidth, the communication volume must be carefully designed to ensure feature representations are both effective and sufficient. This work studies the dynamic scheduling problem in a multi-region CP scenario, and presents a Timeliness-Aware Multi-region Prioritized (TAMP) scheduling algorithm to trade off perception accuracy and communication resource usage. Timeliness reflects the utility of information that decays as time elapses, which is manifested by the perception performance in CP tasks. We propose an empirical penalty function that maps the joint impact of Age of Information (AoI) and communication volume to perception performance. Aiming to minimize this timeliness-oriented penalty in the long term, and recognizing that scheduling decisions have a cumulative effect on subsequent system states, we propose the TAMP scheduling algorithm. TAMP is a Lyapunov-based optimization policy that decomposes the long-term average objective into a per-slot prioritization problem, balancing the scheduling worth against resource cost. We validate our algorithm in both intersection and corridor scenarios with the real-world Roadside Cooperative perception (RCooper) dataset. Extensive simulations demonstrate that TAMP outperforms the best-performing baseline, achieving an Average Precision (AP) improvement of up to 27% across various configurations.
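
To make the Age of Information notion concrete, the sketch below tracks per-region AoI over time slots under a simple stand-in policy that always refreshes the regions with the stalest information. It is not the TAMP rule: the one-slot delivery assumption (age resets to 1 when a region is scheduled), the "oldest first" policy, and the function names are all assumptions made here for illustration.

```python
def step_aoi(aoi, scheduled):
    """Advance one slot: scheduled regions reset to age 1, the others grow by 1."""
    return [1 if i in scheduled else age + 1 for i, age in enumerate(aoi)]


def schedule_oldest(aoi, k):
    """Stand-in policy: pick the k regions with the largest current age."""
    order = sorted(range(len(aoi)), key=lambda i: aoi[i], reverse=True)
    return set(order[:k])


# Hypothetical three-region system where one region can be updated per slot.
aoi = [3, 5, 2]
history = []
for _ in range(4):
    chosen = schedule_oldest(aoi, 1)
    aoi = step_aoi(aoi, chosen)
    history.append(list(aoi))
```

A policy of the kind the abstract describes would replace `schedule_oldest` with a per-slot priority that also weighs the penalty function and the communication cost of each region.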

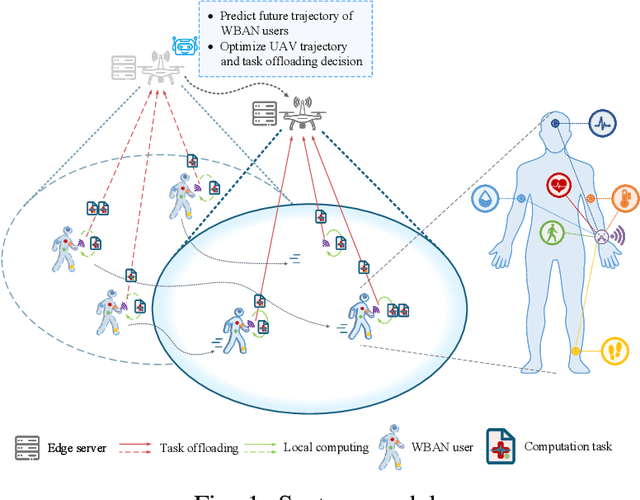

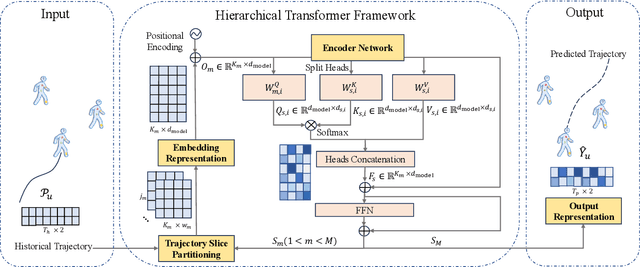

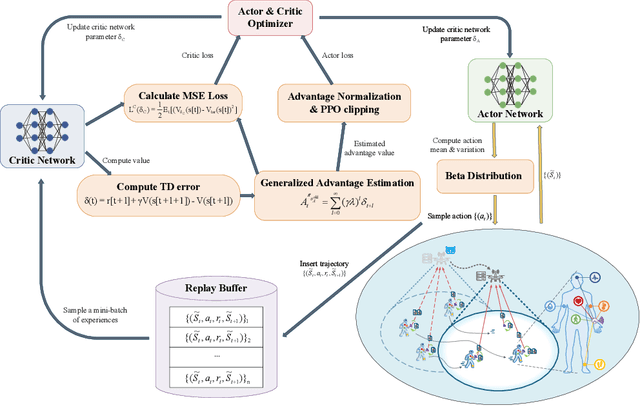

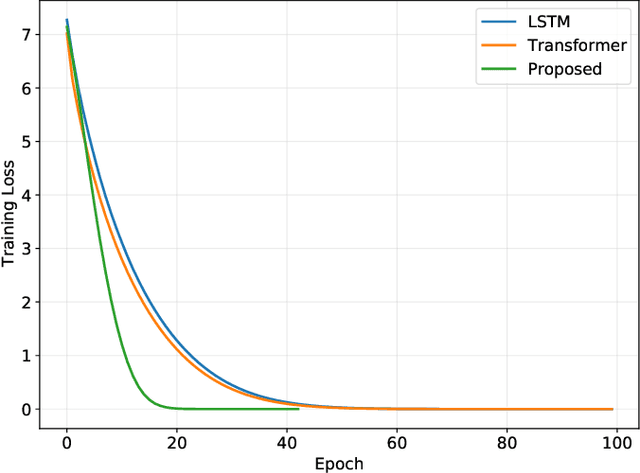

Embodied AI-Enhanced IoMT Edge Computing: UAV Trajectory Optimization and Task Offloading with Mobility Prediction

Dec 24, 2025

Abstract: Due to their inherent flexibility and autonomous operation, unmanned aerial vehicles (UAVs) have been widely used in the Internet of Medical Things (IoMT) to provide real-time biomedical edge computing service for wireless body area network (WBAN) users. In this paper, considering the time-varying task criticality characteristics of diverse WBAN users and the dual mobility between WBAN users and UAV, we investigate the dynamic task offloading and UAV flight trajectory optimization problem to minimize the weighted average task completion time of all the WBAN users, under the constraint of UAV energy consumption. To tackle the problem, an embodied AI-enhanced IoMT edge computing framework is established. Specifically, we propose a novel hierarchical multi-scale Transformer-based user trajectory prediction model based on the users' historical trajectory traces captured by the embodied AI agent (i.e., UAV). Afterwards, a prediction-enhanced deep reinforcement learning (DRL) algorithm that integrates predicted users' mobility information is designed for intelligently optimizing UAV flight trajectory and task offloading decisions. Real-world movement traces and simulation results demonstrate the superiority of the proposed methods in comparison with the existing benchmarks.

A Geometry Map-Based Site-Specific Propagation Channel Model for Urban Scenarios

Nov 19, 2025

Abstract: With the rapid deployments of 5G and 6G networks, accurate modeling of urban radio propagation has become critical for system design and network planning. However, conventional statistical or empirical models fail to fully capture the influence of detailed geometric features on site-specific channel variances in dense urban environments. In this paper, we propose a geometry map-based propagation channel model that directly extracts key parameters from a 3D geometry map and incorporates the Uniform Theory of Diffraction (UTD) to recursively compute multiple diffraction fields, thereby enabling accurate prediction of site-specific large-scale path loss and time-varying Doppler characteristics in urban scenarios. A well-designed identification algorithm is developed to efficiently detect buildings that significantly affect signal propagation. The proposed model is validated using urban measurement data, showing excellent agreement of path loss in both line-of-sight (LOS) and non-line-of-sight (NLOS) conditions. In particular, for NLOS scenarios with complex diffractions, it outperforms the 3GPP and simplified models, reducing the RMSE by 7.1 dB and 3.18 dB, respectively. Doppler analysis further demonstrates its accuracy in capturing time-varying propagation characteristics, confirming the scalability and generalization of the model in urban environments.

GNN-Enabled Robust Hybrid Beamforming with Score-Based CSI Generation and Denoising

Nov 10, 2025

Abstract: Accurate Channel State Information (CSI) is critical for Hybrid Beamforming (HBF) tasks. However, obtaining high-resolution CSI remains challenging in practical wireless communication systems. To address this issue, we propose to utilize Graph Neural Networks (GNNs) and score-based generative models to enable robust HBF under imperfect CSI conditions. Firstly, we develop the Hybrid Message Graph Attention Network (HMGAT) which updates both node and edge features through node-level and edge-level message passing. Secondly, we design a Bidirectional Encoder Representations from Transformers (BERT)-based Noise Conditional Score Network (NCSN) to learn the distribution of high-resolution CSI, facilitating CSI generation and data augmentation to further improve HMGAT's performance. Finally, we present a Denoising Score Network (DSN) framework and its instantiation, termed DeBERT, which can denoise imperfect CSI under arbitrary channel error levels, thereby facilitating robust HBF. Experiments on DeepMIMO urban datasets demonstrate the proposed models' superior generalization, scalability, and robustness across various HBF tasks with perfect and imperfect CSI.
