Yiran Guo

Segment Policy Optimization: Effective Segment-Level Credit Assignment in RL for Large Language Models

May 29, 2025

Abstract:Enhancing the reasoning capabilities of large language models effectively using reinforcement learning (RL) remains a crucial challenge. Existing approaches primarily adopt two contrasting advantage estimation granularities: token-level methods (e.g., PPO) aim to provide fine-grained advantage signals but suffer from inaccurate estimation due to difficulties in training an accurate critic model. On the other extreme, trajectory-level methods (e.g., GRPO) solely rely on a coarse-grained advantage signal from the final reward, leading to imprecise credit assignment. To address these limitations, we propose Segment Policy Optimization (SPO), a novel RL framework that leverages segment-level advantage estimation at an intermediate granularity, achieving a better balance by offering more precise credit assignment than trajectory-level methods and requiring fewer estimation points than token-level methods, enabling accurate advantage estimation based on Monte Carlo (MC) without a critic model. SPO features three components with novel strategies: (1) flexible segment partition; (2) accurate segment advantage estimation; and (3) policy optimization using segment advantages, including a novel probability-mask strategy. We further instantiate SPO for two specific scenarios: (1) SPO-chain for short chain-of-thought (CoT), featuring novel cutpoint-based partition and chain-based advantage estimation, achieving $6$-$12$ percentage point improvements in accuracy over PPO and GRPO on GSM8K; and (2) SPO-tree for long CoT, featuring novel tree-based advantage estimation, which significantly reduces the cost of MC estimation, achieving $7$-$11$ percentage point improvements over GRPO on MATH500 under 2K and 4K context evaluation. We make our code publicly available at https://github.com/AIFrameResearch/SPO.

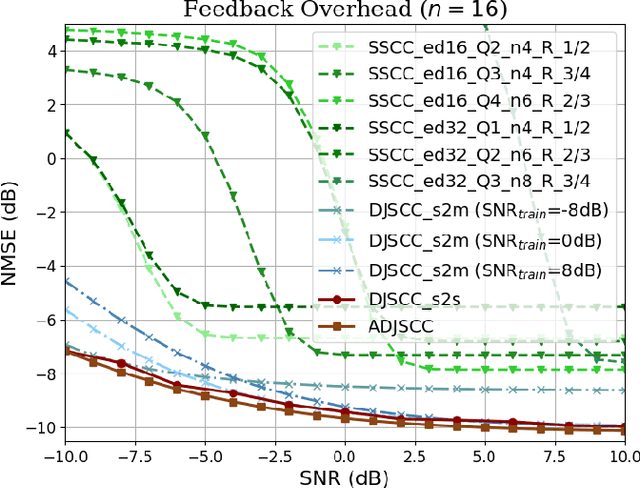

Uplink Assisted Joint Channel Estimation and CSI Feedback: An Approach Based on Deep Joint Source-Channel Coding

Apr 15, 2025

Abstract:In frequency division duplex (FDD) multiple-input multiple-output (MIMO) wireless communication systems, the acquisition of downlink channel state information (CSI) is essential for maximizing spatial resource utilization and improving system spectral efficiency. The separate design of modules in AI-based CSI feedback architectures under traditional modular communication frameworks, including channel estimation (CE), CSI compression and feedback, leads to sub-optimal performance. In this paper, we propose an uplink assisted joint CE and CSI feedback approach via deep learning for downlink CSI acquisition, which mitigates performance degradation caused by distribution bias across separately trained modules in traditional modular communication frameworks. The proposed network adopts a deep joint source-channel coding (DJSCC) architecture to mitigate the cliff effect encountered in the conventional separate source-channel coding. Furthermore, we exploit the uplink CSI as auxiliary information to enhance CSI reconstruction accuracy by leveraging the partial reciprocity between the uplink and downlink channels in FDD systems, without introducing additional overhead. The effectiveness of uplink CSI as assisted information and the necessity of an end-to-end multi-module joint training architecture are validated through comprehensive ablation and scalability experiments.

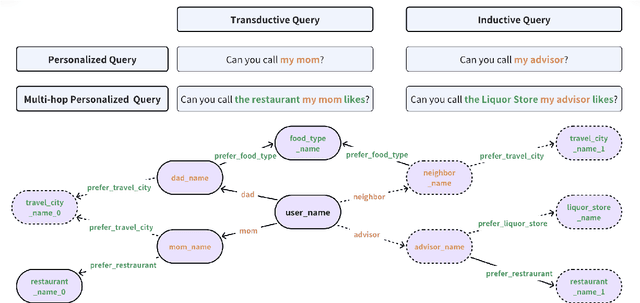

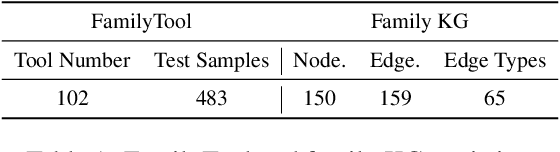

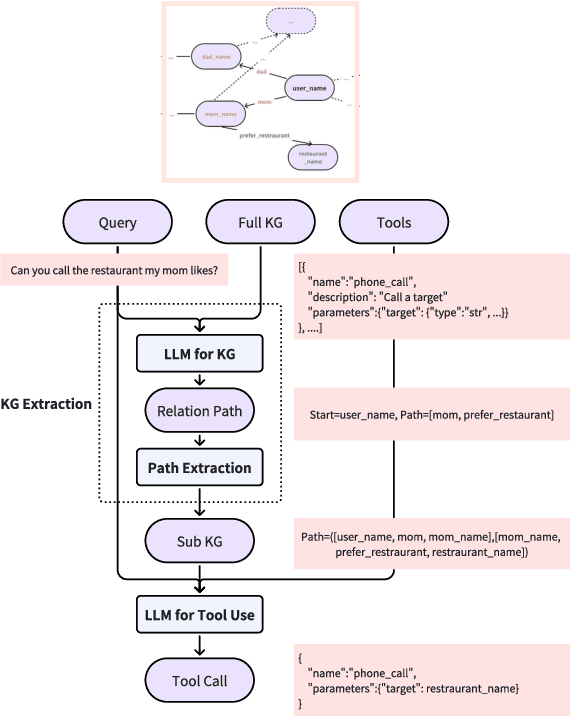

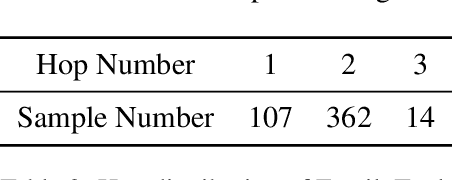

FamilyTool: A Multi-hop Personalized Tool Use Benchmark

Apr 09, 2025

Abstract:The integration of tool learning with Large Language Models (LLMs) has expanded their capabilities in handling complex tasks by leveraging external tools. However, existing benchmarks for tool learning inadequately address critical real-world personalized scenarios, particularly those requiring multi-hop reasoning and inductive knowledge adaptation in dynamic environments. To bridge this gap, we introduce FamilyTool, a novel benchmark grounded in a family-based knowledge graph (KG) that simulates personalized, multi-hop tool use scenarios. FamilyTool challenges LLMs with queries spanning 1 to 3 relational hops (e.g., inferring familial connections and preferences) and incorporates an inductive KG setting where models must adapt to unseen user preferences and relationships without re-training, a common limitation in prior approaches that compromises generalization. We further propose KGETool, a simple KG-augmented evaluation pipeline to systematically assess LLMs' tool use ability in these settings. Experiments reveal significant performance gaps in state-of-the-art LLMs, with accuracy dropping sharply as hop complexity increases and inductive scenarios exposing severe generalization deficits. These findings underscore the limitations of current LLMs in handling personalized, evolving real-world contexts and highlight the urgent need for advancements in tool-learning frameworks. FamilyTool serves as a critical resource for evaluating and advancing LLM agents' reasoning, adaptability, and scalability in complex, dynamic environments. Code and dataset are available at GitHub.

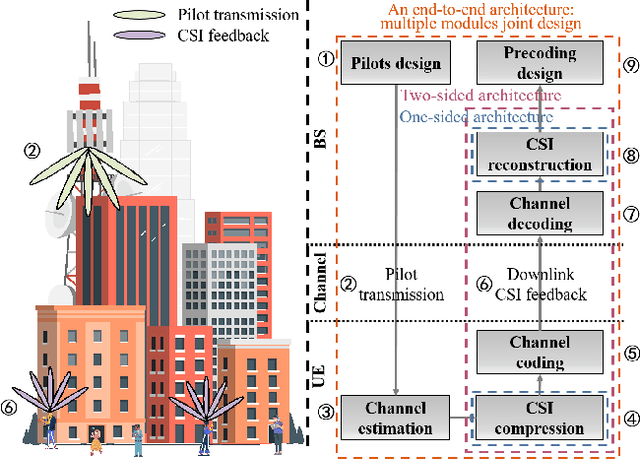

Deep Joint CSI Estimation-Feedback-Precoding for MU-MIMO OFDM Systems

Mar 06, 2025

Abstract:As the number of antennas in frequency-division duplex (FDD) multiple-input multiple-output (MIMO) systems increases, acquiring channel state information (CSI) becomes increasingly challenging due to limited spectral resources and feedback overhead. In this paper, we propose an end-to-end network that jointly performs pilot design, CSI estimation, CSI feedback, and precoding design in the multi-user MIMO orthogonal frequency-division multiplexing (OFDM) scenario. Multiple communication modules are jointly designed and trained with a common optimization objective to prevent mismatches between modules and discrepancies between individual module objectives and the final system goal. Experimental results demonstrate that, under the same feedback and CE overheads, the proposed joint multi-module end-to-end network achieves a higher multi-user downlink spectral efficiency than traditional algorithms based on separate architecture and partially separated artificial intelligence-based network architectures under comparable channel quality. Furthermore, compared to conventional separate architecture, the proposed joint architecture reduces the computational burden and model storage overhead at the UE side, facilitating the deployment of low-overhead multi-module joint architectures in practice. While slightly increasing storage requirements at the base station, it reduces computational complexity and precoding design delay, effectively mitigating the effects of channel aging.

Prior-Fitted Networks Scale to Larger Datasets When Treated as Weak Learners

Mar 03, 2025

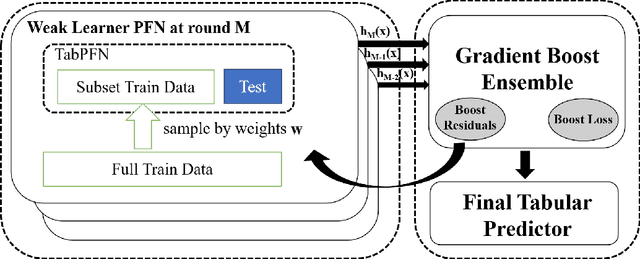

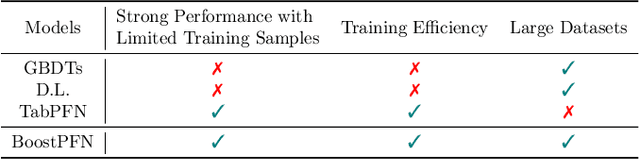

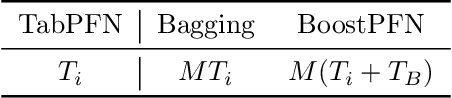

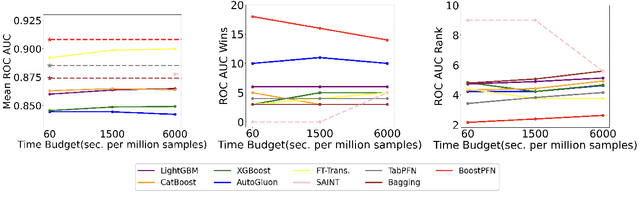

Abstract:Prior-Fitted Networks (PFNs) have recently been proposed to efficiently perform tabular classification tasks. Although they achieve good performance on small datasets, they encounter limitations with larger datasets. These limitations include significant memory consumption and increased computational complexity, primarily due to the impracticality of incorporating all training samples as inputs within these networks. To address these challenges, we investigate the fitting assumption for PFNs and input samples. Building on this understanding, we propose BoostPFN, designed to enhance the performance of these networks, especially for large-scale datasets. We also theoretically validate the convergence of BoostPFN, and our empirical results demonstrate that the BoostPFN method can outperform standard PFNs with the same size of training samples in large datasets and achieve a significant acceleration in training times compared to other established baselines in the field, including widely-used Gradient Boosting Decision Trees (GBDTs), deep learning methods and AutoML systems. High performance is maintained for up to 50x the pre-training size of PFNs, substantially extending the limit of training samples. Through this work, we address the challenges of efficiently handling large datasets via PFN-based models, paving the way for faster and more effective tabular data classification training and prediction process. Code is available at GitHub.

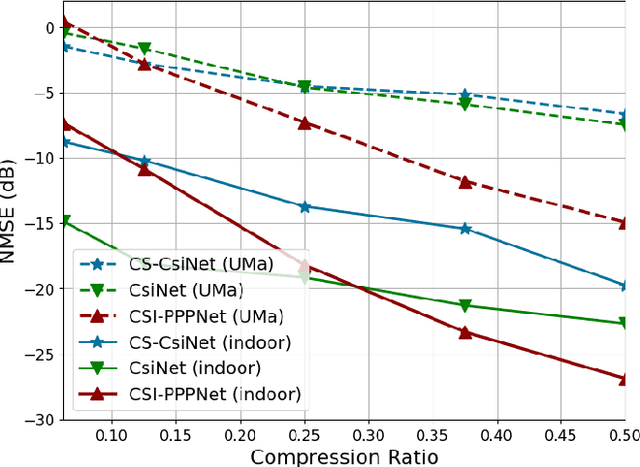

Deep Learning for CSI Feedback: One-Sided Model and Joint Multi-Module Learning Perspectives

May 09, 2024

Abstract:The use of deep learning (DL) for channel state information (CSI) feedback has garnered widespread attention across academia and industry. The mainstream DL architectures, e.g., CsiNet, deploy DL models on the base station (BS) side and the user equipment (UE) side, which are highly coupled and need to be trained jointly. However, two-sided DL models require collaborations between different network vendors and UE vendors, which entails considerable challenges in order to achieve consensus, e.g., model maintenance and responsibility. Furthermore, DL-based CSI feedback design invokes DL to reduce only the CSI feedback error, whereas jointly optimizing several modules at the transceivers would provide more significant gains. This article presents DL-based CSI feedback from the perspectives of one-sided model and joint multi-module learning. We herein introduce various novel one-sided CSI feedback architectures. In particular, the recently proposed CSI-PPPNet provides a one-sided one-for-all framework, which allows a DL model to deal with arbitrary CSI compression ratios. We review different joint multi-module learning methods, where the CSI feedback module is learned jointly with other modules including channel coding, channel estimation, pilot design and precoding design. Finally, future directions and challenges for DL-based CSI feedback are discussed, from the perspectives of inherent limitations of artificial intelligence (AI) and practical deployment issues.

Deep Joint CSI Feedback and Multiuser Precoding for MIMO OFDM Systems

Apr 25, 2024

Abstract:The design of precoding plays a crucial role in achieving a high downlink sum-rate in multiuser multiple-input multiple-output (MIMO) orthogonal frequency-division multiplexing (OFDM) systems. In this correspondence, we propose a deep learning-based joint CSI feedback and multiuser precoding method in frequency division duplex systems, aiming at maximizing the downlink sum-rate performance in an end-to-end manner. Specifically, the eigenvectors of the CSI matrix are compressed using deep joint source-channel coding techniques. This compression method enhances the resilience of the feedback CSI information against degradation in the feedback channel. A joint multiuser precoding module and a power allocation module are designed to adjust the precoding direction and the precoding power for users based on the feedback CSI information. Experimental results demonstrate that the downlink sum-rate can be significantly improved by using the proposed method, especially in scenarios with low signal-to-noise ratio and low feedback overhead.

TablePuppet: A Generic Framework for Relational Federated Learning

Mar 23, 2024

Abstract:Current federated learning (FL) approaches view decentralized training data as a single table, divided among participants either horizontally (by rows) or vertically (by columns). However, these approaches are inadequate for handling distributed relational tables across databases. This scenario requires intricate SQL operations like joins and unions to obtain the training data, which is either costly or restricted by privacy concerns. This raises the question: can we directly run FL on distributed relational tables? In this paper, we formalize this problem as relational federated learning (RFL). We propose TablePuppet, a generic framework for RFL that decomposes the learning process into two steps: (1) learning over join (LoJ) followed by (2) learning over union (LoU). In a nutshell, LoJ pushes learning down onto the vertical tables being joined, and LoU further pushes learning down onto the horizontal partitions of each vertical table. TablePuppet incorporates computation/communication optimizations to deal with the duplicate tuples introduced by joins, as well as differential privacy (DP) to protect against both feature and label leakages. We demonstrate the efficiency of TablePuppet in combination with two widely-used ML training algorithms, stochastic gradient descent (SGD) and alternating direction method of multipliers (ADMM), and compare their computation/communication complexity. We evaluate the SGD/ADMM algorithms developed atop TablePuppet by training diverse ML models. Our experimental results show that TablePuppet achieves model accuracy comparable to the centralized baselines running directly atop the SQL results. Moreover, ADMM takes less communication time than SGD to converge to similar model accuracy.

Swin Transformer-Based CSI Feedback for Massive MIMO

Jan 12, 2024

Abstract:For massive multiple-input multiple-output systems in the frequency division duplex (FDD) mode, accurate downlink channel state information (CSI) is required at the base station (BS). However, the increasing number of transmit antennas aggravates the feedback overhead of CSI. Recently, deep learning (DL) has shown considerable potential to reduce CSI feedback overhead. In this paper, we propose a Swin Transformer-based autoencoder network called SwinCFNet for the CSI feedback task. In particular, the proposed method can effectively capture the long-range dependence information of CSI. Moreover, we explore the impact of the number of Swin Transformer blocks and the dimension of feature channels on the performance of SwinCFNet. Experimental results show that SwinCFNet significantly outperforms other DL-based methods with comparable model sizes, especially for the outdoor scenario.
