Hao Su

Member, IEEE

Learning Physical Principles from Interaction: Self-Evolving Planning via Test-Time Memory

Feb 23, 2026

Abstract: Reliable object manipulation requires understanding physical properties that vary across objects and environments. Vision-language model (VLM) planners can reason about friction and stability in general terms; however, they often cannot predict how a specific ball will roll on a particular surface or which stone will provide a stable foundation without direct experience. We present PhysMem, a memory framework that enables VLM robot planners to learn physical principles from interaction at test time, without updating model parameters. The system records experiences, generates candidate hypotheses, and verifies them through targeted interaction before promoting validated knowledge to guide future decisions. A central design choice is verification before application: the system tests hypotheses against new observations rather than applying retrieved experience directly, reducing rigid reliance on prior experience when physical conditions change. We evaluate PhysMem on three real-world manipulation tasks and simulation benchmarks across four VLM backbones. On a controlled brick insertion task, principled abstraction achieves 76% success compared to 23% for direct experience retrieval, and real-world experiments show consistent improvement over 30-minute deployment sessions.
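The verify-before-promote idea in this abstract can be illustrated with a minimal sketch. This is not PhysMem's implementation: the class names, the `min_trials` and `min_support` thresholds, and the example statement are all hypothetical, chosen only to show a candidate hypothesis being tested against new observations before it is promoted to a principle.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare hypotheses by identity, not by field values
class Hypothesis:
    statement: str
    supports: int = 0  # number of interactions that confirmed the statement
    trials: int = 0    # number of interactions that tested the statement

    def record(self, confirmed: bool) -> None:
        self.trials += 1
        self.supports += int(confirmed)


@dataclass
class PrincipleMemory:
    # Hypothetical promotion thresholds, not values from the paper.
    min_trials: int = 3
    min_support: float = 0.8
    candidates: list = field(default_factory=list)
    principles: list = field(default_factory=list)

    def propose(self, statement: str) -> Hypothesis:
        """Store a new candidate hypothesis; it does not guide planning yet."""
        hypothesis = Hypothesis(statement)
        self.candidates.append(hypothesis)
        return hypothesis

    def verify(self, hypothesis: Hypothesis, confirmed: bool) -> None:
        """Record one test outcome and promote the hypothesis if it qualifies."""
        hypothesis.record(confirmed)
        enough_trials = hypothesis.trials >= self.min_trials
        well_supported = hypothesis.supports / hypothesis.trials >= self.min_support
        if hypothesis in self.candidates and enough_trials and well_supported:
            self.candidates.remove(hypothesis)
            self.principles.append(hypothesis.statement)


memory = PrincipleMemory()
stable = memory.propose("flat stones make stable foundations")
for outcome in (True, True, True):
    memory.verify(stable, outcome)

unstable = memory.propose("round stones make stable foundations")
for outcome in (True, False, False):
    memory.verify(unstable, outcome)
```

After these calls, only the first statement sits in `memory.principles`; the second remains a candidate because too few of its trials confirmed it.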

TouchGuide: Inference-Time Steering of Visuomotor Policies via Touch Guidance

Jan 28, 2026

Abstract: Fine-grained and contact-rich manipulation remain challenging for robots, largely due to the underutilization of tactile feedback. To address this, we introduce TouchGuide, a novel cross-policy visuo-tactile fusion paradigm that fuses modalities within a low-dimensional action space. Specifically, TouchGuide operates in two stages to guide a pre-trained diffusion or flow-matching visuomotor policy at inference time. First, the policy produces a coarse, visually-plausible action using only visual inputs during early sampling. Second, a task-specific Contact Physical Model (CPM) provides tactile guidance to steer and refine the action, ensuring it aligns with realistic physical contact conditions. Trained through contrastive learning on limited expert demonstrations, the CPM provides a tactile-informed feasibility score to steer the sampling process toward refined actions that satisfy physical contact constraints. Furthermore, to facilitate TouchGuide training with high-quality and cost-effective data, we introduce TacUMI, a data collection system. TacUMI achieves a favorable trade-off between precision and affordability; by leveraging rigid fingertips, it obtains direct tactile feedback, thereby enabling the collection of reliable tactile data. Extensive experiments on five challenging contact-rich tasks, such as shoe lacing and chip handover, show that TouchGuide consistently and significantly outperforms state-of-the-art visuo-tactile policies.

Advances and Innovations in the Multi-Agent Robotic System (MARS) Challenge

Jan 26, 2026

Abstract: Recent advancements in multimodal large language models and vision-language-action models have significantly driven progress in Embodied AI. As the field transitions toward more complex task scenarios, multi-agent system frameworks are becoming essential for achieving scalable, efficient, and collaborative solutions. This shift is fueled by three primary factors: increasing agent capabilities, enhancing system efficiency through task delegation, and enabling advanced human-agent interactions. To address the challenges posed by multi-agent collaboration, we propose the Multi-Agent Robotic System (MARS) Challenge, held at the NeurIPS 2025 Workshop on SpaVLE. The competition focuses on two critical areas: planning and control, where participants explore multi-agent embodied planning using vision-language models (VLMs) to coordinate tasks and policy execution to perform robotic manipulation in dynamic environments. By evaluating solutions submitted by participants, the challenge provides valuable insights into the design and coordination of embodied multi-agent systems, contributing to the future development of advanced collaborative AI systems.

LARM: A Large Articulated-Object Reconstruction Model

Nov 14, 2025

Abstract: Modeling 3D articulated objects with realistic geometry, textures, and kinematics is essential for a wide range of applications. However, existing optimization-based reconstruction methods often require dense multi-view inputs and expensive per-instance optimization, limiting their scalability. Recent feedforward approaches offer faster alternatives but frequently produce coarse geometry, lack texture reconstruction, and rely on brittle, complex multi-stage pipelines. We introduce LARM, a unified feedforward framework that reconstructs 3D articulated objects from sparse-view images by jointly recovering detailed geometry, realistic textures, and accurate joint structures. LARM extends LVSM, a recent novel view synthesis (NVS) approach for static 3D objects, into the articulated setting by jointly reasoning over camera pose and articulation variation using a transformer-based architecture, enabling scalable and accurate novel view synthesis. In addition, LARM generates auxiliary outputs such as depth maps and part masks to facilitate explicit 3D mesh extraction and joint estimation. Our pipeline eliminates the need for dense supervision and supports high-fidelity reconstruction across diverse object categories. Extensive experiments demonstrate that LARM outperforms state-of-the-art methods in both novel view and state synthesis as well as 3D articulated object reconstruction, generating high-quality meshes that closely adhere to the input images. Project page: https://sylviayuan-sy.github.io/larm-site/

FreeArt3D: Training-Free Articulated Object Generation using 3D Diffusion

Oct 29, 2025

Abstract: Articulated 3D objects are central to many applications in robotics, AR/VR, and animation. Recent approaches to modeling such objects either rely on optimization-based reconstruction pipelines that require dense-view supervision or on feed-forward generative models that produce coarse geometric approximations and often overlook surface texture. In contrast, open-world 3D generation of static objects has achieved remarkable success, especially with the advent of native 3D diffusion models such as Trellis. However, extending these methods to articulated objects by training native 3D diffusion models poses significant challenges. In this work, we present FreeArt3D, a training-free framework for articulated 3D object generation. Instead of training a new model on limited articulated data, FreeArt3D repurposes a pre-trained static 3D diffusion model (e.g., Trellis) as a powerful shape prior. It extends Score Distillation Sampling (SDS) into the 3D-to-4D domain by treating articulation as an additional generative dimension. Given a few images captured in different articulation states, FreeArt3D jointly optimizes the object's geometry, texture, and articulation parameters without requiring task-specific training or access to large-scale articulated datasets. Our method generates high-fidelity geometry and textures, accurately predicts underlying kinematic structures, and generalizes well across diverse object categories. Despite following a per-instance optimization paradigm, FreeArt3D completes in minutes and significantly outperforms prior state-of-the-art approaches in both quality and versatility.

ORIC: Benchmarking Object Recognition in Incongruous Context for Large Vision-Language Models

Sep 19, 2025

Abstract: Large Vision-Language Models (LVLMs) have made significant strides in image captioning, visual question answering, and robotics by integrating visual and textual information. However, they remain prone to errors in incongruous contexts, where objects appear unexpectedly or are absent when contextually expected. This leads to two key recognition failures: object misidentification and hallucination. To systematically examine this issue, we introduce the Object Recognition in Incongruous Context Benchmark (ORIC), a novel benchmark that evaluates LVLMs in scenarios where object-context relationships deviate from expectations. ORIC employs two key strategies: (1) LLM-guided sampling, which identifies objects that are present but contextually incongruous, and (2) CLIP-guided sampling, which detects plausible yet nonexistent objects that are likely to be hallucinated, thereby creating an incongruous context. Evaluating 18 LVLMs and two open-vocabulary detection models, our results reveal significant recognition gaps, underscoring the challenges posed by contextual incongruity. This work provides critical insights into LVLMs' limitations and encourages further research on context-aware object recognition.

FFT-Free PAPR Reduction Methods for OFDM Signals

Sep 17, 2025

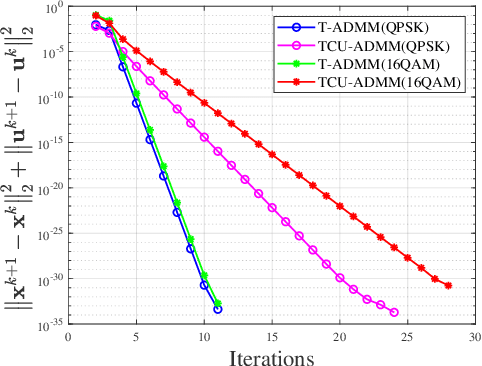

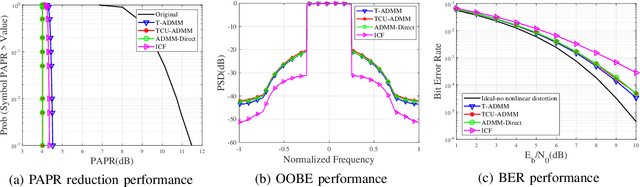

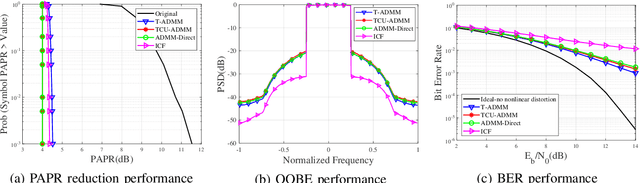

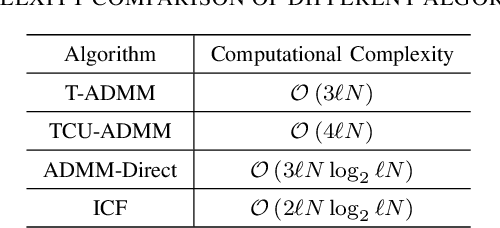

Abstract: In this paper, we propose two low-complexity peak-to-average power ratio (PAPR) reduction algorithms for orthogonal frequency division multiplexing (OFDM) signals. The main content is as follows: First, a non-convex optimization model is established by minimizing the signal distortion power. Then, a customized alternating direction method of multipliers (ADMM) algorithm, named time domain ADMM (T-ADMM), is proposed to solve the problem, along with an improved version called T-ADMM with constrain update (TCU-ADMM). In both algorithms, all subproblems can be solved analytically, and each iteration has linear computational complexity. These algorithms circumvent the challenges posed by repeated fast Fourier transform (FFT) and inverse FFT (IFFT) operations in traditional PAPR reduction algorithms. Additionally, we prove that the T-ADMM algorithm is theoretically guaranteed to converge if the parameters are chosen properly. Finally, simulation results demonstrate the effectiveness of the proposed methods.
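For readers unfamiliar with the metric, the sketch below computes the PAPR of a time-domain OFDM block under the standard definition (peak instantaneous power divided by mean power, in dB). It illustrates only the quantity being reduced, not the T-ADMM or TCU-ADMM algorithms; the helper names `idft` and `papr_db` and the 8-subcarrier test signals are assumptions made for this example, and the naive inverse DFT is used only to build the test signals.

```python
import cmath
import math


def idft(symbols):
    """Naive inverse DFT: frequency-domain symbols -> time-domain samples."""
    n = len(symbols)
    return [
        sum(s * cmath.exp(2j * math.pi * k * t / n) for k, s in enumerate(symbols)) / n
        for t in range(n)
    ]


def papr_db(samples):
    """Peak-to-average power ratio of a block of complex samples, in dB."""
    powers = [abs(x) ** 2 for x in samples]
    mean_power = sum(powers) / len(powers)
    return 10 * math.log10(max(powers) / mean_power)


# Every subcarrier carries the same symbol: all energy lands in one sample,
# so the ratio equals the number of subcarriers (8, about 9.03 dB).
worst = idft([1 + 0j] * 8)

# Only one active subcarrier: a constant-envelope tone, so the ratio is 1 (0 dB).
single = idft([0j, 1 + 0j] + [0j] * 6)

print(round(papr_db(worst), 2), round(abs(papr_db(single)), 2))
```

The two test signals bracket the range for 8 subcarriers: identical symbols add coherently into a single peak, while a lone subcarrier has a flat envelope.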

LodeStar: Long-horizon Dexterity via Synthetic Data Augmentation from Human Demonstrations

Aug 24, 2025

Abstract: Developing robotic systems capable of robustly executing long-horizon manipulation tasks with human-level dexterity is challenging, as such tasks require both physical dexterity and seamless sequencing of manipulation skills while robustly handling environment variations. While imitation learning offers a promising approach, acquiring comprehensive datasets is resource-intensive. In this work, we propose a learning framework and system, LodeStar, that automatically decomposes task demonstrations into semantically meaningful skills using off-the-shelf foundation models, and generates diverse synthetic demonstration datasets from a few human demos through reinforcement learning. These sim-augmented datasets enable robust skill training, with a Skill Routing Transformer (SRT) policy effectively chaining the learned skills together to execute complex long-horizon manipulation tasks. Experimental evaluations on three challenging real-world long-horizon dexterous manipulation tasks demonstrate that our approach significantly improves task performance and robustness compared to previous baselines. Videos are available at lodestar-robot.github.io.

MorphoCopter: Design, Modeling, and Control of a New Transformable Quad-Bi Copter

Jun 08, 2025

Abstract: This paper presents a novel morphing quadrotor, named MorphoCopter, covering its design, modeling, control, and experimental tests. It features a unique single rotary joint that enables rapid transformation into an ultra-narrow profile. Although quadrotors have seen widespread adoption in applications such as cinematography, agriculture, and disaster management with increasingly sophisticated control systems, their hardware configurations have remained largely unchanged, limiting their capabilities in certain environments. Our design addresses this by enabling the hardware configuration to change on the fly when required. In standard flight mode, the MorphoCopter adopts an X configuration, functioning as a traditional quadcopter, but can quickly fold into a stacked bicopters arrangement or any configuration in between. Existing morphing designs often sacrifice controllability in compact configurations or rely on complex multi-joint systems. Moreover, our design achieves a greater width reduction than any existing solution. We develop a new inertia and control-action aware adaptive control system that maintains robust performance across all rotary-joint configurations. The prototype can reduce its width from 447 mm to 138 mm (nearly 70% reduction) in just a few seconds. We validated the MorphoCopter through rigorous simulations and a comprehensive series of flight experiments, including robustness tests, trajectory tracking, and narrow-gap passing tests.
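As a quick check of the quoted figure, the relative width reduction follows directly from the two widths stated in the abstract:

```latex
\[
\frac{447\,\text{mm} - 138\,\text{mm}}{447\,\text{mm}} = \frac{309}{447} \approx 0.691
\]
```

That is a reduction of about 69.1%, consistent with "nearly 70%".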

ManiTaskGen: A Comprehensive Task Generator for Benchmarking and Improving Vision-Language Agents on Embodied Decision-Making

May 27, 2025

Abstract: Building embodied agents capable of accomplishing arbitrary tasks is a core objective towards achieving embodied artificial general intelligence (E-AGI). While recent work has advanced such general robot policies, their training and evaluation are often limited to tasks within specific scenes, involving restricted instructions and scenarios. Existing benchmarks also typically rely on manual annotation of limited tasks in a few scenes. We argue that exploring the full spectrum of feasible tasks within any given scene is crucial, as they provide both extensive benchmarks for evaluation and valuable resources for agent improvement. Towards this end, we introduce ManiTaskGen, a novel system that automatically generates comprehensive, diverse, feasible mobile manipulation tasks for any given scene. The generated tasks encompass both process-based, specific instructions (e.g., "move object from X to Y") and outcome-based, abstract instructions (e.g., "clear the table"). We apply ManiTaskGen to both simulated and real-world scenes, demonstrating the validity and diversity of the generated tasks. We then leverage these tasks to automatically construct benchmarks, thoroughly evaluating the embodied decision-making capabilities of agents built upon existing vision-language models (VLMs). Furthermore, we propose a simple yet effective method that utilizes ManiTaskGen tasks to enhance embodied decision-making. Overall, this work presents a universal task generation framework for arbitrary scenes, facilitating both benchmarking and improvement of embodied decision-making agents.
