Yuanming Shi

Edge Large AI Models: Collaborative Deployment and IoT Applications

May 06, 2025

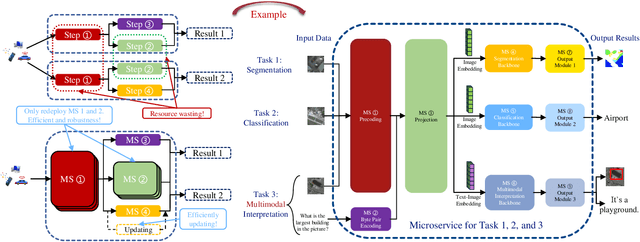

Abstract: Large artificial intelligence models (LAMs) emulate human-like problem-solving capabilities across diverse domains, modalities, and tasks. By leveraging the communication and computation resources of geographically distributed edge devices, edge LAMs enable real-time intelligent services at the network edge. Unlike conventional edge AI, which relies on small or moderate-sized models for direct feature-to-prediction mappings, edge LAMs leverage the intricate coordination of modular components to enable context-aware generative tasks and multi-modal inference. We propose a collaborative deployment framework for edge LAMs by characterizing the intelligent capabilities of LAMs and the limited resources of edge networks. Specifically, we propose a collaborative training framework over heterogeneous edge networks that adaptively decomposes LAMs according to computation resources, data modalities, and training objectives, reducing communication and computation overheads during the fine-tuning process. Furthermore, we introduce a microservice-based inference framework that virtualizes the functional modules of edge LAMs according to their architectural characteristics, thereby improving resource utilization and reducing inference latency. The developed edge LAMs will provide actionable solutions to enable diversified Internet-of-Things (IoT) applications, facilitated by constructing mappings from diverse sensor data to token representations and fine-tuning based on domain knowledge.

Edge Large AI Models: Revolutionizing 6G Networks

May 01, 2025

Abstract: Large artificial intelligence models (LAMs) possess human-like abilities to solve a wide range of real-world problems, exemplifying the potential of experts in various domains and modalities. By leveraging the communication and computation capabilities of geographically dispersed edge devices, edge LAMs emerge as an enabling technology for delivering various real-time intelligent services in 6G. Unlike traditional edge artificial intelligence (AI), which primarily supports a single task using small models, edge LAMs are characterized by the need for decomposition and distributed deployment of large models, and by the ability to support highly generalized and diverse tasks. However, due to limited communication, computation, and storage resources over wireless networks, the vast number of trainable neurons and the substantial communication overhead pose a formidable hurdle to the practical deployment of edge LAMs. In this paper, we investigate the opportunities and challenges of edge LAMs from the perspectives of model decomposition and resource management. Specifically, we propose collaborative fine-tuning and full-parameter training frameworks, alongside a microservice-assisted inference architecture, to enhance the deployment of edge LAMs over wireless networks. Additionally, we investigate the application of edge LAMs in air-interface designs, focusing on channel prediction and beamforming. These frameworks and applications offer insights and solutions for advancing 6G technology.

Satellite Federated Fine-Tuning for Foundation Models in Space Computing Power Networks

Apr 14, 2025

Abstract: Advancements in artificial intelligence (AI) and low-earth orbit (LEO) satellites have promoted the application of large remote sensing foundation models for various downstream tasks. However, direct downloading of these models for fine-tuning on the ground is impeded by privacy concerns and limited bandwidth. Satellite federated learning (FL) offers a solution by enabling model fine-tuning directly on-board satellites and aggregating model updates without data downloading. Nevertheless, for large foundation models, the computational capacity of satellites is insufficient to support effective on-board fine-tuning in traditional satellite FL frameworks. To address these challenges, we propose a satellite-ground collaborative federated fine-tuning framework. The key to the framework lies in how to reasonably decompose and allocate model components to alleviate insufficient on-board computation capabilities. During fine-tuning, satellites exchange intermediate results with ground stations or other satellites for forward propagation and back propagation, which brings communication challenges due to the special communication topology of space transmission networks, such as intermittent satellite-ground communication, short duration of satellite-ground communication windows, and unstable inter-orbit inter-satellite links (ISLs). To reduce transmission delays, we further introduce tailored communication strategies that integrate both communication and computing resources. Specifically, we propose a parallel intra-orbit communication strategy, a topology-aware satellite-ground communication strategy, and a latency-minimization inter-orbit communication strategy to reduce space communication costs. Simulation results demonstrate a reduction in training time of approximately 33%.

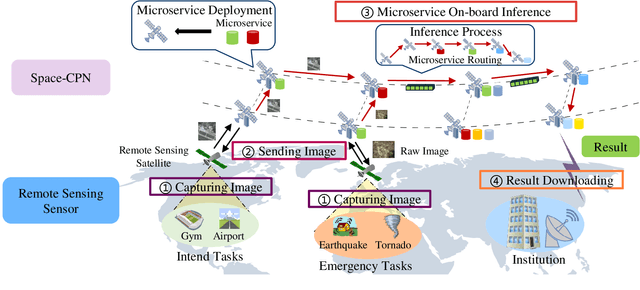

Satellite Edge Artificial Intelligence with Large Models: Architectures and Technologies

Apr 02, 2025

Abstract: Driven by the growing demand for intelligent remote sensing applications, large artificial intelligence (AI) models pre-trained on large-scale unlabeled datasets and fine-tuned for downstream tasks have significantly improved learning performance across these tasks due to their generalization capabilities. However, many specific downstream tasks, such as extreme weather nowcasting (e.g., downburst and tornado), disaster monitoring, and battlefield surveillance, require real-time data processing. Traditional methods that transfer raw data to ground stations for processing often cause significant issues in terms of latency and trustworthiness. To address these challenges, satellite edge AI provides a paradigm shift from ground-based to on-board data processing by leveraging the integrated communication-and-computation capabilities in space computing power networks (Space-CPN), thereby enhancing the timeliness, effectiveness, and trustworthiness of remote sensing downstream tasks. Moreover, a satellite edge large AI model (LAM) involves both the training (i.e., fine-tuning) and inference phases, where a key challenge lies in developing computation task decomposition principles to support scalable LAM deployment in resource-constrained space networks with time-varying topologies. In this article, we first propose a satellite federated fine-tuning architecture to split and deploy the modules of a LAM over space and ground networks for efficient LAM fine-tuning. We then introduce a microservice-empowered satellite edge LAM inference architecture that virtualizes LAM components into lightweight microservices tailored for multi-task multimodal inference. Finally, we discuss future directions for enhancing the efficiency and scalability of satellite edge LAMs, including task-oriented communication, brain-inspired computing, and satellite edge AI network optimization.

VideoScan: Enabling Efficient Streaming Video Understanding via Frame-level Semantic Carriers

Mar 12, 2025

Abstract: This paper introduces VideoScan, an efficient vision-language model (VLM) inference framework designed for real-time video interaction that effectively comprehends and retains streamed video inputs while delivering rapid and accurate responses. A longstanding challenge in video understanding, particularly for long-term or real-time applications, stems from the substantial computational overhead caused by the extensive length of visual tokens. To address this, VideoScan employs a single semantic carrier token to represent each frame, progressively reducing computational and memory overhead during its two-phase inference process: prefilling and decoding. The embedding of the semantic carrier token is derived from an optimized aggregation of frame-level visual features, ensuring compact yet semantically rich representations. Critically, the corresponding key-value pairs are trained to retain contextual semantics from prior frames, enabling efficient memory management without sacrificing temporal coherence. During inference, the visual tokens of each frame are processed only once during the prefilling phase and subsequently discarded in the decoding stage, eliminating redundant computations. This design ensures efficient VLM inference even under stringent real-time constraints. Comprehensive experiments on diverse offline and online benchmarks demonstrate that LLaVA-Video, supported by our method, achieves up to $\sim 5\times$ and $1.29\times$ speedups compared to its original version and previous efficient streaming video understanding approaches, respectively. Crucially, these improvements are attained while maintaining competitive performance and ensuring stable GPU memory consumption (consistently $\sim 18$ GB, independent of video duration).
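The one-token-per-frame idea can be illustrated with a small sketch. This is not the VideoScan implementation: mean pooling stands in for the learned aggregation, and the fixed-size window (`max_frames`) is an assumed policy for keeping memory bounded that the abstract does not describe. The names `aggregate_frame` and `CarrierMemory` are hypothetical.

```python
from collections import deque
from typing import Deque, List

Vector = List[float]


def aggregate_frame(tokens: List[Vector]) -> Vector:
    """Collapse all visual tokens of one frame into a single vector.

    Mean pooling is only a stand-in for the learned aggregation.
    """
    n = len(tokens)
    dim = len(tokens[0])
    return [sum(tok[d] for tok in tokens) / n for d in range(dim)]


class CarrierMemory:
    """Keeps one carrier vector per frame and drops the raw visual tokens."""

    def __init__(self, max_frames: int) -> None:
        # Assumption: a sliding window bounds the context length.
        self.carriers: Deque[Vector] = deque(maxlen=max_frames)

    def add_frame(self, tokens: List[Vector]) -> None:
        # The frame's tokens are used once here and then discarded.
        self.carriers.append(aggregate_frame(tokens))

    def context_length(self) -> int:
        return len(self.carriers)


memory = CarrierMemory(max_frames=3)
for frame_index in range(5):
    # Each toy frame has 4 visual tokens of dimension 2.
    frame = [[float(frame_index), 1.0] for _ in range(4)]
    memory.add_frame(frame)
```

After five frames the context holds three carrier vectors rather than twenty visual tokens, which is the kind of reduction the abstract attributes to semantic carrier tokens.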

Brain-Inspired Decentralized Satellite Learning in Space Computing Power Networks

Jan 27, 2025

Abstract: Satellite networks are able to collect massive space information with advanced remote sensing technologies, which is essential for real-time applications such as natural disaster monitoring. However, traditional centralized processing by the ground server incurs a severe timeliness issue caused by the transmission bottleneck of raw data. To this end, Space Computing Power Networks (Space-CPN) emerge as a promising architecture to coordinate the computing capability of satellites and enable on-board data processing. Nevertheless, due to the natural limitations of solar panels, satellite power systems struggle to meet the energy requirements of the ever-increasing intelligent computation tasks of artificial neural networks. To tackle this issue, we propose to employ spiking neural networks (SNNs), which are supported by the neuromorphic computing architecture, for on-board data processing. The extreme sparsity of their computation enables high energy efficiency. Furthermore, to achieve effective training of these on-board models, we put forward a decentralized neuromorphic learning framework, in which a communication-efficient inter-plane model aggregation method is developed with inspiration from RelaySum. We provide a theoretical analysis to characterize the convergence behavior of the proposed algorithm, which reveals a convergence speed that depends on the network diameter. We then formulate a minimum diameter spanning tree problem on the inter-plane connectivity topology and solve it to further improve the learning performance. Extensive experiments are conducted to demonstrate the superiority of the proposed method over benchmarks.
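To make the role of the network diameter concrete, the sketch below measures the diameter of candidate spanning trees over a toy inter-plane topology. It is a simple heuristic that tries a breadth-first tree from every root and keeps the one with the smallest diameter; it is not the paper's solver, and the graph and function names are made up for illustration.

```python
from collections import deque
from typing import Dict, List, Tuple

Graph = Dict[int, List[int]]
Edge = Tuple[int, int]


def bfs_tree(adj: Graph, root: int) -> List[Edge]:
    """Return the (parent, child) edges of a breadth-first spanning tree."""
    parent = {root: None}
    queue = deque([root])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in parent:
                parent[v] = u
                queue.append(v)
    return [(p, c) for c, p in parent.items() if p is not None]


def farthest(tree_adj: Graph, start: int) -> Tuple[int, int]:
    """Return (node, hops) for a node farthest from `start` in the tree."""
    dist = {start: 0}
    queue = deque([start])
    last = start
    while queue:
        u = queue.popleft()
        last = u  # BFS pops nodes in non-decreasing distance order
        for v in tree_adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return last, dist[last]


def tree_diameter(edges: List[Edge]) -> int:
    """Diameter in hops of a tree, using the classic double-BFS trick."""
    if not edges:
        return 0
    tree_adj: Graph = {}
    for u, v in edges:
        tree_adj.setdefault(u, []).append(v)
        tree_adj.setdefault(v, []).append(u)
    far_node, _ = farthest(tree_adj, next(iter(tree_adj)))
    _, diameter = farthest(tree_adj, far_node)
    return diameter


def best_bfs_tree(adj: Graph) -> List[Edge]:
    """Heuristic: keep the BFS tree with the smallest diameter over all roots."""
    return min((bfs_tree(adj, root) for root in adj), key=tree_diameter)


# Toy topology: orbital plane 2 can reach every other plane directly.
planes: Graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3, 4], 3: [2, 4], 4: [2, 3]}
best = best_bfs_tree(planes)
```

In this toy graph the tree rooted at plane 2 has a diameter of 2 hops, while the tree rooted at plane 0 has a diameter of 3, so aggregation along the former needs fewer relay steps.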

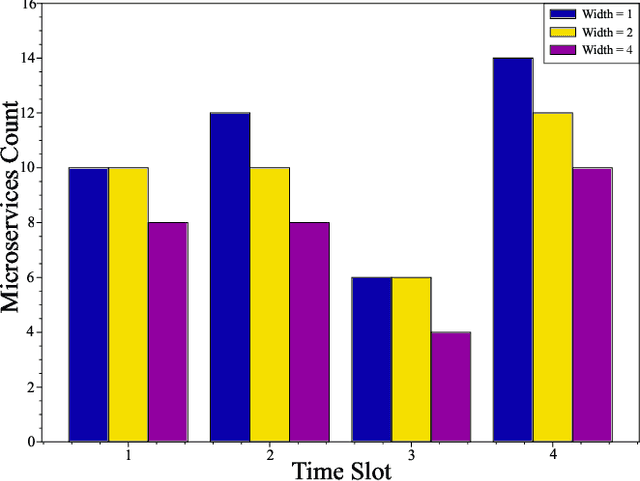

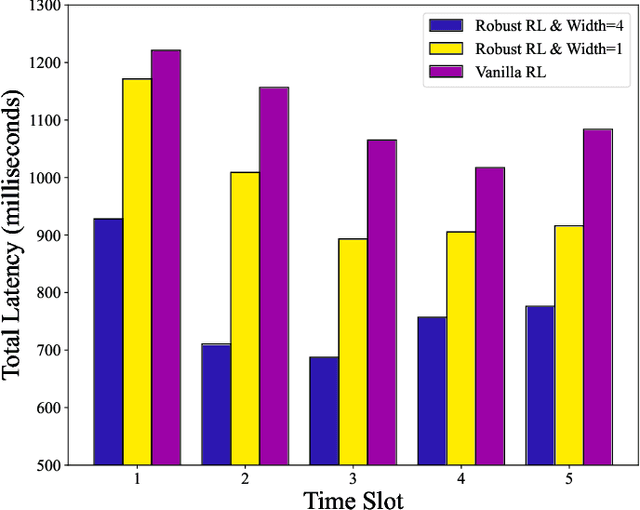

Microservice Deployment in Space Computing Power Networks via Robust Reinforcement Learning

Jan 08, 2025

Abstract: With the growing demand for Earth observation, it is important to provide reliable real-time remote sensing inference services that meet low-latency requirements. The Space Computing Power Network (Space-CPN) offers a promising solution by providing onboard computing and extensive coverage capabilities for real-time inference. This paper presents a deployment framework for remote sensing artificial intelligence applications, designed for Low Earth Orbit satellite constellations to achieve real-time inference performance. The framework employs the microservice architecture, decomposing monolithic inference tasks into reusable, independent modules to address high latency and resource heterogeneity. This distributed approach enables optimized microservice deployment, minimizing resource utilization while meeting quality of service and functional requirements. We introduce Robust Optimization to the deployment problem to address data uncertainty. Additionally, we model the Robust Optimization problem as a Partially Observable Markov Decision Process and propose a robust reinforcement learning algorithm to handle the semi-infinite Quality of Service constraints. Our approach yields sub-optimal solutions that minimize accuracy loss while maintaining acceptable computational costs. Simulation results demonstrate the effectiveness of our framework.

Structured IB: Improving Information Bottleneck with Structured Feature Learning

Dec 11, 2024

Abstract: The Information Bottleneck (IB) principle has emerged as a promising approach for enhancing the generalization, robustness, and interpretability of deep neural networks, demonstrating efficacy across image segmentation, document clustering, and semantic communication. Among IB implementations, the IB Lagrangian method, which employs Lagrangian multipliers, is widely adopted. While numerous methods for optimizing the IB Lagrangian based on variational bounds and neural estimators are feasible, their performance is highly dependent on the quality of their design, which is inherently prone to errors. To address this limitation, we introduce Structured IB, a framework for investigating potential structured features. By incorporating auxiliary encoders to extract missing informative features, we generate more informative representations. Our experiments demonstrate superior prediction accuracy and task-relevant information preservation compared to the original IB Lagrangian method, even with reduced network size.
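For reference, the IB Lagrangian mentioned above is commonly written in the following form. The symbols ($X$ for the input, $Y$ for the target, $Z$ for the learned representation, and $\beta$ for the trade-off multiplier) follow the standard IB literature and are assumed here; the abstract itself does not fix a notation.

$$
\min_{p(z \mid x)} \; \mathcal{L}_{\mathrm{IB}} = I(X; Z) - \beta \, I(Z; Y), \qquad \beta > 0 .
$$

The first term penalizes information that $Z$ retains about the input, and the second rewards information that $Z$ carries about the target. Since neither mutual information is tractable for deep networks, practical methods replace them with variational bounds or neural estimators, which is the design step the abstract describes as error-prone.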

Hierarchical Learning and Computing over Space-Ground Integrated Networks

Aug 26, 2024

Abstract: Space-ground integrated networks hold great promise for providing global connectivity, particularly in remote areas where large amounts of valuable data are generated by Internet of Things (IoT) devices but terrestrial communication infrastructure is lacking. The massive data is conventionally transferred to the cloud server for centralized artificial intelligence (AI) model training, incurring huge communication overhead and raising privacy concerns. To address this, we propose a hierarchical learning and computing framework, which leverages the low-latency characteristic of low-earth-orbit (LEO) satellites and the global coverage of geostationary-earth-orbit (GEO) satellites, to provide global aggregation services for locally trained models on ground IoT devices. Due to the time-varying nature of satellite network topology and the energy constraints of LEO satellites, efficiently aggregating the received local models from ground devices on LEO satellites is highly challenging. By leveraging the predictability of inter-satellite connectivity and modeling the space network as a directed graph, we formulate a network energy minimization problem for model aggregation, which turns out to be a Directed Steiner Tree (DST) problem. We propose a topology-aware energy-efficient routing (TAEER) algorithm to solve the DST problem by finding a minimum spanning arborescence on a substitute directed graph. Extensive simulations under real-world space-ground integrated network settings demonstrate that the proposed TAEER algorithm significantly reduces energy consumption and outperforms benchmarks.
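The minimum spanning arborescence step can be sketched with the classic Chu-Liu/Edmonds algorithm. This is a generic textbook routine, not the TAEER algorithm: the construction of the substitute graph is not described in the abstract and is omitted. Treating edge weights as transmission energy, and reversing link directions so that an arborescence rooted at the sink models aggregation toward it, are assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Edge = Tuple[int, int, float]  # (source, destination, energy cost)


def min_arborescence_cost(n: int, root: int, edges: List[Edge]) -> Optional[float]:
    """Total cost of a minimum spanning arborescence rooted at `root`.

    Nodes are numbered 0..n-1. Returns None if some node is unreachable.
    """
    inf = float("inf")
    total = 0.0
    while True:
        # Step 1: pick the cheapest incoming edge of every node.
        in_cost = [inf] * n
        pred = [-1] * n
        for u, v, w in edges:
            if u != v and w < in_cost[v]:
                in_cost[v] = w
                pred[v] = u
        if any(v != root and in_cost[v] == inf for v in range(n)):
            return None
        in_cost[root] = 0.0

        # Step 2: detect cycles formed by the chosen edges.
        comp = [-1] * n
        seen = [-1] * n
        count = 0
        for v in range(n):
            total += in_cost[v]
            x = v
            while seen[x] != v and comp[x] == -1 and x != root:
                seen[x] = v
                x = pred[x]
            if x != root and comp[x] == -1:  # x lies on a new cycle
                y = pred[x]
                while y != x:
                    comp[y] = count
                    y = pred[y]
                comp[x] = count
                count += 1
        if count == 0:
            return total  # no cycle: the chosen edges form the arborescence

        # Step 3: contract each cycle into one node and reweight the edges.
        for v in range(n):
            if comp[v] == -1:
                comp[v] = count
                count += 1
        edges = [
            (comp[u], comp[v], w - in_cost[v])
            for u, v, w in edges
            if comp[u] != comp[v]
        ]
        root = comp[root]
        n = count


# Toy example: node 0 is the sink; nodes 1 and 2 form a cheap cycle.
toy_edges: List[Edge] = [(0, 1, 10.0), (0, 2, 10.0), (1, 2, 1.0), (2, 1, 1.0)]
toy_cost = min_arborescence_cost(3, 0, toy_edges)
```

In the toy graph the cheapest incoming edges of nodes 1 and 2 form a cycle, so the routine contracts it and then pays for one expensive edge from the root. The result is a total cost of 11.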

A Survey on Integrated Sensing, Communication, and Computation

Aug 15, 2024

Abstract: The forthcoming generation of wireless technology, 6G, promises a revolutionary leap beyond traditional data-centric services. It aims to usher in an era of ubiquitous intelligent services, where everything is interconnected and intelligent. This vision requires the seamless integration of three fundamental modules: sensing for information acquisition, communication for information sharing, and computation for information processing and decision-making. These modules are intricately linked, especially in complex tasks such as edge learning and inference. However, the performance of these modules is interdependent, creating a resource competition for time, energy, and bandwidth. Existing techniques like integrated communication and computation (ICC), integrated sensing and computation (ISC), and integrated sensing and communication (ISAC) have made partial strides in addressing this challenge, but they fall short of meeting the extreme performance requirements. To overcome these limitations, it is essential to develop new techniques that comprehensively integrate sensing, communication, and computation. This integrated approach, known as Integrated Sensing, Communication, and Computation (ISCC), offers a systematic perspective for enhancing task performance. This paper begins with a comprehensive survey of historic and related techniques such as ICC, ISC, and ISAC, highlighting their strengths and limitations. It then explores the state-of-the-art signal designs for ISCC, along with network resource management strategies specifically tailored for ISCC. Furthermore, this paper discusses the research opportunities that lie ahead for implementing ISCC in future advanced networks. By embracing ISCC, we can unlock the full potential of intelligent connectivity, paving the way for groundbreaking applications and services.
