Chunxiao Jiang

Satellite Federated Fine-Tuning for Foundation Models in Space Computing Power Networks

Apr 14, 2025

Abstract: Advancements in artificial intelligence (AI) and low-earth orbit (LEO) satellites have promoted the application of large remote sensing foundation models to various downstream tasks. However, directly downloading these models for fine-tuning on the ground is impeded by privacy concerns and limited bandwidth. Satellite federated learning (FL) offers a solution by enabling model fine-tuning directly on-board satellites and aggregating model updates without downloading data. Nevertheless, for large foundation models, the computational capacity of satellites is insufficient to support effective on-board fine-tuning in traditional satellite FL frameworks. To address these challenges, we propose a satellite-ground collaborative federated fine-tuning framework. The key to the framework lies in how to reasonably decompose and allocate model components to compensate for insufficient on-board computation capabilities. During fine-tuning, satellites exchange intermediate results with ground stations or other satellites for forward propagation and backpropagation, which brings communication challenges due to the special communication topology of space transmission networks, such as intermittent satellite-ground communication, short satellite-ground communication windows, and unstable inter-orbit inter-satellite links (ISLs). To reduce transmission delays, we further introduce tailored communication strategies that integrate both communication and computing resources. Specifically, we propose a parallel intra-orbit communication strategy, a topology-aware satellite-ground communication strategy, and a latency-minimization inter-orbit communication strategy to reduce space communication costs. Simulation results show that the framework reduces training time by approximately 33%.
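
The exchange of intermediate results between satellite and ground during forward propagation and backpropagation can be illustrated with a toy model. The following is a minimal sketch under stated assumptions: the split point (one ReLU layer on board, one linear output layer on the ground), the squared loss, and all function names are hypothetical and are not the decomposition used in the paper.

```python
def satellite_forward(w1, x):
    """On-board part: pre-activations and hidden = ReLU(W1 x)."""
    pre = [sum(w * xj for w, xj in zip(row, x)) for row in w1]
    hidden = [max(0.0, p) for p in pre]
    return pre, hidden  # `hidden` is the intermediate result sent to the ground


def ground_step(w2, hidden, target):
    """Ground part: y = w2 . hidden with squared loss 0.5 * (y - target)^2.

    Returns the loss, the gradient for w2, and the gradient w.r.t. `hidden`,
    which is the intermediate result sent back up to the satellite.
    """
    y = sum(w * h for w, h in zip(w2, hidden))
    err = y - target
    loss = 0.5 * err * err
    grad_w2 = [err * h for h in hidden]
    grad_hidden = [err * w for w in w2]
    return loss, grad_w2, grad_hidden


def satellite_backward(pre, x, grad_hidden):
    """Finish backpropagation on board: chain rule through ReLU, then W1."""
    grad_pre = [g if p > 0 else 0.0 for g, p in zip(grad_hidden, pre)]
    return [[g * xj for xj in x] for g in grad_pre]


def split_sgd_step(w1, w2, x, target, lr):
    """One SGD step in which neither side ever sees the other's weights."""
    pre, hidden = satellite_forward(w1, x)                        # downlink: hidden
    loss, grad_w2, grad_hidden = ground_step(w2, hidden, target)  # uplink: grad_hidden
    grad_w1 = satellite_backward(pre, x, grad_hidden)
    new_w1 = [[w - lr * g for w, g in zip(row, grow)] for row, grow in zip(w1, grad_w1)]
    new_w2 = [w - lr * g for w, g in zip(w2, grad_w2)]
    return new_w1, new_w2, loss
```

In this sketch the raw input `x` never leaves the satellite; only `hidden` travels down and `grad_hidden` travels up, so the size of these intermediate tensors, rather than the model weights, determines satellite-ground traffic per step.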

Satellite Edge Artificial Intelligence with Large Models: Architectures and Technologies

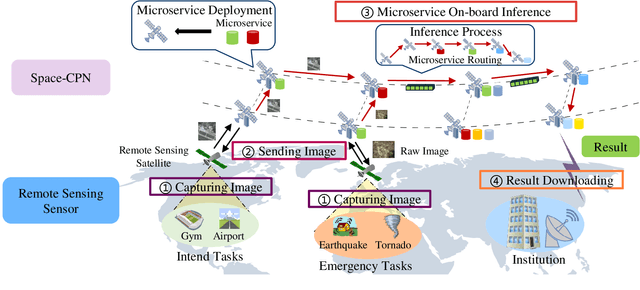

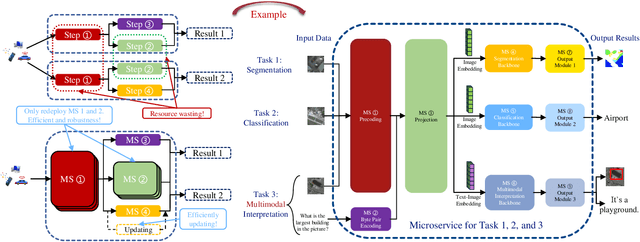

Apr 02, 2025

Abstract: Driven by the growing demand for intelligent remote sensing applications, large artificial intelligence (AI) models that are pre-trained on large-scale unlabeled datasets and fine-tuned for downstream tasks have significantly improved learning performance on various downstream tasks owing to their generalization capabilities. However, many specific downstream tasks, such as extreme weather nowcasting (e.g., downbursts and tornadoes), disaster monitoring, and battlefield surveillance, require real-time data processing. Traditional methods that transfer raw data to ground stations for processing often suffer from significant latency and trustworthiness issues. To address these challenges, satellite edge AI provides a paradigm shift from ground-based to on-board data processing by leveraging the integrated communication-and-computation capabilities of space computing power networks (Space-CPN), thereby enhancing the timeliness, effectiveness, and trustworthiness of remote sensing downstream tasks. Moreover, a satellite edge large AI model (LAM) involves both training (i.e., fine-tuning) and inference phases, where a key challenge lies in developing computation task decomposition principles that support scalable LAM deployment in resource-constrained space networks with time-varying topologies. In this article, we first propose a satellite federated fine-tuning architecture that splits and deploys the modules of a LAM over space and ground networks for efficient LAM fine-tuning. We then introduce a microservice-empowered satellite edge LAM inference architecture that virtualizes LAM components into lightweight microservices tailored for multi-task multimodal inference. Finally, we discuss future directions for enhancing the efficiency and scalability of satellite edge LAMs, including task-oriented communication, brain-inspired computing, and satellite edge AI network optimization.

Brain-Inspired Decentralized Satellite Learning in Space Computing Power Networks

Jan 27, 2025

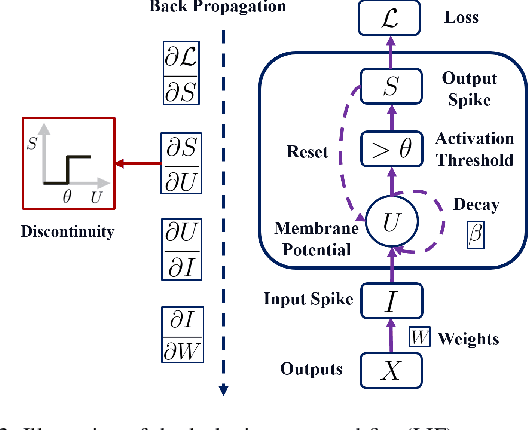

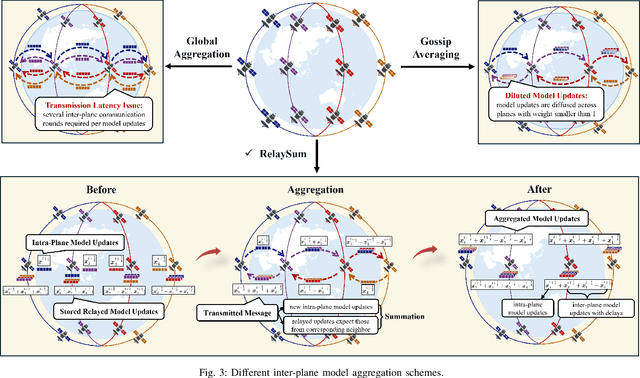

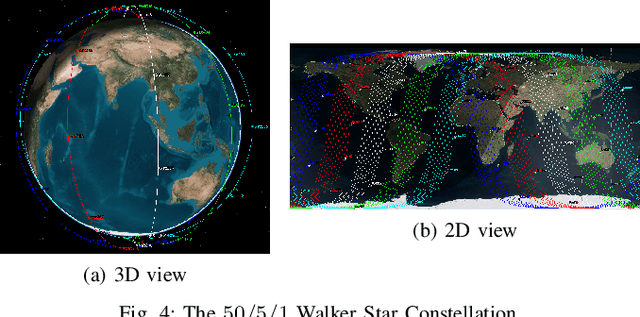

Abstract: Satellite networks are able to collect massive amounts of space information with advanced remote sensing technologies, which is essential for real-time applications such as natural disaster monitoring. However, traditional centralized processing by the ground server incurs a severe timeliness issue caused by the transmission bottleneck of raw data. To this end, Space Computing Power Networks (Space-CPN) have emerged as a promising architecture to coordinate the computing capabilities of satellites and enable on-board data processing. Nevertheless, due to the natural limitations of solar panels, satellite power systems struggle to meet the energy requirements of the ever-increasing intelligent computation tasks of artificial neural networks. To tackle this issue, we propose to employ spiking neural networks (SNNs), which are supported by neuromorphic computing architectures, for on-board data processing. The extreme sparsity of SNN computation enables high energy efficiency. Furthermore, to train these on-board models effectively, we put forward a decentralized neuromorphic learning framework, in which a communication-efficient inter-plane model aggregation method is developed, inspired by RelaySum. We provide a theoretical analysis characterizing the convergence behavior of the proposed algorithm, which reveals a convergence speed that depends on the network diameter. We then formulate a minimum diameter spanning tree problem on the inter-plane connectivity topology and solve it to further improve learning performance. Extensive experiments demonstrate the superiority of the proposed method over benchmarks.
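
The energy argument above rests on spiking neurons emitting binary events only occasionally, so downstream synaptic work happens only when a spike occurs. A minimal sketch of that behavior, assuming a discrete-time leaky integrate-and-fire (LIF) neuron with hard reset; the neuron model, time constant, threshold, and function names are common textbook choices assumed for illustration, since the abstract does not specify the paper's neuron model.

```python
def lif_neuron(inputs, tau=2.0, v_threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire (LIF) neuron over discrete time steps.

    The membrane potential v leaks toward v_reset with time constant tau and is
    driven by the input current; when v reaches v_threshold the neuron emits a
    spike (1) and v is reset. Otherwise it emits 0.
    """
    v = v_reset
    spikes = []
    for current in inputs:
        # Leaky integration: v moves a fraction 1/tau toward (v_reset + current).
        v = v + (current - (v - v_reset)) / tau
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset  # hard reset after firing
        else:
            spikes.append(0)
    return spikes


def firing_rate(spikes):
    """Fraction of time steps with a spike; low values mean sparse computation."""
    return sum(spikes) / len(spikes) if spikes else 0.0
```

For a constant input of 1.5, `lif_neuron([1.5] * 4)` fires on every second step, giving `[0, 1, 0, 1]`, while a weak input such as 0.4 never crosses the threshold and produces no spikes at all, so no downstream accumulations are triggered.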

Microservice Deployment in Space Computing Power Networks via Robust Reinforcement Learning

Jan 08, 2025

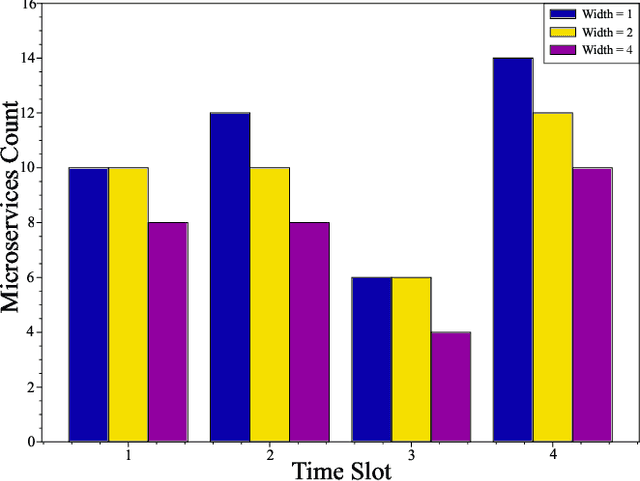

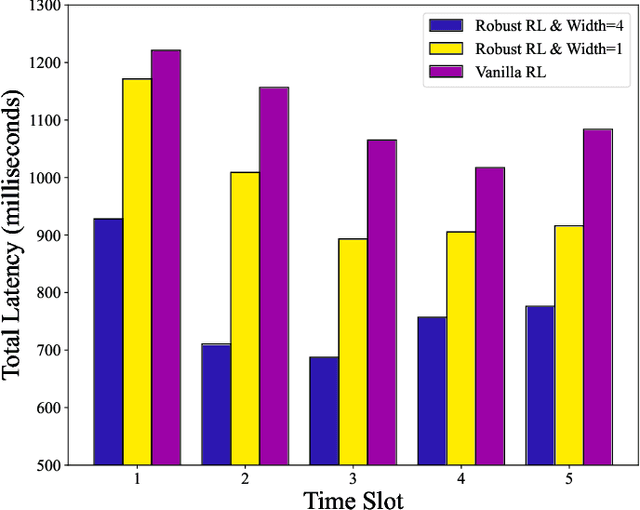

Abstract: With the growing demand for Earth observation, it is important to provide reliable real-time remote sensing inference services that meet low-latency requirements. The Space Computing Power Network (Space-CPN) offers a promising solution by providing on-board computing and extensive coverage for real-time inference. This paper presents a deployment framework for remote sensing artificial intelligence applications, designed for Low Earth Orbit satellite constellations to achieve real-time inference performance. The framework employs a microservice architecture, decomposing monolithic inference tasks into reusable, independent modules to address high latency and resource heterogeneity. This distributed approach enables optimized microservice deployment, minimizing resource utilization while meeting quality of service (QoS) and functional requirements. We introduce robust optimization into the deployment problem to address data uncertainty. Additionally, we model the robust optimization problem as a Partially Observable Markov Decision Process and propose a robust reinforcement learning algorithm to handle the semi-infinite QoS constraints. Our approach yields sub-optimal solutions that minimize accuracy loss while maintaining acceptable computational costs. Simulation results demonstrate the effectiveness of our framework.
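
A semi-infinite QoS constraint requires a deployment to meet its latency bound for every realization in an uncertainty set, that is, infinitely many constraints. For simple uncertainty sets this family collapses to a single worst-case check. The sketch below assumes additive per-microservice latencies, nonnegative deviations, and a budgeted (Bertsimas-Sim-style) uncertainty set with an integer budget; these modeling choices and the function names are illustrative assumptions, and the paper itself handles the constraints with robust reinforcement learning rather than this closed form.

```python
def worst_case_latency(nominal, deviation, budget):
    """Worst-case end-to-end latency of a microservice chain under budgeted uncertainty.

    Each microservice i has latency in [nominal[i], nominal[i] + deviation[i]]
    (deviations assumed nonnegative), and at most `budget` (an integer) of them
    deviate simultaneously. The maximum over this uncertainty set is the nominal
    sum plus the `budget` largest deviations.
    """
    if len(nominal) != len(deviation):
        raise ValueError("nominal and deviation must have the same length")
    largest = sorted(deviation, reverse=True)[:max(0, budget)]
    return sum(nominal) + sum(largest)


def satisfies_robust_qos(nominal, deviation, budget, latency_bound):
    """The infinitely many constraints 'latency <= bound for every scenario'
    reduce to one comparison against the worst case."""
    return worst_case_latency(nominal, deviation, budget) <= latency_bound
```

With nominal latencies `[2.0, 3.0, 5.0]`, deviations `[1.0, 2.0, 0.5]`, and a budget of 1, the worst case is 10.0 + 2.0 = 12.0; raising the budget to 3 gives 13.5. A larger budget makes the deployment more conservative.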

Joint Optimization of Communication Enhancement and Location Privacy Protection in RIS-Assisted Underwater Communication System

Nov 30, 2024

Abstract: As the demand for underwater communication continues to grow, underwater acoustic RIS (UARIS), an emerging paradigm in underwater acoustic communication (UAC), can significantly improve the communication rate of underwater acoustic systems. However, in open underwater environments, the location of the source node can easily be inferred by eavesdropping nodes through correlation analysis, raising a location privacy issue in underwater communication systems that many studies have overlooked. To enhance underwater communication and protect location privacy, we propose a novel UARIS architecture integrated with an artificial noise (AN) module. This architecture not only improves communication quality but also introduces noise that interferes with the eavesdroppers' attempts to locate the source node. We derive the Cramér-Rao Lower Bound (CRLB) for the localization method deployed by the eavesdroppers and, based on it, model the UARIS-assisted communication scenario as a multi-objective optimization problem. This problem jointly optimizes transmission beamforming, reflective precoding, and noise factors to maximize both communication performance and location privacy protection. To efficiently solve this non-convex optimization problem, we develop an iterative algorithm based on fractional programming. Simulation results show that the proposed system significantly enhances data transmission rates while effectively maintaining the location privacy of the source node in UAC systems.
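
The abstract states only that the solver is an iterative algorithm based on fractional programming. As a generic illustration of that family, and not the paper's algorithm, the sketch below applies Dinkelbach's method, a classic fractional-programming iteration, to maximize a single ratio f(x)/g(x). The inner subproblem is solved by enumerating a finite candidate set, and the rate and power models in the usage lines are hypothetical.

```python
import math


def dinkelbach(candidates, f, g, tol=1e-9, max_iter=100):
    """Maximize f(x) / g(x) over a finite candidate set with Dinkelbach's method.

    Assumes g(x) > 0 for every candidate and f(x) > 0 for at least one. Each
    iteration solves the parametric problem max_x f(x) - lam * g(x) (here by
    enumeration) and updates lam to the ratio achieved by the maximizer.
    """
    lam = 0.0
    x_best = candidates[0]
    for _ in range(max_iter):
        x_best = max(candidates, key=lambda x: f(x) - lam * g(x))
        if f(x_best) - lam * g(x_best) <= tol:
            break  # F(lam) is ~0, so lam is (numerically) the optimal ratio
        lam = f(x_best) / g(x_best)
    return x_best, lam


def rate(p):
    return math.log2(1.0 + 10.0 * p)  # hypothetical rate model


def power(p):
    return p + 1.0  # transmit power plus a hypothetical 1.0 circuit power


# Usage: energy-efficiency-style ratio (rate per unit power) over a power grid.
powers = [0.1 * i for i in range(1, 101)]
p_star, ratio = dinkelbach(powers, rate, power)
```

The ratio parameter `lam` increases monotonically across iterations, so on a finite candidate set the loop stops after a few rounds at the best achievable ratio.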

Hierarchical Learning and Computing over Space-Ground Integrated Networks

Aug 26, 2024

Abstract: Space-ground integrated networks hold great promise for providing global connectivity, particularly in remote areas where large amounts of valuable data are generated by Internet of Things (IoT) devices but terrestrial communication infrastructure is lacking. Conventionally, this massive data is transferred to a cloud server for centralized artificial intelligence (AI) model training, which incurs huge communication overhead and raises privacy concerns. To address this, we propose a hierarchical learning and computing framework that leverages the low-latency characteristic of low-earth-orbit (LEO) satellites and the global coverage of geostationary-earth-orbit (GEO) satellites to provide global aggregation services for models trained locally on ground IoT devices. Due to the time-varying nature of the satellite network topology and the energy constraints of LEO satellites, efficiently aggregating the local models received from ground devices on LEO satellites is highly challenging. By leveraging the predictability of inter-satellite connectivity and modeling the space network as a directed graph, we formulate a network energy minimization problem for model aggregation, which turns out to be a Directed Steiner Tree (DST) problem. We propose a topology-aware energy-efficient routing (TAEER) algorithm that solves the DST problem by finding a minimum spanning arborescence on a substitute directed graph. Extensive simulations under real-world space-ground integrated network settings demonstrate that the proposed TAEER algorithm significantly reduces energy consumption and outperforms benchmarks.
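
TAEER relies on finding a minimum spanning arborescence on a substitute directed graph. The abstract does not say which arborescence algorithm is used or how the substitute graph is built, so the sketch below covers only the generic subroutine, using the classic Chu-Liu/Edmonds contraction algorithm; edge weights could stand for per-link transmission energy, which is an illustrative assumption, and the function name is hypothetical.

```python
def min_arborescence_cost(num_nodes, edges, root):
    """Cost of a minimum spanning arborescence rooted at `root`.

    Chu-Liu/Edmonds contraction algorithm, O(V * E). `edges` is a list of
    (u, v, weight) directed edges u -> v. Returns None if some node cannot be
    reached from the root.
    """
    INF = float("inf")
    total = 0.0
    n = num_nodes
    while True:
        # 1. Pick the cheapest incoming edge for every node.
        in_weight = [INF] * n
        parent = [-1] * n
        for u, v, w in edges:
            if u != v and w < in_weight[v]:
                in_weight[v] = w
                parent[v] = u
        if any(in_weight[v] == INF for v in range(n) if v != root):
            return None  # some node is unreachable from the root
        in_weight[root] = 0.0
        # 2. Add those edges to the cost and look for directed cycles among them.
        comp = [-1] * n     # contracted-node id of each node (-1: not yet assigned)
        visited = [-1] * n  # start node of the walk that last visited this node
        num_cycles = 0
        for v in range(n):
            total += in_weight[v]
            x = v
            while visited[x] != v and comp[x] == -1 and x != root:
                visited[x] = v
                x = parent[x]
            if x != root and comp[x] == -1:
                # The walk closed on itself: x lies on a new cycle, so label it.
                y = parent[x]
                while y != x:
                    comp[y] = num_cycles
                    y = parent[y]
                comp[x] = num_cycles
                num_cycles += 1
        if num_cycles == 0:
            return total  # the chosen edges already form an arborescence
        # 3. Contract each cycle into one node and reweight edges entering it.
        for v in range(n):
            if comp[v] == -1:
                comp[v] = num_cycles
                num_cycles += 1
        edges = [(comp[u], comp[v], w - in_weight[v])
                 for u, v, w in edges if comp[u] != comp[v]]
        n = num_cycles
        root = comp[root]
```

For example, with edges `[(0, 1, 10), (1, 2, 1), (2, 1, 1), (0, 2, 12)]` rooted at node 0, the cheapest in-edges form the cycle 1 ↔ 2; contracting it yields the optimal cost 11 (edges 0 → 1 and 1 → 2).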

Satellite Federated Edge Learning: Architecture Design and Convergence Analysis

Apr 02, 2024

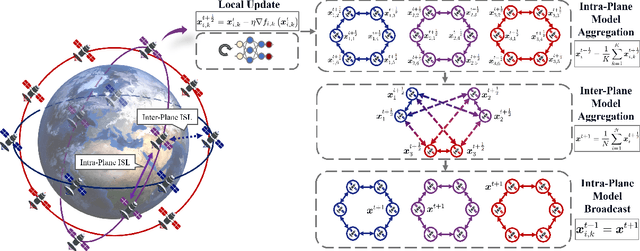

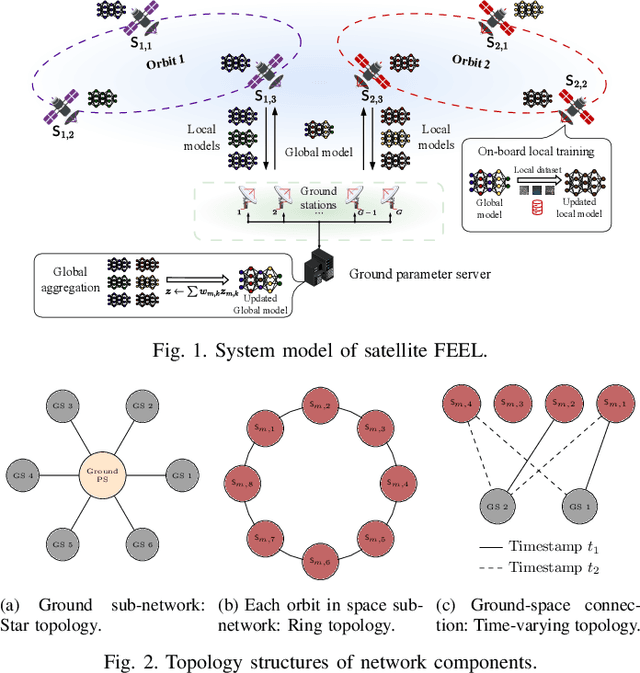

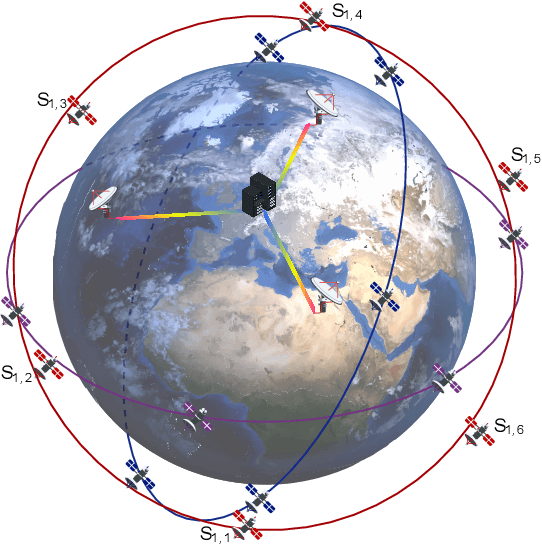

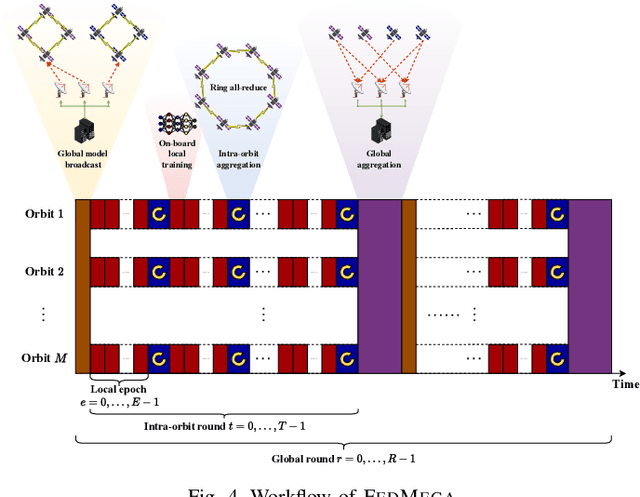

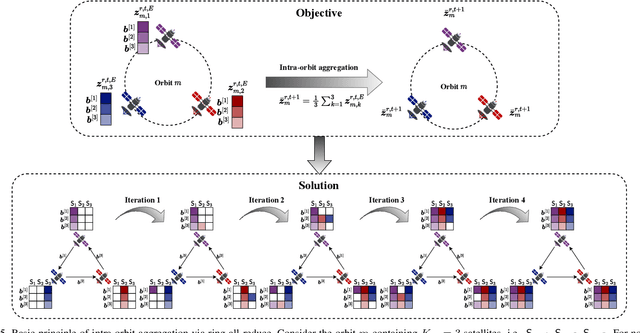

Abstract: The proliferation of low-earth-orbit (LEO) satellite networks leads to the generation of vast volumes of remote sensing data, which are traditionally transferred to a ground server for centralized processing, raising privacy and bandwidth concerns. Federated edge learning (FEEL), a distributed machine learning approach, has the potential to address these challenges by sharing only model parameters instead of raw data. Although promising, FEEL faces unique challenges from the dynamics of LEO networks, which are characterized by the high mobility of satellites and short ground-to-satellite link (GSL) durations. Notably, frequent model transmission between the satellites and the ground incurs prolonged waiting time and large transmission latency. This paper introduces a novel FEEL algorithm, named FEDMEGA, tailored to LEO mega-constellation networks. By integrating inter-satellite links (ISLs) for intra-orbit model aggregation, the proposed algorithm significantly reduces the use of low-data-rate and intermittent GSLs. Our method includes a ring all-reduce based intra-orbit aggregation mechanism, coupled with a network flow-based transmission scheme for global model aggregation, which enhances transmission efficiency. A theoretical convergence analysis is provided to characterize the algorithm's performance. Extensive simulations show that FEDMEGA outperforms existing satellite FEEL algorithms, with an approximately 30% improvement in convergence rate.
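
Intra-orbit aggregation in FEDMEGA is based on ring all-reduce: satellites in one orbital plane, linked in a ring by intra-orbit ISLs, average their models without a central node. The pure-Python simulation below shows the standard two-phase ring all-reduce (reduce-scatter, then all-gather) on plain lists. The chunking, the synchronous step model, and the function name are assumptions for illustration, not the paper's implementation.

```python
def ring_all_reduce_mean(models):
    """Average equal-length parameter vectors held by n nodes arranged in a ring.

    Each node only ever sends to its successor (i -> i + 1 mod n). The vector is
    split into n chunks; 2 * (n - 1) synchronous steps complete the reduction.
    """
    n = len(models)
    dim = len(models[0])
    bounds = [(c * dim // n, (c + 1) * dim // n) for c in range(n)]
    bufs = [list(m) for m in models]  # each node's local working copy

    def ring_step(chunk_of, accumulate):
        # Snapshot all outgoing chunks first so every send in a step is simultaneous.
        outgoing = []
        for i in range(n):
            c = chunk_of(i)
            lo, hi = bounds[c]
            outgoing.append((c, bufs[i][lo:hi]))
        for i, (c, data) in enumerate(outgoing):
            dst = (i + 1) % n
            lo, hi = bounds[c]
            if accumulate:
                for k, value in enumerate(data):
                    bufs[dst][lo + k] += value
            else:
                bufs[dst][lo:hi] = data

    # Phase 1, reduce-scatter: afterwards node i holds the full sum of chunk (i + 1) mod n.
    for s in range(n - 1):
        ring_step(lambda i: (i - s) % n, accumulate=True)
    # Phase 2, all-gather: circulate the fully reduced chunks around the ring.
    for s in range(n - 1):
        ring_step(lambda i: (i + 1 - s) % n, accumulate=False)
    return [[value / n for value in buf] for buf in bufs]
```

Each node sends 2(n - 1) chunks of roughly d/n parameters each, so per-link traffic stays close to 2d regardless of how many satellites share the orbit. This is the usual argument for preferring ring all-reduce over funneling every model through one node.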

Over-the-Air Federated Learning and Optimization

Oct 16, 2023

Abstract: Federated learning (FL), an emerging distributed machine learning paradigm, allows a large number of edge devices to collaboratively train a global model while preserving privacy. In this tutorial, we focus on FL via over-the-air computation (AirComp), which was proposed to reduce the communication overhead of FL over wireless networks at the cost of degraded learning performance due to model aggregation errors arising from channel fading and noise. We first provide a comprehensive study of the convergence of AirComp-based FedAvg (AirFedAvg) algorithms under both strongly convex and non-convex settings, with constant and diminishing learning rates, in the presence of data heterogeneity. Through convergence and asymptotic analysis, we characterize the impact of aggregation error on the convergence bound and provide insights for system design with convergence guarantees. Then we derive convergence rates for AirFedAvg algorithms for strongly convex and non-convex objectives. For the different types of local updates that edge devices can transmit (i.e., local model, gradient, and model difference), we reveal that transmitting the local model in AirFedAvg may cause the training procedure to diverge. In addition, we consider more practical signal processing schemes to improve communication efficiency and further extend the convergence analysis to the different forms of model aggregation error caused by these schemes. Extensive simulation results under different settings of objective functions, transmitted local information, and communication schemes verify the theoretical conclusions.
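
The model aggregation error analyzed in this tutorial arises because devices superimpose analog signals over a fading, noisy channel. The toy simulation below assumes real-valued channel gains known at the devices, ideal channel-inversion precoding without power limits, and Gaussian receiver noise; the tutorial's signal processing schemes are more general, and the function name is hypothetical.

```python
import random


def aircomp_fedavg(local_updates, channel_gains, noise_std, rng):
    """One over-the-air FedAvg aggregation with real-valued channels.

    Each device pre-scales its update by 1 / h_k (channel inversion) so that the
    superimposed signal sum_k h_k * (x_k / h_k) equals sum_k x_k; the receiver
    adds noise and divides by K. Assumes nonzero gains and no power limit.
    """
    k = len(local_updates)
    dim = len(local_updates[0])
    received = [0.0] * dim
    for update, h in zip(local_updates, channel_gains):
        precoder = 1.0 / h  # channel-inversion transmit scaling
        for j in range(dim):
            received[j] += h * precoder * update[j]  # waveform superposition
    # Additive receiver noise: the only aggregation error left in this idealized model.
    noisy = [r + rng.gauss(0.0, noise_std) for r in received]
    return [r / k for r in noisy]


# Usage: three devices, a two-parameter model, mild receiver noise.
estimate = aircomp_fedavg([[1.0, -2.0], [3.0, 4.0], [5.0, 0.5]], [0.3, 1.2, 0.8], 0.1, random.Random(0))
```

Under these assumptions each averaged coordinate deviates from the true mean by zero-mean Gaussian noise with standard deviation `noise_std / K`. Practical schemes with power limits or truncated inversion introduce additional error terms.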

Integrated Sensing-Communication-Computation for Edge Artificial Intelligence

Jun 01, 2023

Abstract: Edge artificial intelligence (AI) has been a promising solution on the path toward 6G, empowering a series of advanced techniques such as digital twins, holographic projection, semantic communications, and autonomous driving to achieve the intelligence of everything. The performance of edge AI tasks, including edge learning and edge AI inference, depends on the quality of three highly coupled processes, i.e., sensing for data acquisition, computation for information extraction, and communication for information transmission. However, these three modules compete for network resources to enhance their own quality of service. To this end, integrated sensing-communication-computation (ISCC) is of paramount significance for improving resource utilization as well as achieving the customized goals of edge AI tasks. By investigating the interplay among the three modules, this article presents various kinds of ISCC schemes for federated edge learning tasks and edge AI inference tasks in both the application and physical layers.

Hybrid Driven Learning for Channel Estimation in Intelligent Reflecting Surface Aided Millimeter Wave Communications

May 30, 2023

Abstract: Intelligent reflecting surfaces (IRSs) have been proposed for millimeter wave (mmWave) and terahertz (THz) systems to enhance both coverage and capacity, where the design of hybrid precoders, combiners, and the IRS typically relies on channel state information. In this paper, we address the problem of uplink wideband channel estimation for IRS-aided multiuser multiple-input single-output (MISO) systems with hybrid architectures. By combining the structures of model-driven and data-driven deep learning approaches, we devise a hybrid-driven learning architecture for jointly estimating the channels and learning their properties. For a passive IRS-aided system, we propose a residual learned approximate message passing as a model-driven network. A denoising and attention network in the data-driven network is used to jointly learn spatial and frequency features. Furthermore, we design a flexible hybrid-driven network for a hybrid passive and active IRS-aided system. Specifically, depthwise separable convolution is applied to the data-driven network, leading to lower network complexity and fewer parameters at the IRS side. Numerical results indicate that in both systems, the proposed hybrid-driven channel estimation methods significantly outperform existing deep learning-based schemes and effectively reduce the pilot overhead by about 60% in IRS-aided systems.
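
The parameter savings from depthwise separable convolution follow from simple counting: a standard k x k convolution learns one k x k x C_in filter per output channel, whereas the separable version learns one k x k filter per input channel plus a 1 x 1 channel-mixing layer. The layer sizes in the example are hypothetical, not those of the paper's network, and biases are ignored.

```python
def conv_params(kernel, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights of a k x k depthwise conv (one filter per input channel)
    followed by a 1 x 1 pointwise conv that mixes channels (biases ignored)."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise
```

For a 3 x 3 layer mapping 64 channels to 128, the standard convolution has 73,728 weights and the separable one has 576 + 8,192 = 8,768, roughly 8.4 times fewer; in general the ratio is 1/C_out + 1/k².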