Khaled B. Letaief

Sherman

Communication-Efficient Multi-Modal Edge Inference via Uncertainty-Aware Distributed Learning

Jan 21, 2026

Abstract:Semantic communication is emerging as a key enabler for distributed edge intelligence due to its capability to convey task-relevant meaning. However, achieving communication-efficient training and robust inference over wireless links remains challenging. This challenge is further exacerbated for multi-modal edge inference (MMEI) by two factors: 1) prohibitive communication overhead for distributed learning over bandwidth-limited wireless links, due to the multi-modal nature of the system; and 2) limited robustness under varying channels and noisy multi-modal inputs. In this paper, we propose a three-stage communication-aware distributed learning framework to improve training and inference efficiency while maintaining robustness over wireless channels. In Stage I, devices perform local multi-modal self-supervised learning to obtain shared and modality-specific encoders without device-server exchange, thereby reducing the communication cost. In Stage II, distributed fine-tuning with centralized evidential fusion calibrates per-modality uncertainty and reliably aggregates features distorted by noise or channel fading. In Stage III, an uncertainty-guided feedback mechanism selectively requests additional features for uncertain samples, optimizing the communication-accuracy tradeoff in the distributed setting. Experiments on RGB-depth indoor scene classification show that the proposed framework attains higher accuracy with far fewer training communication rounds and remains robust to modality degradation or channel variation, outperforming existing self-supervised and fully supervised baselines.
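The uncertainty-guided feedback idea in Stage III can be illustrated with a small sketch. The abstract does not give the exact formulation, so this assumes a Dirichlet-based (subjective-logic) uncertainty measure, which is a common choice in evidential deep learning. The function names `dirichlet_opinion` and `needs_more_features` and the threshold value are hypothetical.

```python
def dirichlet_opinion(evidence):
    """Map non-negative per-class evidence to belief masses and an uncertainty mass.

    Assumed formulation: alpha_k = e_k + 1, S = sum(alpha),
    belief_k = e_k / S, uncertainty = K / S, so beliefs + uncertainty = 1.
    """
    num_classes = len(evidence)
    alpha = [e + 1.0 for e in evidence]
    strength = sum(alpha)
    belief = [e / strength for e in evidence]
    uncertainty = num_classes / strength
    return belief, uncertainty


def needs_more_features(evidence, threshold=0.5):
    """Return True when the server should request additional features.

    The threshold is an illustrative assumption, not a value from the paper.
    """
    _, uncertainty = dirichlet_opinion(evidence)
    return uncertainty > threshold
```

Under this assumed rule, a sample with little total evidence yields an uncertainty mass close to 1 and triggers a feature request, while a sample with strong evidence for one class does not.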

Near-Field Communication with Massive Movable Antennas: An Electrostatic Equilibrium Perspective

Dec 25, 2025

Abstract:Recent advancements in large-scale position-reconfigurable antennas have opened up new dimensions to effectively utilize the spatial degrees of freedom (DoFs) of wireless channels. However, the deployment of existing antenna placement schemes is primarily hindered by their limited scalability and frequently overlooked near-field effects in large-scale antenna systems. In this paper, we propose a novel antenna placement approach tailored for near-field massive multiple-input multiple-output systems, which effectively exploits the spatial DoFs to enhance spectral efficiency. For that purpose, we first reformulate the antenna placement problem in the angular domain, resulting in a weighted Fekete problem. We then derive the optimality condition and reveal that the optimal antenna placement is in principle an electrostatic equilibrium problem. To further reduce the computational complexity of numerical optimization, we propose an ordinary differential equation (ODE)-based framework to efficiently solve the equilibrium problem. In particular, the optimal antenna positions are characterized by the roots of the polynomial solutions to specific ODEs in the normalized angular domain. By simply adopting a two-step eigenvalue decomposition (EVD) approach, the optimal antenna positions can be efficiently obtained. Furthermore, we perform an asymptotic analysis when the antenna size tends to infinity, which yields a closed-form solution. Simulation results demonstrate that the proposed scheme efficiently harnesses the spatial DoFs of near-field channels with prominent gains in spectral efficiency and maintains robustness against system parameter mismatches. In addition, the derived asymptotic closed-form solution closely approaches the theoretical optimum across a wide range of practical scenarios.
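The link between electrostatic equilibrium and polynomial roots has a classical unweighted analogue (due to Stieltjes) that may help build intuition. It is not the paper's weighted near-field formulation, and it uses Newton iteration rather than the two-step EVD mentioned above. In this sketch, free unit charges on (-1, 1) with fixed charges of 1/2 at each endpoint, interacting through a logarithmic potential, are in equilibrium at the zeros of the Legendre polynomial, which itself solves the Legendre ODE. All function names are illustrative.

```python
import math


def legendre_with_derivative(n, x):
    """Evaluate P_n(x) and P_n'(x) for n >= 1 and |x| < 1 via the three-term recurrence."""
    p_prev, p = 1.0, x  # P_0 and P_1
    for k in range(2, n + 1):
        p_prev, p = p, ((2 * k - 1) * x * p - (k - 1) * p_prev) / k
    # Identity: (x^2 - 1) P_n'(x) = n (x P_n(x) - P_{n-1}(x))
    dp = n * (x * p - p_prev) / (x * x - 1.0)
    return p, dp


def legendre_roots(n):
    """Find the n zeros of P_n by Newton iteration from standard cosine initial guesses."""
    roots = []
    for i in range(1, n + 1):
        x = math.cos(math.pi * (i - 0.25) / (n + 0.5))
        for _ in range(100):
            p, dp = legendre_with_derivative(n, x)
            step = p / dp
            x -= step
            if abs(step) < 1e-15:
                break
        roots.append(x)
    return sorted(roots)


def net_forces(positions, end_charge=0.5):
    """Net force on each free unit charge under a logarithmic potential.

    Includes repulsion from the other free charges and from the two
    fixed endpoint charges at x = -1 and x = +1.
    """
    forces = []
    for i, xi in enumerate(positions):
        f = sum(1.0 / (xi - xj) for j, xj in enumerate(positions) if j != i)
        f += end_charge / (xi - 1.0) + end_charge / (xi + 1.0)
        forces.append(f)
    return forces
```

Evaluating `net_forces(legendre_roots(8))` should give values that vanish up to floating-point error, which is the equilibrium condition in this simplified setting.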

Federated Distillation Assisted Vehicle Edge Caching Scheme Based on Lightweight DDPM

Dec 10, 2025

Abstract:Vehicle edge caching is a promising technology that can significantly reduce the latency for vehicle users (VUs) to access content by pre-caching user-interested content at edge nodes. It is crucial to accurately predict the content that VUs are interested in without compromising their privacy. Traditional federated learning (FL) can protect user privacy by sharing models rather than raw data. However, FL training requires frequent model transmission, which can result in significant communication overhead. Additionally, vehicles may leave the road side unit (RSU) coverage area before training is completed, leading to training failures. To address these issues, in this letter, we propose a federated distillation-assisted vehicle edge caching scheme based on a lightweight denoising diffusion probabilistic model (LDPM). The simulation results demonstrate that the proposed vehicle edge caching scheme is robust to variations in vehicle speed while significantly reducing communication overhead and improving the cache hit percentage.

Generative AI Meets 6G and Beyond: Diffusion Models for Semantic Communications

Nov 11, 2025

Abstract:Semantic communications mark a paradigm shift from bit-accurate transmission toward meaning-centric communication, essential as wireless systems approach theoretical capacity limits. The emergence of generative AI has catalyzed generative semantic communications, where receivers reconstruct content from minimal semantic cues by leveraging learned priors. Among generative approaches, diffusion models stand out for their superior generation quality, stable training dynamics, and rigorous theoretical foundations. However, the field currently lacks systematic guidance connecting diffusion techniques to communication system design, forcing researchers to navigate disparate literatures. This article provides the first comprehensive tutorial on diffusion models for generative semantic communications. We present score-based diffusion foundations and systematically review three technical pillars: conditional diffusion for controllable generation, efficient diffusion for accelerated inference, and generalized diffusion for cross-domain adaptation. In addition, we introduce an inverse problem perspective that reformulates semantic decoding as posterior inference, bridging semantic communications with computational imaging. Through analysis of human-centric, machine-centric, and agent-centric scenarios, we illustrate how diffusion models enable extreme compression while maintaining semantic fidelity and robustness. By bridging generative AI innovations with communication system design, this article aims to establish diffusion models as foundational components of next-generation wireless networks and beyond.

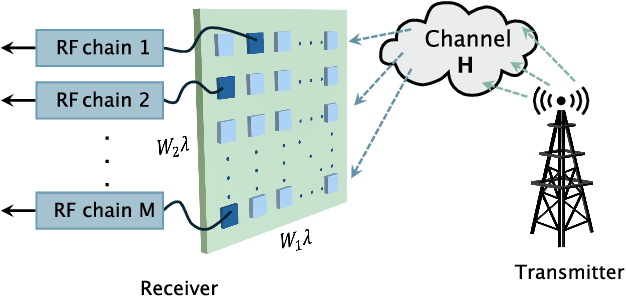

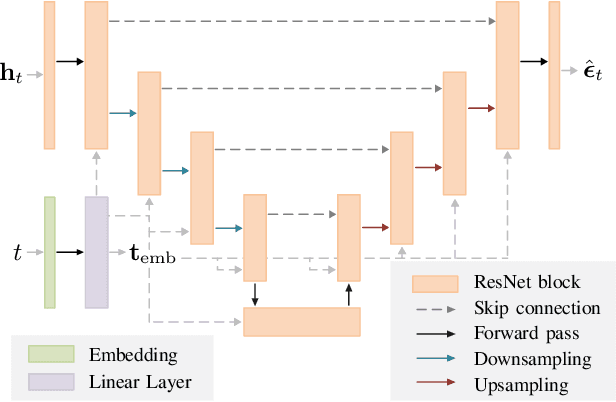

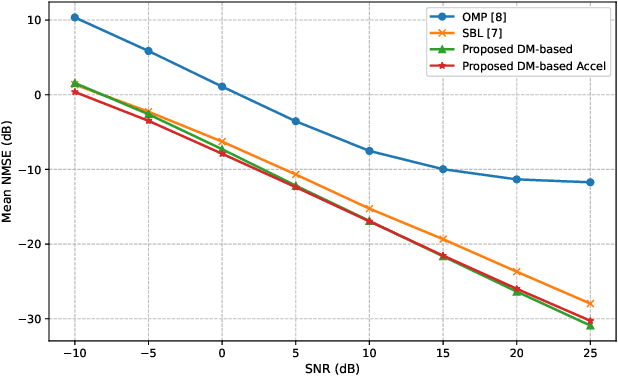

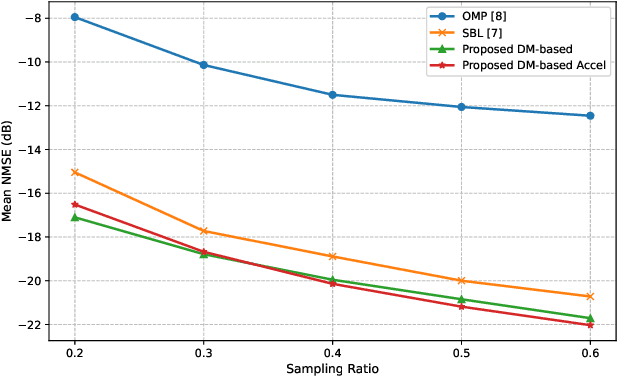

Accurate and Fast Channel Estimation for Fluid Antenna Systems with Diffusion Models

May 08, 2025

Abstract:Fluid antenna systems (FAS) offer enhanced spatial diversity for next-generation wireless systems. However, acquiring accurate channel state information (CSI) remains challenging due to the large number of reconfigurable ports and the limited availability of radio-frequency (RF) chains, particularly in high-dimensional FAS scenarios. To address this challenge, we propose an efficient posterior sampling-based channel estimator that leverages a diffusion model (DM) with a simplified U-Net architecture to capture the spatial correlation structure of two-dimensional FAS channels. The DM is initially trained offline in an unsupervised way and then applied online as a learned implicit prior to reconstruct CSI from partial observations via posterior sampling through a denoising diffusion restoration model (DDRM). To accelerate the online inference, we introduce a skipped sampling strategy that updates only a subset of latent variables during the sampling process, thereby reducing the computational cost with minimal accuracy degradation. Simulation results demonstrate that the proposed approach achieves significantly higher estimation accuracy and over 20x speedup compared to state-of-the-art compressed sensing-based methods, highlighting its potential for practical deployment in high-dimensional FAS.

Fluid Antenna-Assisted MU-MIMO Systems with Decentralized Baseband Processing

May 08, 2025

Abstract:The fluid antenna system (FAS) has emerged as a disruptive technology, offering unprecedented degrees of freedom (DoF) for wireless communication systems. However, optimizing fluid antenna (FA) positions entails significant computational costs, especially when the number of FAs is large. To address this challenge, we introduce a decentralized baseband processing (DBP) architecture to FAS, which partitions the FA array into clusters and enables parallel processing. Based on the DBP architecture, we formulate a weighted sum rate (WSR) maximization problem through joint beamforming and FA position design for FA-assisted multiuser multiple-input multiple-output (MU-MIMO) systems. To solve the WSR maximization problem, we propose a novel decentralized block coordinate ascent (BCA)-based algorithm that leverages matrix fractional programming (FP) and majorization-minimization (MM) methods. The proposed decentralized algorithm achieves low computational, communication, and storage costs, thus unleashing the potential of the DBP architecture. Simulation results show that our proposed algorithm under the DBP architecture reduces computational time by over 70% compared to centralized architectures with negligible WSR performance loss.

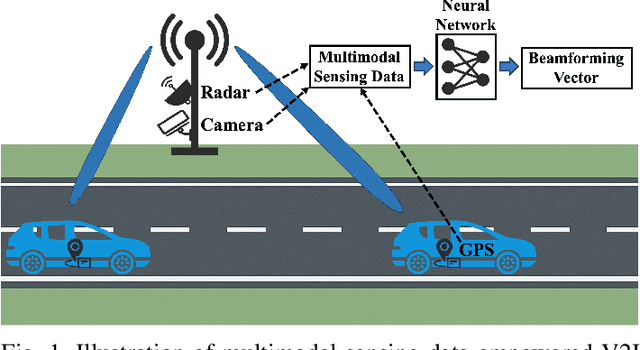

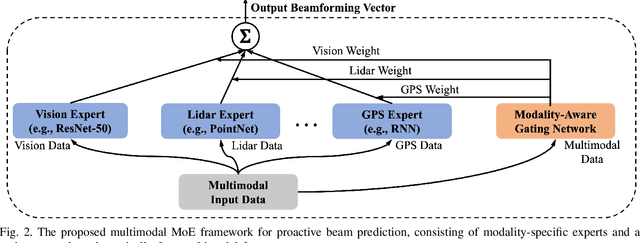

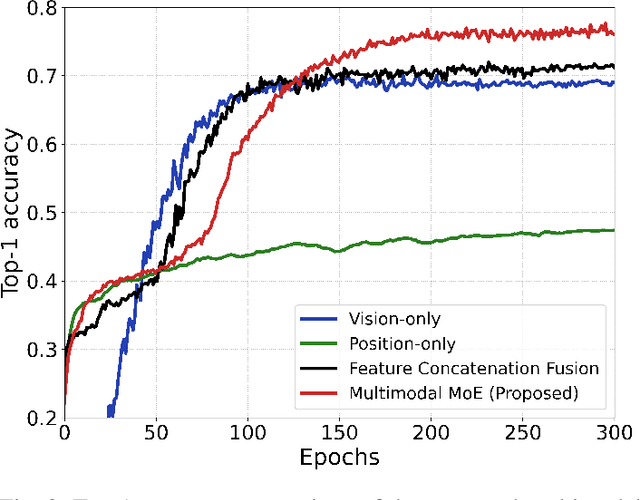

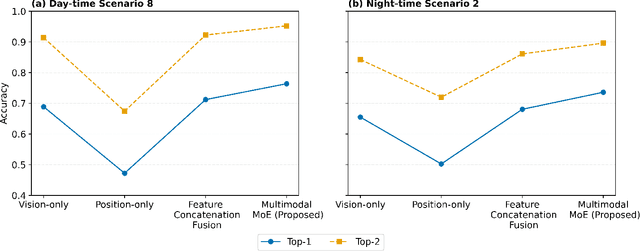

Multimodal Deep Learning-Empowered Beam Prediction in Future THz ISAC Systems

May 05, 2025

Abstract:Integrated sensing and communication (ISAC) systems operating at terahertz (THz) bands are envisioned to enable both ultra-high data-rate communication and precise environmental awareness for next-generation wireless networks. However, the narrow width of THz beams makes them prone to misalignment and necessitates frequent beam prediction in dynamic environments. Multimodal sensing, which integrates complementary modalities such as camera images, positional data, and radar measurements, has recently emerged as a promising solution for proactive beam prediction. Nevertheless, existing multimodal approaches typically employ static fusion architectures that cannot adjust to varying modality reliability and contributions, thereby degrading predictive performance and robustness. To address this challenge, we propose a novel and efficient multimodal mixture-of-experts (MoE) deep learning framework for proactive beam prediction in THz ISAC systems. The proposed multimodal MoE framework employs multiple modality-specific expert networks to extract representative features from individual sensing modalities, and dynamically fuses them using adaptive weights generated by a gating network according to the instantaneous reliability of each modality. Simulation results in realistic vehicle-to-infrastructure (V2I) scenarios demonstrate that the proposed MoE framework outperforms traditional static fusion methods and unimodal baselines in terms of prediction accuracy and adaptability, highlighting its potential in practical THz ISAC systems with ultra-massive multiple-input multiple-output (MIMO).
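The gated fusion step described above can be sketched in a few lines. This is a minimal illustration, assuming a softmax over per-modality gate scores and a weighted sum of equal-length expert feature vectors. The abstract does not specify the gating network or feature shapes, so `softmax` and `fuse` are hypothetical helpers rather than the authors' implementation.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of gate scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(expert_features, gate_scores):
    """Fuse per-modality feature vectors with adaptive softmax weights.

    expert_features: one equal-length feature vector per modality expert.
    gate_scores: one raw score per modality (assumed to come from a gating
    network that reflects the instantaneous reliability of each modality).
    """
    weights = softmax(gate_scores)
    dim = len(expert_features[0])
    fused = [
        sum(w * feats[d] for w, feats in zip(weights, expert_features))
        for d in range(dim)
    ]
    return fused, weights
```

With equal gate scores this reduces to plain averaging, which corresponds to a static fusion baseline. A strongly negative score for one modality drives its weight toward zero, so a degraded sensor contributes little to the fused feature.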

Satellite Edge Artificial Intelligence with Large Models: Architectures and Technologies

Apr 02, 2025

Abstract:Driven by the growing demand for intelligent remote sensing applications, large artificial intelligence (AI) models pre-trained on large-scale unlabeled datasets and fine-tuned for downstream tasks have significantly improved learning performance across various tasks owing to their generalization capabilities. However, many specific downstream tasks, such as extreme weather nowcasting (e.g., downbursts and tornadoes), disaster monitoring, and battlefield surveillance, require real-time data processing. Traditional methods that transfer raw data to ground stations for processing often cause significant issues in terms of latency and trustworthiness. To address these challenges, satellite edge AI provides a paradigm shift from ground-based to on-board data processing by leveraging the integrated communication-and-computation capabilities in space computing power networks (Space-CPN), thereby enhancing the timeliness, effectiveness, and trustworthiness for remote sensing downstream tasks. Moreover, satellite edge large AI model (LAM) involves both the training (i.e., fine-tuning) and inference phases, where a key challenge lies in developing computation task decomposition principles to support scalable LAM deployment in resource-constrained space networks with time-varying topologies. In this article, we first propose a satellite federated fine-tuning architecture to split and deploy the modules of LAM over space and ground networks for efficient LAM fine-tuning. We then introduce a microservice-empowered satellite edge LAM inference architecture that virtualizes LAM components into lightweight microservices tailored for multi-task multimodal inference. Finally, we discuss the future directions for enhancing the efficiency and scalability of satellite edge LAM, including task-oriented communication, brain-inspired computing, and satellite edge AI network optimization.

Mutual Information-Empowered Task-Oriented Communication: Principles, Applications and Challenges

Mar 26, 2025

Abstract:Mutual information (MI)-based guidelines have recently proven to be effective for designing task-oriented communication systems, where the ultimate goal is to extract and transmit task-relevant information for a downstream task. This paper provides a comprehensive overview of MI-empowered task-oriented communication, highlighting how MI-based methods can serve as a unifying design framework in various task-oriented communication scenarios. We begin with the roadmap of MI for designing task-oriented communication systems, and then introduce the roles and applications of MI to guide feature encoding, transmission optimization, and efficient training with two case studies. We further elaborate on the limitations and challenges of MI-based methods. Finally, we identify several open issues in MI-based task-oriented communication to inspire future research.

Communication-Efficient Distributed On-Device LLM Inference Over Wireless Networks

Mar 19, 2025

Abstract:Large language models (LLMs) have demonstrated remarkable success across various application domains, but their enormous sizes and computational demands pose significant challenges for deployment on resource-constrained edge devices. To address this issue, we propose a novel distributed on-device LLM inference framework that leverages tensor parallelism to partition the neural network tensors (e.g., weight matrices) of one LLM across multiple edge devices for collaborative inference. A key challenge in tensor parallelism is the frequent all-reduce operations for aggregating intermediate layer outputs across participating devices, which incur significant communication overhead. To alleviate this bottleneck, we propose an over-the-air computation (AirComp) approach that harnesses the analog superposition property of wireless multiple-access channels to perform fast all-reduce steps. To utilize the heterogeneous computational capabilities of edge devices and mitigate communication distortions, we investigate a joint model assignment and transceiver optimization problem to minimize the average transmission error. The resulting mixed-timescale stochastic non-convex optimization problem is intractable, and we propose an efficient two-stage algorithm to solve it. Moreover, we prove that the proposed algorithm converges almost surely to a stationary point of the original problem. Comprehensive simulation results show that the proposed framework outperforms existing benchmark schemes, achieving up to 5x inference speed acceleration and improving inference accuracy.
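To make the all-reduce step concrete, the toy sketch below splits a weight matrix by columns across devices, lets each device compute a partial product, and models the over-the-air aggregation as an element-wise sum plus optional Gaussian noise. The sharding scheme, the noise model, and all function names are illustrative assumptions. The paper's transceiver design and model assignment are not represented.

```python
import random


def matvec(W, x):
    """Dense matrix-vector product on nested lists."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def split_columns(W, x, num_devices):
    """Give each device a contiguous column block of W and the matching slice of x."""
    n = len(x)
    bounds = [k * n // num_devices for k in range(num_devices + 1)]
    return [
        ([row[lo:hi] for row in W], x[lo:hi])
        for lo, hi in zip(bounds, bounds[1:])
    ]


def over_the_air_sum(partials, noise_std=0.0, rng=None):
    """Model the all-reduce as signal superposition: element-wise sum plus noise.

    noise_std is a stand-in for channel distortion; 0.0 gives an ideal sum.
    """
    rng = rng or random.Random(0)
    return [sum(vals) + rng.gauss(0.0, noise_std) for vals in zip(*partials)]


def distributed_matvec(W, x, num_devices, noise_std=0.0):
    """Each device multiplies its shard; the partial outputs are then aggregated."""
    partials = [matvec(Wk, xk) for Wk, xk in split_columns(W, x, num_devices)]
    return over_the_air_sum(partials, noise_std)
```

With `noise_std=0.0` the distributed result matches the single-device product, while a positive `noise_std` shows how aggregation error enters the layer output. That error is the quantity the transceiver optimization described above aims to keep small.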
