Linling Kuang

Generative Bayesian Spectrum Cartography: Unified Reconstruction and Active Sensing via Diffusion Models

Dec 23, 2025

Abstract:High-fidelity spectrum cartography is pivotal for spectrum management and wireless situational awareness, yet it remains a challenging ill-posed inverse problem due to the sparsity and irregularity of observations. Furthermore, existing approaches often decouple reconstruction from sensing, lacking a principled mechanism for informative sampling. To address these limitations, this paper proposes a unified diffusion-based Bayesian framework that jointly addresses spectrum reconstruction and active sensing. We formulate the reconstruction task as a conditional generation process driven by a learned diffusion prior. Specifically, we derive tractable, closed-form posterior transition kernels for the reverse diffusion process, which enforce consistency with both linear Gaussian and non-linear quantized measurements. Leveraging the intrinsic probabilistic nature of diffusion models, we further develop an uncertainty-aware active sampling strategy. This strategy quantifies reconstruction uncertainty to adaptively guide sensing agents toward the most informative locations, thereby maximizing spectral efficiency. Extensive experiments demonstrate that the proposed framework significantly outperforms state-of-the-art interpolation, sparsity-based, and deep learning baselines in terms of reconstruction accuracy, sampling efficiency, and robustness to low-bit quantization.
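As a rough illustration of the uncertainty-aware sampling idea in this abstract, the sketch below picks the next sensing location as the unobserved grid point with the largest variance across a set of posterior samples. The abstract does not say which uncertainty measure the paper uses, so sample variance is an assumption here, and `pick_next_location`, `posterior_samples`, and `observed` are hypothetical names; the samples are toy numbers, not diffusion-model outputs.

```python
from statistics import pvariance


def pick_next_location(posterior_samples, observed):
    """Return the index of the unobserved location with the largest sample variance.

    posterior_samples: list of equally long lists, one reconstructed map per sample
                       (hypothetical stand-in for samples from a generative model).
    observed: set of location indices that have already been measured.
    Returns None when every location has been observed.
    """
    num_locations = len(posterior_samples[0])
    best_loc, best_var = None, -1.0
    for loc in range(num_locations):
        if loc in observed:
            continue
        # Variance across samples is the assumed proxy for reconstruction uncertainty.
        var = pvariance([sample[loc] for sample in posterior_samples])
        if var > best_var:
            best_loc, best_var = loc, var
    return best_loc


# Toy example: three sampled maps over three locations.
samples = [
    [1.0, 2.0, 5.0],
    [1.0, 4.0, 5.5],
    [1.0, 0.0, 4.5],
]
next_loc = pick_next_location(samples, observed={1})
```

In this toy case the samples agree exactly at location 0 and disagree at location 2, so location 2 is selected once location 1 is marked as already measured.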

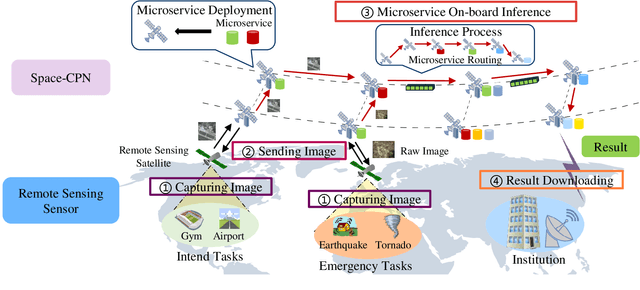

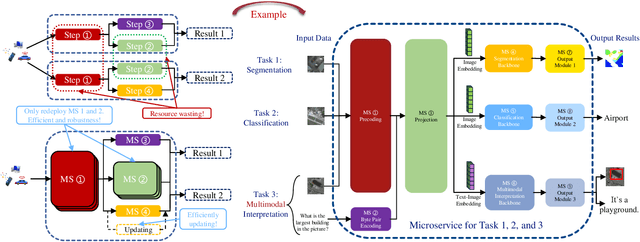

Satellite Edge Artificial Intelligence with Large Models: Architectures and Technologies

Apr 02, 2025

Abstract:Driven by the growing demand for intelligent remote sensing applications, large artificial intelligence (AI) models pre-trained on large-scale unlabeled datasets and fine-tuned for downstream tasks have significantly improved learning performance thanks to their generalization capabilities. However, many specific downstream tasks, such as extreme weather nowcasting (e.g., downbursts and tornadoes), disaster monitoring, and battlefield surveillance, require real-time data processing. Traditional methods that transfer raw data to ground stations for processing often cause significant latency and trustworthiness issues. To address these challenges, satellite edge AI provides a paradigm shift from ground-based to on-board data processing by leveraging the integrated communication-and-computation capabilities in space computing power networks (Space-CPN), thereby enhancing the timeliness, effectiveness, and trustworthiness of remote sensing downstream tasks. Moreover, a satellite edge large AI model (LAM) involves both the training (i.e., fine-tuning) and inference phases, where a key challenge lies in developing computation task decomposition principles to support scalable LAM deployment in resource-constrained space networks with time-varying topologies. In this article, we first propose a satellite federated fine-tuning architecture to split and deploy the modules of LAM over space and ground networks for efficient LAM fine-tuning. We then introduce a microservice-empowered satellite edge LAM inference architecture that virtualizes LAM components into lightweight microservices tailored for multi-task multimodal inference. Finally, we discuss the future directions for enhancing the efficiency and scalability of satellite edge LAM, including task-oriented communication, brain-inspired computing, and satellite edge AI network optimization.

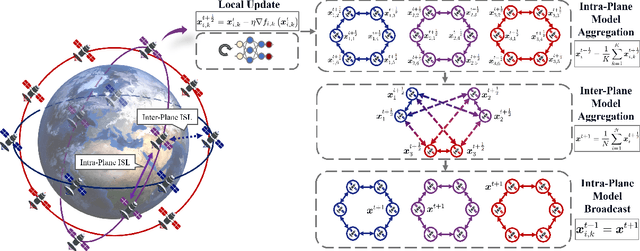

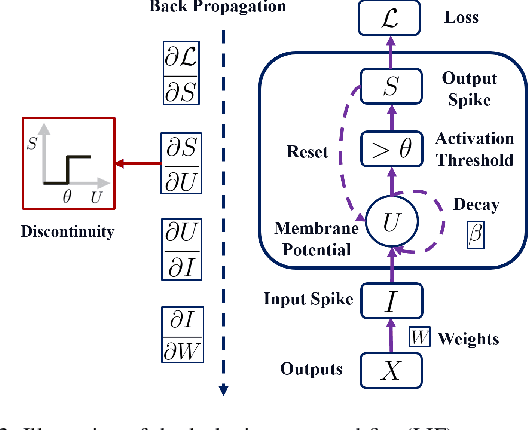

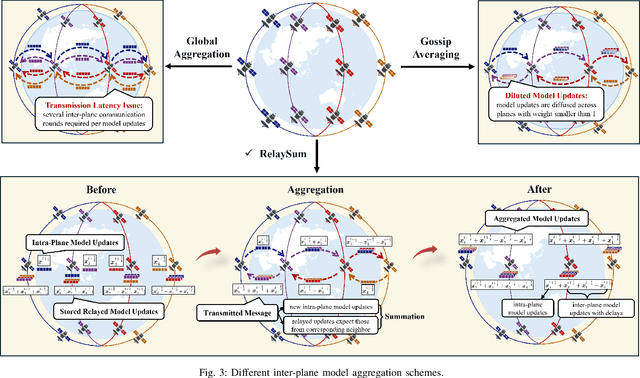

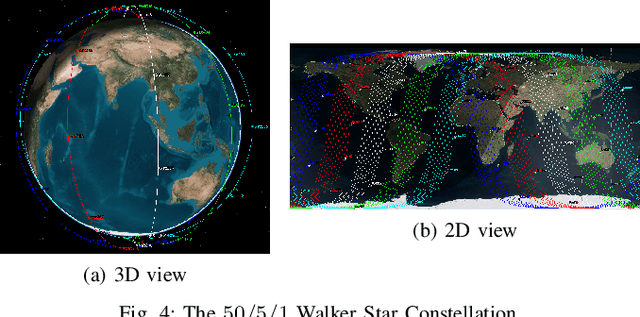

Brain-Inspired Decentralized Satellite Learning in Space Computing Power Networks

Jan 27, 2025

Abstract:Satellite networks can collect massive amounts of space information with advanced remote sensing technologies, which is essential for real-time applications such as natural disaster monitoring. However, traditional centralized processing by the ground server incurs a severe timeliness issue caused by the transmission bottleneck of raw data. To this end, Space Computing Power Networks (Space-CPN) emerge as a promising architecture to coordinate the computing capability of satellites and enable on-board data processing. Nevertheless, due to the natural limitations of solar panels, satellite power systems struggle to meet the energy requirements of the ever-increasing intelligent computation tasks of artificial neural networks. To tackle this issue, we propose to employ spiking neural networks (SNNs), which are supported by the neuromorphic computing architecture, for on-board data processing. The extreme sparsity of their computation enables high energy efficiency. Furthermore, to achieve effective training of these on-board models, we put forward a decentralized neuromorphic learning framework, in which a communication-efficient inter-plane model aggregation method is developed, inspired by RelaySum. We provide a theoretical analysis to characterize the convergence behavior of the proposed algorithm, which reveals a convergence speed that depends on the network diameter. We then formulate a minimum diameter spanning tree problem on the inter-plane connectivity topology and solve it to further improve the learning performance. Extensive experiments are conducted to evaluate the superiority of the proposed method over benchmarks.

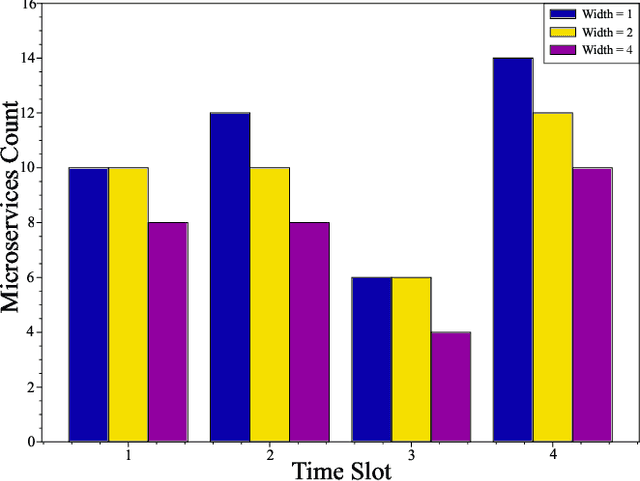

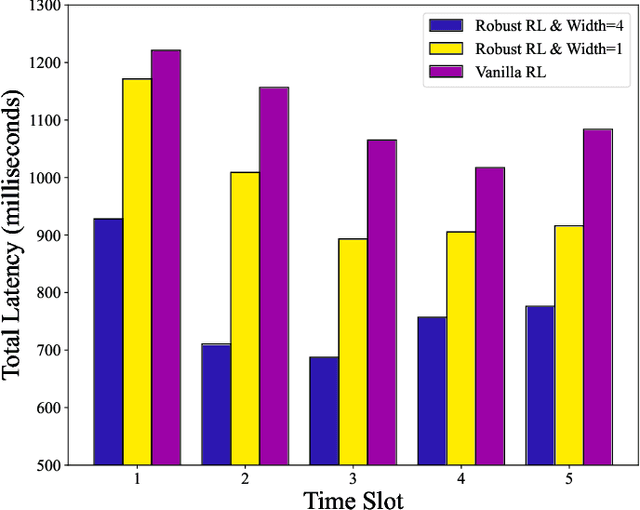

Microservice Deployment in Space Computing Power Networks via Robust Reinforcement Learning

Jan 08, 2025

Abstract:With the growing demand for Earth observation, it is important to provide reliable real-time remote sensing inference services to meet the low-latency requirements. The Space Computing Power Network (Space-CPN) offers a promising solution by providing onboard computing and extensive coverage capabilities for real-time inference. This paper presents a deployment framework for remote sensing artificial intelligence applications, designed for Low Earth Orbit satellite constellations to achieve real-time inference performance. The framework employs the microservice architecture, decomposing monolithic inference tasks into reusable, independent modules to address high latency and resource heterogeneity. This distributed approach enables optimized microservice deployment, minimizing resource utilization while meeting quality of service and functional requirements. We introduce Robust Optimization to the deployment problem to address data uncertainty. Additionally, we model the Robust Optimization problem as a Partially Observable Markov Decision Process and propose a robust reinforcement learning algorithm to handle the semi-infinite Quality of Service constraints. Our approach yields sub-optimal solutions that minimize accuracy loss while maintaining acceptable computational costs. Simulation results demonstrate the effectiveness of our framework.

Improving Decoupled Posterior Sampling for Inverse Problems using Data Consistency Constraint

Dec 01, 2024

Abstract:Diffusion models have shown strong performance in solving inverse problems through posterior sampling, but they suffer from errors during earlier steps. To mitigate this issue, several Decoupled Posterior Sampling methods have been recently proposed. However, the reverse process in these methods ignores measurement information, leading to errors that impede effective optimization in subsequent steps. To solve this problem, we propose Guided Decoupled Posterior Sampling (GDPS) by integrating a data consistency constraint in the reverse process. The constraint performs a smoother transition within the optimization process, facilitating a more effective convergence toward the target distribution. Furthermore, we extend our method to latent diffusion models and Tweedie's formula, demonstrating its scalability. We evaluate GDPS on the FFHQ and ImageNet datasets across various linear and nonlinear tasks under both standard and challenging conditions. Experimental results demonstrate that GDPS achieves state-of-the-art performance, improving accuracy over existing methods.

Hierarchical Learning and Computing over Space-Ground Integrated Networks

Aug 26, 2024

Abstract:Space-ground integrated networks hold great promise for providing global connectivity, particularly in remote areas where large amounts of valuable data are generated by Internet of Things (IoT) devices but terrestrial communication infrastructure is lacking. The massive data is conventionally transferred to the cloud server for centralized artificial intelligence (AI) model training, incurring huge communication overhead and raising privacy concerns. To address this, we propose a hierarchical learning and computing framework, which leverages the low-latency characteristic of low-earth-orbit (LEO) satellites and the global coverage of geostationary-earth-orbit (GEO) satellites, to provide global aggregation services for locally trained models on ground IoT devices. Due to the time-varying nature of satellite network topology and the energy constraints of LEO satellites, efficiently aggregating the received local models from ground devices on LEO satellites is highly challenging. By leveraging the predictability of inter-satellite connectivity and modeling the space network as a directed graph, we formulate a network energy minimization problem for model aggregation, which turns out to be a Directed Steiner Tree (DST) problem. We propose a topology-aware energy-efficient routing (TAEER) algorithm to solve the DST problem by finding a minimum spanning arborescence on a substitute directed graph. Extensive simulations under real-world space-ground integrated network settings demonstrate that the proposed TAEER algorithm significantly reduces energy consumption and outperforms benchmarks.

Approximate Message Passing with Nearest Neighbor Sparsity Pattern Learning

Jan 04, 2016

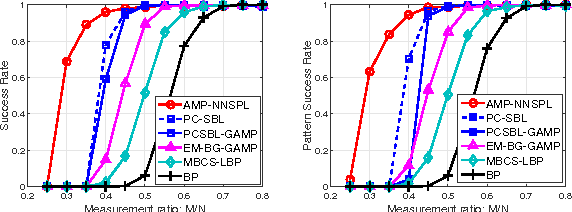

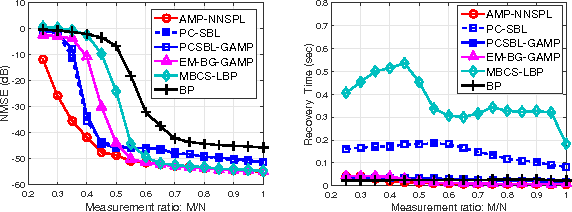

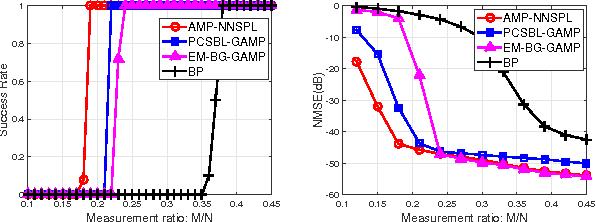

Abstract:We consider the problem of recovering clustered sparse signals with no prior knowledge of the sparsity pattern. Beyond simple sparsity, signals of interest often exhibit an underlying sparsity pattern which, if leveraged, can improve the reconstruction performance. However, the sparsity pattern is usually unknown a priori. Inspired by the idea of the k-nearest neighbor (k-NN) algorithm, we propose an efficient algorithm termed approximate message passing with nearest neighbor sparsity pattern learning (AMP-NNSPL), which learns the sparsity pattern adaptively. AMP-NNSPL specifies a flexible spike and slab prior on the unknown signal and, after each AMP iteration, sets the sparse ratios as the average of the nearest neighbor estimates via expectation maximization (EM). Experimental results on both synthetic and real data demonstrate the superiority of our proposed algorithm both in terms of reconstruction performance and computational complexity.
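The nearest-neighbor update described in this abstract can be sketched for a one-dimensional signal as follows. This is a minimal illustration under assumptions the abstract does not state: the neighborhood is taken to be the immediate left and right neighbors, boundary entries use their single neighbor, and `nn_sparsity_update` and `post_support` are hypothetical names. The AMP iteration and the EM derivation themselves are not shown.

```python
def nn_sparsity_update(post_support):
    """Set each sparse ratio to the mean of its neighbors' support estimates.

    post_support: list of per-coefficient posterior support probabilities in [0, 1],
                  assumed to come from the preceding AMP iteration (length >= 2).
    Returns a new list; the input is not modified.
    """
    n = len(post_support)
    updated = []
    for i in range(n):
        # Immediate left/right neighbors only (assumed neighborhood); skip out-of-range indices.
        neighbors = [post_support[j] for j in (i - 1, i + 1) if 0 <= j < n]
        updated.append(sum(neighbors) / len(neighbors))
    return updated


# Toy example: a cluster of likely-active coefficients followed by inactive ones.
ratios = nn_sparsity_update([0.9, 0.8, 0.1, 0.0])
```

Because each ratio depends only on neighboring estimates, a coefficient surrounded by likely-active neighbors receives a high prior probability of being active, which is how clustered structure is encouraged in this sketch.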
