Ting Wang

Michael Pokorny

RASA: Routing-Aware Safety Alignment for Mixture-of-Experts Models

Feb 04, 2026

Abstract:Mixture-of-Experts (MoE) language models introduce unique challenges for safety alignment due to their sparse routing mechanisms, which can enable degenerate optimization behaviors under standard full-parameter fine-tuning. In our preliminary experiments, we observe that naively applying full-parameter safety fine-tuning to MoE models can reduce attack success rates through routing or expert dominance effects, rather than by directly repairing Safety-Critical Experts. To address this challenge, we propose RASA, a routing-aware expert-level alignment framework that explicitly repairs Safety-Critical Experts while preventing routing-based bypasses. RASA identifies experts disproportionately activated by successful jailbreaks, selectively fine-tunes only these experts under fixed routing, and subsequently enforces routing consistency with safety-aligned contexts. Across two representative MoE architectures and a diverse set of jailbreak attacks, RASA achieves near-perfect robustness, strong cross-attack generalization, and substantially reduced over-refusal, while preserving general capabilities on benchmarks such as MMLU, GSM8K, and TruthfulQA. Our results suggest that robust MoE safety alignment benefits from targeted expert repair rather than global parameter updates, offering a practical and architecture-preserving alternative to prior approaches.
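The expert-identification step described in this abstract can be illustrated with a small sketch. It is not the authors' procedure: the abstract only says that RASA finds experts "disproportionately activated by successful jailbreaks", so the comparison against a benign baseline, the ratio threshold, and the names `activation_rates` and `safety_critical_experts` are all assumptions made for illustration.

```python
from collections import Counter


def activation_rates(routing_traces, num_experts):
    """Fraction of routed tokens that each expert receives.

    routing_traces: list of lists of expert indices, one inner list per prompt
    (a hypothetical trace format chosen for this sketch).
    """
    counts = Counter(e for trace in routing_traces for e in trace)
    total = sum(counts.values())
    return [counts.get(e, 0) / total if total else 0.0 for e in range(num_experts)]


def safety_critical_experts(jailbreak_traces, benign_traces, num_experts,
                            ratio_threshold=2.0, eps=1e-6):
    """Flag experts whose activation rate on successful jailbreaks exceeds
    their rate on benign prompts by at least ratio_threshold (assumed criterion)."""
    jb = activation_rates(jailbreak_traces, num_experts)
    bn = activation_rates(benign_traces, num_experts)
    return [e for e in range(num_experts) if jb[e] / (bn[e] + eps) >= ratio_threshold]


# Toy routing traces for a 4-expert layer.
jailbreak_traces = [[0, 3, 3], [3, 3, 1]]
benign_traces = [[0, 1, 2], [2, 1, 0]]
flagged = safety_critical_experts(jailbreak_traces, benign_traces, num_experts=4)
```

In this toy data, expert 3 handles most jailbreak tokens and no benign tokens, so it is the only expert flagged; the flagged set is what a selective fine-tuning stage would then update.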

Orthogonal Hierarchical Decomposition for Structure-Aware Table Understanding with Large Language Models

Feb 02, 2026

Abstract:Complex tables with multi-level headers, merged cells and heterogeneous layouts pose persistent challenges for LLMs in both understanding and reasoning. Existing approaches typically rely on table linearization or normalized grid modeling. However, these representations struggle to explicitly capture hierarchical structures and cross-dimensional dependencies, which can lead to misalignment between structural semantics and textual representations for non-standard tables. To address this issue, we propose an Orthogonal Hierarchical Decomposition (OHD) framework that constructs structure-preserving input representations of complex tables for LLMs. OHD introduces an Orthogonal Tree Induction (OTI) method based on spatial-semantic co-constraints, which decomposes irregular tables into a column tree and a row tree to capture vertical and horizontal hierarchical dependencies, respectively. Building on this representation, we design a dual-pathway association protocol to symmetrically reconstruct the semantic lineage of each cell, and incorporate an LLM as a semantic arbitrator to align multi-level semantic information. We evaluate the OHD framework on two complex table question answering benchmarks, AITQA and HiTab. Experimental results show that OHD consistently outperforms existing representation paradigms across multiple evaluation metrics.

Enhancing Medical Large Vision-Language Models via Alignment Distillation

Dec 21, 2025

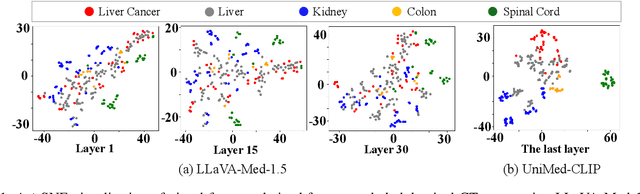

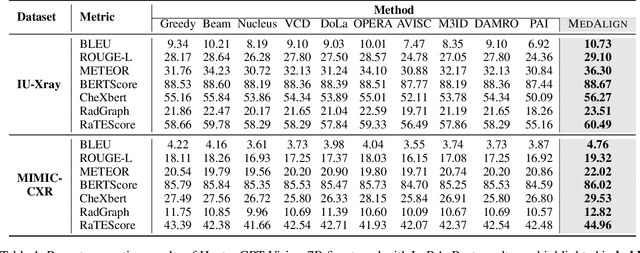

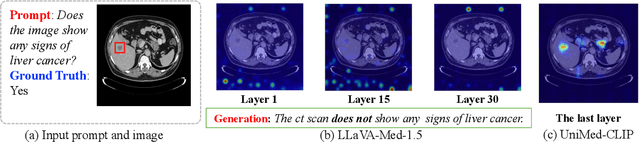

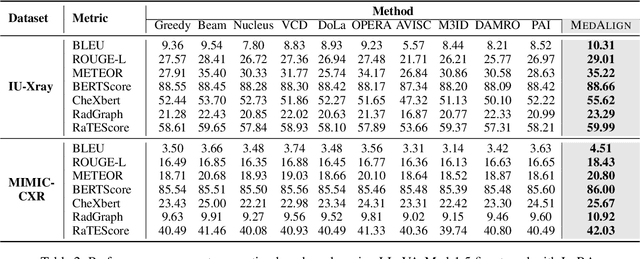

Abstract:Medical Large Vision-Language Models (Med-LVLMs) have shown promising results in clinical applications, but often suffer from hallucinated outputs due to misaligned visual understanding. In this work, we identify two fundamental limitations contributing to this issue: insufficient visual representation learning and poor visual attention alignment. To address these problems, we propose MEDALIGN, a simple, lightweight alignment distillation framework that transfers visual alignment knowledge from a domain-specific Contrastive Language-Image Pre-training (CLIP) model to Med-LVLMs. MEDALIGN introduces two distillation losses: a spatial-aware visual alignment loss based on visual token-level similarity structures, and an attention-aware distillation loss that guides attention toward diagnostically relevant regions. Extensive experiments on medical report generation and medical visual question answering (VQA) benchmarks show that MEDALIGN consistently improves both performance and interpretability, yielding more visually grounded outputs.

Synthetic Voices, Real Threats: Evaluating Large Text-to-Speech Models in Generating Harmful Audio

Nov 14, 2025

Abstract:Modern text-to-speech (TTS) systems, particularly those built on Large Audio-Language Models (LALMs), generate high-fidelity speech that faithfully reproduces input text and mimics specified speaker identities. While prior misuse studies have focused on speaker impersonation, this work explores a distinct content-centric threat: exploiting TTS systems to produce speech containing harmful content. Realizing such threats poses two core challenges: (1) LALM safety alignment frequently rejects harmful prompts, yet existing jailbreak attacks are ill-suited for TTS because these systems are designed to faithfully vocalize any input text, and (2) real-world deployment pipelines often employ input/output filters that block harmful text and audio. We present HARMGEN, a suite of five attacks organized into two families that address these challenges. The first family employs semantic obfuscation techniques (Concat, Shuffle) that conceal harmful content within text. The second leverages audio-modality exploits (Read, Spell, Phoneme) that inject harmful content through auxiliary audio channels while maintaining benign textual prompts. Through evaluation across five commercial LALM-based TTS systems and three datasets spanning two languages, we demonstrate that our attacks substantially reduce refusal rates and increase the toxicity of generated speech. We further assess both reactive countermeasures deployed by audio-streaming platforms and proactive defenses implemented by TTS providers. Our analysis reveals critical vulnerabilities: deepfake detectors underperform on high-fidelity audio; reactive moderation can be circumvented by adversarial perturbations; and proactive moderation detects 57-93% of attacks. Our work highlights a previously underexplored content-centric misuse vector for TTS and underscores the need for robust cross-modal safeguards throughout training and deployment.

Scale-Aware Relay and Scale-Adaptive Loss for Tiny Object Detection in Aerial Images

Nov 13, 2025

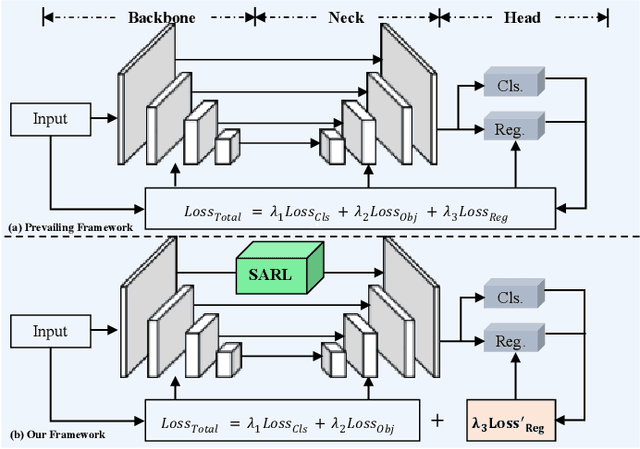

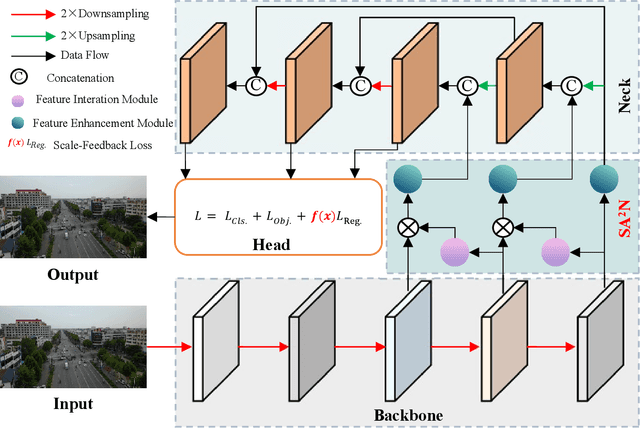

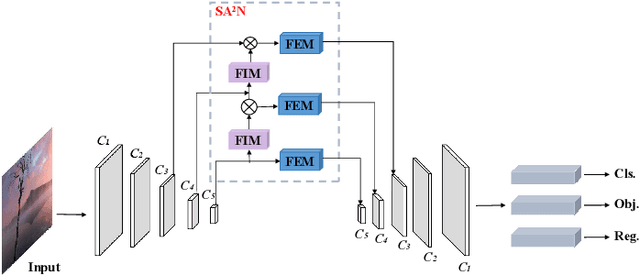

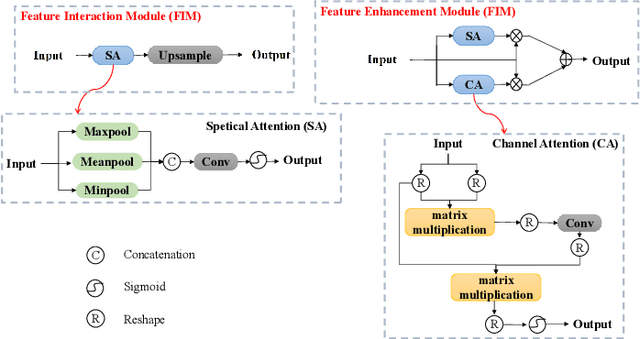

Abstract:Despite remarkable advances in object detection, modern detectors still struggle to detect tiny objects in aerial images. One key reason is that tiny objects carry limited features that are inevitably degraded or lost during long-distance network propagation. Another is that smaller objects receive disproportionately greater regression penalties than larger ones during training. To tackle these issues, we propose a Scale-Aware Relay Layer (SARL) and a Scale-Adaptive Loss (SAL) for tiny object detection, both of which are seamlessly compatible with the top-performing frameworks. Specifically, SARL employs cross-scale spatial-channel attention to progressively enrich the meaningful features of each layer and strengthen cross-layer feature sharing. SAL reshapes the vanilla IoU-based losses so as to dynamically assign lower weights to larger objects. This loss focuses training on tiny objects while reducing the influence on large objects. Extensive experiments are conducted on three benchmarks (i.e., AI-TOD, DOTA-v2.0 and VisDrone2019), and the results demonstrate that the proposed method boosts the generalization ability by 5.5% Average Precision (AP) when embedded in YOLOv5 (anchor-based) and YOLOX (anchor-free) baselines. Moreover, it also achieves robust performance with 29.0% AP on the real-world noisy dataset (i.e., AI-TOD-v2.0).
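The idea of down-weighting larger objects in an IoU-based loss can be sketched as follows. The abstract does not give SAL's actual formula, so the weighting function `1 / (1 + area / ref_area)`, the `ref_area` parameter, and the function names here are hypothetical choices that only illustrate the stated behaviour (lower weights for larger ground-truth boxes).

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def scale_weight(gt_box, ref_area=256.0):
    """Assumed weighting: decreases monotonically as ground-truth area grows."""
    x1, y1, x2, y2 = gt_box
    area = (x2 - x1) * (y2 - y1)
    return 1.0 / (1.0 + area / ref_area)


def scale_weighted_iou_loss(pred_box, gt_box, ref_area=256.0):
    """Vanilla IoU loss (1 - IoU) rescaled by the size-dependent weight."""
    return scale_weight(gt_box, ref_area) * (1.0 - iou(pred_box, gt_box))


# Two predictions with the same IoU (0.5) against a tiny and a large target.
tiny_loss = scale_weighted_iou_loss((0, 0, 8, 4), (0, 0, 8, 8))
large_loss = scale_weighted_iou_loss((0, 0, 64, 32), (0, 0, 64, 64))
```

Both predictions overlap their targets equally, yet the tiny object contributes the larger loss, which is the qualitative effect the abstract attributes to SAL.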

Beyond Plain Demos: A Demo-centric Anchoring Paradigm for In-Context Learning in Alzheimer's Disease Detection

Nov 10, 2025

Abstract:Detecting Alzheimer's disease (AD) from narrative transcripts challenges large language models (LLMs): pre-training rarely covers this out-of-distribution task, and all transcript demos describe the same scene, producing highly homogeneous contexts. These factors cripple both the model's built-in task knowledge (task cognition) and its ability to surface subtle, class-discriminative cues (contextual perception). Because cognition is fixed after pre-training, improving in-context learning (ICL) for AD detection hinges on enriching perception through better demonstration (demo) sets. We demonstrate that standard ICL quickly saturates, that its demos lack diversity (context width) and fail to convey fine-grained signals (context depth), and that although recent task vector (TV) approaches improve broad task adaptation by injecting TVs into the LLMs' hidden states (HSs), they are ill-suited for AD detection due to the mismatch of injection granularity, strength and position. To address these bottlenecks, we introduce DA4ICL, a demo-centric anchoring framework that jointly expands context width via Diverse and Contrastive Retrieval (DCR) and deepens each demo's signal via Projected Vector Anchoring (PVA) at every Transformer layer. Across three AD benchmarks, DA4ICL achieves large, stable gains over both ICL and TV baselines, charting a new paradigm for fine-grained, OOD and low-resource LLM adaptation.

Explicit Knowledge-Guided In-Context Learning for Early Detection of Alzheimer's Disease

Nov 09, 2025

Abstract:Detecting Alzheimer's Disease (AD) from narrative transcripts remains a challenging task for large language models (LLMs), particularly under out-of-distribution (OOD) and data-scarce conditions. While in-context learning (ICL) provides a parameter-efficient alternative to fine-tuning, existing ICL approaches often suffer from task recognition failure, suboptimal demonstration selection, and misalignment between label words and task objectives, issues that are amplified in clinical domains like AD detection. We propose Explicit Knowledge In-Context Learners (EK-ICL), a novel framework that integrates structured explicit knowledge to enhance reasoning stability and task alignment in ICL. EK-ICL incorporates three knowledge components: confidence scores derived from small language models (SLMs) to ground predictions in task-relevant patterns, parsing feature scores to capture structural differences and improve demo selection, and label word replacement to resolve semantic misalignment with LLM priors. In addition, EK-ICL employs a parsing-based retrieval strategy and ensemble prediction to mitigate the effects of semantic homogeneity in AD transcripts. Extensive experiments across three AD datasets demonstrate that EK-ICL significantly outperforms state-of-the-art fine-tuning and ICL baselines. Further analysis reveals that ICL performance in AD detection is highly sensitive to the alignment of label semantics and task-specific context, underscoring the importance of explicit knowledge in clinical reasoning under low-resource conditions.

Optical Link Tomography: First Field Trial and 4D Extension

Oct 10, 2025

Abstract:Optical link tomography (OLT) is a rapidly evolving field that allows the multi-span, end-to-end visualization of optical power along fiber links in multiple dimensions from network endpoints, solely by processing signals received at coherent receivers. This paper has two objectives: (1) to report the first field trial of OLT, using a commercial transponder under standard DWDM transmission, and (2) to extend its capability to visualize across 4D (distance, time, frequency, and polarization), allowing for locating and measuring multiple QoT degradation causes, including time-varying power anomalies, spectral anomalies, and excessive polarization dependent loss. We also address a critical aspect of OLT, i.e., its need for high fiber launch power, by improving power profile signal-to-noise ratio through averaging across all available dimensions. Consequently, multiple loss anomalies in a field-deployed link are observed even at launch power lower than the system-optimal level. The applications and use cases of OLT from network commissioning to provisioning and operation for current and near-term network scenarios are also discussed.

* 12 pages, 7 figures, accepted version for Journal of Lightwave Technology

Physics-informed deep operator network for traffic state estimation

Aug 18, 2025

Abstract:Traffic state estimation (TSE) fundamentally involves solving high-dimensional spatiotemporal partial differential equations (PDEs) governing traffic flow dynamics from limited, noisy measurements. While Physics-Informed Neural Networks (PINNs) enforce PDE constraints point-wise, this paper adopts a physics-informed deep operator network (PI-DeepONet) framework that reformulates TSE as an operator learning problem. Our approach trains a parameterized neural operator that maps sparse input data to the full spatiotemporal traffic state field, governed by the traffic flow conservation law. Crucially, unlike PINNs that enforce PDE constraints point-wise, PI-DeepONet integrates the traffic flow conservation model and the fundamental diagram directly into the operator learning process, ensuring physical consistency while capturing congestion propagation, spatial correlations, and temporal evolution. Experiments on the NGSIM dataset demonstrate superior performance over state-of-the-art baselines. Further analysis reveals insights into optimal function generation strategies and branch network complexity. Additionally, the impact of input function generation methods and the number of functions on model performance is explored, highlighting the robustness and efficacy of the proposed framework.
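For readers unfamiliar with the physics referred to here, the traffic flow conservation law is commonly written in the Lighthill-Whitham-Richards (LWR) form shown below, closed by a fundamental diagram that relates speed to density. The abstract does not say which fundamental diagram the paper uses, so the Greenshields relation is given only as a standard illustrative choice, and the symbols ($\rho$ for density, $q$ for flow, $v_f$ for free-flow speed, $\rho_{\max}$ for jam density) are assumed notation.

```latex
% Conservation of vehicles (LWR model)
\frac{\partial \rho(x,t)}{\partial t} + \frac{\partial q(x,t)}{\partial x} = 0,
\qquad q = \rho \, v(\rho)

% Greenshields fundamental diagram (illustrative assumption)
v(\rho) = v_f \left( 1 - \frac{\rho}{\rho_{\max}} \right)
```

A physics-informed model of this kind typically penalizes the residual of the first equation on the predicted density field; how PI-DeepONet weights that residual is not stated in the abstract.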

Precise Zero-Shot Pointwise Ranking with LLMs through Post-Aggregated Global Context Information

Jun 12, 2025

Abstract:Recent advancements have successfully harnessed the power of Large Language Models (LLMs) for zero-shot document ranking, exploring a variety of prompting strategies. Comparative approaches like pairwise and listwise achieve high effectiveness but are computationally intensive and thus less practical for larger-scale applications. Scoring-based pointwise approaches exhibit superior efficiency by independently and simultaneously generating the relevance scores for each candidate document. However, this independence ignores critical comparative insights between documents, resulting in inconsistent scoring and suboptimal performance. In this paper, we aim to improve the effectiveness of pointwise methods while preserving their efficiency through two key innovations: (1) We propose a novel Global-Consistent Comparative Pointwise Ranking (GCCP) strategy that incorporates global reference comparisons between each candidate and an anchor document to generate contrastive relevance scores. We strategically design the anchor document as a query-focused summary of pseudo-relevant candidates, which serves as an effective reference point by capturing the global context for document comparison. (2) These contrastive relevance scores can be efficiently Post-Aggregated with existing pointwise methods, seamlessly integrating essential Global Context information in a training-free manner (PAGC). Extensive experiments on the TREC DL and BEIR benchmarks demonstrate that our approach significantly outperforms previous pointwise methods while maintaining comparable efficiency. Our method also achieves competitive performance against comparative methods that require substantially more computational resources. Further analyses validate the efficacy of our anchor construction strategy.
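The post-aggregation step described in point (2) can be pictured with a minimal sketch. The abstract does not specify how the contrastive scores are combined with existing pointwise scores, so the linear interpolation, the `alpha` parameter, and the function names below are assumptions for illustration, and the scores are made-up toy values rather than LLM outputs.

```python
def post_aggregate(pointwise, contrastive, alpha=0.5):
    """Blend per-document pointwise scores with anchor-relative contrastive
    scores by linear interpolation (an assumed aggregation rule)."""
    return {
        doc: (1.0 - alpha) * pointwise[doc] + alpha * contrastive[doc]
        for doc in pointwise
    }


def rank(scores):
    """Document ids sorted by descending score."""
    return sorted(scores, key=scores.get, reverse=True)


# Toy scores: d1 and d2 tie under independent pointwise scoring.
pointwise = {"d1": 0.6, "d2": 0.6, "d3": 0.2}
# Hypothetical scores from comparing each document against the anchor.
contrastive = {"d1": 0.3, "d2": 0.9, "d3": 0.5}

final_ranking = rank(post_aggregate(pointwise, contrastive))
```

Because each document is still scored independently of the other candidates (only against the shared anchor), this kind of aggregation keeps the per-document cost of pointwise ranking while letting the anchor comparison break ties such as the one between d1 and d2.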
