Fu Zhang

MaRS Laboratory, Dept. of Mechanical Engineering, The University of Hong Kong

Sparse Video Generation Propels Real-World Beyond-the-View Vision-Language Navigation

Feb 05, 2026

Abstract: Why must vision-language navigation be bound to detailed and verbose language instructions? While such details ease decision-making, they fundamentally contradict the goal of navigation in the real world. Ideally, agents should possess the autonomy to navigate in unknown environments guided solely by simple and high-level intents. Realizing this ambition introduces a formidable challenge: Beyond-the-View Navigation (BVN), where agents must locate distant, unseen targets without dense and step-by-step guidance. Existing large language model (LLM)-based methods, though adept at following dense instructions, often suffer from short-sighted behaviors due to their reliance on short-horizon supervision. Simply extending the supervision horizon, however, destabilizes LLM training. In this work, we identify that video generation models inherently benefit from long-horizon supervision to align with language instructions, rendering them uniquely suitable for BVN tasks. Capitalizing on this insight, we propose introducing the video generation model into this field for the first time. Yet, the prohibitive latency for generating videos spanning tens of seconds makes real-world deployment impractical. To bridge this gap, we propose SparseVideoNav, achieving sub-second trajectory inference guided by a generated sparse future spanning a 20-second horizon. This yields a remarkable 27x speed-up compared to the unoptimized counterpart. Extensive real-world zero-shot experiments demonstrate that SparseVideoNav achieves 2.5x the success rate of state-of-the-art LLM baselines on BVN tasks and marks the first realization of such capability in challenging night scenes.

6DAttack: Backdoor Attacks in the 6DoF Pose Estimation

Dec 22, 2025

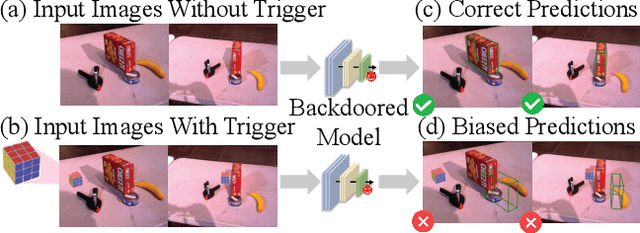

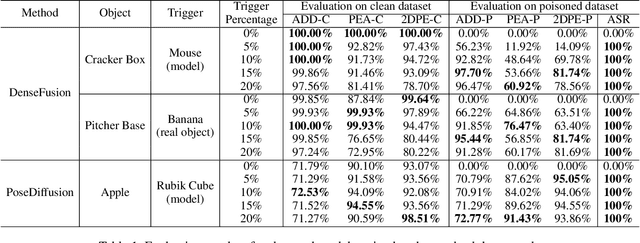

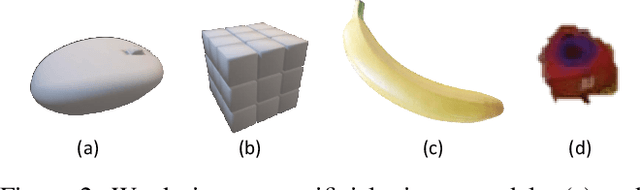

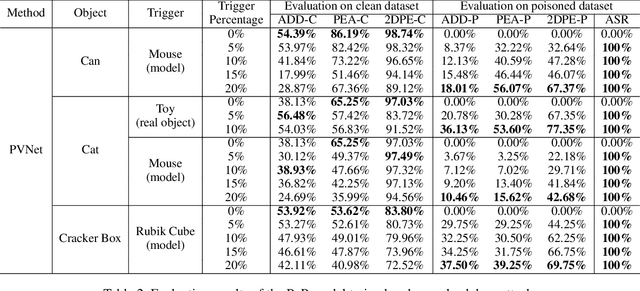

Abstract: Deep learning advances have enabled accurate six-degree-of-freedom (6DoF) object pose estimation, widely used in robotics, AR/VR, and autonomous systems. However, backdoor attacks pose significant security risks. While most research focuses on 2D vision, 6DoF pose estimation remains largely unexplored. Unlike traditional backdoors that only change classes, 6DoF attacks must control continuous parameters like translation and rotation, rendering 2D methods inapplicable. We propose 6DAttack, a framework using 3D object triggers to induce controlled erroneous poses while maintaining normal behavior. Evaluations on PVNet, DenseFusion, and PoseDiffusion across LINEMOD, YCB-Video, and CO3D show high attack success rates (ASRs) without compromising clean performance. Backdoored models achieve up to 100% clean ADD accuracy and 100% ASR, with triggered samples reaching 97.70% ADD-P. Furthermore, a representative defense remains ineffective. Our findings reveal a serious, underexplored threat to 6DoF pose estimation.

FAST-Calib: LiDAR-Camera Extrinsic Calibration in One Second

Jul 23, 2025

Abstract: This paper proposes FAST-Calib, a fast and user-friendly LiDAR-camera extrinsic calibration tool based on a custom-made 3D target. FAST-Calib supports both mechanical and solid-state LiDARs by leveraging an efficient and reliable edge extraction algorithm that is agnostic to LiDAR scan patterns. It also compensates for edge dilation artifacts caused by LiDAR spot spread through ellipse fitting, and supports joint optimization across multiple scenes. We validate FAST-Calib on three LiDAR models (Ouster, Avia, and Mid360), each paired with a wide-angle camera. Experimental results demonstrate superior accuracy and robustness compared to existing methods. With point-to-point registration errors consistently below 6.5 mm and total processing time under 0.7 s, FAST-Calib provides an efficient, accurate, and target-based automatic calibration pipeline. We have open-sourced our code and dataset on GitHub to benefit the robotics community.

Mesh-Learner: Texturing Mesh with Spherical Harmonics

Apr 28, 2025

Abstract: In this paper, we present a 3D reconstruction and rendering framework termed Mesh-Learner that is natively compatible with traditional rasterization pipelines. It integrates mesh and spherical harmonic (SH) texture (i.e., texture filled with SH coefficients) into the learning process to learn each mesh's view-dependent radiance end-to-end. Images are rendered by interpolating surrounding SH Texels at each pixel's sampling point using a novel interpolation method. Conversely, gradients from each pixel are back-propagated to the related SH Texels in SH textures. Mesh-Learner exploits graphics features of the rasterization pipeline (texture sampling, deferred rendering) to render, which makes Mesh-Learner naturally compatible with tools (e.g., Blender) and tasks (e.g., 3D reconstruction, scene rendering, reinforcement learning for robotics) that are based on rasterization pipelines. Our system can train vast, unlimited scenes because we transfer only the SH textures within the frustum to the GPU for training. At other times, the SH textures are stored in CPU RAM, which results in moderate GPU memory usage. The rendering results on interpolation and extrapolation sequences in the Replica and FAST-LIVO2 datasets achieve state-of-the-art performance compared to existing methods (e.g., 3D Gaussian Splatting and M2-Mapping). To benefit society, the code will be available at https://github.com/hku-mars/Mesh-Learner.

Flying through cluttered and dynamic environments with LiDAR

Apr 24, 2025

Abstract: Navigating unmanned aerial vehicles (UAVs) through cluttered and dynamic environments remains a significant challenge, particularly when dealing with fast-moving or suddenly appearing obstacles. This paper introduces a complete LiDAR-based system designed to enable UAVs to avoid various moving obstacles in complex environments. Benefiting from the high computational efficiency of perception and planning, the system can operate in real time using onboard computing resources with low latency. For dynamic environment perception, we have integrated our previous work, M-detector, into the system. M-detector ensures that moving objects of different sizes, colors, and types are reliably detected. For dynamic environment planning, we incorporate dynamic object predictions into the integrated planning and control (IPC) framework, namely DynIPC. This integration allows the UAV to utilize predictions about dynamic obstacles to effectively evade them. We validate our proposed system through both simulations and real-world experiments. In simulation tests, our system outperforms state-of-the-art baselines across several metrics, including success rate, time consumption, average flight time, and maximum velocity. In real-world trials, our system successfully navigates through forests, avoiding moving obstacles along its path.

FERMI: Flexible Radio Mapping with a Hybrid Propagation Model and Scalable Autonomous Data Collection

Apr 21, 2025

Abstract: Communication is fundamental for multi-robot collaboration, with accurate radio mapping playing a crucial role in predicting signal strength between robots. However, modeling radio signal propagation in large and occluded environments is challenging due to complex interactions between signals and obstacles. Existing methods face two key limitations: they struggle to predict signal strength for transmitter-receiver pairs not present in the training set, while also requiring extensive manual data collection for modeling, making them impractical for large, obstacle-rich scenarios. To overcome these limitations, we propose FERMI, a flexible radio mapping framework. FERMI combines physics-based modeling of direct signal paths with a neural network to capture environmental interactions with radio signals. This hybrid model learns radio signal propagation more efficiently, requiring only sparse training data. Additionally, FERMI introduces a scalable planning method for autonomous data collection using a multi-robot team. By increasing parallelism in data collection and minimizing robot travel costs between regions, overall data collection efficiency is significantly improved. Experiments in both simulation and real-world scenarios demonstrate that FERMI enables accurate signal prediction and generalizes well to unseen positions in complex environments. It also supports fully autonomous data collection and scales to different team sizes, offering a flexible solution for creating radio maps. Our code is open-sourced at https://github.com/ymLuo1214/Flexible-Radio-Mapping.

Efficient Swept Volume-Based Trajectory Generation for Arbitrary-Shaped Ground Robot Navigation

Apr 10, 2025

Abstract: Navigating an arbitrary-shaped ground robot safely in cluttered environments remains a challenging problem. Existing trajectory planners that account for the robot's physical geometry suffer severely from intractable runtime. To achieve both computational efficiency and Continuous Collision Avoidance (CCA) in arbitrary-shaped ground robot planning, we propose a novel coarse-to-fine navigation framework that significantly accelerates planning. In the first stage, a sampling-based method selectively generates distinct topological paths that guarantee a minimum inflated margin. In the second stage, a geometry-aware front-end strategy is designed to discretize these topologies into full-state robot motion sequences while concurrently partitioning the paths into SE(2) sub-problems and simpler R^2 sub-problems for back-end optimization. In the final stage, an SVSDF-based optimizer generates trajectories tailored to these sub-problems and seamlessly splices them into a continuous final motion plan. Extensive benchmark comparisons show that the proposed method is one to several orders of magnitude faster than the cutting-edge methods in runtime while maintaining a high planning success rate and ensuring CCA.

LiDAR-based Quadrotor Autonomous Inspection System in Cluttered Environments

Mar 29, 2025

Abstract: In recent years, autonomous unmanned aerial vehicle (UAV) technology has seen rapid advancements, significantly improving operational efficiency and mitigating risks associated with manual tasks in domains such as industrial inspection, agricultural monitoring, and search-and-rescue missions. Despite these developments, existing UAV inspection systems encounter two critical challenges: limited reliability in complex, unstructured, and GNSS-denied environments, and a pronounced dependency on skilled operators. To overcome these limitations, this study presents a LiDAR-based UAV inspection system employing a dual-phase workflow: human-in-the-loop inspection and autonomous inspection. During the human-in-the-loop phase, untrained pilots are supported by autonomous obstacle avoidance, enabling them to generate 3D maps, specify inspection points, and schedule tasks. Inspection points are then optimized using the Traveling Salesman Problem (TSP) to create efficient task sequences. In the autonomous phase, the quadrotor autonomously executes the planned tasks, ensuring safe and efficient data acquisition. Comprehensive field experiments conducted in various environments, including slopes, landslides, agricultural fields, factories, and forests, confirm the system's reliability and flexibility. Results reveal significant enhancements in inspection efficiency, with autonomous operations reducing trajectory length by up to 40% and flight time by 57% compared to human-in-the-loop operations. These findings underscore the potential of the proposed system to enhance UAV-based inspections in safety-critical and resource-constrained scenarios.

M2UD: A Multi-model, Multi-scenario, Uneven-terrain Dataset for Ground Robot with Localization and Mapping Evaluation

Mar 16, 2025

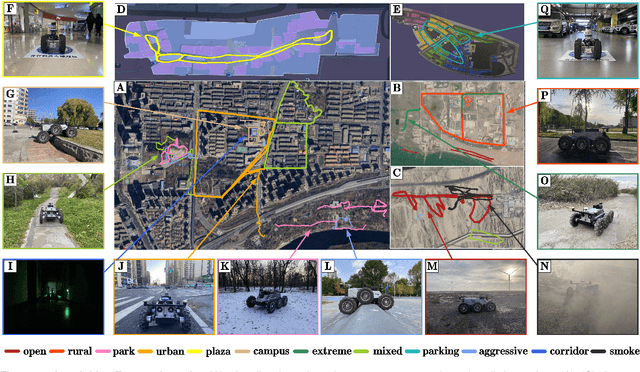

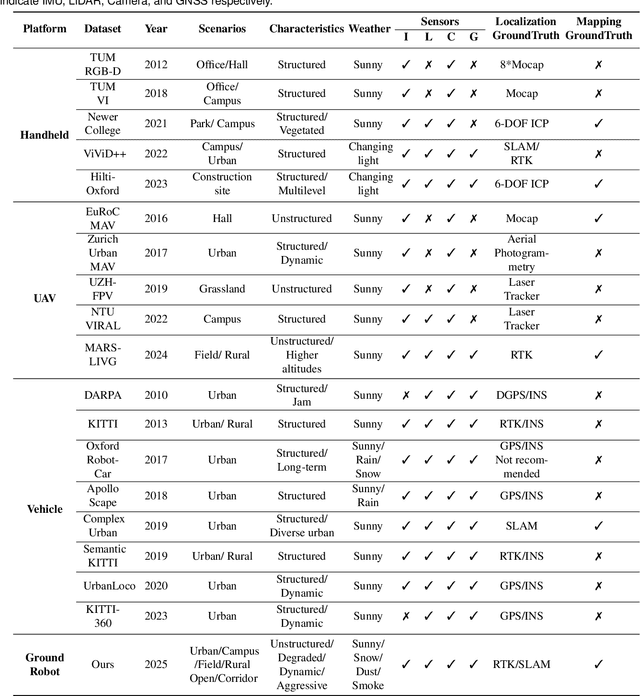

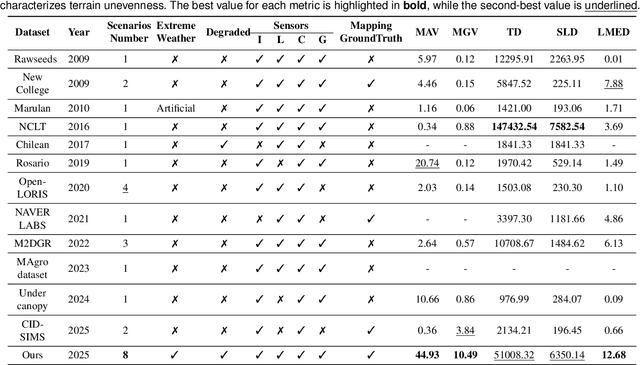

Abstract: Ground robots play a crucial role in inspection, exploration, rescue, and other applications. In recent years, advancements in LiDAR technology have made sensors more accurate, lightweight, and cost-effective. Therefore, researchers increasingly integrate sensors for SLAM studies, providing robust technical support for ground robots and expanding their application domains. Public datasets are essential for advancing SLAM technology. However, existing datasets for ground robots are typically restricted to flat-terrain motion with 3 DOF and cover only a limited range of scenarios. Although handheld devices and UAVs exhibit richer and more aggressive movements, their datasets are predominantly confined to small-scale environments due to endurance limitations. To fill these gaps, we introduce M2UD, a multi-modal, multi-scenario, uneven-terrain SLAM dataset for ground robots. This dataset contains a diverse range of highly challenging environments, including cities, open fields, long corridors, and mixed scenarios. Additionally, it presents extreme weather conditions. The aggressive motion and degradation characteristics of this dataset not only pose challenges for testing and evaluating existing SLAM methods but also advance the development of more advanced SLAM algorithms. To benchmark SLAM algorithms, M2UD provides smoothed ground truth localization data obtained via RTK and introduces a novel localization evaluation metric that considers both accuracy and efficiency. Additionally, we utilize a high-precision laser scanner to acquire ground truth maps of two representative scenes, facilitating the development and evaluation of mapping algorithms. We select 12 localization sequences and 2 mapping sequences to evaluate several classical SLAM algorithms, verifying the usability of the dataset. To enhance usability, the dataset is accompanied by a suite of development kits.

GS-SDF: LiDAR-Augmented Gaussian Splatting and Neural SDF for Geometrically Consistent Rendering and Reconstruction

Mar 13, 2025

Abstract: Digital twins are fundamental to the development of autonomous driving and embodied artificial intelligence. However, achieving high-granularity surface reconstruction and high-fidelity rendering remains a challenge. Gaussian splatting offers efficient photorealistic rendering but struggles with geometric inconsistencies due to fragmented primitives and sparse observational data in robotics applications. Existing regularization methods, which rely on render-derived constraints, often fail in complex environments. Moreover, effectively integrating sparse LiDAR data with Gaussian splatting remains challenging. We propose a unified LiDAR-visual system that synergizes Gaussian splatting with a neural signed distance field. The accurate LiDAR point clouds enable a trained neural signed distance field to offer a manifold geometry field. This motivates us to offer an SDF-based Gaussian initialization for physically grounded primitive placement and a comprehensive geometric regularization for geometrically consistent rendering and reconstruction. Experiments demonstrate superior reconstruction accuracy and rendering quality across diverse trajectories. To benefit the community, the code will be released at https://github.com/hku-mars/GS-SDF.
