Huajie Wu

Flying through cluttered and dynamic environments with LiDAR

Apr 24, 2025

Abstract: Navigating unmanned aerial vehicles (UAVs) through cluttered and dynamic environments remains a significant challenge, particularly when dealing with fast-moving or suddenly appearing obstacles. This paper introduces a complete LiDAR-based system designed to enable UAVs to avoid various moving obstacles in complex environments. Benefiting from the high computational efficiency of its perception and planning modules, the system operates in real time with low latency using only onboard computing resources. For dynamic environment perception, we have integrated our previous work, M-detector, into the system. M-detector ensures that moving objects of different sizes, colors, and types are reliably detected. For dynamic environment planning, we incorporate dynamic object predictions into the integrated planning and control (IPC) framework, yielding DynIPC. This integration allows the UAV to use predictions about dynamic obstacles to evade them effectively. We validate the proposed system through both simulations and real-world experiments. In simulation tests, our system outperforms state-of-the-art baselines across several metrics, including success rate, time consumption, average flight time, and maximum velocity. In real-world trials, our system successfully navigates through forests, avoiding moving obstacles along its path.
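The abstract does not state which motion model DynIPC uses for its obstacle predictions. As a rough illustration of what "using predictions about dynamic obstacles" can mean, the sketch below assumes a constant-velocity model and checks the clearance between a candidate UAV motion and a predicted obstacle motion. The function names `predict_positions` and `min_clearance`, the obstacle radius, and all numbers are hypothetical and are not taken from the paper.

```python
import math

def predict_positions(position, velocity, horizon_s, dt):
    """Constant-velocity rollout (an assumption): p(t) = p0 + v * t
    sampled at t = dt, 2*dt, ..., horizon_s."""
    steps = int(round(horizon_s / dt))
    return [
        tuple(p + v * dt * k for p, v in zip(position, velocity))
        for k in range(1, steps + 1)
    ]

def min_clearance(uav_path, obstacle_path, obstacle_radius):
    """Smallest gap between the UAV and the obstacle surface over
    time-aligned samples; a negative value means a predicted collision."""
    closest = min(math.dist(u, o) for u, o in zip(uav_path, obstacle_path))
    return closest - obstacle_radius

# Hypothetical scenario: an obstacle flies head-on toward the UAV.
obstacle = predict_positions((4.0, 0.0, 1.0), (-1.0, 0.0, 0.0), horizon_s=2.0, dt=0.5)
straight = predict_positions((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), horizon_s=2.0, dt=0.5)
sidestep = predict_positions((0.0, 0.0, 1.0), (1.0, 1.0, 0.0), horizon_s=2.0, dt=0.5)

print(min_clearance(straight, obstacle, obstacle_radius=0.3))  # negative: collision predicted
print(min_clearance(sidestep, obstacle, obstacle_radius=0.3))  # positive: path stays clear
```

A planner could reject or penalize candidate motions whose predicted clearance falls below a safety margin; how DynIPC actually encodes this inside its planning and control formulation is not described in the abstract.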

LiDAR-based Quadrotor Autonomous Inspection System in Cluttered Environments

Mar 29, 2025

Abstract: In recent years, autonomous unmanned aerial vehicle (UAV) technology has advanced rapidly, significantly improving operational efficiency and mitigating risks associated with manual tasks in domains such as industrial inspection, agricultural monitoring, and search-and-rescue missions. Despite these developments, existing UAV inspection systems face two critical challenges: limited reliability in complex, unstructured, and GNSS-denied environments, and a pronounced dependency on skilled operators. To overcome these limitations, this study presents a LiDAR-based UAV inspection system with a dual-phase workflow: human-in-the-loop inspection and autonomous inspection. During the human-in-the-loop phase, untrained pilots are supported by autonomous obstacle avoidance, enabling them to generate 3D maps, specify inspection points, and schedule tasks. The visiting order of the inspection points is then optimized by solving a Traveling Salesman Problem (TSP) to create efficient task sequences. In the autonomous phase, the quadrotor executes the planned tasks on its own, ensuring safe and efficient data acquisition. Comprehensive field experiments conducted in various environments, including slopes, landslides, agricultural fields, factories, and forests, confirm the system's reliability and flexibility. Results reveal significant gains in inspection efficiency, with autonomous operations reducing trajectory length by up to 40% and flight time by 57% compared to human-in-the-loop operations. These findings underscore the potential of the proposed system to enhance UAV-based inspections in safety-critical and resource-constrained scenarios.
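The abstract does not say which TSP solver the system uses. To illustrate how ordering inspection points as a TSP can shorten a route, here is a minimal sketch that builds a greedy nearest-neighbor order and then refines it with 2-opt segment reversals. Both heuristics are stand-ins chosen for brevity, and the point coordinates and function names are hypothetical.

```python
import math

def tour_length(points, order):
    """Length of the open path that visits points in the given order."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(order, order[1:]))

def nearest_neighbor_order(points, start=0):
    """Greedy order: from the current point, fly to the closest unvisited one."""
    unvisited = set(range(len(points))) - {start}
    order = [start]
    while unvisited:
        last = points[order[-1]]
        nxt = min(unvisited, key=lambda i: math.dist(last, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order

def two_opt(points, order):
    """Reverse segments while doing so shortens the path; the start stays fixed."""
    best = list(order)
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(points, candidate) < tour_length(points, best) - 1e-12:
                    best, improved = candidate, True
    return best

# Hypothetical inspection points (x, y, z) in meters; index 0 is the take-off point.
inspection_points = [
    (0.0, 0.0, 2.0), (10.0, 0.0, 2.0), (0.0, 1.0, 2.0),
    (10.0, 1.0, 3.0), (5.0, 5.0, 4.0),
]
greedy = nearest_neighbor_order(inspection_points)
refined = two_opt(inspection_points, greedy)
print(greedy, tour_length(inspection_points, greedy))
print(refined, tour_length(inspection_points, refined))
```

Straight-line distance is used as the edge cost here for simplicity. In a cluttered environment the cost between two inspection points would more plausibly be the length of a collision-free path through the 3D map, which is an assumption about the system rather than something the abstract states.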

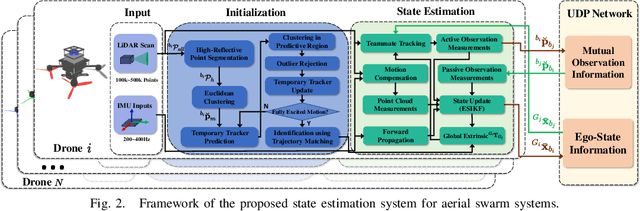

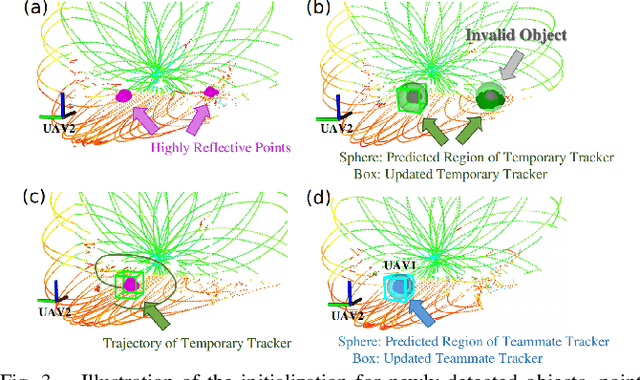

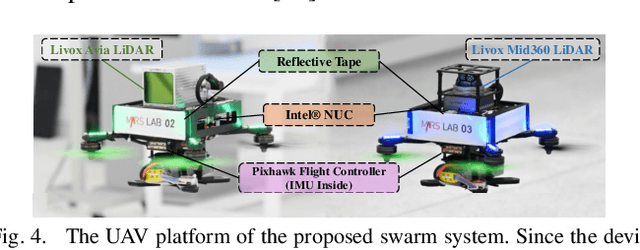

Decentralized LiDAR-inertial Swarm Odometry

Sep 14, 2022

Abstract: Accurate self and relative state estimation is a critical precondition for swarm tasks such as collaborative autonomous exploration, target tracking, and search and rescue. This paper proposes a fully decentralized state estimation method for aerial swarm systems, in which each drone performs precise ego-state estimation, exchanges ego-state and mutual observation information over wireless communication, and estimates its relative state with respect to (w.r.t.) the other UAVs, all in real time and based only on LiDAR-inertial measurements. A novel 3D LiDAR-based drone detection, identification, and tracking method is proposed to obtain observations of teammate drones. The mutual observation measurements are then tightly coupled with IMU and LiDAR measurements to jointly estimate the ego-state and relative state accurately in real time. Extensive real-world experiments show broad adaptability to complicated scenarios, including GPS-denied scenes and scenes that are degenerate for cameras (dark night) or LiDAR (facing a single wall). Compared against ground truth provided by a motion capture system, the results show centimeter-level localization accuracy, which outperforms other state-of-the-art LiDAR-inertial odometry methods for single-UAV systems.
