Shiliang Shao

M2UD: A Multi-model, Multi-scenario, Uneven-terrain Dataset for Ground Robot with Localization and Mapping Evaluation

Mar 16, 2025

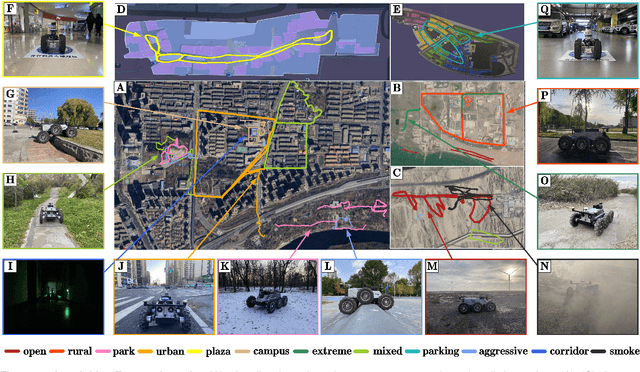

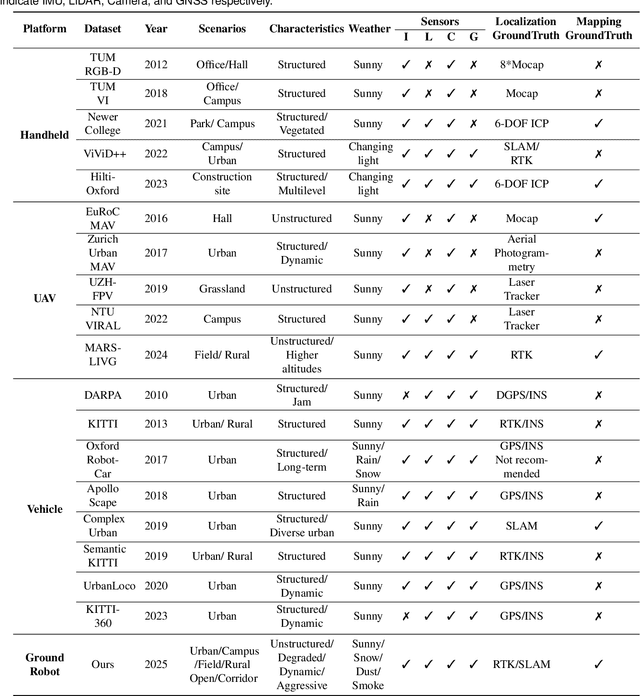

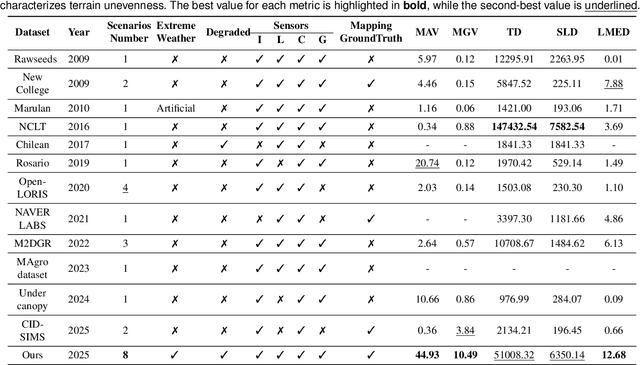

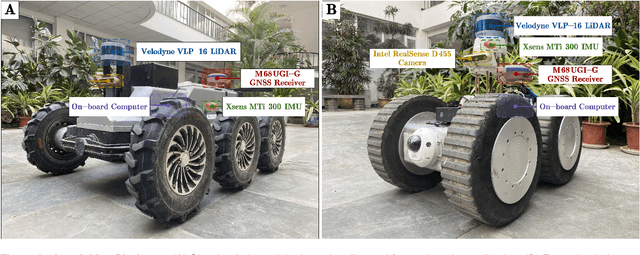

Abstract: Ground robots play a crucial role in inspection, exploration, rescue, and other applications. In recent years, advancements in LiDAR technology have made sensors more accurate, lightweight, and cost-effective. Therefore, researchers increasingly integrate sensors for SLAM studies, providing robust technical support for ground robots and expanding their application domains. Public datasets are essential for advancing SLAM technology. However, existing datasets for ground robots are typically restricted to flat-terrain motion with 3 DOF and cover only a limited range of scenarios. Although handheld devices and UAVs exhibit richer and more aggressive movements, their datasets are predominantly confined to small-scale environments due to endurance limitations. To fill this gap, we introduce M2UD, a multi-modal, multi-scenario, uneven-terrain SLAM dataset for ground robots. This dataset contains a diverse range of highly challenging environments, including cities, open fields, long corridors, and mixed scenarios. Additionally, it includes extreme weather conditions. The aggressive motion and degradation characteristics of this dataset not only pose challenges for testing and evaluating existing SLAM methods but also encourage the development of more advanced SLAM algorithms. To benchmark SLAM algorithms, M2UD provides smoothed ground truth localization data obtained via RTK and introduces a novel localization evaluation metric that considers both accuracy and efficiency. Additionally, we utilize a high-precision laser scanner to acquire ground truth maps of two representative scenes, facilitating the development and evaluation of mapping algorithms. We select 12 localization sequences and 2 mapping sequences to evaluate several classical SLAM algorithms, verifying the usability of the dataset. To enhance usability, the dataset is accompanied by a suite of development kits.

TRLO: An Efficient LiDAR Odometry with 3D Dynamic Object Tracking and Removal

Oct 17, 2024

Abstract: Simultaneous state estimation and mapping is an essential capability for mobile robots working in dynamic urban environments. The majority of existing SLAM solutions rely heavily on a primarily static assumption. However, due to the presence of moving vehicles and pedestrians, this assumption does not always hold, leading to decreased localization accuracy and distorted maps. To address this challenge, we propose TRLO, a dynamic LiDAR odometry that efficiently improves the accuracy of state estimation and generates a cleaner point cloud map. To efficiently detect dynamic objects in the surrounding environment, a deep learning-based method is applied, generating detection bounding boxes. We then design a 3D multi-object tracker based on an Unscented Kalman Filter (UKF) and a nearest neighbor (NN) strategy to reliably identify and remove dynamic objects. Subsequently, a fast two-stage iterative nearest point solver is employed to solve the state estimation using the cleaned static point cloud. In addition, a novel hash-based keyframe database management scheme is proposed for fast keyframe lookup. Furthermore, all the detected object bounding boxes are leveraged to impose a posture consistency constraint that further refines the final state estimation. Extensive evaluations and ablation studies conducted on the KITTI and UrbanLoco datasets demonstrate that our approach not only achieves more accurate state estimation but also generates cleaner maps compared with baselines.
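The removal step mentioned in the abstract, discarding LiDAR points that belong to tracked dynamic objects, can be illustrated with a minimal sketch. This is not the TRLO implementation: it assumes axis-aligned boxes in the sensor frame, and the `Box` layout and the names `inside` and `remove_dynamic_points` are hypothetical choices made for illustration. Real detectors typically output oriented boxes, which would need a rotation into the box frame before the containment test.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
# Hypothetical box layout (assumption): (x_min, y_min, z_min, x_max, y_max, z_max)
Box = Tuple[float, float, float, float, float, float]


def inside(point: Point, box: Box) -> bool:
    """Return True if the point lies within the axis-aligned box (bounds inclusive)."""
    x, y, z = point
    return (box[0] <= x <= box[3]
            and box[1] <= y <= box[4]
            and box[2] <= z <= box[5])


def remove_dynamic_points(points: Sequence[Point],
                          dynamic_boxes: Sequence[Box]) -> List[Point]:
    """Keep only the points that fall outside every box flagged as dynamic."""
    return [p for p in points
            if not any(inside(p, b) for b in dynamic_boxes)]


# Toy scan: the middle point falls inside the single dynamic box.
scan = [(0.0, 0.0, 0.0), (5.0, 1.0, 0.5), (10.0, -2.0, 0.2)]
boxes = [(4.0, 0.0, 0.0, 6.0, 2.0, 2.0)]
static_scan = remove_dynamic_points(scan, boxes)
```

The check is linear in points times boxes, which is adequate for a sketch; a practical pipeline would likely crop with a spatial index or a vectorized mask.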

CAD-Mesher: A Convenient, Accurate, Dense Mesh-based Mapping Module in SLAM for Dynamic Environments

Aug 12, 2024

Abstract: Most LiDAR odometry and SLAM systems construct maps as point clouds, which are discrete and sparse when zoomed in, making them not directly suitable for navigation. Mesh maps are a dense and continuous map format with low memory consumption that can approximate complex structures with simple elements, and they have attracted significant attention from researchers in recent years. However, most implementations operate under a static environment assumption. In practice, moving objects cause ghosting, potentially degrading the quality of meshing. To address these issues, we propose a plug-and-play meshing module that adapts to dynamic environments and can easily integrate with various LiDAR odometry systems to improve their pose estimation accuracy. In our meshing module, a novel two-stage coarse-to-fine dynamic removal method is designed to effectively filter dynamic objects, generating consistent, accurate, and dense mesh maps. To the best of our knowledge, this is the first mesh construction method with explicit dynamic removal. Additionally, to suit the Gaussian process used in mesh construction, sliding window-based keyframe aggregation and adaptive downsampling strategies are used to ensure the uniformity of the point cloud. We evaluate the localization and mapping accuracy on five publicly available datasets. Both qualitative and quantitative results demonstrate the superiority of our method compared with state-of-the-art algorithms. The code and introduction video are publicly available at https://yaepiii.github.io/CAD-Mesher/.
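To make the idea of downsampling for point cloud uniformity concrete, here is a minimal sketch of plain voxel-grid downsampling, where every occupied voxel is replaced by the centroid of its points. It is a stand-in for illustration only: the abstract describes an adaptive strategy whose details are not given here, whereas this sketch uses a fixed `voxel_size`, and the function name `voxel_downsample` is hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]


def voxel_downsample(points: Sequence[Point], voxel_size: float) -> List[Point]:
    """Replace all points that share a voxel with their centroid.

    Fixed-size voxel grid (assumption); not the adaptive scheme from the paper.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")

    # Group points by the integer index of the voxel that contains them.
    buckets: Dict[Tuple[int, int, int], List[Point]] = defaultdict(list)
    for p in points:
        key = (math.floor(p[0] / voxel_size),
               math.floor(p[1] / voxel_size),
               math.floor(p[2] / voxel_size))
        buckets[key].append(p)

    # One centroid per occupied voxel, in order of first occurrence.
    centroids: List[Point] = []
    for pts in buckets.values():
        n = len(pts)
        centroids.append((sum(p[0] for p in pts) / n,
                          sum(p[1] for p in pts) / n,
                          sum(p[2] for p in pts) / n))
    return centroids


# The first two points share a voxel and are merged; the third stays alone.
cloud = [(0.25, 0.25, 0.25), (0.75, 0.75, 0.75), (1.5, 0.0, 0.0)]
downsampled = voxel_downsample(cloud, voxel_size=1.0)
```

An adaptive variant would presumably adjust `voxel_size` per scan, for example based on point density, but that choice is left open here.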
