Lingwei Zhang

Shared Object Manipulation with a Team of Collaborative Quadrupeds

Oct 01, 2025

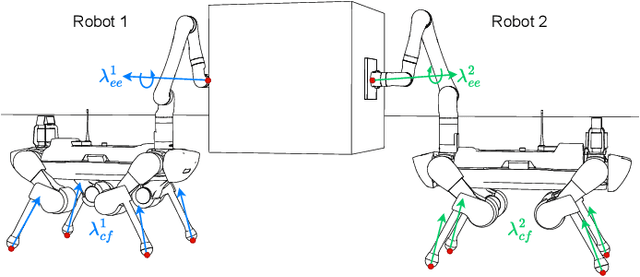

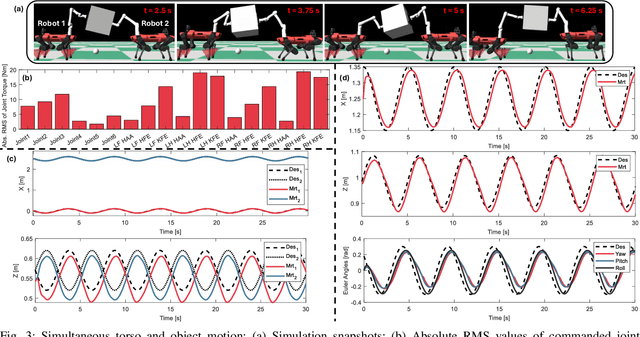

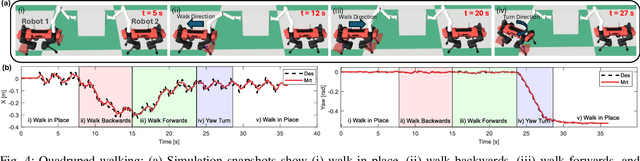

Abstract: Utilizing teams of multiple robots is advantageous for handling bulky objects. Many related works focus on multi-manipulator systems, which are limited by workspace constraints. In this paper, we extend a classical hybrid motion-force controller to a team of legged manipulator systems, enabling collaborative loco-manipulation of rigid objects with a force-closed grasp. Our novel approach allows the robots to flexibly coordinate their movements, achieving efficient and stable object co-manipulation and transport, validated through extensive simulations and real-world experiments.

Whole-Body Control Framework for Humanoid Robots with Heavy Limbs: A Model-Based Approach

Jun 17, 2025

Abstract: Humanoid robots often face significant balance issues due to the motion of their heavy limbs. These challenges are particularly pronounced when attempting dynamic motion or operating in environments with irregular terrain. To address this challenge, this manuscript proposes a whole-body control framework for humanoid robots with heavy limbs, using a model-based approach that combines a kino-dynamics planner and a hierarchical optimization problem. The kino-dynamics planner is designed as a model predictive control (MPC) scheme to account for the impact of heavy limbs on mass and inertia distribution. By simplifying the robot's system dynamics and constraints, the planner enables real-time planning of motion and contact forces. The hierarchical optimization problem is formulated using Hierarchical Quadratic Programming (HQP) to minimize limb control errors and ensure compliance with the policy generated by the kino-dynamics planner. Experimental validation of the proposed framework demonstrates its effectiveness. The humanoid robot with heavy limbs controlled by the proposed framework can achieve dynamic walking speeds of up to 1.2 m/s, respond to external disturbances of up to 60 N, and maintain balance on challenging terrains such as uneven surfaces and outdoor environments.

MEDMKG: Benchmarking Medical Knowledge Exploitation with Multimodal Knowledge Graph

May 22, 2025

Abstract: Medical deep learning models depend heavily on domain-specific knowledge to perform well on knowledge-intensive clinical tasks. Prior work has primarily leveraged unimodal knowledge graphs, such as the Unified Medical Language System (UMLS), to enhance model performance. However, integrating multimodal medical knowledge graphs remains largely underexplored, mainly due to the lack of resources linking imaging data with clinical concepts. To address this gap, we propose MEDMKG, a Medical Multimodal Knowledge Graph that unifies visual and textual medical information through a multi-stage construction pipeline. MEDMKG fuses the rich multimodal data from MIMIC-CXR with the structured clinical knowledge from UMLS, utilizing both rule-based tools and large language models for accurate concept extraction and relationship modeling. To ensure graph quality and compactness, we introduce Neighbor-aware Filtering (NaF), a novel filtering algorithm tailored for multimodal knowledge graphs. We evaluate MEDMKG across three tasks under two experimental settings, benchmarking twenty-four baseline methods and four state-of-the-art vision-language backbones on six datasets. Results show that MEDMKG not only improves performance in downstream medical tasks but also offers a strong foundation for developing adaptive and robust strategies for multimodal knowledge integration in medical artificial intelligence.

Online Omnidirectional Jumping Trajectory Planning for Quadrupedal Robots on Uneven Terrains

Nov 07, 2024

Abstract: Natural terrain complexity often necessitates agile movements like jumping in animals to improve traversal efficiency. To enable similar capabilities in quadruped robots, complex real-time jumping maneuvers are required. Current research does not adequately address the problem of online omnidirectional jumping and neglects the robot's kinodynamic constraints during trajectory generation. This paper proposes a general and complete cascade online optimization framework for omnidirectional jumping for quadruped robots. Our solution systematically encompasses jumping trajectory generation, a trajectory tracking controller, and a landing controller. It also incorporates environmental perception to navigate obstacles that standard locomotion cannot bypass, such as jumping from high platforms. We introduce a novel jumping plane to parameterize omnidirectional jumping motion and formulate a tightly coupled optimization problem accounting for the kinodynamic constraints, simultaneously optimizing CoM trajectory, Ground Reaction Forces (GRFs), and joint states. To meet the online requirements, we propose an accelerated evolutionary algorithm as the trajectory optimizer to address the complexity of kinodynamic constraints. To ensure stability and accuracy in environmental perception post-landing, we introduce a coarse-to-fine relocalization method that combines global Branch and Bound (BnB) search with Maximum a Posteriori (MAP) estimation for precise positioning during navigation and jumping. The proposed framework achieves jump trajectory generation in approximately 0.1 seconds with a warm start and has been successfully validated on two quadruped robots on uneven terrains. Additionally, we extend the framework's versatility to humanoid robots.

A Fast Online Omnidirectional Quadrupedal Jumping Framework Via Virtual-Model Control and Minimum Jerk Trajectory Generation

Jun 30, 2024

Abstract: Exploring the limits of quadruped robot agility, particularly in the context of rapid and real-time planning and execution of omnidirectional jump trajectories, presents significant challenges due to the complex dynamics involved, especially when considering significant impulse contacts. This paper introduces a new framework to enable fast, omnidirectional jumping capabilities for quadruped robots. Utilizing minimum jerk technology, the proposed framework efficiently generates jump trajectories that exploit its analytical solutions, ensuring numerical stability and dynamic compatibility with minimal computational resources. Virtual model control is employed to formulate a Quadratic Programming (QP) optimization problem to accurately track the Center of Mass (CoM) trajectories during the jump phase. Whole-body control strategies facilitate precise and compliant landing motion. Moreover, the different jumping phases are triggered by a time schedule. The framework's efficacy is demonstrated through its implementation on an enhanced version of the open-source Mini Cheetah robot. Omnidirectional jumps (forward, backward, and in other directions) were successfully executed, showcasing the robot's capability to perform rapid and consecutive jumps with an average trajectory generation and tracking solution time of merely 50 microseconds.
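As a rough illustration of why a minimum-jerk formulation is cheap to evaluate online, the sketch below uses the textbook closed-form quintic for a single-axis, rest-to-rest move. It is not the paper's trajectory generator: the function name `min_jerk_state` and the zero boundary velocities and accelerations are assumptions made for this example only.

```python
def min_jerk_state(x0, xf, duration, t):
    """Closed-form rest-to-rest minimum-jerk profile along one axis.

    Assumes zero velocity and acceleration at both ends (an assumption of
    this sketch, not a detail taken from the abstract).
    Returns (position, velocity, acceleration) at time t.
    """
    # Normalized time, clamped to [0, 1] so queries outside the move are safe.
    s = min(max(t / duration, 0.0), 1.0)
    d = xf - x0
    pos = x0 + d * (10 * s**3 - 15 * s**4 + 6 * s**5)
    vel = d * (30 * s**2 - 60 * s**3 + 30 * s**4) / duration
    acc = d * (60 * s - 180 * s**2 + 120 * s**3) / duration**2
    return pos, vel, acc


# Example: sample a 0.3 m CoM height change over 0.5 s at 11 evenly spaced times.
samples = [min_jerk_state(0.0, 0.3, 0.5, 0.05 * k) for k in range(11)]
```

Because each sample is a handful of polynomial evaluations with no iterative solve, this kind of profile costs very little per query, which is consistent with the low computational cost the abstract emphasizes.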

Evolutionary-Based Online Motion Planning Framework for Quadruped Robot Jumping

Sep 14, 2023

Abstract: Offline evolutionary-based methodologies have supplied a successful motion planning framework for the quadrupedal jump. However, the time-consuming computation caused by massive population evolution in the offline evolutionary-based jumping framework significantly limits its adoption in the quadrupedal field. This paper presents a time-friendly online motion planning framework based on meta-heuristic Differential evolution (DE), Latin hypercube sampling, and Configuration space (DLC). The DLC framework establishes a multidimensional optimization problem leveraging centroidal dynamics to determine the ideal trajectory of the center of mass (CoM) and ground reaction forces (GRFs). The configuration space is introduced to the evolutionary optimization in order to condense the search region. Latin hypercube sampling offers more uniform initial populations of DE under limited sampling points, accelerating the escape from local minima. This research also constructs a collection of pre-motion trajectories as a warm start when the objective state is in the neighborhood of the pre-motion state to drastically reduce the solving time. The proposed methodology is successfully validated via real robot experiments for online jumping trajectory optimization with different jumping motions (e.g., ordinary jumping, flipping, and spinning).
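To make the sampling idea concrete, here is a minimal sketch of generic Latin hypercube sampling used to seed an initial population. The abstract does not give the paper's exact sampling scheme, so the function name `latin_hypercube`, the bounds, and the population size are hypothetical choices for illustration.

```python
import random


def latin_hypercube(n_samples, bounds, seed=None):
    """Generic Latin hypercube sample (illustrative, not the paper's code).

    Each dimension is split into n_samples equal strata; exactly one point
    falls in every stratum, and strata are paired randomly across dimensions.
    bounds is a list of (low, high) tuples, one per dimension.
    """
    rng = random.Random(seed)
    columns = []
    for low, high in bounds:
        # One uniformly placed point inside each stratum of the unit interval.
        unit = [(i + rng.random()) / n_samples for i in range(n_samples)]
        rng.shuffle(unit)  # decouple the stratum order between dimensions
        columns.append([low + u * (high - low) for u in unit])
    # Transpose the per-dimension columns into a list of sample points.
    return [list(point) for point in zip(*columns)]


# Example: 8 candidate individuals over two hypothetical decision variables.
population = latin_hypercube(8, [(0.0, 1.0), (-2.0, 2.0)], seed=0)
```

Compared with drawing each individual independently at random, every stratum of every variable is covered exactly once, which is the "more uniform initial populations under limited sampling points" property the abstract refers to.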

Knowledge is Power: Understanding Causality Makes Legal judgment Prediction Models More Generalizable and Robust

Nov 06, 2022

Abstract: Legal judgment Prediction (LJP), aiming to predict a judgment based on fact descriptions, serves as legal assistance to mitigate the great work burden of limited legal practitioners. Most existing methods apply various large-scale pre-trained language models (PLMs) finetuned in LJP tasks to obtain consistent improvements. However, we find that the state-of-the-art (SOTA) model makes judgment predictions according to wrong (or non-causal) information, which not only weakens the model's generalization capability but also results in severe social problems like discrimination. Here, we analyze the causal mechanism misleading the LJP model to learn the spurious correlations, and then propose a framework to guide the model to learn the underlying causality knowledge in the legal texts. Specifically, we first perform open information extraction (OIE) to refine the text having a high proportion of causal information, according to which we generate a new set of data. Then, we design a model learning the weights of the refined data and the raw data for LJP model training. The extensive experimental results show that our model is more generalizable and robust than the baselines and achieves a new SOTA performance on two commonly used legal-specific datasets.

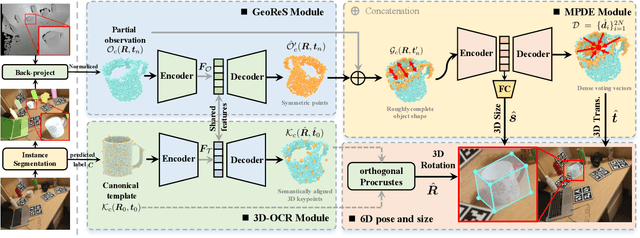

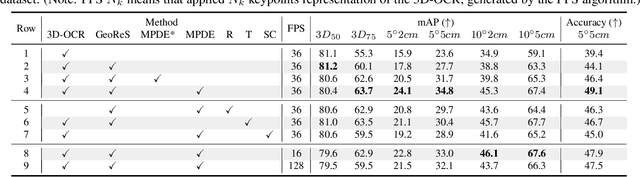

DONet: Learning Category-Level 6D Object Pose and Size Estimation from Depth Observation

Jun 27, 2021

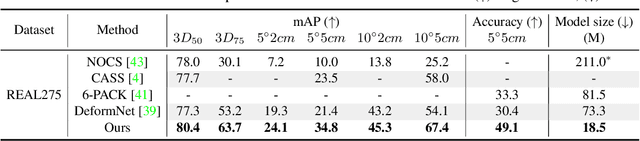

Abstract: We propose a method of Category-level 6D Object Pose and Size Estimation (COPSE) from a single depth image, without external pose-annotated real-world training data. While previous works exploit visual cues in RGB(D) images, our method makes inferences based on the rich geometric information of the object in the depth channel alone. Essentially, our framework explores such geometric information by learning the unified 3D Orientation-Consistent Representations (3D-OCR) module, which is further enforced by the Geometry-constrained Reflection Symmetry (GeoReS) module. The magnitude information of object size and the center point is finally estimated by the Mirror-Paired Dimensional Estimation (MPDE) module. Extensive experiments on the category-level NOCS benchmark demonstrate that our framework competes with state-of-the-art approaches that require labeled real-world images. We also deploy our approach to a physical Baxter robot to perform manipulation tasks on unseen but category-known instances, and the results further validate the efficacy of our proposed model. Our videos are available in the supplementary material.

Blind Motion Deblurring with Cycle Generative Adversarial Networks

Jan 08, 2019

Abstract: Blind motion deblurring is one of the most basic and challenging problems in image processing and computer vision. It aims to recover a sharp image from its blurred version knowing nothing about the blur process. Many existing methods use Maximum A Posteriori (MAP) or Expectation Maximization (EM) frameworks to deal with this kind of problem, but they cannot handle the high-frequency features of natural images well. Most recently, deep neural networks have been emerging as a powerful tool for image deblurring. In this paper, we prove that encoder-decoder architecture gives better results for image deblurring tasks. In addition, we propose a novel end-to-end learning model which refines the generative adversarial network with many novel training strategies so as to tackle the problem of deblurring. Experimental results show that our model can capture high-frequency features well, and the results on a benchmark dataset show that the proposed model achieves competitive performance.
