Jinhu Dong

Online Omnidirectional Jumping Trajectory Planning for Quadrupedal Robots on Uneven Terrains

Nov 07, 2024

Abstract: Natural terrain complexity often necessitates agile movements like jumping in animals to improve traversal efficiency. To enable similar capabilities in quadruped robots, complex real-time jumping maneuvers are required. Current research does not adequately address the problem of online omnidirectional jumping and neglects the robot's kinodynamic constraints during trajectory generation. This paper proposes a general and complete cascade online optimization framework for omnidirectional jumping for quadruped robots. Our solution systematically encompasses jumping trajectory generation, a trajectory tracking controller, and a landing controller. It also incorporates environmental perception to navigate obstacles that standard locomotion cannot bypass, such as jumping from high platforms. We introduce a novel jumping plane to parameterize omnidirectional jumping motion and formulate a tightly coupled optimization problem accounting for the kinodynamic constraints, simultaneously optimizing CoM trajectory, Ground Reaction Forces (GRFs), and joint states. To meet the online requirements, we propose an accelerated evolutionary algorithm as the trajectory optimizer to address the complexity of kinodynamic constraints. To ensure stability and accuracy in environmental perception post-landing, we introduce a coarse-to-fine relocalization method that combines global Branch and Bound (BnB) search with Maximum a Posteriori (MAP) estimation for precise positioning during navigation and jumping. The proposed framework achieves jump trajectory generation in approximately 0.1 seconds with a warm start and has been successfully validated on two quadruped robots on uneven terrains. Additionally, we extend the framework's versatility to humanoid robots.

A Fast Online Omnidirectional Quadrupedal Jumping Framework Via Virtual-Model Control and Minimum Jerk Trajectory Generation

Jun 30, 2024

Abstract: Exploring the limits of quadruped robot agility, particularly the rapid, real-time planning and execution of omnidirectional jump trajectories, presents significant challenges because of the complex dynamics involved, especially when large impulsive contacts are considered. This paper introduces a new framework to enable fast, omnidirectional jumping capabilities for quadruped robots. Using minimum-jerk trajectory generation, the proposed framework efficiently generates jump trajectories from analytical solutions, ensuring numerical stability and dynamic compatibility with minimal computational resources. Virtual model control is employed to formulate a Quadratic Programming (QP) optimization problem that accurately tracks the Center of Mass (CoM) trajectories during the jump phase. Whole-body control strategies facilitate precise and compliant landing motion. The different jumping phases are triggered by a time schedule. The framework's efficacy is demonstrated through its implementation on an enhanced version of the open-source Mini Cheetah robot. Omnidirectional jumps, including forward, backward, and other directions, were successfully executed, showcasing the robot's capability to perform rapid and consecutive jumps with an average trajectory generation and tracking solution time of merely 50 microseconds.
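To illustrate why a minimum-jerk trajectory can be evaluated so cheaply, the sketch below uses the well-known closed-form quintic for a rest-to-rest motion along one axis. It is not the paper's implementation: the function name `min_jerk` and its parameters are hypothetical, and the zero velocity and acceleration at both ends are a simplifying assumption (a real takeoff phase would need a nonzero final velocity, which requires the general six-coefficient quintic).

```python
def min_jerk(p0, pf, duration, t):
    """Evaluate a rest-to-rest minimum-jerk trajectory at time t.

    Assumes zero velocity and acceleration at both endpoints (an
    illustrative simplification). Returns (position, velocity, acceleration).
    """
    # Normalized time, clamped to [0, 1] so queries outside the
    # motion window return the boundary state.
    tau = min(max(t / duration, 0.0), 1.0)
    delta = pf - p0

    # Closed-form quintic blend and its first two time derivatives.
    pos = p0 + delta * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
    vel = delta / duration * (30 * tau**2 - 60 * tau**3 + 30 * tau**4)
    acc = delta / duration**2 * (60 * tau - 180 * tau**2 + 120 * tau**3)
    return pos, vel, acc


if __name__ == "__main__":
    # Sample a hypothetical 0.3 m CoM height change over 0.4 s.
    for step in range(5):
        t = 0.1 * step
        print(t, min_jerk(0.0, 0.3, 0.4, t))
```

Because each sample costs only a handful of multiplications, with no iterative solver involved, evaluation on the order of microseconds per trajectory point is plausible on ordinary hardware.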

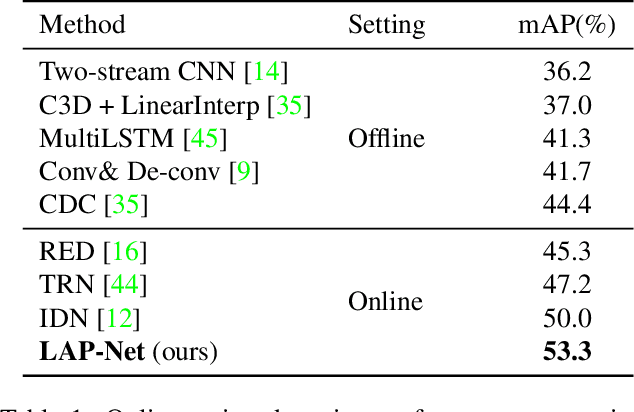

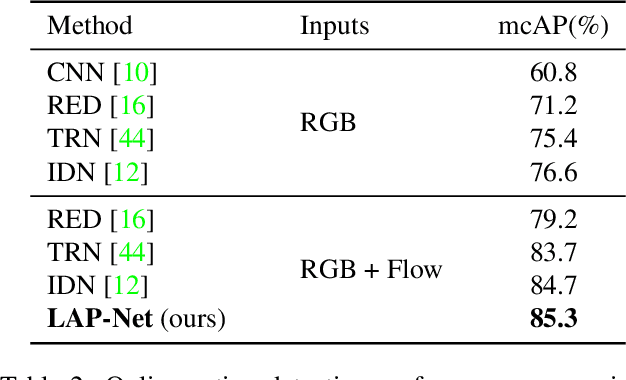

LAP-Net: Adaptive Features Sampling via Learning Action Progression for Online Action Detection

Nov 16, 2020

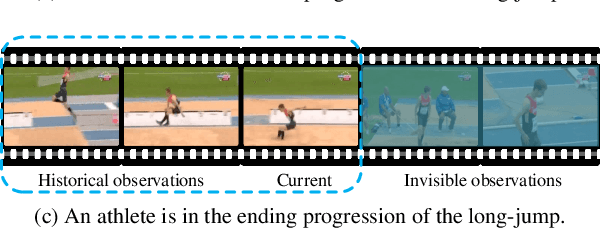

Abstract: Online action detection is the task of identifying ongoing actions from streaming videos without any side information or access to future frames. Recent methods aggregate representations of unseen but anticipated future frames over fixed temporal ranges as supplementary features and have achieved promising performance. They are based on the observation that human beings often detect ongoing actions by simultaneously anticipating what comes next. However, we observed that at different action progressions, the optimal supplementary features should be obtained from distinct temporal ranges rather than from fixed future temporal ranges. To this end, we introduce an adaptive feature sampling strategy to handle the variable ranges of optimal supplementary features. Specifically, we propose a novel Learning Action Progression Network, termed LAP-Net, which integrates this adaptive feature sampling strategy. At each time step, the sampling strategy first estimates the current action progression and then decides which temporal ranges should be used to aggregate the optimal supplementary features. We evaluated LAP-Net on three benchmark datasets: TVSeries, THUMOS-14, and HDD. Extensive experiments demonstrate that, with our adaptive feature sampling strategy, LAP-Net outperforms current state-of-the-art methods by a large margin.

Neuromorphic Visual Odometry System for Intelligent Vehicle Application with Bio-inspired Vision Sensor

Sep 05, 2019

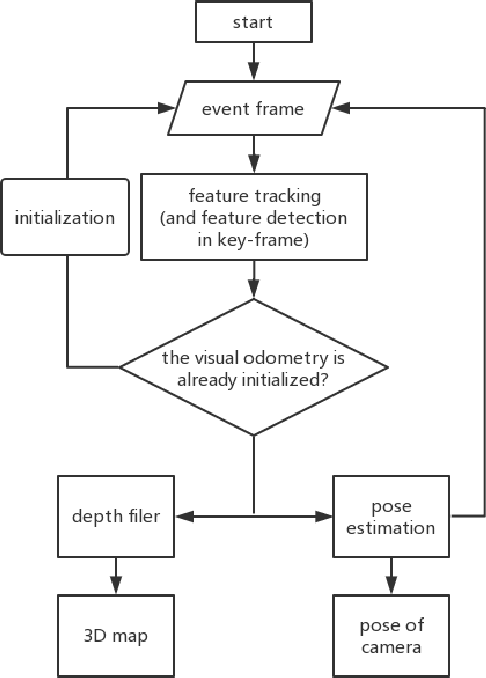

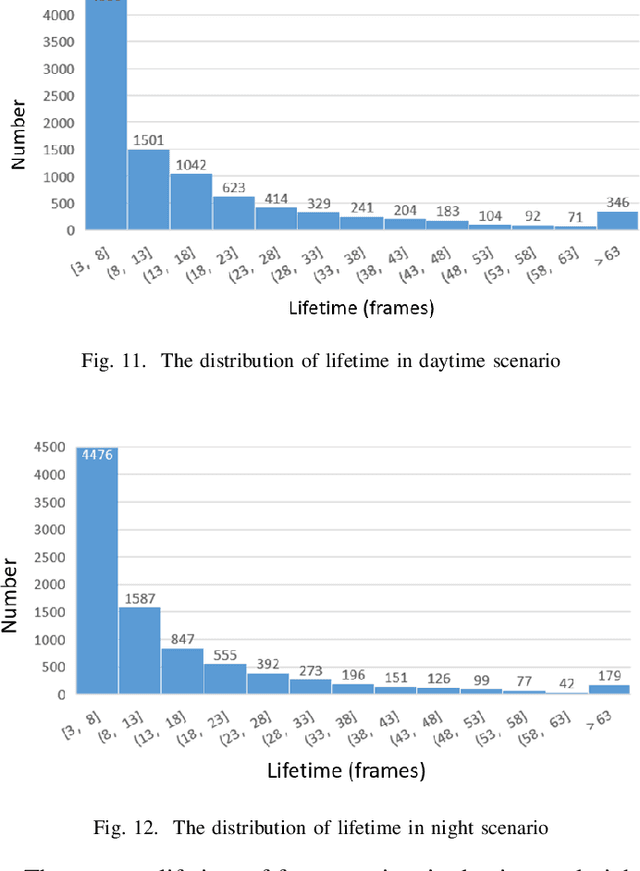

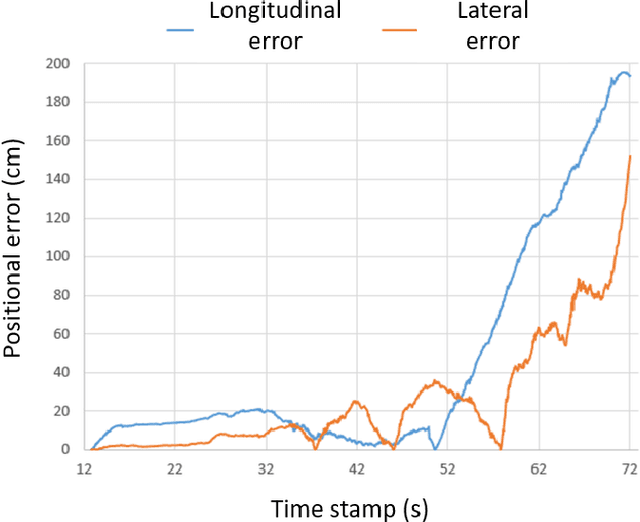

Abstract: The neuromorphic camera is a new type of vision sensor that has emerged in recent years. In contrast to the conventional frame-based camera, the neuromorphic camera transmits only local pixel-level changes at the time they occur and provides an asynchronous event stream with low latency. Its advantages include extremely low signal delay, low transmission bandwidth requirements, rich edge information, and high dynamic range, which make it a promising sensor for in-vehicle visual odometry systems. This paper proposes a neuromorphic in-vehicle visual odometry system using a feature tracking algorithm. To the best of our knowledge, this is the first in-vehicle visual odometry system that uses only a neuromorphic camera, and its performance is tested on actual driving datasets. In addition, an in-depth analysis of the experimental results is provided. This work verifies the feasibility of in-vehicle visual odometry systems using neuromorphic cameras.
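The idea of transmitting only pixel-level changes can be sketched with a commonly used idealized event model: a pixel emits an event when its log intensity changes by more than a contrast threshold. The code below is an illustration only and is not taken from the paper. The function `generate_events`, the `threshold` value, and the `(x, y, timestamp, polarity)` tuple layout are assumptions, and comparing two full frames is a simplification, since a real neuromorphic sensor fires each pixel asynchronously rather than frame by frame.

```python
import math


def generate_events(prev_frame, curr_frame, timestamp, threshold=0.2):
    """Approximate an event stream from two intensity frames.

    Frames are lists of rows of non-negative intensities. An event
    (x, y, timestamp, polarity) is emitted for each pixel whose log
    intensity changed by at least `threshold` (hypothetical value).
    """
    events = []
    for y, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame)):
        for x, (prev_val, curr_val) in enumerate(zip(prev_row, curr_row)):
            # Adding 1.0 keeps the logarithm defined for zero intensity.
            change = math.log(curr_val + 1.0) - math.log(prev_val + 1.0)
            if abs(change) >= threshold:
                polarity = 1 if change > 0 else -1
                events.append((x, y, timestamp, polarity))
    return events


if __name__ == "__main__":
    previous = [[10, 10], [10, 10]]
    current = [[10, 50], [2, 10]]
    # Only the two pixels that changed produce events.
    print(generate_events(previous, current, timestamp=0.5))
```

Unchanged pixels produce no output at all, which is the source of the low bandwidth requirement, and events cluster where brightness changes, typically at moving edges.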
