Canbo Ye

Neuromorphic Visual Odometry System for Intelligent Vehicle Application with Bio-inspired Vision Sensor

Sep 05, 2019

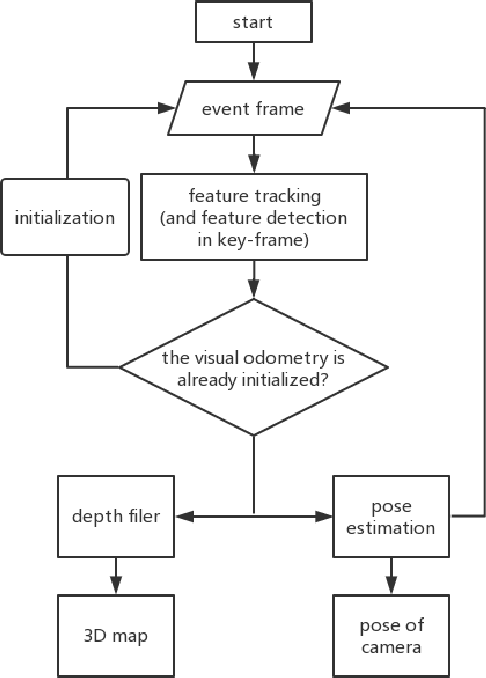

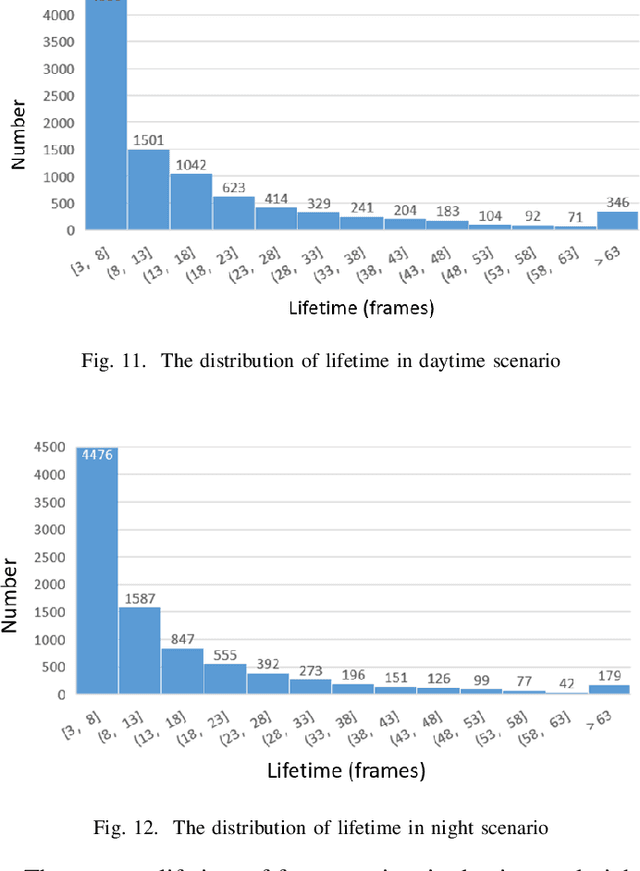

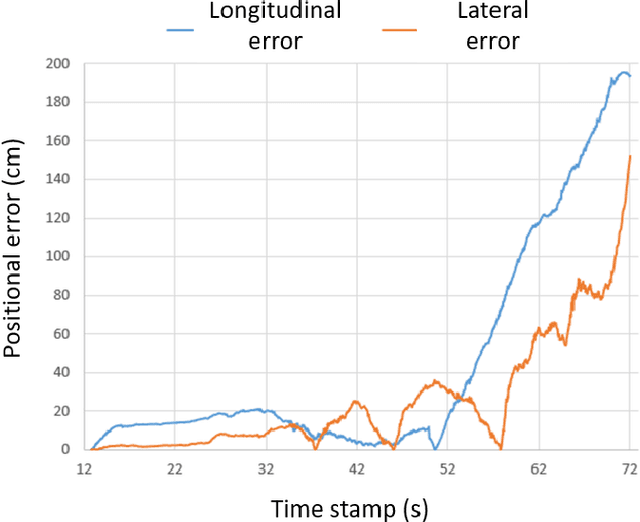

Abstract: The neuromorphic camera is a new type of vision sensor that has emerged in recent years. In contrast to the conventional frame-based camera, the neuromorphic camera transmits only local pixel-level changes at the time they occur and provides an asynchronous event stream with low latency. Its advantages include extremely low signal delay, low transmission bandwidth requirements, rich edge information, and high dynamic range, which make it a promising sensor for in-vehicle visual odometry systems. This paper proposes a neuromorphic in-vehicle visual odometry system using a feature tracking algorithm. To the best of our knowledge, this is the first in-vehicle visual odometry system that uses only a neuromorphic camera, and its performance is tested on actual driving datasets. In addition, an in-depth analysis of the experimental results is provided. This work verifies the feasibility of in-vehicle visual odometry systems using neuromorphic cameras.
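To illustrate what "transmits only local pixel-level changes" means in practice, the following is a minimal sketch of the commonly used idealized event-generation model, in which a pixel fires an event when its log intensity changes by at least a contrast threshold. It is not taken from the paper: the function name `generate_events`, the `threshold` value, and the frame-based input are assumptions made for illustration, and a real sensor operates asynchronously per pixel rather than on frames.

```python
import math


def generate_events(ref_log, frame, t, threshold=0.2):
    """Emit (t, x, y, polarity) events for pixels whose log intensity changed.

    ref_log   -- 2D list of reference log intensities (updated in place)
    frame     -- 2D list of strictly positive intensities sampled at time t
    threshold -- hypothetical contrast threshold on the log-intensity change
    """
    events = []
    for y, row in enumerate(frame):
        for x, intensity in enumerate(row):
            log_i = math.log(intensity)
            diff = log_i - ref_log[y][x]
            if abs(diff) >= threshold:
                polarity = 1 if diff > 0 else -1
                events.append((t, x, y, polarity))
                # Simplification: emit one event and reset the reference,
                # even if the change spans several thresholds.
                ref_log[y][x] = log_i
    return events
```

Pixels whose brightness does not change produce no output at all, which is where the low bandwidth requirement comes from.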

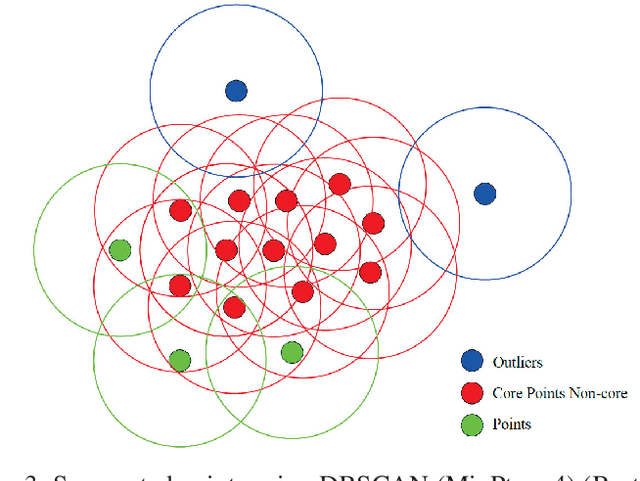

An Efficient L-Shape Fitting Method for Vehicle Pose Detection with 2D LiDAR

Dec 23, 2018

Abstract: Detecting vehicles with strong robustness and high efficiency has become one of the key capabilities of fully autonomous driving cars. This topic has already been widely studied with GPU-accelerated deep learning approaches using image sensors and 3D LiDAR; however, few studies seek to address it with a horizontally mounted 2D laser scanner. A 2D laser scanner is equipped on almost every autonomous vehicle because of its advantages in field of view, lighting invariance, high accuracy, and relatively low price. In this paper, we propose a highly efficient search-based L-Shape fitting algorithm for detecting the positions and orientations of vehicles with a 2D laser scanner. Unlike approaches that formulate L-Shape fitting as a complex optimization problem, our method decomposes L-Shape fitting into two steps: L-Shape vertex searching and L-Shape corner localization. Our approach is computationally efficient due to its minimized complexity. In on-road experiments, our approach is capable of adapting to various circumstances with high efficiency and robustness.
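The abstract does not spell out the two steps, so the following is only a rough sketch of one possible search-based reading, not the authors' algorithm. It assumes the two end points of an angle-ordered scan cluster serve as the L-Shape vertices, and that the corner is the cluster point farthest from the line joining them. The function name `fit_l_shape` and the choice of taking the heading along the longer edge are illustrative assumptions.

```python
import math


def fit_l_shape(points):
    """Estimate an L-Shape corner and heading from an ordered 2D scan cluster.

    points -- list of (x, y) tuples ordered by scan angle (assumed input).
    Returns (corner, heading), with heading in radians along the longer edge.
    """
    a, b = points[0], points[-1]          # step 1: end points as vertices
    dx, dy = b[0] - a[0], b[1] - a[1]
    if dx == 0 and dy == 0:
        raise ValueError("cluster end points coincide")

    # Step 2: the corner is the point farthest from the line a-b.
    # The cross product is proportional to that perpendicular distance.
    def offset(p):
        return abs(dx * (p[1] - a[1]) - dy * (p[0] - a[0]))

    corner = max(points, key=offset)

    # Take the heading along the longer of the two edges meeting at the corner.
    e1 = (a[0] - corner[0], a[1] - corner[1])
    e2 = (b[0] - corner[0], b[1] - corner[1])
    long_edge = e1 if math.hypot(*e1) >= math.hypot(*e2) else e2
    heading = math.atan2(long_edge[1], long_edge[0])
    return corner, heading
```

A single pass over the cluster suffices here, which is the kind of cost profile a search-based method aims for. Note that a heading derived this way is ambiguous by 180° without additional information.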

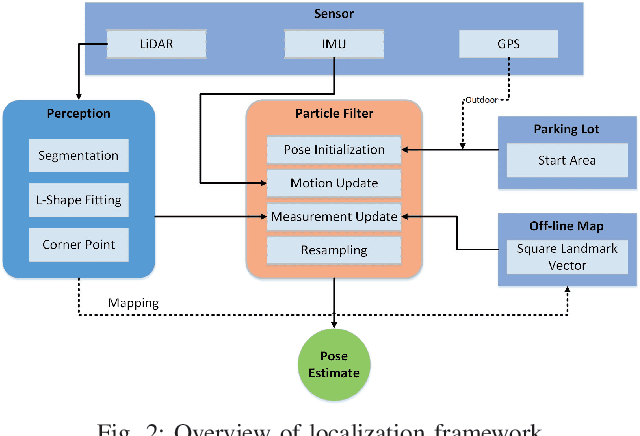

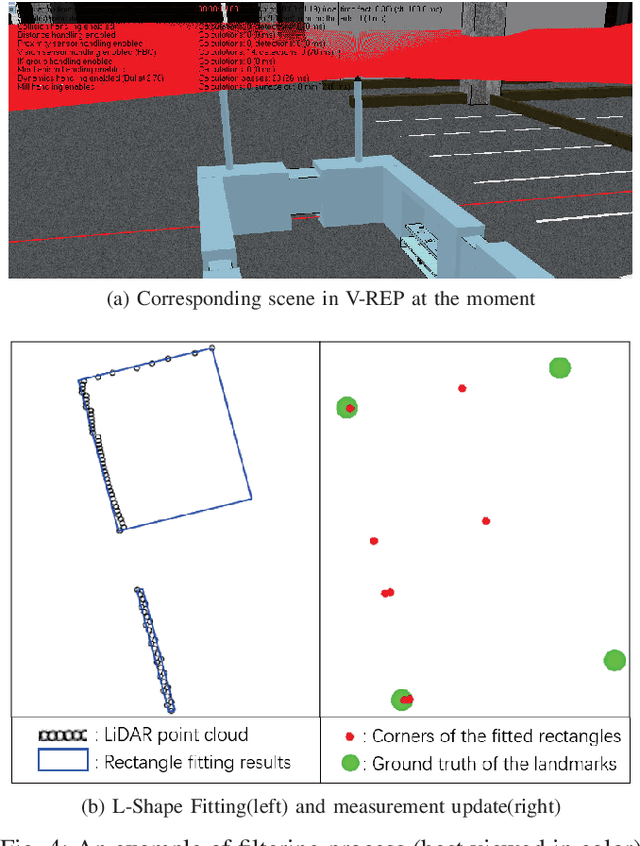

Self-Localization of Parking Robots Using Square-Like Landmarks

Dec 23, 2018

Abstract: In this paper, we present a framework for self-localization of parking robots in a parking lot that makes novel use of square-like landmarks, aiming to provide a positioning solution with low cost but high accuracy. It utilizes square structures common in parking lots, such as pillars, corners, or charging piles, as robust landmarks and deduces the global pose of the robot in conjunction with an off-line map. The localization is performed in real time via a Particle Filter, using a single-line scanning LiDAR as the main sensor and odometry as a secondary information source. The system has been tested in a simulation environment built in V-REP, and the results demonstrate a positioning error below 0.20 m and a corresponding heading error below 1°.
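As a rough illustration of the measurement-update step in landmark-based particle filtering, the sketch below weights each pose hypothesis by how well it explains one range-and-bearing observation of a mapped landmark. It is a generic textbook formulation rather than the paper's implementation: the function names, the Gaussian noise model, and the values of `sigma_r` and `sigma_b` are assumptions.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def update_weights(particles, landmark, z_range, z_bearing,
                   sigma_r=0.1, sigma_b=0.05):
    """Return normalized particle weights for one landmark observation.

    particles -- list of (x, y, theta) pose hypotheses
    landmark  -- (x, y) position of the landmark in the off-line map
    z_range, z_bearing -- measured distance (m) and bearing (rad) to it
    sigma_r, sigma_b   -- hypothetical measurement noise standard deviations
    """
    lx, ly = landmark
    weights = []
    for x, y, theta in particles:
        # Predict what this particle would have measured.
        pred_range = math.hypot(lx - x, ly - y)
        pred_bearing = wrap_angle(math.atan2(ly - y, lx - x) - theta)
        dr = z_range - pred_range
        db = wrap_angle(z_bearing - pred_bearing)
        # Gaussian likelihood, up to a constant factor.
        weights.append(math.exp(-0.5 * ((dr / sigma_r) ** 2
                                        + (db / sigma_b) ** 2)))
    total = sum(weights)
    if total == 0.0:
        # All likelihoods underflowed: fall back to uniform weights.
        return [1.0 / len(particles)] * len(particles)
    return [w / total for w in weights]
```

In a full filter this step would sit between an odometry-driven prediction step and a resampling step, both omitted here.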
