LAP-Net: Adaptive Features Sampling via Learning Action Progression for Online Action Detection


Nov 16, 2020

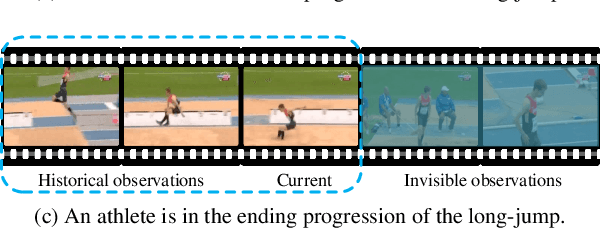

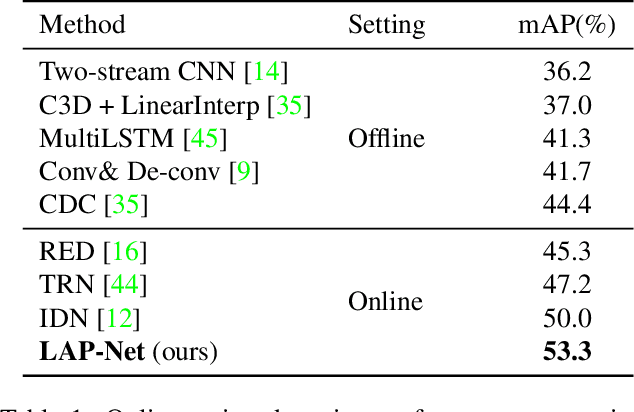

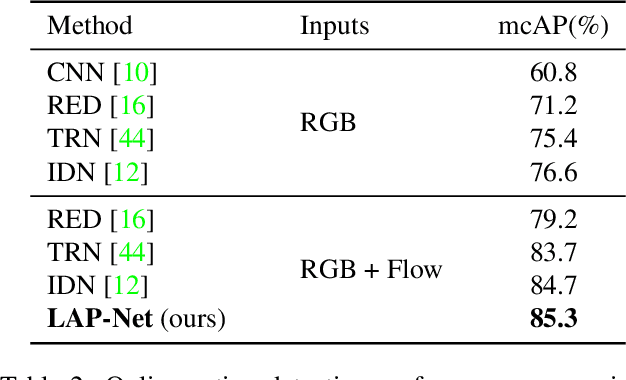

Online action detection aims to identify ongoing actions in streaming video without any side information or access to future frames. Recent methods aggregate representations of unseen but anticipated future frames over fixed temporal ranges as supplementary features, and have achieved promising performance. They build on the observation that human beings often detect an ongoing action while simultaneously anticipating what will happen next. However, we observe that at different stages of action progression, the optimal supplementary features should be drawn from different temporal ranges rather than from a single fixed future range. To address this variation in the optimal range, we propose a novel Learning Action Progression Network, termed LAP-Net, which integrates an adaptive feature sampling strategy. At each time step, this sampling strategy first estimates the current action progression and then decides which temporal ranges should be used to aggregate the optimal supplementary features. We evaluate LAP-Net on three benchmark datasets: TVSeries, THUMOS-14, and HDD. Extensive experiments demonstrate that, with the adaptive feature sampling strategy, LAP-Net outperforms current state-of-the-art methods by a large margin.
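The two-step idea described above (estimate progression, then choose a temporal range to aggregate) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names `select_range` and `aggregate`, the mapping from progression to window length, and the use of mean pooling are all assumptions made for illustration. In LAP-Net the progression estimate and the sampling are learned, whereas here the progression is simply passed in as a number in [0, 1].

```python
import math
from typing import List, Sequence, Tuple


def select_range(progression: float, horizon: int) -> Tuple[int, int]:
    """Map a progression estimate in [0, 1] to a [start, end) window.

    Hypothetical rule (an assumption, not from the paper): early in an
    action we look further ahead, late in an action we look only at the
    nearest anticipated step.
    """
    p = min(max(progression, 0.0), 1.0)           # clamp to [0, 1]
    length = max(1, math.ceil((1.0 - p) * horizon))
    return 0, min(length, horizon)


def aggregate(future_feats: Sequence[Sequence[float]],
              progression: float) -> List[float]:
    """Mean-pool the anticipated future features inside the chosen window."""
    start, end = select_range(progression, len(future_feats))
    window = future_feats[start:end]
    dim = len(window[0])
    return [sum(f[d] for f in window) / len(window) for d in range(dim)]


# Four anticipated future steps, each a 2-dimensional feature (toy values).
future = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0], [7.0, 6.0]]
early = aggregate(future, progression=0.0)   # pools all four steps
late = aggregate(future, progression=1.0)    # pools only the nearest step
```

The point of the sketch is only that the pooled supplementary feature changes with the progression estimate, in contrast to a fixed-range baseline that would always pool the same window.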
