Defeng

David

Score-Informed BiLSTM Correction for Refining MIDI Velocity in Automatic Piano Transcription

Aug 11, 2025

Abstract:MIDI is a modern standard for storing music, recording how musical notes are played. Many piano performances have corresponding MIDI scores available online. Some of these are created by the original performer, recording on an electric piano alongside the audio, while others are through manual transcription. In recent years, automatic music transcription (AMT) has rapidly advanced, enabling machines to transcribe MIDI from audio. However, these transcriptions often require further correction. Assuming a perfect timing correction, we focus on the loudness correction in terms of MIDI velocity (a parameter in MIDI for loudness control). This task can be approached through score-informed MIDI velocity estimation, which has undergone several developments. While previous approaches introduced specifically built models to re-estimate MIDI velocity, thereby replacing AMT estimates, we propose a BiLSTM correction module to refine AMT-estimated velocity. Although we did not reach state-of-the-art performance, we validated our method on the well-known AMT system, the high-resolution piano transcription (HPT), and achieved significant improvements.

Pseudo Strong Labels from Frame-Level Predictions for Weakly Supervised Sound Event Detection

Jan 07, 2025

Abstract:Weakly Supervised Sound Event Detection (WSSED), which relies on audio tags without precise onset and offset times, has become prevalent due to the scarcity of strongly labeled data that includes exact temporal boundaries for events. This study introduces Frame-level Pseudo Strong Labeling (FPSL) to overcome the lack of temporal information in WSSED by generating pseudo strong labels from frame-level predictions. This enhances temporal localization during training and addresses the limitations of clip-wise weak supervision. We validate our approach across three benchmark datasets (DCASE2017 Task 4, DCASE2018 Task 4, and UrbanSED) and demonstrate significant improvements in key metrics such as the Polyphonic Sound Detection Scores (PSDS), event-based F1 scores, and intersection-based F1 scores. For example, Convolutional Recurrent Neural Networks (CRNNs) trained with FPSL outperform baseline models by 4.9% in PSDS1 on DCASE2017, 7.6% on DCASE2018, and 1.8% on UrbanSED, confirming the effectiveness of our method in enhancing model performance.
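The general idea of turning frame-level predictions into pseudo strong labels can be illustrated with a minimal sketch. The abstract does not give the exact FPSL procedure, so the fixed threshold of 0.5, the masking by clip-level tags, and the function name `pseudo_strong_labels` are all assumptions made for illustration only.

```python
def pseudo_strong_labels(frame_probs, weak_tags, threshold=0.5):
    """Derive 0/1 frame-level labels from frame-level probabilities.

    frame_probs: list of T frames, each a list of C class probabilities.
    weak_tags:   list of C clip-level tags (1 = class present in the clip).
    threshold:   assumed decision threshold (hypothetical, not from the paper).

    A frame is marked active for a class only if the clip-level tag says the
    class occurs in the clip AND the frame probability reaches the threshold.
    """
    return [
        [1 if tag == 1 and p >= threshold else 0
         for p, tag in zip(frame, weak_tags)]
        for frame in frame_probs
    ]


# Three frames, two classes; only class 0 is tagged at clip level.
frame_probs = [
    [0.9, 0.2],
    [0.7, 0.8],
    [0.1, 0.6],
]
weak_tags = [1, 0]
labels = pseudo_strong_labels(frame_probs, weak_tags)
```

In this toy example, class 1 never receives an active frame because its clip-level tag is 0, even where its frame probability is high.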

Impact of Noisy Labels on Sound Event Detection: Deletion Errors Are More Detrimental Than Insertion Errors

Aug 27, 2024

Abstract:This study explores the critical but underexamined impact of label noise on Sound Event Detection (SED), which requires both sound identification and precise temporal localization. We categorize label noise into deletion, insertion, substitution, and subjective types and systematically evaluate their effects on SED using synthetic and real-life datasets. Our analysis shows that deletion noise significantly degrades performance, while insertion noise is relatively benign. Moreover, loss functions effective against classification noise do not perform well for SED due to intra-class imbalance between foreground sound events and background sounds. We demonstrate that loss functions designed to address data imbalance in SED can effectively reduce the impact of noisy labels on system performance. For instance, halving the weight of background sounds in a synthetic dataset improved macro-F1 and micro-F1 scores by approximately $9\%$ with minimal Error Rate increase, with consistent results in real-life datasets. This research highlights the nuanced effects of noisy labels on SED systems and provides practical strategies to enhance model robustness, which are pivotal for both constructing new SED datasets and improving model performance, including efficient utilization of soft and crowdsourced labels.
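One way to read "halving the weight of background sounds" is as a frame-level binary cross-entropy in which inactive (background) frames contribute half as much as active ones. The sketch below assumes that reading; the abstract does not specify the loss, so the function name `weighted_bce` and its parameters are hypothetical.

```python
import math


def weighted_bce(probs, targets, background_weight=0.5, eps=1e-7):
    """Mean binary cross-entropy with down-weighted background frames.

    probs:   predicted activity probabilities, one per frame.
    targets: 0/1 frame labels (0 is treated as background, an assumption).
    background_weight: multiplier on the loss of background frames.
    """
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        if y == 1:
            total += -math.log(p)
        else:
            total += -background_weight * math.log(1.0 - p)
    return total / len(probs)


# One active and one background frame, both predicted at 0.5.
plain = weighted_bce([0.5, 0.5], [1, 0], background_weight=1.0)
halved = weighted_bce([0.5, 0.5], [1, 0], background_weight=0.5)
```

With a weight of 1.0 this reduces to ordinary binary cross-entropy; with 0.5, a missed annotation (a deleted event that is wrongly labeled as background) penalizes the model less.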

Unitary Approximate Message Passing for Sparse Bayesian Learning

Jan 25, 2021

Abstract:Sparse Bayesian learning (SBL) can be implemented with low complexity based on the approximate message passing (AMP) algorithm. However, it is vulnerable to 'difficult' measurement matrices, which may cause AMP to diverge. Damped AMP has been used for SBL to alleviate the problem at the cost of reducing convergence speed. In this work, we propose a new SBL algorithm based on structured variational inference, leveraging AMP with a unitary transformation (UAMP). Both single measurement vector and multiple measurement vector problems are investigated. It is shown that, compared to state-of-the-art AMP-based SBL algorithms, the proposed UAMPSBL is more robust and efficient, leading to remarkably better performance.

Approximate Message Passing with Nearest Neighbor Sparsity Pattern Learning

Jan 04, 2016

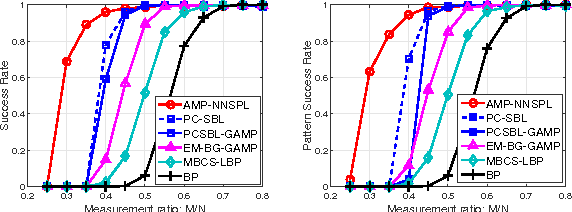

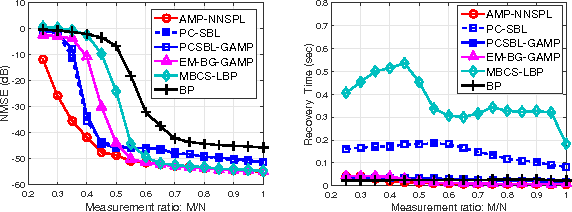

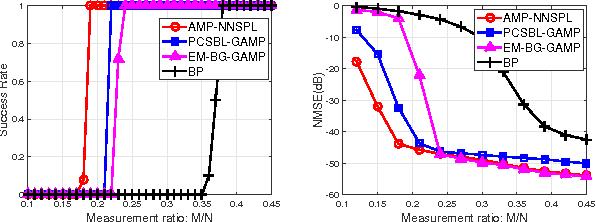

Abstract:We consider the problem of recovering clustered sparse signals with no prior knowledge of the sparsity pattern. Beyond simple sparsity, signals of interest often exhibit an underlying sparsity pattern which, if leveraged, can improve the reconstruction performance. However, the sparsity pattern is usually unknown a priori. Inspired by the idea of the k-nearest neighbor (k-NN) algorithm, we propose an efficient algorithm termed approximate message passing with nearest neighbor sparsity pattern learning (AMP-NNSPL), which learns the sparsity pattern adaptively. AMP-NNSPL specifies a flexible spike and slab prior on the unknown signal and, after each AMP iteration, sets the sparse ratios as the average of the nearest neighbor estimates via expectation maximization (EM). Experimental results on both synthetic and real data demonstrate the superiority of our proposed algorithm both in terms of reconstruction performance and computational complexity.
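The neighbor-averaging step described in the abstract can be sketched for a 1-D signal. This is only an illustration under stated assumptions: the neighborhood is taken to be the immediate left and right coefficients, boundary entries use the single neighbor they have, and the function name `update_sparse_ratios` is hypothetical. The AMP iteration and the EM derivation are not reproduced here.

```python
def update_sparse_ratios(posterior_support):
    """Set each coefficient's sparse ratio to the mean of its neighbors.

    posterior_support: per-coefficient posterior probabilities of being
    nonzero, as produced by some AMP iteration (not implemented here).
    Returns a new list of prior sparse ratios of the same length.
    """
    n = len(posterior_support)
    ratios = []
    for i in range(n):
        # Assumed neighborhood: immediate left and right entries in 1-D.
        neighbors = [posterior_support[j] for j in (i - 1, i + 1) if 0 <= j < n]
        if neighbors:
            ratios.append(sum(neighbors) / len(neighbors))
        else:
            # Single-element signal: no neighbors, keep the own estimate.
            ratios.append(posterior_support[i])
    return ratios


# A cluster of likely-active coefficients followed by inactive ones.
ratios = update_sparse_ratios([0.9, 0.8, 0.1, 0.0])
```

Because each ratio depends only on neighboring estimates, a coefficient surrounded by active neighbors is encouraged to be active, which is how a clustered sparsity pattern can be favored without fixing the pattern in advance.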
