Yoshiyuki Kabashima

Learning Linear Regression with Low-Rank Tasks in-Context

Oct 06, 2025

Abstract:In-context learning (ICL) is a key building block of modern large language models, yet its theoretical mechanisms remain poorly understood. It is particularly mysterious how ICL operates in real-world applications where tasks have a common structure. In this work, we address this problem by analyzing a linear attention model trained on low-rank regression tasks. Within this setting, we precisely characterize the distribution of predictions and the generalization error in the high-dimensional limit. Moreover, we find that statistical fluctuations in finite pre-training data induce an implicit regularization. Finally, we identify a sharp phase transition of the generalization error governed by task structure. These results provide a framework for understanding how transformers learn to learn the task structure.

Improving Decoupled Posterior Sampling for Inverse Problems using Data Consistency Constraint

Dec 01, 2024

Abstract:Diffusion models have shown strong performances in solving inverse problems through posterior sampling while they suffer from errors during earlier steps. To mitigate this issue, several Decoupled Posterior Sampling methods have been recently proposed. However, the reverse process in these methods ignores measurement information, leading to errors that impede effective optimization in subsequent steps. To solve this problem, we propose Guided Decoupled Posterior Sampling (GDPS) by integrating a data consistency constraint in the reverse process. The constraint performs a smoother transition within the optimization process, facilitating a more effective convergence toward the target distribution. Furthermore, we extend our method to latent diffusion models and Tweedie's formula, demonstrating its scalability. We evaluate GDPS on the FFHQ and ImageNet datasets across various linear and nonlinear tasks under both standard and challenging conditions. Experimental results demonstrate that GDPS achieves state-of-the-art performance, improving accuracy over existing methods.

Generating gradients in the energy landscape using rectified linear type cost functions for efficiently solving 0/1 matrix factorization in Simulated Annealing

Dec 27, 2023

Abstract:The 0/1 matrix factorization defines matrix products using logical AND and OR as product-sum operators, revealing the factors influencing various decision processes. Instances and their characteristics are arranged in rows and columns. Formulating matrix factorization as an energy minimization problem and exploring it with Simulated Annealing (SA) theoretically enables finding a minimum solution in sufficient time. However, searching for the optimal solution in practical time becomes problematic when the energy landscape has many plateaus with flat slopes. In this work, we propose a method to facilitate the solution process by applying a gradient to the energy landscape, using a rectified linear type cost function readily available in modern annealing machines. We also propose a method to quickly obtain a solution by updating the cost function's gradient during the search process. Numerical experiments were conducted, confirming the method's effectiveness with both noise-free artificial and real data.
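As a rough illustration of the AND/OR product described in this abstract, the following is a minimal sketch in Python with NumPy. The function names `boolean_product` and `mismatch_energy` are hypothetical, and the energy used here is a plain count of mismatched entries chosen for illustration; it is not the rectified linear type cost function proposed in the paper.

```python
import numpy as np

def boolean_product(U, V):
    """Boolean matrix product: entry (i, j) is OR over k of (U[i, k] AND V[k, j])."""
    # The integer product counts the k for which both factors are 1;
    # any positive count means the OR is true.
    return (U.astype(int) @ V.astype(int) > 0).astype(int)

def mismatch_energy(X, U, V):
    """Illustrative energy (an assumption): number of entries where the product differs from X."""
    return int(np.sum(X != boolean_product(U, V)))

# Small 0/1 factors: 3 instances, 2 latent factors, 3 characteristics.
U = np.array([[1, 0], [1, 1], [0, 1]])
V = np.array([[1, 1, 0], [0, 1, 1]])
X = boolean_product(U, V)

# Flipping one factor entry changes the energy, which is the kind of
# single-bit move a simulated annealing search would accept or reject.
U_flipped = U.copy()
U_flipped[0, 0] = 0
print(mismatch_energy(X, U, V), mismatch_energy(X, U_flipped, V))
```

With an energy of this counting type, many single-bit flips leave the value unchanged, which gives one intuition for the plateaus the abstract mentions.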

Compressed Sensing Radar Detectors based on Weighted LASSO

Jun 30, 2023

Abstract:The compressed sensing (CS) model can represent the signal recovery process of a large number of radar systems. The detection problem of such radar systems has been studied extensively in the literature using the debiased least absolute shrinkage and selection operator (LASSO). While naive LASSO treats all the entries equally, there are many applications in which prior information varies depending on each entry. Weighted LASSO, in which the weights of the regularization terms are tuned depending on the entry-dependent prior, has been shown by many researchers to be more effective when such prior information is available. In the present paper, existing results obtained by methods of statistical mechanics are utilized to derive the debiased weighted LASSO estimator for randomly constructed row-orthogonal measurement matrices. Based on this estimator, we construct a detector, termed the debiased weighted LASSO detector (DWLD), for CS radar systems and prove its advantages. The threshold of this detector can be calculated from the false alarm rate, which yields better detection performance than the naive weighted LASSO detector (NWLD) under the Neyman-Pearson principle. The improvement of the detection performance brought by tuning weights is demonstrated by numerical experiments. With the same false alarm rate, the detection probability of DWLD is clearly higher than those of NWLD and the debiased (non-weighted) LASSO detector (DLD).
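To make the idea of entry-dependent regularization concrete, here is a minimal sketch of a weighted LASSO solver, assuming real-valued data and the objective $\frac{1}{2}\|y - Ax\|_2^2 + \sum_i w_i |x_i|$. It uses plain iterative soft thresholding (ISTA), which is a swapped-in generic solver and not the paper's method; the debiasing step and the detector are not shown. The names `soft_threshold` and `weighted_lasso_ista` are hypothetical.

```python
import numpy as np

def soft_threshold(z, tau):
    """Entry-wise soft thresholding; tau may be a scalar or a per-entry array."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def weighted_lasso_ista(A, y, weights, n_iter=500):
    """Minimize 0.5 * ||y - A x||^2 + sum_i weights[i] * |x[i]| by ISTA (real-valued sketch)."""
    # Step size 1/L, where L is the squared spectral norm of A.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)  # gradient of the quadratic term
        # Each entry gets its own threshold, scaled by its weight.
        x = soft_threshold(x - step * grad, step * weights)
    return x

# With an identity measurement matrix the minimizer is soft_threshold(y, weights),
# so a smaller weight shrinks the corresponding entry less.
A = np.eye(3)
y = np.array([3.0, -0.5, 2.0])
weights = np.array([1.0, 1.0, 0.5])
x_hat = weighted_lasso_ista(A, y, weights)
```

Setting all weights equal recovers the naive LASSO that the abstract contrasts against.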

Average case analysis of Lasso under ultra-sparse conditions

Feb 25, 2023

Abstract:We analyze the performance of the least absolute shrinkage and selection operator (Lasso) for the linear model when the number of regressors $N$ grows large while the true support size $d$ remains finite, i.e., the ultra-sparse case. The result is based on a novel treatment of the non-rigorous replica method in statistical physics, which has been applied only to problem settings where $N$, $d$ and the number of observations $M$ tend to infinity at the same rate. Our analysis makes it possible to assess the average performance of Lasso with Gaussian sensing matrices without assumptions on the scaling of $N$ and $M$, the noise distribution, and the profile of the true signal. Under mild conditions on the noise distribution, the analysis also offers a lower bound on the sample complexity necessary for partial and perfect support recovery when $M$ diverges as $M = O(\log N)$. The obtained bound for perfect support recovery is a generalization of that given in previous literature, which only considers the case of Gaussian noise and diverging $d$. Extensive numerical experiments strongly support our analysis.

QCS-SGM+: Improved Quantized Compressed Sensing With Score-Based Generative Models for General Sensing Matrices

Feb 02, 2023

Abstract:In realistic compressed sensing (CS) scenarios, the obtained measurements usually have to be quantized to a finite number of bits before transmission and/or storage, thus posing a challenge in recovery, especially for extremely coarse quantization such as 1-bit sign measurements. Recently Meng & Kabashima proposed an efficient quantized compressed sensing algorithm called QCS-SGM using the score-based generative models as an implicit prior. Thanks to the power of score-based generative models in capturing the rich structure of the prior, QCS-SGM achieves remarkably better performances than previous quantized CS methods. However, QCS-SGM is restricted to (approximately) row-orthogonal sensing matrices since otherwise the likelihood score becomes intractable. To address this challenging problem, in this paper we propose an improved version of QCS-SGM, which we call QCS-SGM+, which also works well for general matrices. The key idea is a Bayesian inference perspective of the likelihood score computation, whereby an expectation propagation algorithm is proposed to approximately compute the likelihood score. Experiments on a variety of baseline datasets demonstrate that the proposed QCS-SGM+ outperforms QCS-SGM by a large margin when sensing matrices are far from row-orthogonal.

Diffusion Model Based Posterior Sampling for Noisy Linear Inverse Problems

Nov 20, 2022

Abstract:We consider the ubiquitous linear inverse problems with additive Gaussian noise and propose an unsupervised general-purpose sampling approach called diffusion model based posterior sampling (DMPS) to reconstruct the unknown signal from noisy linear measurements. Specifically, the prior of the unknown signal is implicitly modeled by one pre-trained diffusion model (DM). In posterior sampling, to address the intractability of exact noise-perturbed likelihood score, a simple yet effective noise-perturbed pseudo-likelihood score is introduced under the uninformative prior assumption. While DMPS applies to any kind of DM with proper modifications, we focus on the ablated diffusion model (ADM) as one specific example and evaluate its efficacy on a variety of linear inverse problems such as image super-resolution, denoising, deblurring, colorization. Experimental results demonstrate that, for both in-distribution and out-of-distribution samples, DMPS achieves highly competitive or even better performances on various tasks while being 3 times faster than the leading competitor. The code to reproduce the results is available at https://github.com/mengxiangming/dmps.

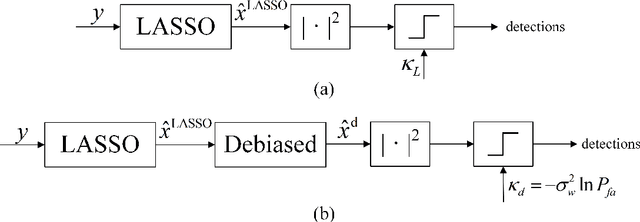

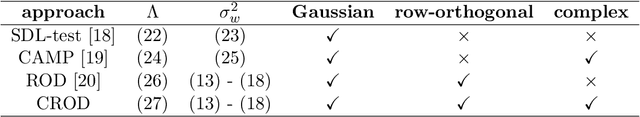

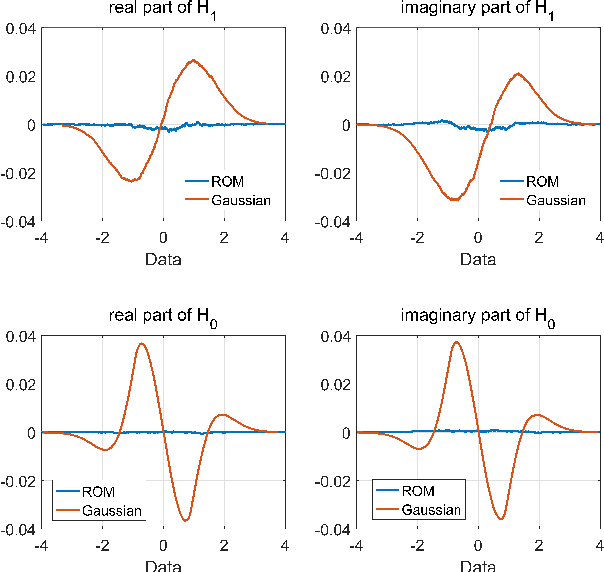

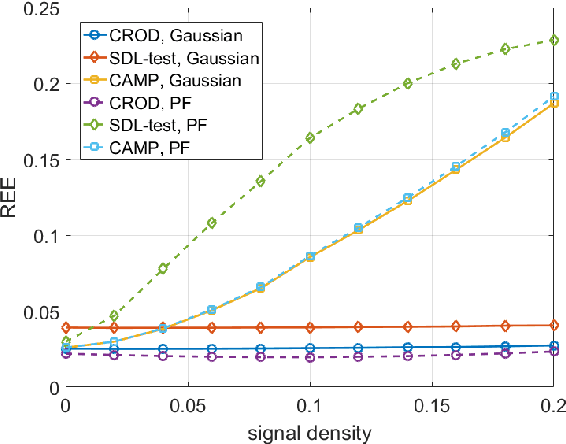

Compressed sensing radar detectors under the row-orthogonal design model: a statistical mechanics perspective

Oct 03, 2022

Abstract:The compressed sensing (CS) model of complex-valued data can represent the signal recovery process of many types of radar systems, especially when the measurement matrix is row-orthogonal. The detection problem under the Gaussian random design model, i.e., where the elements of the measurement matrix are drawn from a Gaussian distribution, has been studied in the literature on the basis of the debiased least absolute shrinkage and selection operator (LASSO). However, we find that these approaches are not suitable for row-orthogonal measurement matrices, which are of more practical relevance. Using statistical mechanics approaches, we derive more accurate test statistics and thresholds (or p-values) under the row-orthogonal design model, and theoretically analyze the detection performance of the present detector. This detector can analytically provide the threshold for a given false alarm rate, which is not possible with the conventional CS detector, and its detection performance is proved to be better than that of the traditional LASSO detector. Compared with other debiased LASSO based detectors, simulation results indicate that the proposed approach achieves a more accurate probability of false alarm when the measurement matrix is row-orthogonal, leading to better detection performance under the Neyman-Pearson principle.

On Model Selection Consistency of Lasso for High-Dimensional Ising Models on Tree-like Graphs

Oct 16, 2021

Abstract:We consider the problem of high-dimensional Ising model selection using neighborhood-based least absolute shrinkage and selection operator (Lasso). It is rigorously proved that under some mild coherence conditions on the population covariance matrix of the Ising model, consistent model selection can be achieved with sample sizes $n=\Omega{(d^3\log{p})}$ for any tree-like graph in the paramagnetic phase, where $p$ is the number of variables and $d$ is the maximum node degree. When the same conditions are imposed directly on the sample covariance matrices, it is shown that a reduced sample size $n=\Omega{(d^2\log{p})}$ suffices. The obtained sufficient conditions for consistent model selection with Lasso are the same in the scaling of the sample complexity as that of $\ell_1$-regularized logistic regression. Given the popularity and efficiency of Lasso, our rigorous analysis provides a theoretical backing for its practical use in Ising model selection.

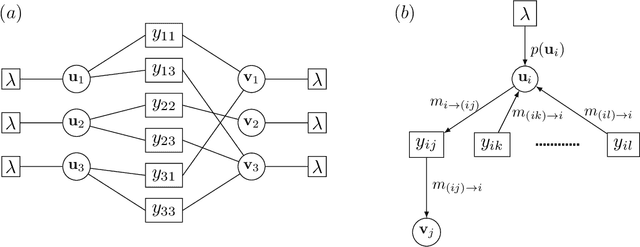

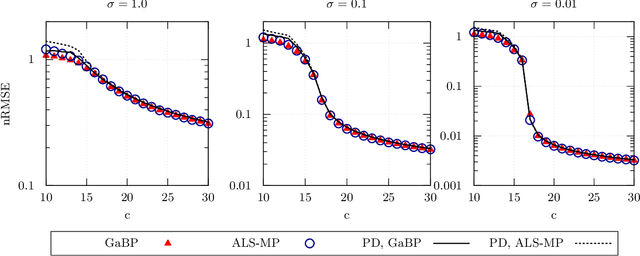

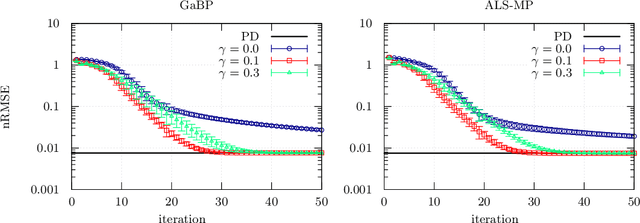

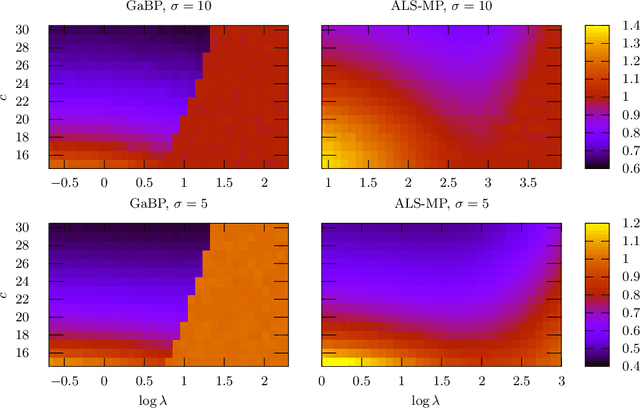

Matrix completion based on Gaussian belief propagation

May 01, 2021

Abstract:We develop a message-passing algorithm for noisy matrix completion problems based on matrix factorization. The algorithm is derived by approximating message distributions of belief propagation with Gaussian distributions that share the same first and second moments. We also derive a memory-friendly version of the proposed algorithm by applying a perturbation treatment commonly used in the literature of approximate message passing. In addition, a damping technique, which is demonstrated to be crucial for optimal performance, is introduced without computational strain, and the relationship to the message-passing version of alternating least squares, a method reported to be optimal in certain settings, is discussed. Experiments on synthetic datasets show that while the proposed algorithm quantitatively exhibits almost the same performance under settings where the earlier algorithm is optimal, it is advantageous when the observed datasets are corrupted by non-Gaussian noise. Experiments on real-world datasets also emphasize the performance differences between the two algorithms.
