Hengtao He

Adaptive Matched Filtering for Sensing With Communication Signals in Cluttered Environments

Dec 09, 2025

Abstract:This paper investigates the performance of adaptive matched filtering (AMF) in cluttered environments, particularly when operating with superimposed signals. Since the instantaneous signal-to-clutter-plus-noise ratio (SCNR) is a random variable dependent on the data payload, using it directly as a design objective poses severe practical challenges, such as prohibitive computational burdens and signaling overhead. To address this, we propose shifting the optimization objective from an instantaneous to a statistical metric, which focuses on maximizing the average SCNR over all possible payloads. Because the average SCNR is analytically intractable, we leverage tools from random matrix theory (RMT) to derive an asymptotic approximation for it, which remains accurate even in moderate-dimensional regimes. A key finding from our theoretical analysis is that, for a fixed modulation basis, PSK achieves a superior average SCNR compared to QAM and the pure Gaussian constellation. Furthermore, for any given constellation, OFDM achieves a higher average SCNR than SC and AFDM. We then propose two pilot design schemes to enhance system performance: a Data-Payload-Dependent (DPD) scheme and a Data-Payload-Independent (DPI) scheme. The DPD approach maximizes the instantaneous SCNR for each transmission. Conversely, the DPI scheme optimizes the average SCNR, offering a flexible trade-off between sensing performance and implementation complexity. We further develop two dedicated optimization algorithms for the DPD and DPI schemes. In particular, for the DPD problem, we employ fractional optimization and the KKT conditions to derive a closed-form solution. For the DPI problem, we adopt a manifold optimization approach to handle the inherent rank-one constraint efficiently. Simulation results validate the accuracy of our theoretical analysis and demonstrate the effectiveness of the proposed methods.

Accurate and Fast Channel Estimation for Fluid Antenna Systems with Diffusion Models

May 08, 2025

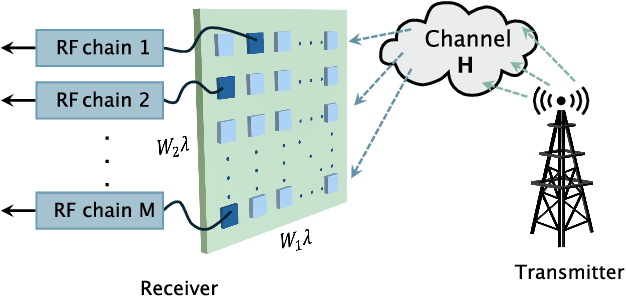

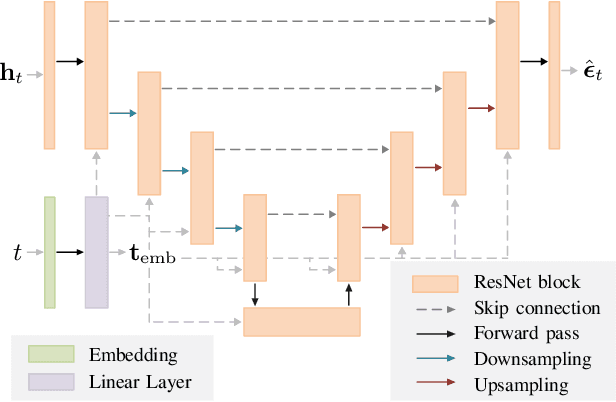

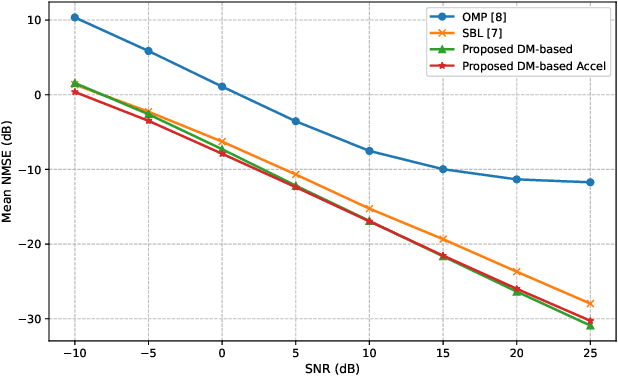

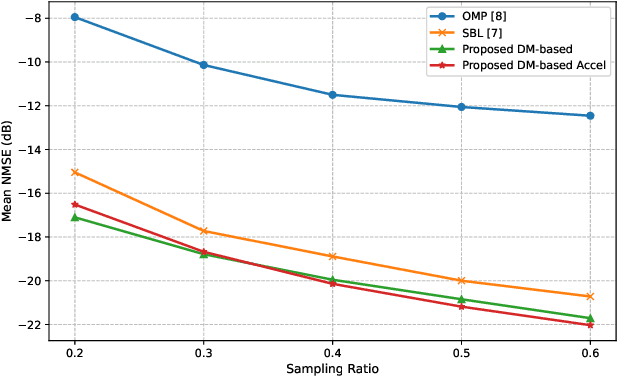

Abstract:Fluid antenna systems (FAS) offer enhanced spatial diversity for next-generation wireless systems. However, acquiring accurate channel state information (CSI) remains challenging due to the large number of reconfigurable ports and the limited availability of radio-frequency (RF) chains -- particularly in high-dimensional FAS scenarios. To address this challenge, we propose an efficient posterior sampling-based channel estimator that leverages a diffusion model (DM) with a simplified U-Net architecture to capture the spatial correlation structure of two-dimensional FAS channels. The DM is initially trained offline in an unsupervised way and then applied online as a learned implicit prior to reconstruct CSI from partial observations via posterior sampling through a denoising diffusion restoration model (DDRM). To accelerate the online inference, we introduce a skipped sampling strategy that updates only a subset of latent variables during the sampling process, thereby reducing the computational cost with minimal accuracy degradation. Simulation results demonstrate that the proposed approach achieves significantly higher estimation accuracy and over 20x speedup compared to state-of-the-art compressed sensing-based methods, highlighting its potential for practical deployment in high-dimensional FAS.

Fluid Antenna-Assisted MU-MIMO Systems with Decentralized Baseband Processing

May 08, 2025

Abstract:The fluid antenna system (FAS) has emerged as a disruptive technology, offering unprecedented degrees of freedom (DoF) for wireless communication systems. However, optimizing fluid antenna (FA) positions entails significant computational costs, especially when the number of FAs is large. To address this challenge, we introduce a decentralized baseband processing (DBP) architecture to FAS, which partitions the FA array into clusters and enables parallel processing. Based on the DBP architecture, we formulate a weighted sum rate (WSR) maximization problem through joint beamforming and FA position design for FA-assisted multiuser multiple-input multiple-output (MU-MIMO) systems. To solve the WSR maximization problem, we propose a novel decentralized block coordinate ascent (BCA)-based algorithm that leverages matrix fractional programming (FP) and majorization-minimization (MM) methods. The proposed decentralized algorithm achieves low computational, communication, and storage costs, thus unleashing the potential of the DBP architecture. Simulation results show that our proposed algorithm under the DBP architecture reduces computational time by over 70% compared to centralized architectures with negligible WSR performance loss.

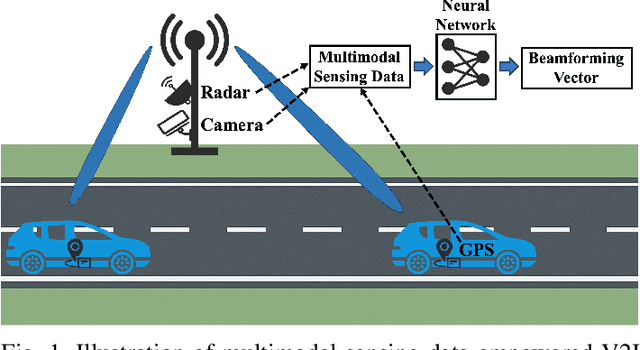

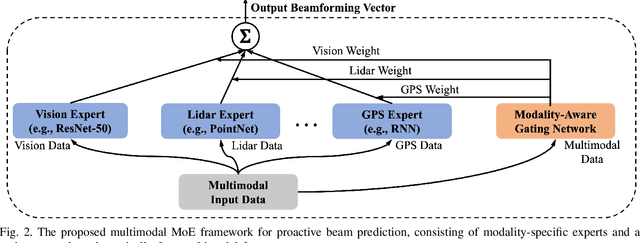

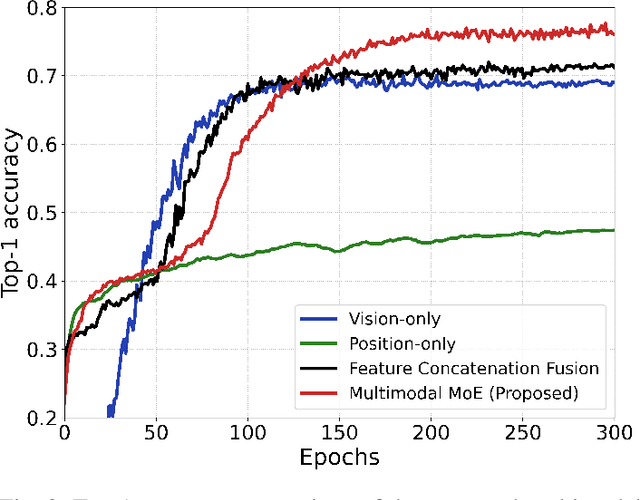

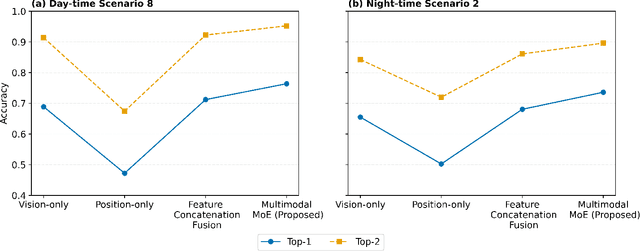

Multimodal Deep Learning-Empowered Beam Prediction in Future THz ISAC Systems

May 05, 2025

Abstract:Integrated sensing and communication (ISAC) systems operating at terahertz (THz) bands are envisioned to enable both ultra-high data-rate communication and precise environmental awareness for next-generation wireless networks. However, the narrow width of THz beams makes them prone to misalignment and necessitates frequent beam prediction in dynamic environments. Multimodal sensing, which integrates complementary modalities such as camera images, positional data, and radar measurements, has recently emerged as a promising solution for proactive beam prediction. Nevertheless, existing multimodal approaches typically employ static fusion architectures that cannot adjust to varying modality reliability and contributions, thereby degrading predictive performance and robustness. To address this challenge, we propose a novel and efficient multimodal mixture-of-experts (MoE) deep learning framework for proactive beam prediction in THz ISAC systems. The proposed multimodal MoE framework employs multiple modality-specific expert networks to extract representative features from individual sensing modalities, and dynamically fuses them using adaptive weights generated by a gating network according to the instantaneous reliability of each modality. Simulation results in realistic vehicle-to-infrastructure (V2I) scenarios demonstrate that the proposed MoE framework outperforms traditional static fusion methods and unimodal baselines in terms of prediction accuracy and adaptability, highlighting its potential in practical THz ISAC systems with ultra-massive multiple-input multiple-output (MIMO).
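The gated fusion step described in the abstract can be illustrated with a minimal sketch. It assumes a softmax gate over per-modality scores and fixed-length expert features; the function names, the example scores, and the feature values are hypothetical and are not taken from the paper.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of gate scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def fuse(expert_features, gate_scores):
    """Weighted sum of per-modality expert features.

    expert_features: one feature vector per modality (same length each).
    gate_scores: one scalar per modality, assumed to come from a gating network.
    """
    weights = softmax(gate_scores)
    dim = len(expert_features[0])
    fused = [
        sum(w * feat[i] for w, feat in zip(weights, expert_features))
        for i in range(dim)
    ]
    return fused, weights

# Hypothetical features from camera, position, and radar experts.
features = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0]]
# Hypothetical gate scores: the radar modality is judged unreliable here.
scores = [1.0, 0.5, -10.0]
fused, weights = fuse(features, scores)
```

Because the weights are recomputed for every input, a modality with a low gate score contributes almost nothing to the fused feature, which is the behavior a static fusion architecture cannot provide.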

Mutual Information-Empowered Task-Oriented Communication: Principles, Applications and Challenges

Mar 26, 2025

Abstract:Mutual information (MI)-based guidelines have recently proven to be effective for designing task-oriented communication systems, where the ultimate goal is to extract and transmit task-relevant information for downstream tasks. This paper provides a comprehensive overview of MI-empowered task-oriented communication, highlighting how MI-based methods can serve as a unifying design framework in various task-oriented communication scenarios. We begin with the roadmap of MI for designing task-oriented communication systems, and then introduce the roles and applications of MI to guide feature encoding, transmission optimization, and efficient training with two case studies. We further elaborate on the limitations and challenges of MI-based methods. Finally, we identify several open issues in MI-based task-oriented communication to inspire future research.

Communication-Efficient Distributed On-Device LLM Inference Over Wireless Networks

Mar 19, 2025

Abstract:Large language models (LLMs) have demonstrated remarkable success across various application domains, but their enormous sizes and computational demands pose significant challenges for deployment on resource-constrained edge devices. To address this issue, we propose a novel distributed on-device LLM inference framework that leverages tensor parallelism to partition the neural network tensors (e.g., weight matrices) of one LLM across multiple edge devices for collaborative inference. A key challenge in tensor parallelism is the frequent all-reduce operations for aggregating intermediate layer outputs across participating devices, which incur significant communication overhead. To alleviate this bottleneck, we propose an over-the-air computation (AirComp) approach that harnesses the analog superposition property of wireless multiple-access channels to perform fast all-reduce steps. To utilize the heterogeneous computational capabilities of edge devices and mitigate communication distortions, we investigate a joint model assignment and transceiver optimization problem to minimize the average transmission error. The resulting mixed-timescale stochastic non-convex optimization problem is intractable, and we propose an efficient two-stage algorithm to solve it. Moreover, we prove that the proposed algorithm converges almost surely to a stationary point of the original problem. Comprehensive simulation results show that the proposed framework outperforms existing benchmark schemes, achieving up to 5x inference speed acceleration and improving inference accuracy.
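To make the all-reduce step concrete, here is a minimal sketch of tensor parallelism for a single linear layer. It assumes the weight matrix is split column-wise across devices and that the channel simply adds the devices' partial outputs, optionally with Gaussian noise. The helper names, the even split, and the noise model are illustrative assumptions; the paper's model assignment and transceiver design are not reproduced here.

```python
import random

def matvec(W, x):
    """Plain matrix-vector product for lists of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def split_columns(W, x, num_devices):
    """Give each device a column block of W and the matching slice of x."""
    n = len(x)
    bounds = [round(k * n / num_devices) for k in range(num_devices + 1)]
    return [([row[lo:hi] for row in W], x[lo:hi])
            for lo, hi in zip(bounds, bounds[1:])]

def over_the_air_sum(partials, noise_std=0.0, seed=0):
    """All-reduce modeled as a superposition (sum) of the partial outputs.

    noise_std is a hypothetical stand-in for channel distortion.
    """
    total = [sum(vals) for vals in zip(*partials)]
    if noise_std == 0.0:
        return total
    rng = random.Random(seed)
    return [t + rng.gauss(0.0, noise_std) for t in total]

rng = random.Random(42)
W = [[rng.uniform(-1, 1) for _ in range(6)] for _ in range(4)]
x = [rng.uniform(-1, 1) for _ in range(6)]

# Each device computes a partial output from its own shard only.
partials = [matvec(W_k, x_k) for W_k, x_k in split_columns(W, x, 3)]
y_ideal = over_the_air_sum(partials)                 # noiseless aggregation
y_noisy = over_the_air_sum(partials, noise_std=0.1)  # distorted aggregation
y_full = matvec(W, x)                                # single-device reference
```

In the noiseless case the aggregated output matches the single-device result, which is why the sum is the only quantity the devices need to exchange. The gap between `y_noisy` and `y_full` is a toy analogue of the transmission error the abstract proposes to minimize.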

Remote Training in Task-Oriented Communication: Supervised or Self-Supervised with Fine-Tuning?

Feb 25, 2025

Abstract:Task-oriented communication focuses on extracting and transmitting only the information relevant to specific tasks, effectively minimizing communication overhead. Most existing methods prioritize reducing this overhead during inference, often assuming feasible local training or minimal training communication resources. However, in real-world wireless systems with dynamic connection topologies, training models locally for each new connection is impractical, and task-specific information is often unavailable before establishing connections. Therefore, minimizing training overhead and enabling label-free, task-agnostic pre-training before the connection establishment are essential for effective task-oriented communication. In this paper, we tackle these challenges by employing a mutual information maximization approach grounded in self-supervised learning and information-theoretic analysis. We propose an efficient strategy that pre-trains the transmitter in a task-agnostic and label-free manner, followed by joint fine-tuning of both the transmitter and receiver in a task-specific, label-aware manner. Simulation results show that our proposed method reduces training communication overhead to about half that of fully supervised methods using the SGD optimizer, demonstrating significant improvements in training efficiency.

AI and Deep Learning for THz Ultra-Massive MIMO: From Model-Driven Approaches to Foundation Models

Dec 13, 2024

Abstract:In this paper, we explore the potential of artificial intelligence (AI) to address the challenges posed by terahertz ultra-massive multiple-input multiple-output (THz UM-MIMO) systems. We begin by outlining the characteristics of THz UM-MIMO systems, and identify three primary challenges for the transceiver design: 'hard to compute', 'hard to model', and 'hard to measure'. We argue that AI can provide a promising solution to these challenges. We then propose two systematic research roadmaps for developing AI algorithms tailored for THz UM-MIMO systems. The first roadmap, called model-driven deep learning (DL), emphasizes the importance of leveraging available domain knowledge and advocates for adopting AI only to enhance the bottleneck modules within an established signal processing or optimization framework. We discuss four essential steps to make it work, including algorithmic frameworks, basis algorithms, loss function design, and neural architecture design. Afterwards, we present a forward-looking vision through the second roadmap, i.e., physical layer foundation models. This approach seeks to unify the design of different transceiver modules by focusing on their common foundation, i.e., the wireless channel. We propose to train a single, compact foundation model to estimate the score function of wireless channels, which can serve as a versatile prior for designing a wide variety of transceiver modules. We also guide readers through four essential steps, including general frameworks, conditioning, site-specific adaptation, and the joint design of foundation models and model-driven DL.

Siamese Machine Unlearning with Knowledge Vaporization and Concentration

Dec 02, 2024

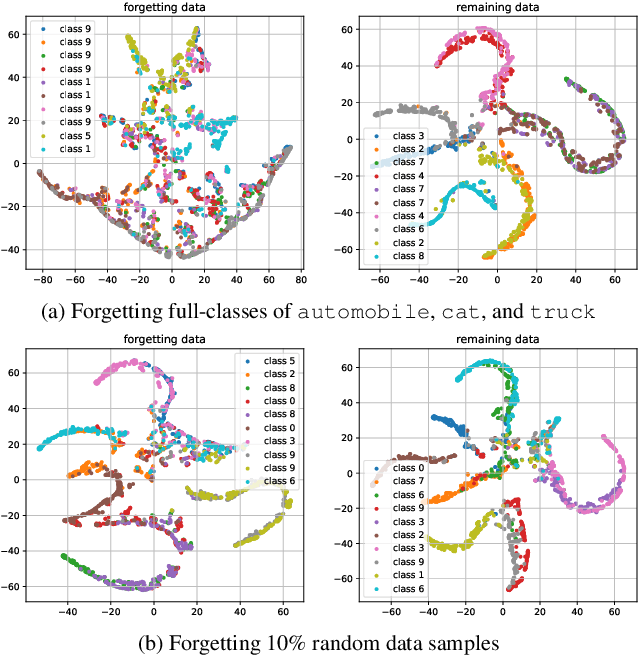

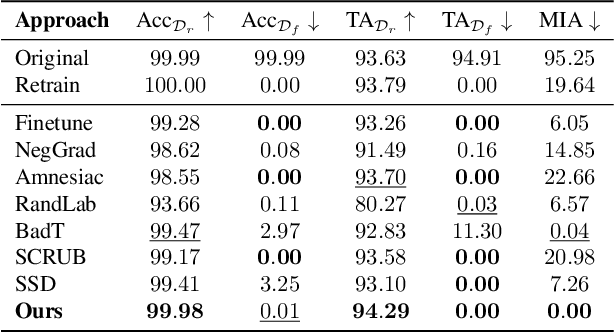

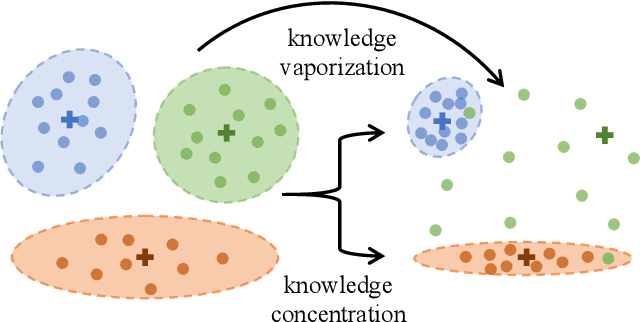

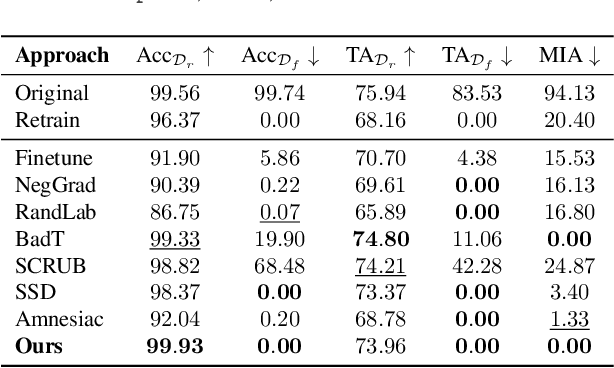

Abstract:In response to the practical demands of the "right to be forgotten" and the removal of undesired data, machine unlearning emerges as an essential technique to remove the learned knowledge of a fraction of data points from trained models. However, existing methods suffer from limitations such as insufficient methodological support, high computational complexity, and significant memory demands. In this work, we propose the concepts of knowledge vaporization and concentration to selectively erase learned knowledge from specific data points while maintaining representations for the remaining data. Utilizing Siamese networks, we exemplify the proposed concepts and develop an efficient method for machine unlearning. Our proposed Siamese unlearning method requires neither additional memory overhead nor full access to the remaining dataset. Extensive experiments conducted across multiple unlearning scenarios showcase the superiority of Siamese unlearning over baseline methods, illustrating its ability to effectively remove knowledge from forgetting data, enhance model utility on remaining data, and reduce susceptibility to membership inference attacks.

Toward Real-Time Edge AI: Model-Agnostic Task-Oriented Communication with Visual Feature Alignment

Dec 01, 2024

Abstract:Task-oriented communication presents a promising approach to improve the communication efficiency of edge inference systems by optimizing learning-based modules to extract and transmit relevant task information. However, real-time applications face practical challenges, such as incomplete coverage and potential malfunctions of edge servers. This situation necessitates cross-model communication between different inference systems, enabling edge devices from one service provider to collaborate effectively with edge servers from another. Independent optimization of diverse edge systems often leads to incoherent feature spaces, which hinders the cross-model inference for existing task-oriented communication. To facilitate and achieve effective cross-model task-oriented communication, this study introduces a novel framework that utilizes shared anchor data across diverse systems. This approach addresses the challenge of feature alignment in both server-based and on-device scenarios. In particular, by leveraging the linear invariance of visual features, we propose efficient server-based feature alignment techniques to estimate linear transformations using encoded anchor data features. For on-device alignment, we exploit the angle-preserving nature of visual features and propose to encode relative representations with anchor data to streamline cross-model communication without additional alignment procedures during the inference. The experimental results on computer vision benchmarks demonstrate the superior performance of the proposed feature alignment approaches in cross-model task-oriented communications. The runtime and computation overhead analysis further confirm the effectiveness of the proposed feature alignment approaches in real-time applications.
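The on-device idea of encoding relative representations with anchor data can be sketched as follows. The sketch assumes the relative representation of a feature is its vector of cosine similarities to the encoded anchors, and that two models' feature spaces differ only by a rotation and a uniform scaling. Both are idealized assumptions for illustration; the names and values are hypothetical and not the paper's implementation.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def relative_representation(feature, anchor_features):
    """Describe a feature by its angles to the shared anchors' features."""
    return [cosine(feature, a) for a in anchor_features]

def rotate_and_scale(v, theta, scale):
    """Toy stand-in for a second model's feature space (2-D only)."""
    c, s = math.cos(theta), math.sin(theta)
    return [scale * (c * v[0] - s * v[1]), scale * (s * v[0] + c * v[1])]

# Model A: encoded anchors and one encoded input (hypothetical values).
anchors_a = [[1.0, 0.0], [0.5, 1.0], [-1.0, 2.0]]
feature_a = [0.8, 0.3]

# Model B: the same data seen through a rotated, rescaled feature space.
anchors_b = [rotate_and_scale(a, 0.7, 2.5) for a in anchors_a]
feature_b = rotate_and_scale(feature_a, 0.7, 2.5)

rel_a = relative_representation(feature_a, anchors_a)
rel_b = relative_representation(feature_b, anchors_b)
```

Under these assumptions `rel_a` and `rel_b` coincide up to floating-point error, so a receiver trained on relative representations from one model could consume those from another without a separate alignment step at inference time.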
