Songjie Xie

Mutual Information-Empowered Task-Oriented Communication: Principles, Applications and Challenges

Mar 26, 2025

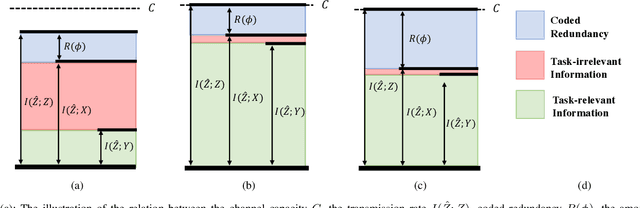

Abstract: Mutual information (MI)-based guidelines have recently proven effective for designing task-oriented communication systems, where the ultimate goal is to extract and transmit task-relevant information for downstream tasks. This paper provides a comprehensive overview of MI-empowered task-oriented communication, highlighting how MI-based methods can serve as a unifying design framework in various task-oriented communication scenarios. We begin with the roadmap of MI for designing task-oriented communication systems, and then introduce the roles and applications of MI in guiding feature encoding, transmission optimization, and efficient training with two case studies. We further elaborate on the limitations and challenges of MI-based methods. Finally, we identify several open issues in MI-based task-oriented communication to inspire future research.

Siamese Machine Unlearning with Knowledge Vaporization and Concentration

Dec 02, 2024

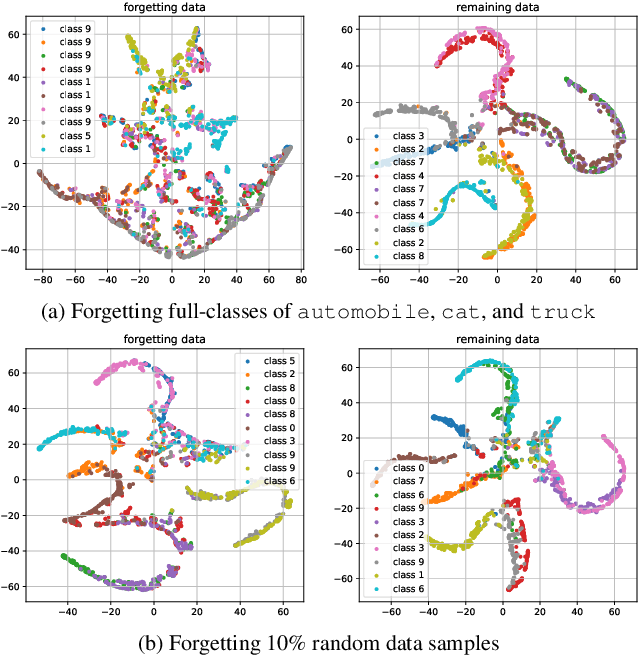

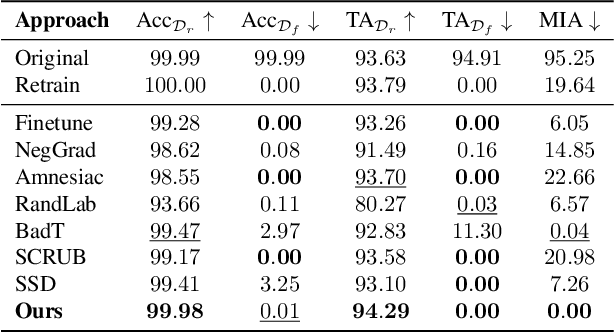

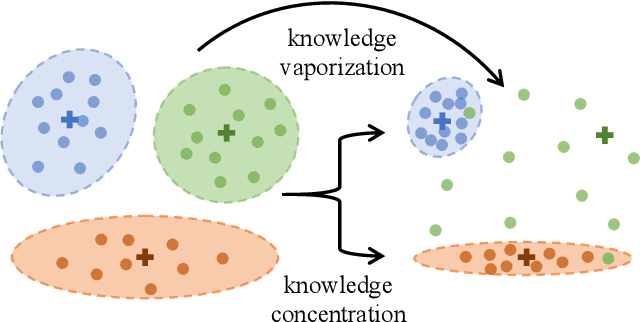

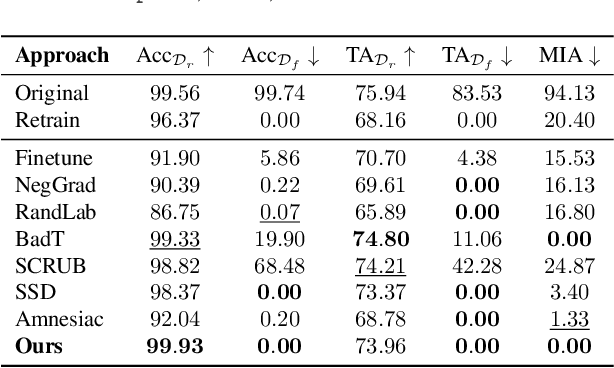

Abstract: In response to the practical demands of the "right to be forgotten" and the removal of undesired data, machine unlearning emerges as an essential technique to remove the learned knowledge of a fraction of data points from trained models. However, existing methods suffer from limitations such as insufficient methodological support, high computational complexity, and significant memory demands. In this work, we propose the concepts of knowledge vaporization and concentration to selectively erase learned knowledge from specific data points while maintaining representations for the remaining data. Using Siamese networks, we exemplify the proposed concepts and develop an efficient method for machine unlearning. Our proposed Siamese unlearning method requires neither additional memory overhead nor full access to the remaining dataset. Extensive experiments conducted across multiple unlearning scenarios showcase the superiority of Siamese unlearning over baseline methods, illustrating its ability to effectively remove knowledge from forgetting data, enhance model utility on remaining data, and reduce susceptibility to membership inference attacks.

Toward Real-Time Edge AI: Model-Agnostic Task-Oriented Communication with Visual Feature Alignment

Dec 01, 2024

Abstract: Task-oriented communication presents a promising approach to improve the communication efficiency of edge inference systems by optimizing learning-based modules to extract and transmit relevant task information. However, real-time applications face practical challenges, such as incomplete coverage and potential malfunctions of edge servers. This situation necessitates cross-model communication between different inference systems, enabling edge devices from one service provider to collaborate effectively with edge servers from another. Independent optimization of diverse edge systems often leads to incoherent feature spaces, which hinders cross-model inference for existing task-oriented communication. To achieve effective cross-model task-oriented communication, this study introduces a novel framework that utilizes shared anchor data across diverse systems. This approach addresses the challenge of feature alignment in both server-based and on-device scenarios. In particular, by leveraging the linear invariance of visual features, we propose efficient server-based feature alignment techniques to estimate linear transformations using encoded anchor data features. For on-device alignment, we exploit the angle-preserving nature of visual features and propose to encode relative representations with anchor data to streamline cross-model communication without additional alignment procedures during inference. The experimental results on computer vision benchmarks demonstrate the superior performance of the proposed feature alignment approaches in cross-model task-oriented communications. The runtime and computation overhead analysis further confirms the effectiveness of the proposed feature alignment approaches in real-time applications.
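The two alignment ideas named in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the least-squares estimator, the cosine-similarity encoding, and the synthetic setup (system B's feature space modeled as an orthogonal transform of system A's) are all assumptions made for illustration.

```python
import numpy as np

def estimate_linear_map(anchors_src, anchors_dst):
    """Least-squares estimate of W such that anchors_src @ W ~= anchors_dst.

    Hypothetical stand-in for server-based alignment from anchor features.
    """
    W, *_ = np.linalg.lstsq(anchors_src, anchors_dst, rcond=None)
    return W

def relative_representation(features, anchor_features):
    """Cosine similarity of every feature vector to every anchor feature.

    Hypothetical stand-in for on-device alignment: angles are unchanged by
    an orthogonal transform, so both systems produce the same encoding.
    """
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    a = anchor_features / np.linalg.norm(anchor_features, axis=1, keepdims=True)
    return f @ a.T

rng = np.random.default_rng(0)
d, n_anchors = 8, 32

# Assumed toy setup: system B's features are an orthogonal transform of A's.
Q, _ = np.linalg.qr(rng.normal(size=(d, d)))
anchors_a = rng.normal(size=(n_anchors, d))  # anchor data encoded by system A
anchors_b = anchors_a @ Q                    # same anchors encoded by system B
x_a = rng.normal(size=(5, d))                # new inputs encoded by system A
x_b = x_a @ Q                                # same inputs encoded by system B

# Server-based: map A's features into B's space with the estimated transform.
W = estimate_linear_map(anchors_a, anchors_b)
aligned = x_a @ W

# On-device: encode relative to anchors; no transform needs to be estimated.
rel_a = relative_representation(x_a, anchors_a)
rel_b = relative_representation(x_b, anchors_b)
```

In this idealized setting `aligned` matches `x_b` and `rel_a` matches `rel_b` up to floating-point error. Independently trained encoders would satisfy these relations only approximately.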

Privacy for Fairness: Information Obfuscation for Fair Representation Learning with Local Differential Privacy

Feb 16, 2024

Abstract: As machine learning (ML) becomes more prevalent in human-centric applications, there is a growing emphasis on algorithmic fairness and privacy protection. While previous research has explored these areas as separate objectives, there is a growing recognition of the complex relationship between privacy and fairness. However, previous works have primarily focused on examining the interplay between privacy and fairness through empirical investigations, with limited attention given to theoretical exploration. This study aims to bridge this gap by introducing a theoretical framework that enables a comprehensive examination of their interrelation. We shall develop and analyze an information bottleneck (IB) based information obfuscation method with local differential privacy (LDP) for fair representation learning. In contrast to many empirical studies on fairness in ML, we show that the incorporation of LDP randomizers during the encoding process can enhance the fairness of the learned representation. Our analysis will demonstrate that the disclosure of sensitive information is constrained by the privacy budget of the LDP randomizer, thereby enabling the optimization process within the IB framework to effectively suppress sensitive information while preserving the desired utility through obfuscation. Based on the proposed method, we further develop a variational representation encoding approach that simultaneously achieves fairness and LDP. Our variational encoding approach offers practical advantages. It is trained using a non-adversarial method and does not require the introduction of any variational prior. Extensive experiments will be presented to validate our theoretical results and demonstrate the ability of our proposed approach to achieve both LDP and fairness while preserving adequate utility.
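To make the role of the privacy budget concrete, here is a minimal sketch of one standard LDP randomizer, k-ary randomized response. The abstract does not say which randomizer the paper uses, so the mechanism and the names `keep_probability` and `randomized_response` are assumptions chosen only to show how the budget epsilon bounds what an output can reveal about the input.

```python
import math
import random

def keep_probability(epsilon, k):
    """Probability of reporting the true value under k-ary randomized response."""
    return math.exp(epsilon) / (math.exp(epsilon) + k - 1)

def randomized_response(value, domain, epsilon, rng=random):
    """Report `value` with probability p, otherwise a uniformly chosen other value.

    The ratio between the probability of any output under two different
    inputs is at most exp(epsilon), which is the epsilon-LDP guarantee.
    """
    p = keep_probability(epsilon, len(domain))
    if rng.random() < p:
        return value
    others = [v for v in domain if v != value]
    return rng.choice(others)

# Hypothetical usage: a four-symbol domain and a privacy budget of 1.0.
domain = ["a", "b", "c", "d"]
report = randomized_response("a", domain, epsilon=1.0, rng=random.Random(0))
```

A smaller epsilon pushes the keep probability toward 1/k, so the report carries less information about the true value. This is the sense in which a privacy budget limits disclosure.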

Robust Information Bottleneck for Task-Oriented Communication with Digital Modulation

Sep 21, 2022

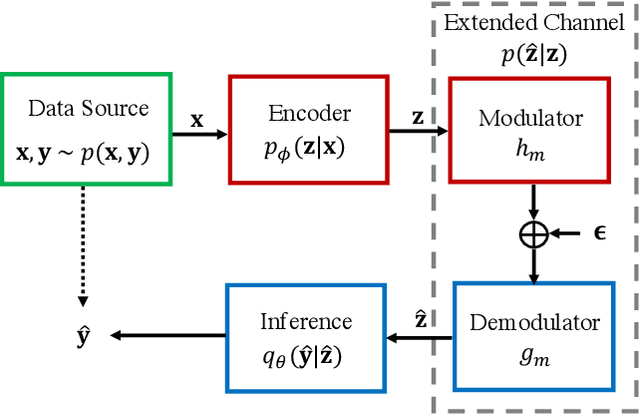

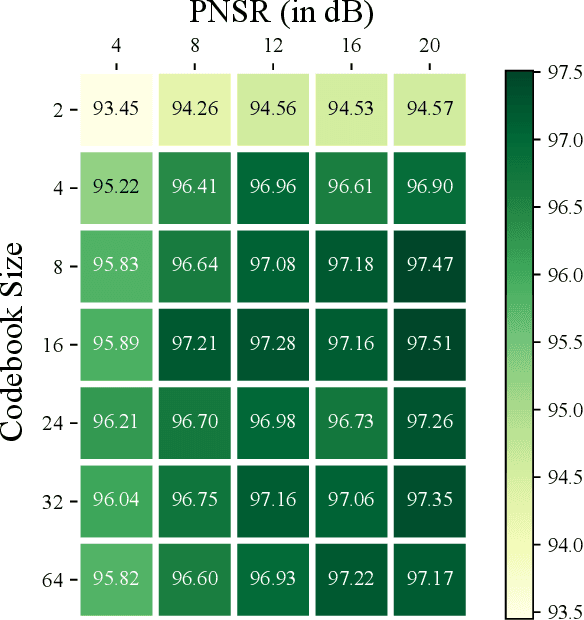

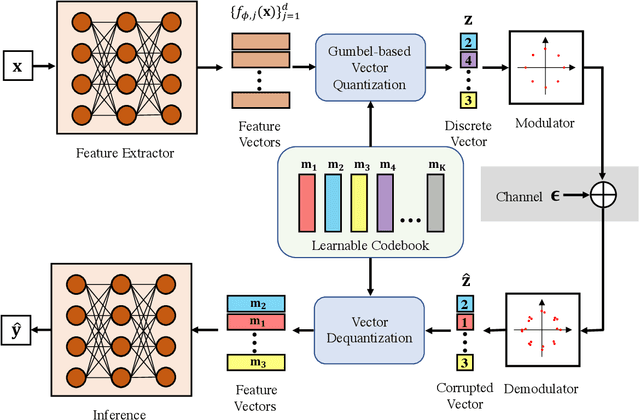

Abstract: Task-oriented communications, mostly using learning-based joint source-channel coding (JSCC), aim to design a communication-efficient edge inference system by transmitting task-relevant information to the receiver. However, transmitting only task-relevant information without introducing any redundancy may cause robustness issues in learning due to channel variations, and JSCC, which directly maps the source data into continuous channel input symbols, poses compatibility issues with existing digital communication systems. In this paper, we address these two issues by first investigating the inherent tradeoff between the informativeness of the encoded representations and the robustness to information distortion in the received representations, and then proposing a task-oriented communication scheme with digital modulation, named discrete task-oriented JSCC (DT-JSCC), where the transmitter encodes the features into a discrete representation and transmits it to the receiver with the digital modulation scheme. In the DT-JSCC scheme, we develop a robust encoding framework, named robust information bottleneck (RIB), to improve the communication robustness to channel variations, and derive a tractable variational upper bound of the RIB objective function using the variational approximation to overcome the computational intractability of mutual information. The experimental results demonstrate that the proposed DT-JSCC achieves better inference performance than the baseline methods with low communication latency, and exhibits robustness to channel variations due to the applied RIB framework.
