Arumugam Nallanathan

Sampling-Free Diffusion Transformers for Low-Complexity MIMO Channel Estimation

Feb 02, 2026

Abstract: Diffusion model-based channel estimators have shown impressive performance but suffer from high computational complexity because they rely on iterative reverse sampling. This paper proposes a sampling-free diffusion transformer (DiT) for low-complexity MIMO channel estimation, termed SF-DiT-CE. Exploiting the angular-domain sparsity of MIMO channels, we train a lightweight DiT to directly predict clean channels from their perturbed observations and noise levels. At inference, the least-squares (LS) estimate and the estimation noise level condition the DiT to recover the channel in a single forward pass, eliminating iterative sampling. Numerical results demonstrate that our method achieves superior estimation accuracy and robustness with significantly lower complexity than state-of-the-art baselines.
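The LS estimate that conditions the network can be illustrated with a minimal NumPy sketch. It assumes the standard pilot model Y = HX + N with known pilots X. The unitary DFT pilots, array sizes, and noise level are illustrative assumptions and are not taken from the paper, and the DiT itself is not shown.

```python
import numpy as np

def ls_channel_estimate(Y, X):
    """Least-squares estimate of H from Y = H X + N, with pilots X known."""
    # H_ls = Y X^H (X X^H)^{-1}; the pseudo-inverse computes this right inverse.
    return Y @ np.linalg.pinv(X)

rng = np.random.default_rng(0)
n_rx, n_tx = 4, 8  # hypothetical array sizes

# Unitary DFT pilot matrix (assumed here for illustration only)
X = np.fft.fft(np.eye(n_tx)) / np.sqrt(n_tx)

# Random complex channel and additive noise with an assumed standard deviation
H = (rng.standard_normal((n_rx, n_tx)) + 1j * rng.standard_normal((n_rx, n_tx))) / np.sqrt(2)
noise_std = 0.1
N = noise_std * (rng.standard_normal((n_rx, n_tx)) + 1j * rng.standard_normal((n_rx, n_tx))) / np.sqrt(2)

Y = H @ X + N
H_ls = ls_channel_estimate(Y, X)            # noisy LS estimate
H_ls_clean = ls_channel_estimate(H @ X, X)  # noise-free case recovers H
```

With unitary pilots the LS error equals the noise rotated by X^H, so its norm matches the noise norm. This is why the LS estimate can be viewed as a perturbed observation of the clean channel at a known noise level.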

Multi-Mode Pinching Antenna Systems Enabled Multi-User Communications

Jan 28, 2026

Abstract: This paper proposes a novel multi-mode pinching-antenna system (PASS) framework. Multiple data streams can be transmitted within a single waveguide through multiple guided modes, thus facilitating efficient multi-user communications through mode-domain multiplexing. A physical model is derived, which reveals the mode-selective power radiation feature of pinching antennas (PAs). A two-mode PASS-enabled two-user downlink communication system is investigated. Considering the mode selectivity of PA power radiation, a practical PA grouping scheme is proposed, where each PA group matches with one specific guided mode and mainly radiates its signal sequentially. Depending on whether the guided mode leaks power to unmatched PAs or not, the proposed PA grouping scheme operates in either the non-leakage or weak-leakage regime. Based on this, the baseband beamforming and PA locations are jointly optimized for sum rate maximization, subject to each user's minimum rate requirement. 1) A simple two-PA case in the non-leakage regime is first considered. To solve the formulated problem, a channel-orthogonality-based solution is proposed. The channel orthogonality is ensured by large-scale and wavelength-scale equality constraints on PA locations. Thus, the optimal beamforming reduces to maximum-ratio transmission (MRT). Moreover, the optimal PA locations are obtained via a Newton-based one-dimensional search algorithm that enforces the two-scale PA-location constraints. 2) A general multi-PA case in both non-leakage and weak-leakage regimes is further considered. A low-complexity particle-swarm optimization with zero-forcing beamforming (PSO-ZF) algorithm is developed, thus effectively tackling the highly oscillatory and strongly coupled problem. Simulation results demonstrate the superiority of the proposed multi-mode PASS over conventional single-mode PASS and fixed-antenna structures.
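The statement that beamforming reduces to MRT under channel orthogonality can be checked numerically. The sketch below is a generic illustration with toy 2x2 channel matrices whose rows are user channels. The matrices and function names are hypothetical and are not the paper's PASS channel model.

```python
import numpy as np

def mrt_beamformer(H):
    """Maximum-ratio transmission: unit-norm conjugate of each user's channel."""
    W = H.conj().T
    return W / np.linalg.norm(W, axis=0, keepdims=True)

def zf_beamformer(H):
    """Zero-forcing: unit-norm columns of the right pseudo-inverse of H."""
    W = np.linalg.pinv(H)
    return W / np.linalg.norm(W, axis=0, keepdims=True)

# Toy case 1: the two user channels (rows) are mutually orthogonal
H_orth = np.array([[1.0, 1.0j],
                   [1.0, -1.0j]])
W_mrt_orth = mrt_beamformer(H_orth)
W_zf_orth = zf_beamformer(H_orth)

# Toy case 2: non-orthogonal channels, where MRT leaves inter-user interference
H_gen = np.array([[1.0, 0.5j],
                  [0.8, 1.0]])
G_mrt = H_gen @ mrt_beamformer(H_gen)  # effective channel, off-diagonals nonzero
G_zf = H_gen @ zf_beamformer(H_gen)    # effective channel, off-diagonals ~ 0
```

In the orthogonal case the two beamformers coincide, so nothing is lost by using MRT. In the general case only ZF removes the off-diagonal interference terms, which is consistent with pairing ZF with a search over PA locations when orthogonality cannot be enforced.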

A Survey of Pinching-Antenna Systems (PASS)

Jan 26, 2026

Abstract: The pinching-antenna system (PASS), recently proposed as a flexible-antenna technology, has been regarded as a promising solution for several challenges in next-generation wireless networks. It provides large-scale antenna reconfiguration, establishes stable line-of-sight links, mitigates signal blockage, and exploits near-field advantages through its distinctive architecture. This article aims to present a comprehensive overview of the state of the art in PASS. The fundamental principles of PASS are first discussed, including its hardware architecture, circuit and physical models, and signal models. Several emerging PASS designs, such as segmented PASS (S-PASS), center-fed PASS (C-PASS), and multi-mode PASS (M-PASS), are subsequently introduced, and their design features are discussed. In addition, the properties and promising applications of PASS for wireless sensing are reviewed. On this basis, recent progress in the performance analysis of PASS for both communications and sensing is surveyed, and the performance gains achieved by PASS are highlighted. Existing research contributions in optimization and machine learning are also summarized, with the practical challenges of beamforming and resource allocation being identified in relation to the unique transmission structure and propagation characteristics of PASS. Finally, several variants of PASS are presented, and key implementation challenges that remain open for future study are discussed.

User Localization and Channel Estimation for Pinching-Antenna Systems (PASS)

Dec 16, 2025

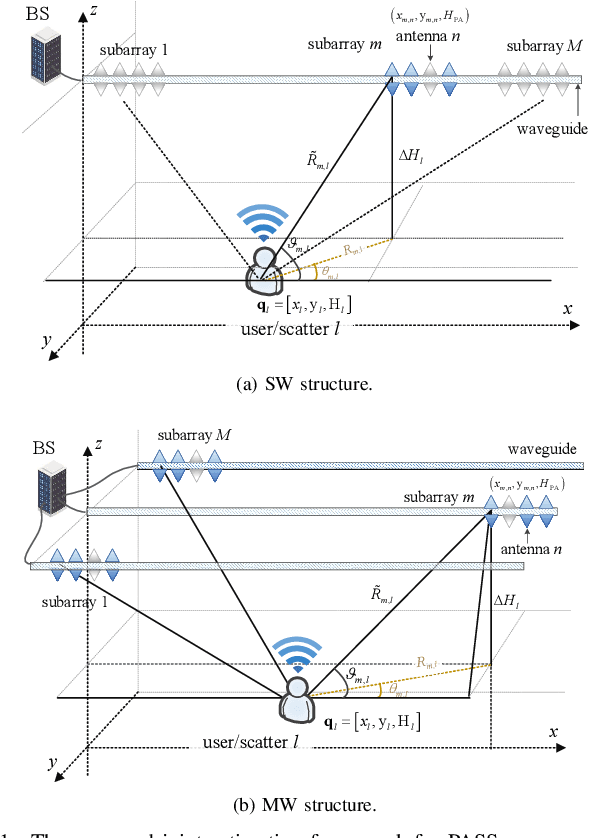

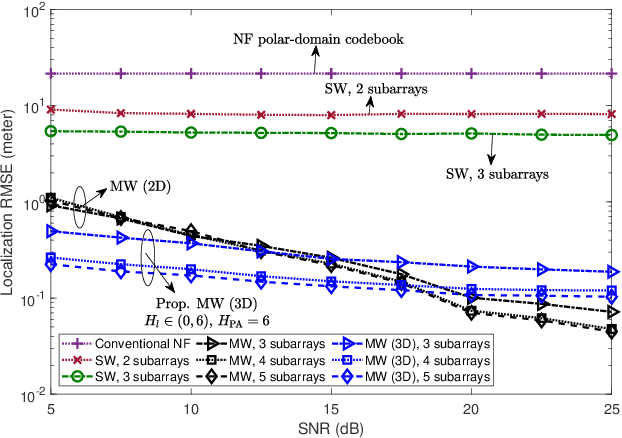

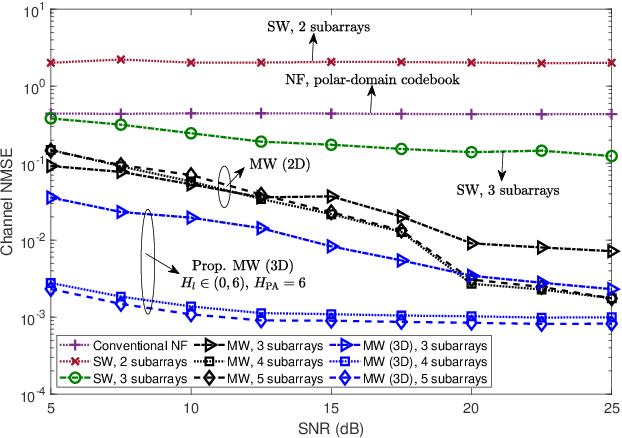

Abstract: This letter proposes a novel user localization and channel estimation framework for pinching-antenna systems (PASS), where pinching antennas are grouped into subarrays on each waveguide to cooperatively estimate user/scatterer locations, thus reconstructing channels. Both single-waveguide (SW) and multi-waveguide (MW) structures are considered. SW consists of multiple alternately activated subarrays, while MW deploys one subarray on each waveguide to enable concurrent subarray measurements. For 2D scenarios with a fixed user/scatterer height, an orthogonal matching pursuit-based geometry-consistent localization (OMP-GCL) algorithm is proposed, which leverages inter-subarray geometric relationships and compressed sensing for precise estimation. Theoretical analysis of the Cramér-Rao lower bound (CRLB) demonstrates that: 1) the estimation accuracy can be improved by increasing the geometric diversity through multi-subarray deployment; and 2) SW provides limited geometric diversity within a $180^\circ$ half space and leads to angle ambiguity, while MW enables full-space observations and reduces overheads. The OMP-GCL algorithm is further extended to 3D scenarios, where user and scatterer heights are also estimated. Numerical results validate the theoretical analysis, and verify that MW achieves centimeter- and decimeter-level localization accuracy in 2D and 3D scenarios with only three waveguides.
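For readers unfamiliar with the compressed-sensing building block named above, the following is a minimal sketch of plain orthogonal matching pursuit. It is not the OMP-GCL algorithm: the geometry-consistent step is omitted, and the unitary DFT dictionary and sparse vector are toy assumptions chosen so that recovery is exact.

```python
import numpy as np

def omp(A, y, sparsity):
    """Orthogonal matching pursuit: greedily select `sparsity` columns of A to explain y."""
    residual = y.copy()
    support = []
    coef = np.zeros(0, dtype=complex)
    for _ in range(sparsity):
        # Select the atom most correlated with the current residual
        correlations = np.abs(A.conj().T @ residual)
        if support:
            correlations[support] = 0.0  # never reselect an atom
        support.append(int(np.argmax(correlations)))
        # Jointly re-fit all selected atoms by least squares
        coef, *_ = np.linalg.lstsq(A[:, support], y, rcond=None)
        residual = y - A[:, support] @ coef
    x_hat = np.zeros(A.shape[1], dtype=complex)
    x_hat[support] = coef
    return x_hat, sorted(support)

# Toy dictionary: 16-point unitary DFT (a hypothetical stand-in for a location grid)
n = 16
A = np.fft.fft(np.eye(n)) / np.sqrt(n)

# Three-sparse ground truth with distinct magnitudes
x_true = np.zeros(n, dtype=complex)
x_true[[2, 7, 11]] = [1.0, 0.8j, -0.6]

y = A @ x_true
x_hat, support = omp(A, y, sparsity=3)
```

In a localization setting, each dictionary column would presumably correspond to a candidate user or scatterer position, so the recovered support indicates which positions are occupied.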

Segmented Waveguide-Enabled Pinching-Antenna Systems (SWANs) for ISAC

Dec 08, 2025

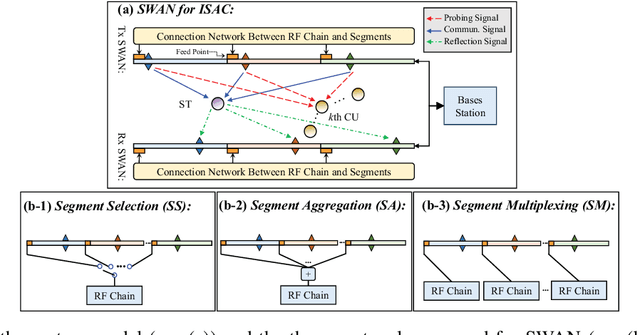

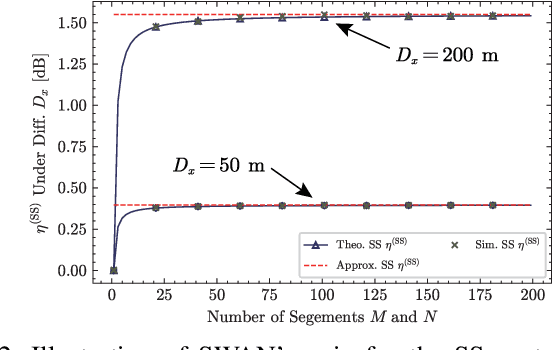

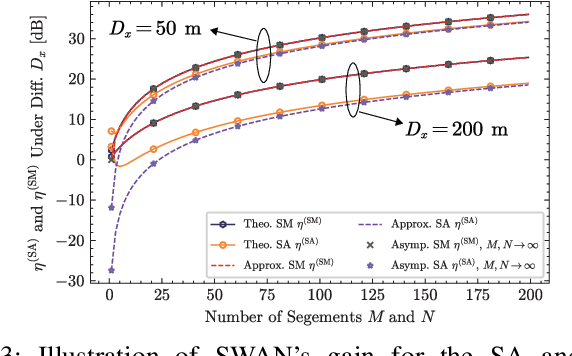

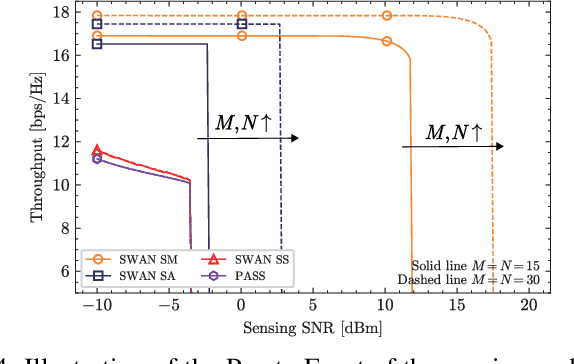

Abstract: A segmented waveguide-enabled pinching-antenna system (SWAN)-assisted integrated sensing and communications (ISAC) framework is proposed. Unlike conventional pinching antenna systems (PASS), which use a single long waveguide, SWAN divides the waveguide into multiple short segments, each with a dedicated feed point. Thanks to the segmented structure, SWAN enhances sensing performance by significantly simplifying the reception model and reducing the in-waveguide propagation loss. To balance performance and complexity, three segment controlling protocols are proposed for the transceivers, namely i) segment selection to select a single segment for signal transceiving, ii) segment aggregation to aggregate signals from all segments using a single RF chain, and iii) segment multiplexing to jointly process the signals from all segments using individual RF chains. The theoretical sensing performance limit is first analyzed for different protocols, unveiling how the sensing performance gain of SWAN scales with the number of segments. Based on this performance limit, the Pareto fronts of sensing and communication performance are characterized for the simple one-user one-target case, which is then extended to the general multi-user single-target case based on time-division multiple access (TDMA). Numerical results are presented to verify the correctness of the derivations and the effectiveness of the proposed algorithms, which jointly confirm the advantages of SWAN-assisted ISAC.

GNN-Enabled Robust Hybrid Beamforming with Score-Based CSI Generation and Denoising

Nov 10, 2025

Abstract: Accurate Channel State Information (CSI) is critical for Hybrid Beamforming (HBF) tasks. However, obtaining high-resolution CSI remains challenging in practical wireless communication systems. To address this issue, we propose to utilize Graph Neural Networks (GNNs) and score-based generative models to enable robust HBF under imperfect CSI conditions. Firstly, we develop the Hybrid Message Graph Attention Network (HMGAT) which updates both node and edge features through node-level and edge-level message passing. Secondly, we design a Bidirectional Encoder Representations from Transformers (BERT)-based Noise Conditional Score Network (NCSN) to learn the distribution of high-resolution CSI, facilitating CSI generation and data augmentation to further improve HMGAT's performance. Finally, we present a Denoising Score Network (DSN) framework and its instantiation, termed DeBERT, which can denoise imperfect CSI under arbitrary channel error levels, thereby facilitating robust HBF. Experiments on DeepMIMO urban datasets demonstrate the proposed models' superior generalization, scalability, and robustness across various HBF tasks with perfect and imperfect CSI.

Large Language Model-Empowered Decision Transformer for UAV-Enabled Data Collection

Sep 17, 2025

Abstract: The deployment of unmanned aerial vehicles (UAVs) for reliable and energy-efficient data collection from spatially distributed devices holds great promise in supporting diverse Internet of Things (IoT) applications. Nevertheless, the limited endurance and communication range of UAVs necessitate intelligent trajectory planning. While reinforcement learning (RL) has been extensively explored for UAV trajectory optimization, its interactive nature entails high costs and risks in real-world environments. Offline RL mitigates these issues but remains susceptible to unstable training and relies heavily on expert-quality datasets. To address these challenges, we formulate a joint UAV trajectory planning and resource allocation problem to maximize the energy efficiency of data collection. The resource allocation subproblem is first transformed into an equivalent linear programming formulation and solved optimally with polynomial-time complexity. Then, we propose a large language model (LLM)-empowered critic-regularized decision transformer (DT) framework, termed LLM-CRDT, to learn effective UAV control policies. In LLM-CRDT, we incorporate critic networks to regularize the DT model training, thereby integrating the sequence modeling capabilities of DT with critic-based value guidance to enable learning effective policies from suboptimal datasets. Furthermore, to mitigate the data-hungry nature of transformer models, we employ a pre-trained LLM as the transformer backbone of the DT model and adopt a parameter-efficient fine-tuning strategy, i.e., LoRA, enabling rapid adaptation to UAV control tasks with small-scale datasets and low computational overhead. Extensive simulations demonstrate that LLM-CRDT outperforms benchmark online and offline RL methods, achieving up to 36.7% higher energy efficiency than the current state-of-the-art DT approaches.

Pinching-Antenna Systems (PASS): A Tutorial

Aug 11, 2025

Abstract: Pinching antenna systems (PASS) present a breakthrough among the flexible-antenna technologies, and distinguish themselves by facilitating large-scale antenna reconfiguration, line-of-sight creation, scalable implementation, and near-field benefits, thus bringing wireless communications from the last mile to the last meter. A comprehensive tutorial is presented in this paper. First, the fundamentals of PASS are discussed, including PASS signal models, hardware models, power radiation models, and pinching antenna activation methods. Building upon this, the information-theoretic capacity limits achieved by PASS are characterized, and several typical performance metrics of PASS-based communications are analyzed to demonstrate its superiority over conventional antenna technologies. Next, the pinching beamforming design is investigated. The corresponding power scaling law is first characterized. For the joint transmit and pinching design in the general multiple-waveguide case, 1) a pair of transmission strategies is proposed for PASS-based single-user communications to validate the superiority of PASS, namely sub-connected and fully connected structures; and 2) three practical protocols are proposed for facilitating PASS-based multi-user communications, namely waveguide switching, waveguide division, and waveguide multiplexing. A possible implementation of PASS in wideband communications is further highlighted. Moreover, the channel state information acquisition in PASS is elaborated with a pair of promising solutions. To overcome the high complexity and suboptimality inherent in conventional convex-optimization-based approaches, machine-learning-based methods for operating PASS are also explored, focusing on selected deep neural network architectures and training algorithms. Finally, several promising applications of PASS in next-generation wireless networks are highlighted.

Wireless Sensing via Pinching-Antenna Systems

May 21, 2025

Abstract: A wireless sensing architecture via pinching-antenna systems (PASS) is proposed. Compared to conventional wireless systems, PASS offers flexible antenna deployment and improved probing performance for wireless sensing by leveraging dielectric waveguides and pinching antennas (PAs). To enhance signal reception, leaky coaxial (LCX) cables are used to uniformly collect echo signals over a wide area. The Cramér-Rao bound (CRB) for multi-target sensing is derived and then minimized through the joint optimization of the transmit waveform and the positions of PAs. To solve the resulting highly coupled, non-convex problem, a two-stage particle swarm optimization (PSO)-based algorithm is proposed. Numerical results demonstrate significant gains in sensing accuracy and robustness over conventional sensing systems, highlighting the benefits of integrating LCX-based reception with optimized PASS configurations.

Pinching-Antenna Systems (PASS): Power Radiation Model and Optimal Beamforming Design

Apr 30, 2025

Abstract: Pinching-antenna systems (PASS) improve wireless links by configuring the locations of activated pinching antennas along dielectric waveguides, namely pinching beamforming. In this paper, a novel adjustable power radiation model is proposed for PASS, where power radiation ratios of pinching antennas can be flexibly controlled by tuning the spacing between pinching antennas and waveguides. A closed-form pinching antenna spacing arrangement strategy is derived to achieve the commonly assumed equal-power radiation. Based on this, a practical PASS framework relying on discrete activation is considered, where pinching antennas can only be activated among a set of predefined locations. A transmit power minimization problem is formulated, which jointly optimizes the transmit beamforming, pinching beamforming, and the numbers of activated pinching antennas, subject to each user's minimum rate requirement. (1) To solve the resulting highly coupled mixed-integer nonlinear programming (MINLP) problem, branch-and-bound (BnB)-based algorithms are proposed for both single-user and multi-user scenarios, which are guaranteed to converge to globally optimal solutions. (2) A low-complexity many-to-many matching algorithm is further developed. Combined with the Karush-Kuhn-Tucker (KKT) theory, locally optimal and pairwise-stable solutions are obtained within polynomial-time complexity. Simulation results demonstrate that: (i) PASS significantly outperforms conventional multi-antenna architectures, particularly when the number of users and the spatial range increase; and (ii) the proposed matching-based algorithm achieves near-optimal performance, resulting in only a slight performance loss while significantly reducing computational overheads. Code is available at https://github.com/xiaoxiaxusummer/PASS_Discrete
