Yansha Deng

Goal-oriented Communication for Fast and Robust Robotic Fault Detection and Recovery

Jan 26, 2026

Abstract:Autonomous robotic systems are widely deployed in smart factories and operate in dynamic, uncertain, and human-involved environments that require low-latency and robust fault detection and recovery (FDR). However, existing FDR frameworks exhibit various limitations, such as significant delays in communication and computation, and unreliability in robot motion/trajectory generation, mainly because the communication-computation-control (3C) loop is designed without considering the downstream FDR goal. To address this, we propose a novel Goal-oriented Communication (GoC) framework that jointly designs the 3C loop tailored for fast and robust robotic FDR, with the goal of minimising the FDR time while maximising the robotic task (e.g., workpiece sorting) success rate. For fault detection, our GoC framework innovatively defines and extracts the 3D scene graph (3D-SG) as the semantic representation via our designed representation extractor, and detects faults by monitoring spatial relationship changes in the 3D-SG. For fault recovery, we fine-tune a small language model (SLM) via Low-Rank Adaptation (LoRA) and enhance its reasoning and generalization capabilities via knowledge distillation to generate recovery motions for robots. We also design a lightweight goal-oriented digital twin reconstruction module to refine the recovery motions generated by the SLM when fine-grained robotic control is required, using only task-relevant object contours for digital twin reconstruction. Extensive simulations demonstrate that our GoC framework reduces the FDR time by up to 82.6% and improves the task success rate by up to 76%, compared to the state-of-the-art frameworks that rely on vision language models for fault detection and large language models for fault recovery.
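The idea of detecting faults by monitoring spatial relationship changes in a scene graph can be illustrated with a minimal sketch. It assumes the graph is reduced to a set of (subject, predicate, object) triples; the `Relation` type, the `detect_faults` helper, and the example object and relation names are hypothetical and are not taken from the paper.

```python
from __future__ import annotations

from typing import NamedTuple


class Relation(NamedTuple):
    """One scene-graph edge, e.g. ("workpiece", "held_by", "gripper")."""
    subject: str
    predicate: str
    obj: str


def detect_faults(expected: set[Relation], observed: set[Relation]) -> dict[str, set[Relation]]:
    """Compare the relations the task expects with those currently observed."""
    return {
        "missing": expected - observed,      # should hold, but no longer does
        "unexpected": observed - expected,   # holds now, but was not planned
    }


# Hypothetical example: the workpiece slipped out of the gripper.
expected = {
    Relation("workpiece", "held_by", "gripper"),
    Relation("bin", "on", "table"),
}
observed = {
    Relation("workpiece", "on", "floor"),
    Relation("bin", "on", "table"),
}

report = detect_faults(expected, observed)
fault_detected = bool(report["missing"] or report["unexpected"])
```

Because only the changed triples are reported, a downstream recovery step would receive a compact description of the fault rather than raw sensor data.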

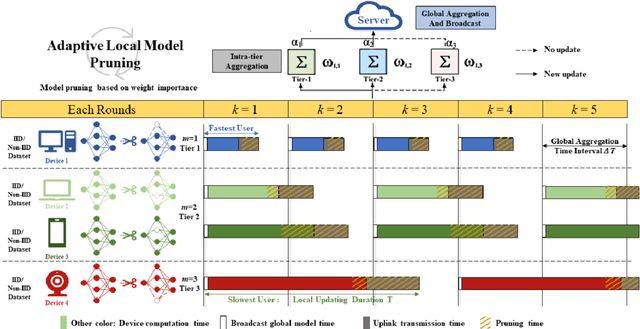

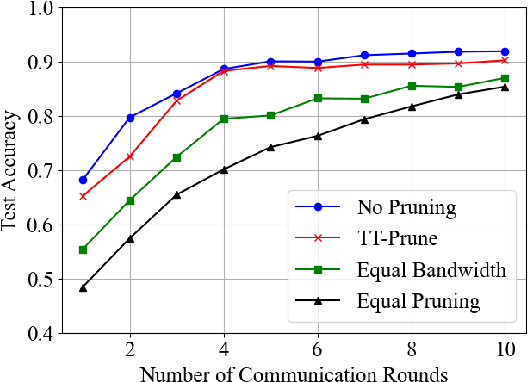

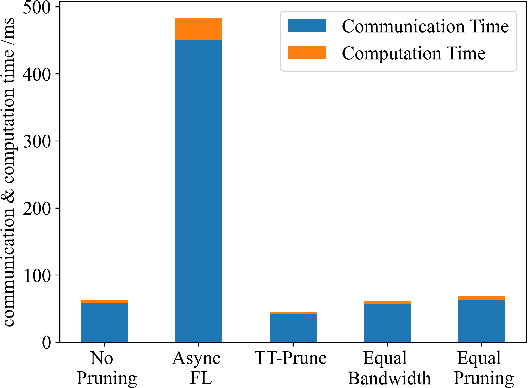

TT-Prune: Joint Model Pruning and Resource Allocation for Communication-efficient Time-triggered Federated Learning

Nov 06, 2025

Abstract:Federated learning (FL) offers new opportunities in machine learning, particularly in addressing data privacy concerns. In contrast to conventional event-based federated learning, time-triggered federated learning (TT-Fed), as a general form of both asynchronous and synchronous FL, clusters users into different tiers based on fixed time intervals. However, the FL network consists of a growing number of user devices with limited wireless bandwidth, consequently magnifying issues such as stragglers and communication overhead. In this paper, we introduce adaptive model pruning to wireless TT-Fed systems and study the problem of jointly optimizing the pruning ratio and bandwidth allocation to minimize the training loss while ensuring minimal learning latency. To solve this problem, we perform convergence analysis on the gradient $l_2$-norm of the TT-Fed model based on model pruning. Based on the obtained convergence upper bound, a joint optimization problem of pruning ratio and wireless bandwidth is formulated to minimize the model training loss under a given delay threshold. Then, we derive closed-form solutions for wireless bandwidth and pruning ratio using Karush-Kuhn-Tucker (KKT) conditions. The simulation results show that model pruning could reduce the communication cost by 40% while maintaining the model performance at the same level.

PWC-MoE: Privacy-Aware Wireless Collaborative Mixture of Experts

May 13, 2025

Abstract:Large language models (LLMs) hosted on cloud servers alleviate the computational and storage burdens on local devices but raise privacy concerns due to sensitive data transmission and require substantial communication bandwidth, which is challenging in constrained environments. In contrast, small language models (SLMs) running locally enhance privacy but suffer from limited performance on complex tasks. To balance computational cost, performance, and privacy protection under bandwidth constraints, we propose a privacy-aware wireless collaborative mixture of experts (PWC-MoE) framework. Specifically, PWC-MoE employs a sparse privacy-aware gating network to dynamically route sensitive tokens to privacy experts located on local clients, while non-sensitive tokens are routed to non-privacy experts located at the remote base station. To achieve computational efficiency, the gating network ensures that each token is dynamically routed to and processed by only one expert. To enhance scalability and prevent overloading of specific experts, we introduce a group-wise load-balancing mechanism for the gating network that evenly distributes sensitive tokens among privacy experts and non-sensitive tokens among non-privacy experts. To adapt to bandwidth constraints while preserving model performance, we propose a bandwidth-adaptive and importance-aware token offloading scheme. This scheme incorporates an importance predictor to evaluate the importance scores of non-sensitive tokens, prioritizing the most important tokens for transmission to the base station based on their predicted importance and the available bandwidth. Experiments demonstrate that the PWC-MoE framework effectively preserves privacy and maintains high performance even in bandwidth-constrained environments, offering a practical solution for deploying LLMs in privacy-sensitive and bandwidth-limited scenarios.
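The bandwidth-adaptive, importance-aware offloading described above can be sketched as a simple budgeted selection. This is an illustration under assumptions, not the paper's scheme: the function name `plan_offloading`, the fixed `bits_per_token` cost, and the way the budget is converted into a token count are all hypothetical. The abstract does not say what happens to non-sensitive tokens that do not fit in the budget, so the sketch only reports them as `deferred`.

```python
def plan_offloading(sensitive, importance, bits_per_token, budget_bits):
    """Split token indices into local, offloaded, and deferred groups.

    sensitive:  list of bool, True if the token must stay on the client.
    importance: list of float, predicted importance score per token.
    """
    # Number of tokens that fit into the available bandwidth budget.
    max_tokens = budget_bits // bits_per_token

    # Sensitive tokens are never transmitted.
    local = [i for i, s in enumerate(sensitive) if s]

    # Rank non-sensitive tokens by predicted importance, highest first.
    candidates = [i for i, s in enumerate(sensitive) if not s]
    candidates.sort(key=lambda i: importance[i], reverse=True)

    offload = sorted(candidates[:max_tokens])
    deferred = sorted(candidates[max_tokens:])
    return local, offload, deferred


# Hypothetical example: six tokens, budget for two transmissions.
sensitive = [True, False, False, False, False, True]
importance = [0.9, 0.2, 0.8, 0.5, 0.7, 0.1]

local, offload, deferred = plan_offloading(
    sensitive, importance, bits_per_token=1024, budget_bits=2500
)
```

With these inputs the two highest-scoring non-sensitive tokens (indices 2 and 4) are offloaded, while tokens 0 and 5 stay local regardless of their scores.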

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

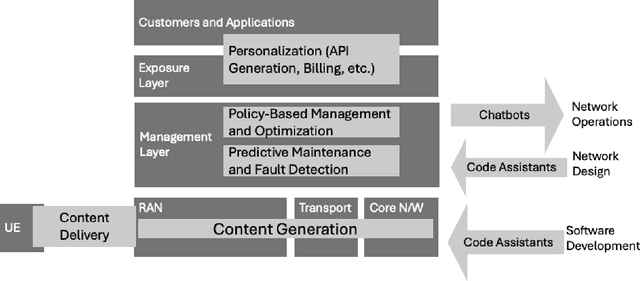

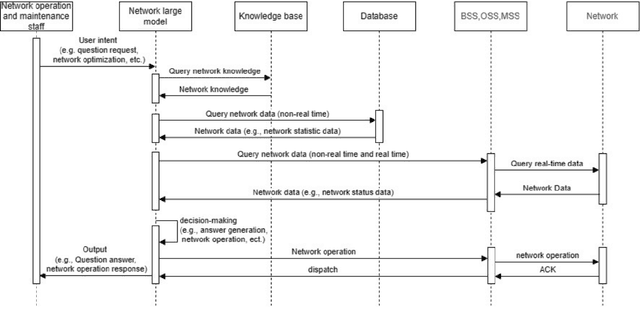

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

When xURLLC Meets NOMA: A Stochastic Network Calculus Perspective

Jan 11, 2025

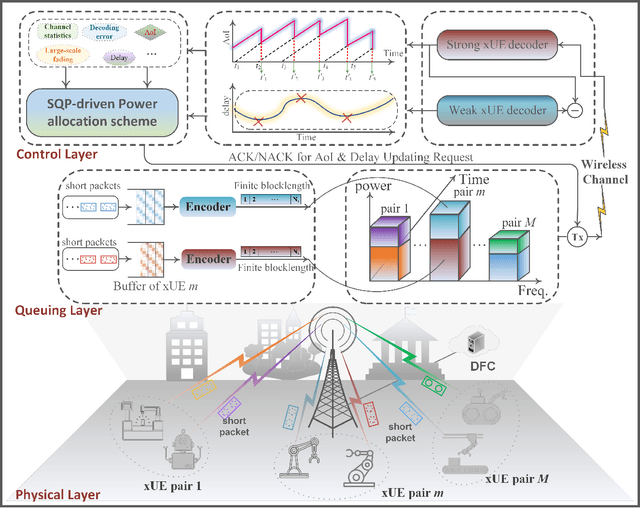

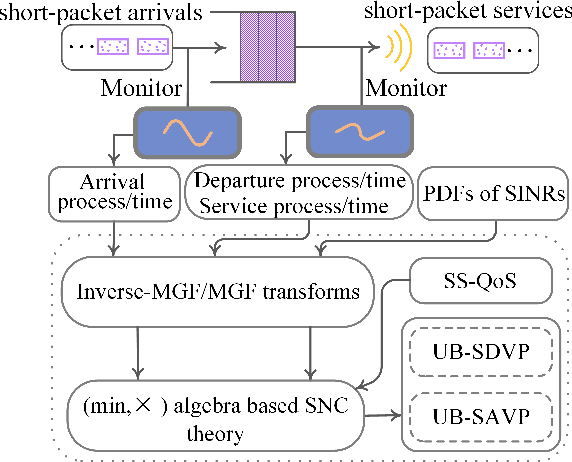

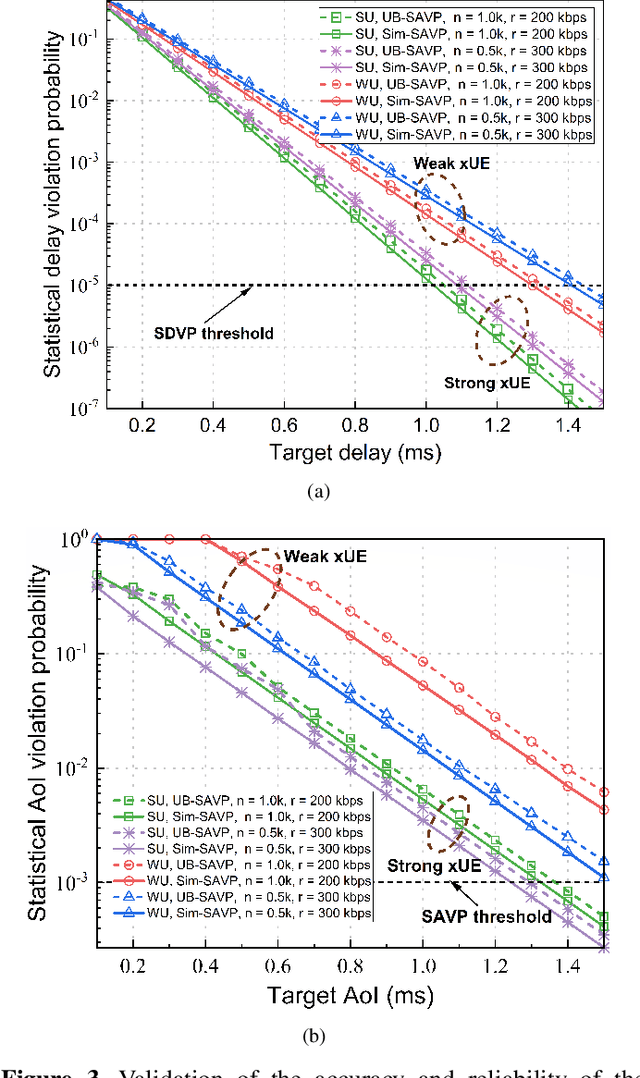

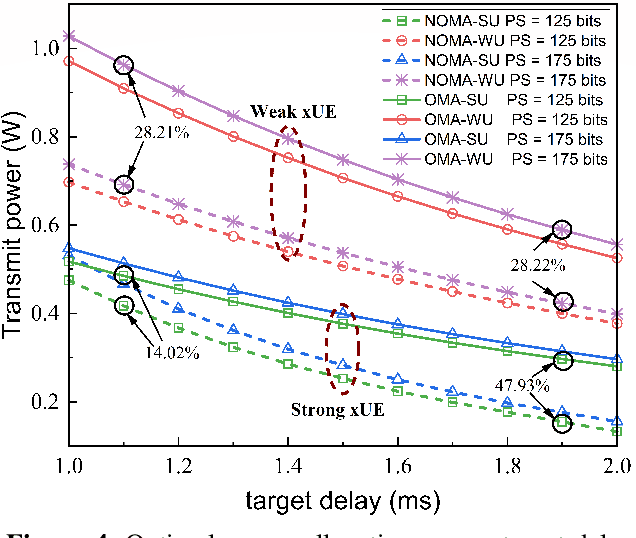

Abstract:The advent of next-generation ultra-reliable and low-latency communications (xURLLC) presents stringent and unprecedented requirements for key performance indicators (KPIs). As a disruptive technology, non-orthogonal multiple access (NOMA) harbors the potential to fulfill these stringent KPIs essential for xURLLC. However, the immaturity of research on the tail distributions of these KPIs significantly impedes the application of NOMA to xURLLC. Stochastic network calculus (SNC), as a potent methodology, is leveraged to provide dependable theoretical insights into tail distribution analysis and statistical QoS provisioning (SQP). In this article, we develop a NOMA-assisted uplink xURLLC network architecture that incorporates an SNC-based SQP theoretical framework (SNC-SQP) to support tail distribution analysis in terms of delay, age-of-information (AoI), and reliability. Based on SNC-SQP, an SQP-driven power optimization problem is proposed to minimize transmit power while guaranteeing xURLLC's KPIs on delay, AoI, reliability, and power consumption. Extensive simulations validate our proposed theoretical framework and demonstrate that the proposed power allocation scheme significantly reduces uplink transmit power and outperforms conventional schemes in terms of SQP performance.

Federated Fine-Tuning of LLMs: Framework Comparison and Research Directions

Jan 08, 2025

Abstract:Federated learning (FL) provides a privacy-preserving solution for fine-tuning pre-trained large language models (LLMs) using distributed private datasets, enabling task-specific adaptation while preserving data privacy. However, fine-tuning the extensive parameters in LLMs is particularly challenging in resource-constrained federated scenarios due to the significant communication and computational costs. To gain a deeper understanding of how these challenges can be addressed, this article conducts a comparative analysis of three advanced federated LLM (FedLLM) frameworks that integrate knowledge distillation (KD) and split learning (SL) to mitigate these issues: 1) FedLLMs, where clients upload model parameters or gradients to enable straightforward and effective fine-tuning; 2) KD-FedLLMs, which leverage KD for efficient knowledge sharing via logits; and 3) Split-FedLLMs, which split the LLMs into two parts, with one part executed on the client and the other one on the server, to balance the computational load. Each framework is evaluated based on key performance metrics, including model accuracy, communication overhead, and client-side computational load, offering insights into their effectiveness for various federated fine-tuning scenarios. Through this analysis, we identify framework-specific optimization opportunities to enhance the efficiency of FedLLMs and discuss broader research directions, highlighting open opportunities to better adapt FedLLMs for real-world applications. A use case is presented to demonstrate the performance comparison of these three frameworks under varying configurations and settings.

Goal-oriented Semantic Communications for Metaverse Construction via Generative AI and Optimal Transport

Nov 25, 2024

Abstract:The emergence of the metaverse has boosted productivity and creativity, driving real-time updates and personalized content, which will substantially increase data traffic. However, current bit-oriented communication networks struggle to manage this high volume of dynamic information, restricting the interactivity of metaverse applications. To address this research gap, we propose a goal-oriented semantic communication (GSC) framework for the metaverse. Building on an existing metaverse wireless construction task, our proposed GSC framework includes an hourglass network-based (HgNet) encoder to extract semantic information of objects in the metaverse, and a semantic decoder that uses this extracted information to reconstruct the metaverse content after wireless transmission, enabling efficient communication and real-time object behaviour updates to the scenery for the metaverse construction task. To overcome the wireless channel noise at the receiver, we design an optimal transport (OT)-enabled semantic denoiser, which enhances the accuracy of metaverse scenery through wireless communication. Experimental results show that compared to the conventional metaverse construction, our proposed GSC framework significantly reduces wireless metaverse construction latency by 92.6%, while improving metaverse object status accuracy and viewing experience by 45.6% and 44.7%, respectively.

Federated LLMs Fine-tuned with Adaptive Importance-Aware LoRA

Nov 10, 2024

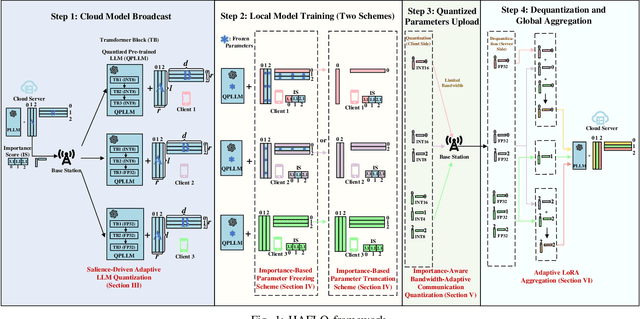

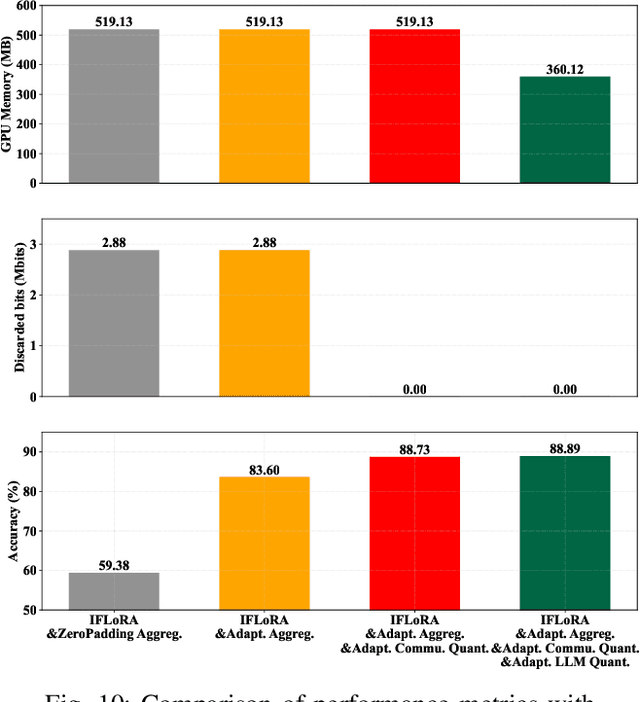

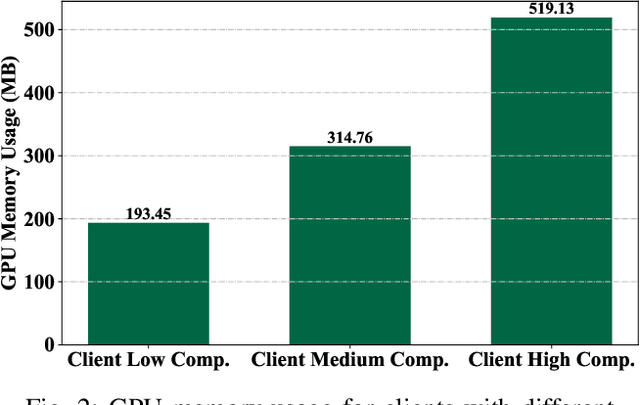

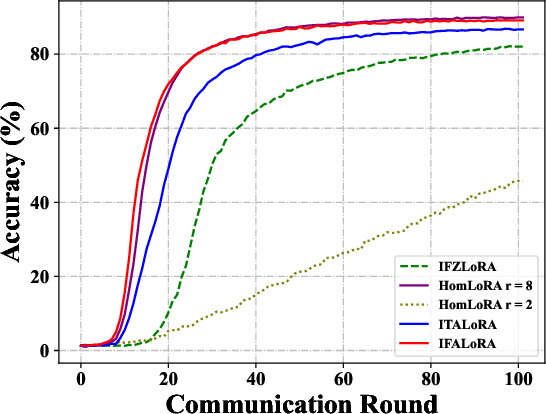

Abstract:Federated fine-tuning of pre-trained Large Language Models (LLMs) enables task-specific adaptation across diverse datasets while preserving data privacy. However, the large model size and heterogeneity in client resources pose significant computational and communication challenges. To address these issues, in this paper, we propose a novel Heterogeneous Adaptive Federated Low-Rank Adaptation (LoRA) fine-tuned LLM framework (HAFL). To accommodate client resource heterogeneity, we first introduce an importance-based parameter truncation scheme, which allows clients to have different LoRA ranks, and smoothed sensitivity scores are used as importance indicators. Despite its flexibility, the truncation process may cause performance degradation. To tackle this problem, we develop an importance-based parameter freezing scheme. In this approach, both the cloud server and clients maintain the same LoRA rank, while clients selectively update only the most important decomposed LoRA rank-1 matrices, keeping the rest frozen. To mitigate the information dilution caused by the zero-padding aggregation method, we propose an adaptive aggregation approach that operates at the decomposed rank-1 matrix level. Experiments on the 20 News Group classification task show that our method converges quickly with low communication size, and avoids performance degradation when distributing models to clients compared to the truncation-based heterogeneous LoRA rank scheme. Additionally, our adaptive aggregation method achieves faster convergence compared to the zero-padding approach.
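The information dilution attributed to zero-padding aggregation can be seen in a toy example. The sketch below is an assumption-laden illustration, not HAFL's algorithm: each rank-1 LoRA component is stood in for by a single scalar, and `per_component_average` is a hypothetical rule that averages a component only over the clients that actually hold it. The paper's adaptive aggregation may weight components differently.

```python
def zero_pad_average(client_updates, max_rank):
    """Pad shorter clients with zeros up to max_rank, then average over all clients."""
    n_clients = len(client_updates)
    return [
        sum(u[k] if k < len(u) else 0.0 for u in client_updates) / n_clients
        for k in range(max_rank)
    ]


def per_component_average(client_updates, max_rank):
    """Average each rank-1 component only over clients that hold it (assumed rule)."""
    averaged = []
    for k in range(max_rank):
        values = [u[k] for u in client_updates if k < len(u)]
        averaged.append(sum(values) / len(values) if values else 0.0)
    return averaged


# Three hypothetical clients with LoRA ranks 4, 2, and 1.
# Every component carries the same update magnitude (1.0) for clarity.
clients = [
    [1.0, 1.0, 1.0, 1.0],
    [1.0, 1.0],
    [1.0],
]

padded = zero_pad_average(clients, max_rank=4)
component_wise = per_component_average(clients, max_rank=4)
```

Under zero-padding, the components held only by the rank-4 client are averaged with zeros from the other two clients and shrink to a third of their value, whereas the per-component rule leaves them at 1.0.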

Joint Model Pruning and Resource Allocation for Wireless Time-triggered Federated Learning

Aug 03, 2024

Abstract:Time-triggered federated learning, in contrast to conventional event-based federated learning, organizes users into tiers based on fixed time intervals. However, this network still faces challenges due to a growing number of devices and limited wireless bandwidth, increasing issues like stragglers and communication overhead. In this paper, we apply model pruning to wireless time-triggered systems and study the problem of jointly optimizing the pruning ratio and bandwidth allocation to minimize training loss under communication latency constraints. To solve this joint optimization problem, we perform a convergence analysis on the gradient $l_2$-norm of the asynchronous multi-tier federated learning (FL) model with adaptive model pruning. The convergence upper bound is derived and a joint optimization problem of pruning ratio and wireless bandwidth is defined to minimize the model training loss under a given communication latency constraint. Closed-form solutions for the wireless bandwidth and pruning ratio are then derived using the Karush-Kuhn-Tucker (KKT) conditions. Simulation experiments show that our proposed TT-Prune achieves a 40% reduction in communication cost, compared with the asynchronous multi-tier FL without model pruning, while maintaining the model convergence at the same level.

Goal-Oriented Semantic Communication for Wireless Image Transmission via Stable Diffusion

Aug 01, 2024

Abstract:Efficient image transmission is essential for seamless communication and collaboration within the visually-driven digital landscape. To achieve low latency and high-quality image reconstruction over a bandwidth-constrained noisy wireless channel, we propose a stable diffusion (SD)-based goal-oriented semantic communication (GSC) framework. In this framework, we design a semantic autoencoder that effectively extracts semantic information from images to reduce the transmission data size while ensuring high-quality reconstruction. Recognizing the impact of wireless channel noise on semantic information transmission, we propose an SD-based denoiser for GSC (SD-GSC) conditional on instantaneous channel gain to remove the channel noise from the received noisy semantic information under a known channel. For scenarios with an unknown channel, we further propose a parallel SD denoiser for GSC (PSD-GSC) to jointly learn the distribution of channel gains and denoise the received semantic information. Experimental results show that SD-GSC outperforms state-of-the-art ADJSCC and Latent-Diff DNSC, with the Peak Signal-to-Noise Ratio (PSNR) improvement by 7 dB and 5 dB, and the Fréchet Inception Distance (FID) reduction by 16 and 20, respectively. Additionally, PSD-GSC achieves PSNR improvement of 2 dB and FID reduction of 6 compared to MMSE equalizer-enhanced SD-GSC.
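To put the reported PSNR gains in perspective, the standard definition PSNR = 10 log10(MAX^2 / MSE) can be used to translate a dB improvement into a reduction in mean squared error. The sketch below assumes 8-bit images (peak value 255); the function names are hypothetical and the paper's evaluation setup is not reproduced here.

```python
import math


def psnr_db(mse, max_value=255.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    return 10.0 * math.log10(max_value ** 2 / mse)


def mse_reduction_factor(gain_db):
    """Factor by which MSE shrinks for a given PSNR gain in dB."""
    return 10.0 ** (gain_db / 10.0)


# A 7 dB gain corresponds to roughly a 5x lower MSE, a 5 dB gain to roughly 3.2x.
factor_7db = mse_reduction_factor(7.0)
factor_5db = mse_reduction_factor(5.0)

# Consistency check on an arbitrary baseline MSE of 100.
baseline_mse = 100.0
gain = psnr_db(baseline_mse / factor_7db) - psnr_db(baseline_mse)
```

The relationship is independent of the baseline MSE and of the peak value, since both cancel when two PSNR values are subtracted.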
