Na Yan

PWC-MoE: Privacy-Aware Wireless Collaborative Mixture of Experts

May 13, 2025

Abstract:Large language models (LLMs) hosted on cloud servers alleviate the computational and storage burdens on local devices but raise privacy concerns due to sensitive data transmission and require substantial communication bandwidth, which is challenging in constrained environments. In contrast, small language models (SLMs) running locally enhance privacy but suffer from limited performance on complex tasks. To balance computational cost, performance, and privacy protection under bandwidth constraints, we propose a privacy-aware wireless collaborative mixture of experts (PWC-MoE) framework. Specifically, PWC-MoE employs a sparse privacy-aware gating network to dynamically route sensitive tokens to privacy experts located on local clients, while non-sensitive tokens are routed to non-privacy experts located at the remote base station. To achieve computational efficiency, the gating network ensures that each token is dynamically routed to and processed by only one expert. To enhance scalability and prevent overloading of specific experts, we introduce a group-wise load-balancing mechanism for the gating network that evenly distributes sensitive tokens among privacy experts and non-sensitive tokens among non-privacy experts. To adapt to bandwidth constraints while preserving model performance, we propose a bandwidth-adaptive and importance-aware token offloading scheme. This scheme incorporates an importance predictor to evaluate the importance scores of non-sensitive tokens, prioritizing the most important tokens for transmission to the base station based on their predicted importance and the available bandwidth. Experiments demonstrate that the PWC-MoE framework effectively preserves privacy and maintains high performance even in bandwidth-constrained environments, offering a practical solution for deploying LLMs in privacy-sensitive and bandwidth-limited scenarios.
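
The routing and offloading ideas in this abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the `Token` fields, the expert counts, and the function names `route_top1` and `select_for_offload` are all hypothetical, and the sensitivity flags, gate scores, and importance scores are hard-coded stand-ins for what a trained gating network and importance predictor would produce.

```python
from dataclasses import dataclass
from typing import List

NUM_PRIVACY_EXPERTS = 2  # hypothetical: experts 0..1 run on the local client
NUM_EXPERTS = 4          # hypothetical: experts 2..3 run at the base station


@dataclass
class Token:
    text: str
    sensitive: bool           # assumed to come from the privacy-aware gate
    importance: float         # assumed output of the importance predictor
    gate_scores: List[float]  # one score per expert, privacy experts first


def route_top1(token: Token) -> int:
    """Return the single best-scoring expert inside the token's own group."""
    if token.sensitive:
        candidates = range(NUM_PRIVACY_EXPERTS)
    else:
        candidates = range(NUM_PRIVACY_EXPERTS, NUM_EXPERTS)
    return max(candidates, key=lambda e: token.gate_scores[e])


def select_for_offload(tokens: List[Token], budget: int) -> List[Token]:
    """Keep the `budget` most important non-sensitive tokens for transmission."""
    non_sensitive = [t for t in tokens if not t.sensitive]
    ranked = sorted(non_sensitive, key=lambda t: t.importance, reverse=True)
    return ranked[:budget]


tokens = [
    Token("Alice", True, 0.9, [0.1, 0.6, 0.2, 0.1]),
    Token("visited", False, 0.7, [0.4, 0.1, 0.2, 0.3]),
    Token("the", False, 0.1, [0.1, 0.1, 0.5, 0.3]),
    Token("clinic", False, 0.8, [0.5, 0.2, 0.1, 0.2]),
]

experts = [route_top1(t) for t in tokens]
offloaded = [t.text for t in select_for_offload(tokens, budget=2)]
```

In this sketch a non-sensitive token never reaches a privacy expert even when that expert has the highest raw score, and a sensitive token is never considered for transmission. How the framework handles non-sensitive tokens that exceed the bandwidth budget is not stated in the abstract, so the sketch simply leaves them out of the offloaded set.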

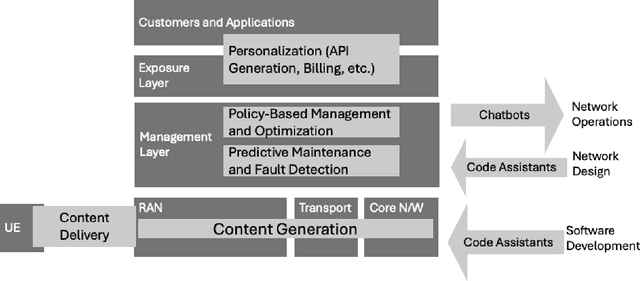

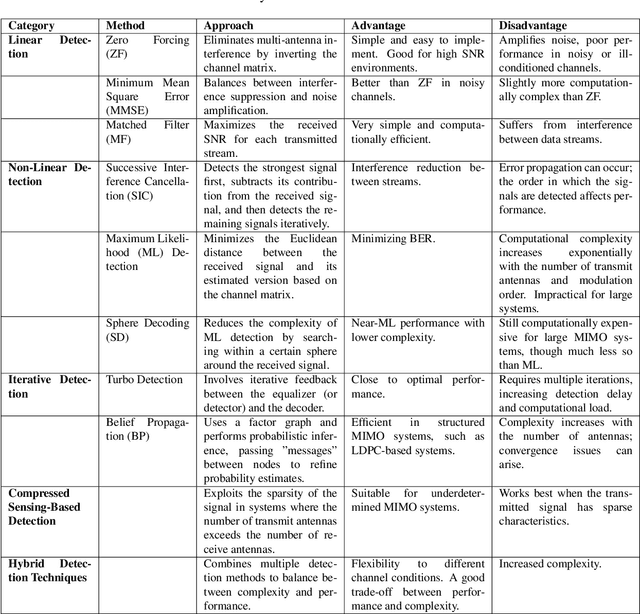

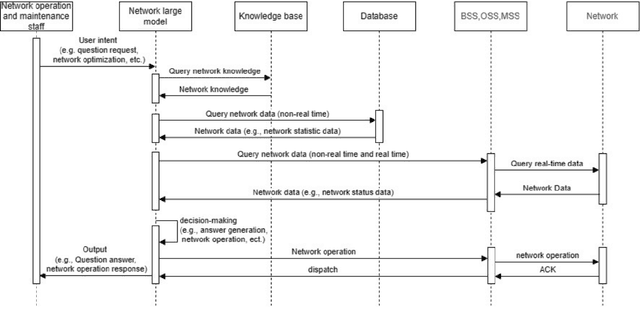

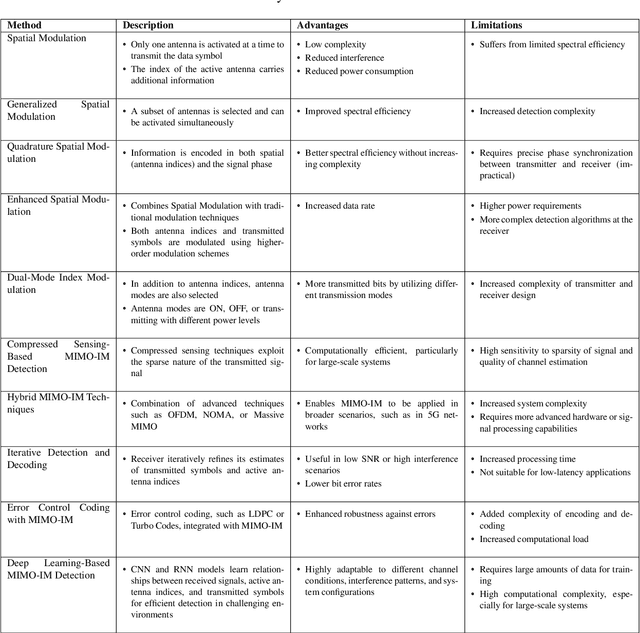

Large-Scale AI in Telecom: Charting the Roadmap for Innovation, Scalability, and Enhanced Digital Experiences

Mar 06, 2025

Abstract:This white paper discusses the role of large-scale AI in the telecommunications industry, with a specific focus on the potential of generative AI to revolutionize network functions and user experiences, especially in the context of 6G systems. It highlights the development and deployment of Large Telecom Models (LTMs), which are tailored AI models designed to address the complex challenges faced by modern telecom networks. The paper covers a wide range of topics, from the architecture and deployment strategies of LTMs to their applications in network management, resource allocation, and optimization. It also explores the regulatory, ethical, and standardization considerations for LTMs, offering insights into their future integration into telecom infrastructure. The goal is to provide a comprehensive roadmap for the adoption of LTMs to enhance scalability, performance, and user-centric innovation in telecom networks.

Federated Fine-Tuning of LLMs: Framework Comparison and Research Directions

Jan 08, 2025

Abstract:Federated learning (FL) provides a privacy-preserving solution for fine-tuning pre-trained large language models (LLMs) using distributed private datasets, enabling task-specific adaptation while preserving data privacy. However, fine-tuning the extensive parameters in LLMs is particularly challenging in resource-constrained federated scenarios due to the significant communication and computational costs. To gain a deeper understanding of how these challenges can be addressed, this article conducts a comparative analysis of three advanced federated LLM (FedLLM) frameworks that integrate knowledge distillation (KD) and split learning (SL) to mitigate these issues: 1) FedLLMs, where clients upload model parameters or gradients to enable straightforward and effective fine-tuning; 2) KD-FedLLMs, which leverage KD for efficient knowledge sharing via logits; and 3) Split-FedLLMs, which split the LLMs into two parts, with one part executed on the client and the other one on the server, to balance the computational load. Each framework is evaluated based on key performance metrics, including model accuracy, communication overhead, and client-side computational load, offering insights into their effectiveness for various federated fine-tuning scenarios. Through this analysis, we identify framework-specific optimization opportunities to enhance the efficiency of FedLLMs and discuss broader research directions, highlighting open opportunities to better adapt FedLLMs for real-world applications. A use case is presented to demonstrate the performance comparison of these three frameworks under varying configurations and settings.

Federated LLMs Fine-tuned with Adaptive Importance-Aware LoRA

Nov 10, 2024

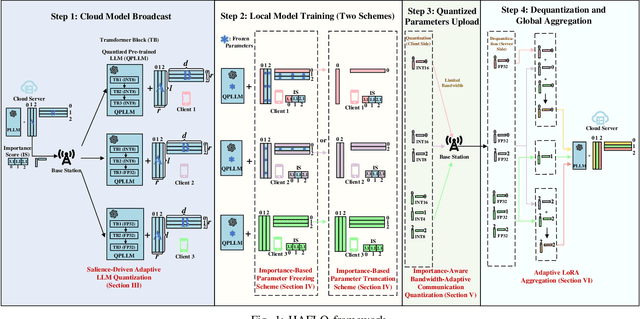

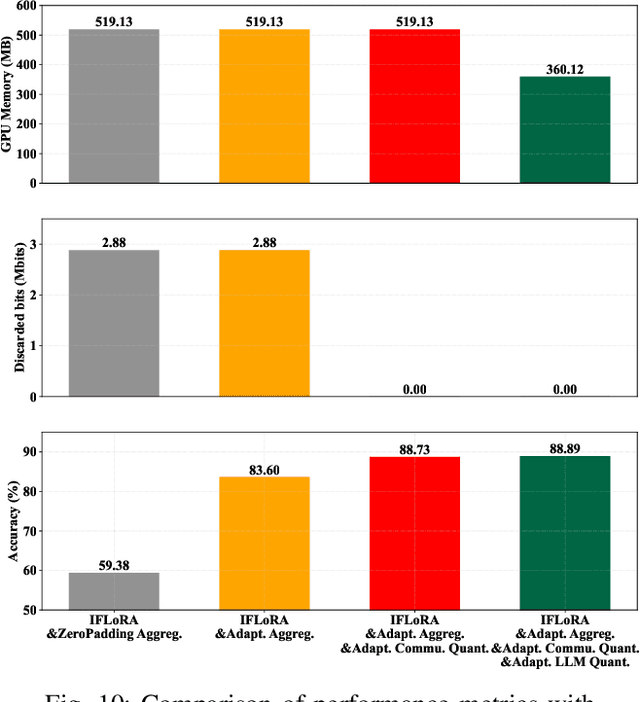

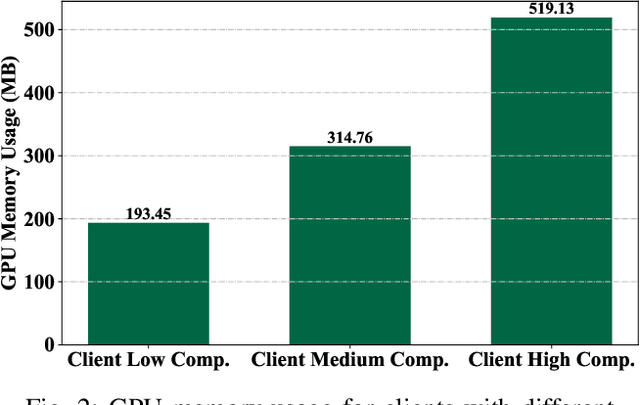

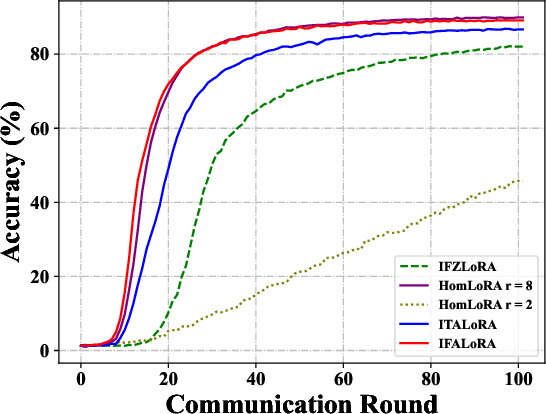

Abstract:Federated fine-tuning of pre-trained Large Language Models (LLMs) enables task-specific adaptation across diverse datasets while preserving data privacy. However, the large model size and heterogeneity in client resources pose significant computational and communication challenges. To address these issues, in this paper, we propose a novel Heterogeneous Adaptive Federated Low-Rank Adaptation (LoRA) fine-tuned LLM framework (HAFL). To accommodate client resource heterogeneity, we first introduce an importance-based parameter truncation scheme, which allows clients to have different LoRA ranks, and smoothed sensitivity scores are used as importance indicators. Despite its flexibility, the truncation process may cause performance degradation. To tackle this problem, we develop an importance-based parameter freezing scheme. In this approach, both the cloud server and clients maintain the same LoRA rank, while clients selectively update only the most important decomposed LoRA rank-1 matrices, keeping the rest frozen. To mitigate the information dilution caused by the zero-padding aggregation method, we propose an adaptive aggregation approach that operates at the decomposed rank-1 matrix level. Experiments on the 20 Newsgroups classification task show that our method converges quickly with a small communication size and avoids performance degradation when distributing models to clients, compared to the truncation-based heterogeneous LoRA rank scheme. Additionally, our adaptive aggregation method achieves faster convergence compared to the zero-padding approach.
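
The "information dilution" that this abstract attributes to zero-padding aggregation can be seen in a toy Python sketch. The sketch rests on strong assumptions: each rank-1 component is reduced to a single float, the function names are hypothetical, and the per-component average shown is only one plausible reading of aggregating "at the decomposed rank-1 matrix level", not the paper's actual aggregation rule.

```python
from typing import List


def zero_pad_average(client_updates: List[List[float]], rank: int) -> List[float]:
    """Pad every client to `rank` components with zeros, then average over all clients."""
    padded = [u + [0.0] * (rank - len(u)) for u in client_updates]
    return [sum(col) / len(padded) for col in zip(*padded)]


def per_component_average(client_updates: List[List[float]], rank: int) -> List[float]:
    """Average each component only over the clients that actually hold it."""
    result = []
    for i in range(rank):
        contributions = [u[i] for u in client_updates if i < len(u)]
        result.append(sum(contributions) / len(contributions) if contributions else 0.0)
    return result


# Client A holds three rank-1 components, client B holds only the first one.
updates = [[1.0, 1.0, 1.0], [1.0]]

diluted = zero_pad_average(updates, rank=3)
undiluted = per_component_average(updates, rank=3)
```

With zero padding, the components that only client A holds are halved because client B's padded zeros enter the average. Averaging per component keeps them at their original scale.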

Over-the-Air Federated Averaging with Limited Power and Privacy Budgets

May 05, 2023

Abstract:To jointly overcome the communication bottleneck and privacy leakage of wireless federated learning (FL), this paper studies a differentially private over-the-air federated averaging (DP-OTA-FedAvg) system with a limited sum power budget. With DP-OTA-FedAvg, the gradients are aligned by an alignment coefficient and aggregated over the air, and channel noise is employed to protect privacy. We aim to improve the learning performance by jointly designing the device scheduling, alignment coefficient, and the number of aggregation rounds of federated averaging (FedAvg) subject to sum power and privacy constraints. We first present the privacy analysis based on differential privacy (DP) to quantify the impact of the alignment coefficient on privacy preservation in each communication round. Furthermore, to study how the device scheduling, alignment coefficient, and the number of global aggregation rounds affect the learning process, we conduct the convergence analysis of DP-OTA-FedAvg in the cases of convex and non-convex loss functions. Based on these analytical results, we formulate an optimization problem to minimize the optimality gap of the DP-OTA-FedAvg subject to limited sum power and privacy budgets. The problem is solved by decoupling it into two sub-problems. Given the number of communication rounds, we derive the relationship between the number of scheduled devices and the alignment coefficient, which offers a set of potential optimal solution pairs of device scheduling and the alignment coefficient. Thanks to the reduced search space, the optimal solution can be efficiently obtained. The effectiveness of the proposed policy is validated through simulations.

Device Scheduling for Over-the-Air Federated Learning with Differential Privacy

Nov 13, 2022

Abstract:In this paper, we propose a device scheduling scheme for differentially private over-the-air federated learning (DP-OTA-FL) systems, referred to as S-DPOTAFL, where the privacy of the participants is guaranteed by channel noise. In S-DPOTAFL, the gradients are aligned by the alignment coefficient and aggregated via over-the-air computation (AirComp). The scheme schedules the devices with better channel conditions in the training to avoid the problem that the alignment coefficient is limited by the device with the worst channel condition in the system. We conduct the privacy and convergence analysis to theoretically demonstrate the impact of device scheduling on privacy protection and learning performance. To improve the learning accuracy, we formulate an optimization problem that minimizes the training loss subject to privacy and transmit power constraints. Furthermore, we present the condition under which S-DPOTAFL performs better than DP-OTA-FL without device scheduling (NoS-DPOTAFL). The effectiveness of the S-DPOTAFL is validated through simulations.
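
Why the worst channel limits the alignment coefficient can be shown with a small Python sketch under a common AirComp assumption that the abstract does not itself state: device k pre-scales its gradient by c / h_k, each gradient norm is clipped to `clip_norm`, and each device has the same per-device power limit `max_power`. All function and parameter names here are hypothetical.

```python
import math
from typing import List


def alignment_coefficient(channel_gains: List[float], max_power: float,
                          clip_norm: float) -> float:
    """Largest c such that every device k can send (c / h_k) * g_k with
    ||g_k|| <= clip_norm while keeping transmit power <= max_power."""
    weakest = min(abs(h) for h in channel_gains)
    return weakest * math.sqrt(max_power) / clip_norm


def schedule_best(channel_gains: List[float], num_scheduled: int) -> List[float]:
    """Keep the `num_scheduled` devices with the largest channel magnitude."""
    return sorted(channel_gains, key=abs, reverse=True)[:num_scheduled]


gains = [0.1, 0.8, 1.2, 0.5]  # illustrative channel magnitudes

c_all = alignment_coefficient(gains, max_power=1.0, clip_norm=1.0)
c_scheduled = alignment_coefficient(schedule_best(gains, 3),
                                    max_power=1.0, clip_norm=1.0)
```

Dropping the single weakest device raises the coefficient from the level set by gain 0.1 to the level set by gain 0.5. Under this model a larger coefficient also strengthens the received signal relative to the channel noise, and fewer devices contribute gradients, which is consistent with the abstract treating scheduling together with privacy and convergence.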

Toward Secure and Private Over-the-Air Federated Learning

Oct 14, 2022

Abstract:In this paper, a novel secure and private over-the-air federated learning (SP-OTA-FL) framework is studied where noise is employed to protect data privacy and system security. Specifically, the privacy leakage of user data and the security level of the system are measured by differential privacy (DP) and mean square error security (MSE-security), respectively. To mitigate the impact of noise on learning accuracy, we propose a channel-weighted post-processing (CWPP) mechanism, which assigns a smaller weight to the gradient of the device with poor channel conditions. Furthermore, employing CWPP can avoid the issue that the signal-to-noise ratio (SNR) of the overall system is limited by the device with the worst channel condition in aligned over-the-air federated learning (OTA-FL). We theoretically analyze the effect of noise on privacy and security protection and also illustrate the adverse impact of noise on learning performance by conducting convergence analysis. Based on these analytical results, we propose device scheduling policies considering privacy and security protection in different cases of channel noise. In particular, we formulate an integer nonlinear fractional programming problem aiming to minimize the negative impact of noise on the learning process. We obtain the closed-form solution to the optimization problem when the model is high-dimensional. For the general case, we propose a secure and private algorithm (SPA) based on the branch-and-bound (BnB) method, which can obtain an optimal solution with low complexity. The effectiveness of the proposed CWPP mechanism and the policies for device selection are validated through simulations.
