Chenwu Zhang

Enhancing xURLLC with RSMA-Assisted Massive-MIMO Networks: Performance Analysis and Optimization

Feb 25, 2024

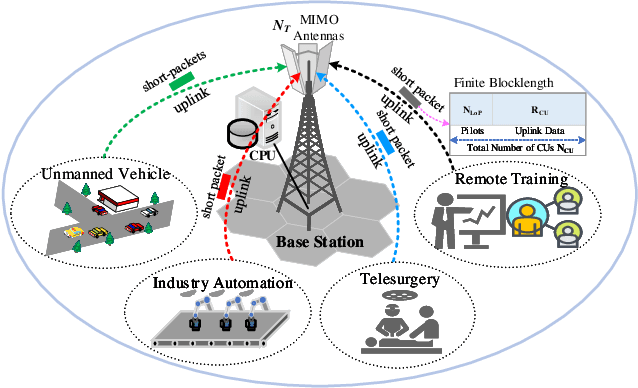

Abstract: Massive interconnection has sparked people's envisioning for next-generation ultra-reliable and low-latency communications (xURLLC), prompting the design of customized next-generation advanced transceivers (NGAT). Rate-splitting multiple access (RSMA) has emerged as a pivotal technology for NGAT design, given its robustness to imperfect channel state information (CSI) and resilience to quality of service (QoS). Additionally, xURLLC urgently appeals to large-scale access techniques, thus massive multiple-input multiple-output (mMIMO) is anticipated to integrate with RSMA to enhance xURLLC. In this paper, we develop an innovative RSMA-assisted massive-MIMO xURLLC (RSMA-mMIMO-xURLLC) network architecture tailored to accommodate xURLLC's critical QoS constraints in finite blocklength (FBL) regimes. Leveraging uplink pilot training under imperfect CSI at the transmitter, we estimate channel gains and customize linear precoders for efficient downlink short-packet data transmission. Subsequently, we formulate a joint rate-splitting, beamforming, and transmit antenna selection optimization problem to maximize the total effective transmission rate (ETR). Addressing this multi-variable coupled non-convex problem, we decompose it into three corresponding subproblems and propose a low-complexity joint iterative algorithm for efficient optimization. Extensive simulations substantiate that compared with non-orthogonal multiple access (NOMA) and space division multiple access (SDMA), the developed architecture improves the total ETR by 15.3% and 41.91%, respectively, as well as accommodates larger-scale access.

Statistical QoS Provisioning Analysis and Performance Optimization in xURLLC-enabled Massive MU-MIMO Networks: A Stochastic Network Calculus Perspective

Mar 10, 2023

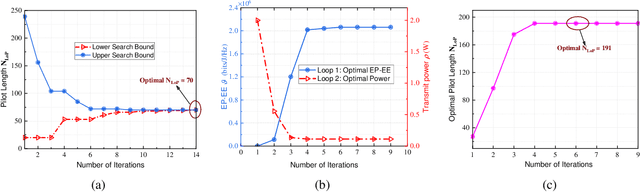

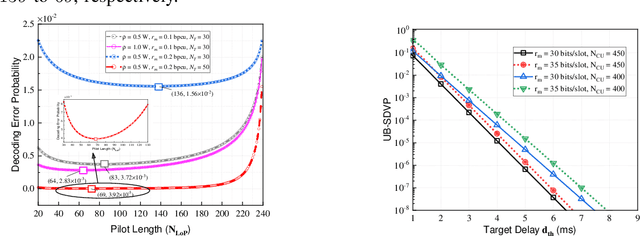

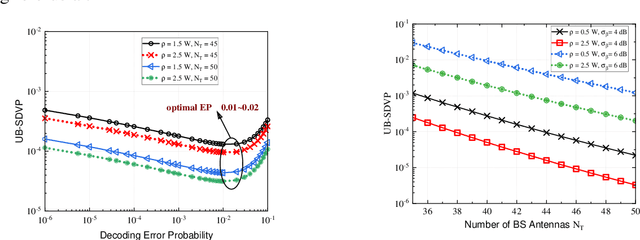

Abstract: In this paper, the fundamentals and performance tradeoffs of neXt-generation ultra-reliable and low-latency communication (xURLLC) are investigated from the perspective of stochastic network calculus (SNC). An xURLLC-enabled massive MU-MIMO system model is developed to accommodate xURLLC features. By leveraging and promoting SNC, we provide a quantitative statistical quality of service (QoS) provisioning analysis and derive the closed-form expression of the upper-bounded statistical delay violation probability (UB-SDVP). Based on the proposed theoretical framework, we formulate the UB-SDVP minimization problem, which is first reduced to a one-dimensional integer-search problem by deriving the minimum error probability (EP) detector, and then efficiently solved by the integer-form Golden-Section search algorithm. Moreover, two novel concepts, EP-based effective capacity (EP-EC) and EP-based energy efficiency (EP-EE), are defined to characterize the tail distributions and performance tradeoffs for xURLLC. Subsequently, we formulate the EP-EC and EP-EE maximization problems and prove that the EP-EC maximization problem is equivalent to the UB-SDVP minimization problem, while the EP-EE maximization problem is solved with a low-complexity outer-descent inner-search collaborative algorithm. Extensive simulations demonstrate the effectiveness of the proposed framework in reducing computational complexity compared to reference schemes, and in providing various tradeoffs and optimization performance of xURLLC concerning UB-SDVP, EP, EP-EC, and EP-EE.
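
The abstract does not spell out the integer-form Golden-Section search, so the following is only a generic sketch of the idea: shrinking an integer interval by the golden ratio to minimize a one-dimensional objective. It assumes the objective is strictly unimodal over the integer range; the function name `integer_golden_section_min` and the toy objective are hypothetical and are not taken from the paper.

```python
import math


def integer_golden_section_min(f, lo, hi):
    """Return an integer in [lo, hi] minimizing f.

    Assumption: f is strictly unimodal on the integers in [lo, hi].
    This is a generic sketch, not the paper's exact algorithm.
    """
    inv_phi = (math.sqrt(5) - 1) / 2  # 1/phi, about 0.618
    cache = {}

    def g(n):
        # Memoize evaluations so each integer point is computed once.
        if n not in cache:
            cache[n] = f(n)
        return cache[n]

    while hi - lo > 2:
        step = int(round(inv_phi * (hi - lo)))
        x1, x2 = hi - step, lo + step
        if x1 >= x2:
            # Rounding collapsed the probes; fall back to distinct interior points.
            x1, x2 = lo + 1, hi - 1
        if g(x1) <= g(x2):
            hi = x2  # the minimizer cannot lie to the right of x2
        else:
            lo = x1  # the minimizer cannot lie to the left of x1
    # At most three candidates remain; pick the best directly.
    return min(range(lo, hi + 1), key=g)


# Toy objective standing in for a delay-violation bound over an integer parameter.
best = integer_golden_section_min(lambda n: (n - 37) ** 2, 1, 100)
```

The number of objective evaluations grows logarithmically with the interval length, which is the usual motivation for this kind of search over an exhaustive scan.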

Joint Optimization of Base Station Clustering and Service Caching in User-Centric MEC

Feb 21, 2023

Abstract: Edge service caching can effectively reduce the delay or bandwidth overhead for acquiring and initializing applications. To address the single-base station (BS) transmission limitation and the serious edge effect in traditional cellular-based edge service caching networks, in this paper, we propose a novel user-centric edge service caching framework where each user is jointly provided with edge caching and wireless transmission services by a specific BS cluster instead of a single BS. To minimize the long-term average delay under the constraint of the caching cost, a mixed integer non-linear programming (MINLP) problem is formulated by jointly optimizing the BS clustering and service caching decisions. To tackle the problem, we propose JO-CDSD, an efficient joint optimization algorithm based on Lyapunov optimization and generalized Benders decomposition (GBD). In particular, the long-term optimization problem can be transformed into a primal problem and a master problem in each time slot, which are much simpler to solve. The near-optimal clustering and caching strategy can be obtained by solving the primal and master problems alternately. Extensive simulations show that the proposed joint optimization algorithm outperforms other algorithms and can effectively reduce the long-term delay by up to 93.75% and the caching cost by up to 53.12%.

IMRecoNet: Learn to Detect in Index Modulation Aided MIMO Systems with Complex Valued Neural Networks

Dec 02, 2021

Abstract: Index modulation (IM) reduces the power consumption and hardware cost of the multiple-input multiple-output (MIMO) system by activating only part of the antennas for data transmission. However, IM significantly increases the complexity of the receiver and needs accurate channel estimation to guarantee its performance. To tackle these challenges, in this paper, we design a deep learning (DL) based detector for IM aided MIMO (IM-MIMO) systems. We first formulate the detection process as a sparse reconstruction problem by utilizing the inherent attributes of IM. Then, based on a greedy strategy, we design a DL based detector, called IMRecoNet, to realize this sparse reconstruction process. Unlike general neural networks, we introduce complex-valued operations to accommodate the complex signals in communication systems. To the best of our knowledge, this is the first attempt to introduce a complex-valued neural network into the design of a detector for IM-MIMO systems. Finally, to verify the adaptability and robustness of the proposed detector, simulations are carried out with consideration of inaccurate channel state information (CSI) and correlated MIMO channels. The simulation results demonstrate that the proposed detector outperforms existing algorithms in terms of antenna recognition accuracy and bit error rate under various scenarios.
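
As a small illustration of what a complex-valued operation means in practice, the sketch below computes one complex dense layer, y = Wx + b, in two ways: directly with Python's built-in complex type, and with real arithmetic on the real and imaginary parts. It is not IMRecoNet's architecture; the function names and the toy weights are assumptions made for illustration only.

```python
def complex_dense(W, b, x):
    """y = W x + b using Python's built-in complex arithmetic."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi
            for row, bi in zip(W, b)]


def complex_dense_real_ops(W, b, x):
    """The same layer expressed with real-valued operations only.

    Uses (a + jb)(c + jd) = (ac - bd) + j(ad + bc), which is how a
    complex layer can be built from real-valued multiply-adds.
    """
    out = []
    for row, bi in zip(W, b):
        re, im = bi.real, bi.imag
        for w, xi in zip(row, x):
            re += w.real * xi.real - w.imag * xi.imag
            im += w.real * xi.imag + w.imag * xi.real
        out.append(complex(re, im))
    return out


# Hypothetical toy weights, bias, and input (not from the paper).
W = [[1 + 2j, 0.5 - 1j], [-1j, 2 + 0j]]
b = [0.1 + 0.2j, -0.3j]
x = [1 - 1j, 2 + 0.5j]

y_complex = complex_dense(W, b, x)
y_real_ops = complex_dense_real_ops(W, b, x)
```

Both functions produce the same output up to floating-point rounding; the second form shows how real and imaginary parts stay coupled through the weights rather than being processed as two independent real channels.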
