Hao Dai

Domain-Specific Knowledge Graphs in RAG-Enhanced Healthcare LLMs

Jan 21, 2026

Abstract: Large Language Models (LLMs) generate fluent answers but can struggle with trustworthy, domain-specific reasoning. We evaluate whether domain knowledge graphs (KGs) improve Retrieval-Augmented Generation (RAG) for healthcare by constructing three PubMed-derived graphs: $\mathbb{G}_1$ (T2DM), $\mathbb{G}_2$ (Alzheimer's disease), and $\mathbb{G}_3$ (AD+T2DM). We design two probes: Probe 1 targets merged AD+T2DM knowledge, while Probe 2 targets the intersection of $\mathbb{G}_1$ and $\mathbb{G}_2$. Seven instruction-tuned LLMs are tested across retrieval sources {No-RAG, $\mathbb{G}_1$, $\mathbb{G}_2$, $\mathbb{G}_1$ + $\mathbb{G}_2$, $\mathbb{G}_3$, $\mathbb{G}_1$ + $\mathbb{G}_2$ + $\mathbb{G}_3$} and three decoding temperatures. Results show that scope alignment between probe and KG is decisive: precise, scope-matched retrieval (notably $\mathbb{G}_2$) yields the most consistent gains, whereas indiscriminate graph unions often introduce distractors that reduce accuracy. Larger models frequently match or exceed KG-RAG with a No-RAG baseline on Probe 1, indicating strong parametric priors, whereas smaller/mid-sized models benefit more from well-scoped retrieval. Temperature plays a secondary role; higher values rarely help. We conclude that precision-first, scope-matched KG-RAG is preferable to breadth-first unions, and we outline practical guidelines for graph selection, model sizing, and retrieval/reranking. Code and data are available at https://github.com/sydneyanuyah/RAGComparison

Parallax: Runtime Parallelization for Operator Fallbacks in Heterogeneous Edge Systems

Dec 12, 2025

Abstract: The growing demand for real-time DNN applications on edge devices necessitates faster inference of increasingly complex models. Although many devices include specialized accelerators (e.g., mobile GPUs), dynamic control-flow operators and unsupported kernels often fall back to CPU execution. Existing frameworks handle these fallbacks poorly, leaving CPU cores idle and causing high latency and memory spikes. We introduce Parallax, a framework that accelerates mobile DNN inference without model refactoring or custom operator implementations. Parallax first partitions the computation DAG to expose parallelism, then employs branch-aware memory management with dedicated arenas and buffer reuse to reduce runtime footprint. An adaptive scheduler executes branches according to device memory constraints, while fine-grained subgraph control enables heterogeneous inference of dynamic models. Evaluated on five representative DNNs across three mobile devices, Parallax achieves up to 46% latency reduction, maintains controlled memory overhead (26.5% on average), and delivers up to 30% energy savings compared with state-of-the-art frameworks, offering improvements aligned with the responsiveness demands of real-time mobile inference.

Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

Oct 21, 2025

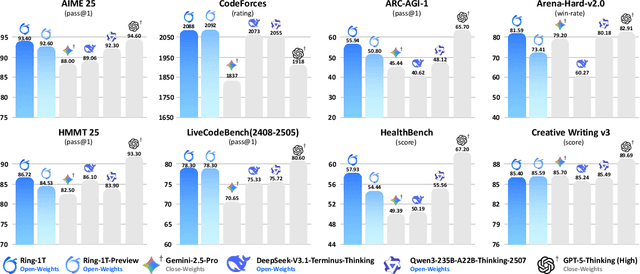

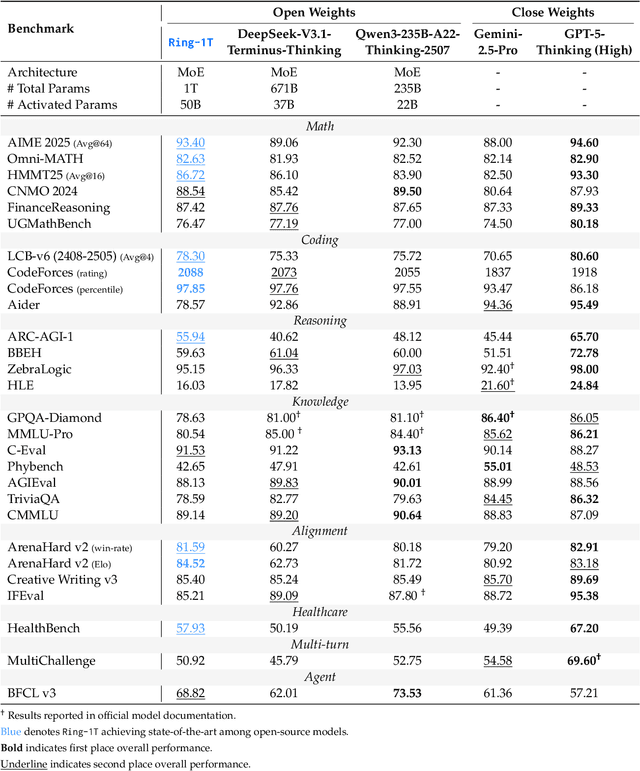

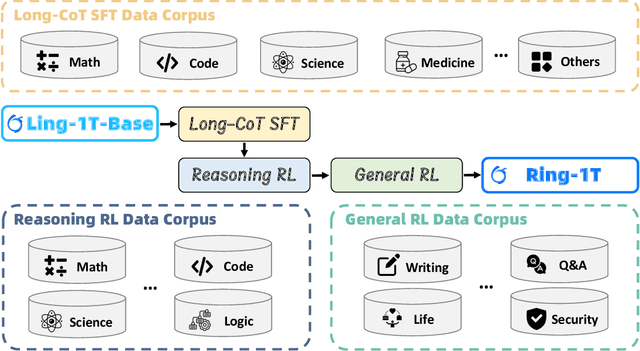

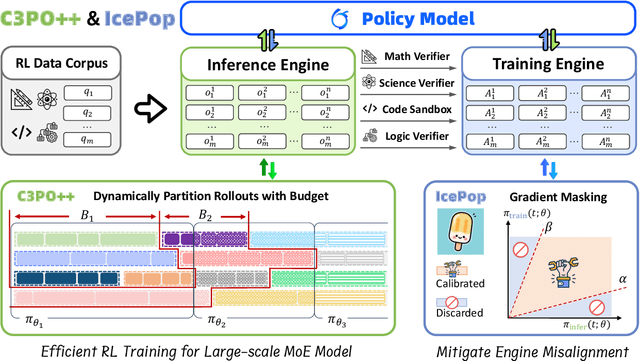

Abstract: We present Ring-1T, the first open-source, state-of-the-art thinking model with trillion-scale parameters. It features 1 trillion total parameters and activates approximately 50 billion per token. Training such models at a trillion-parameter scale introduces unprecedented challenges, including train-inference misalignment, inefficiencies in rollout processing, and bottlenecks in the RL system. To address these, we pioneer three interconnected innovations: (1) IcePop stabilizes RL training via token-level discrepancy masking and clipping, resolving instability from training-inference mismatches; (2) C3PO++ improves resource utilization for long rollouts under a token budget by dynamically partitioning them, thereby obtaining high time efficiency; and (3) ASystem, a high-performance RL framework designed to overcome the systemic bottlenecks that impede trillion-parameter model training. Ring-1T delivers breakthrough results across critical benchmarks: 93.4 on AIME-2025, 86.72 on HMMT-2025, 2088 on CodeForces, and 55.94 on ARC-AGI-v1. Notably, it attains a silver medal-level result on the IMO-2025, underscoring its exceptional reasoning capabilities. By releasing the complete 1T-parameter MoE model, we provide the research community with direct access to cutting-edge reasoning capabilities. This contribution marks a significant milestone in democratizing large-scale reasoning intelligence and establishes a new baseline for open-source model performance.

Continual Generalized Category Discovery: Learning and Forgetting from a Bayesian Perspective

Jul 23, 2025

Abstract: Continual Generalized Category Discovery (C-GCD) faces a critical challenge: incrementally learning new classes from unlabeled data streams while preserving knowledge of old classes. Existing methods struggle with catastrophic forgetting, especially when unlabeled data mixes known and novel categories. We address this by analyzing C-GCD's forgetting dynamics through a Bayesian lens, revealing that covariance misalignment between old and new classes drives performance degradation. Building on this insight, we propose Variational Bayes C-GCD (VB-CGCD), a novel framework that integrates variational inference with covariance-aware nearest-class-mean classification. VB-CGCD adaptively aligns class distributions while suppressing pseudo-label noise via stochastic variational updates. Experiments show VB-CGCD surpasses prior art by +15.21% in overall accuracy in the final session on standard benchmarks. We also introduce a new challenging benchmark with only 10% labeled data and extended online phases, on which VB-CGCD achieves a 67.86% final accuracy, significantly higher than the state of the art (38.55%), demonstrating its robust applicability across diverse scenarios. Code is available at: https://github.com/daihao42/VB-CGCD

ViRN: Variational Inference and Distribution Trilateration for Long-Tailed Continual Representation Learning

Jul 23, 2025

Abstract: Continual learning (CL) with long-tailed data distributions remains a critical challenge for real-world AI systems, where models must sequentially adapt to new classes while retaining knowledge of old ones, despite severe class imbalance. Existing methods struggle to balance stability and plasticity, often collapsing under extreme sample scarcity. To address this, we propose ViRN, a novel CL framework that integrates variational inference (VI) with distributional trilateration for robust long-tailed learning. First, we model class-conditional distributions via a Variational Autoencoder to mitigate bias toward head classes. Second, we reconstruct tail-class distributions via Wasserstein distance-based neighborhood retrieval and geometric fusion, enabling sample-efficient alignment of tail-class representations. Evaluated on six long-tailed classification benchmarks, including speech (e.g., rare acoustic events, accents) and image tasks, ViRN achieves a 10.24% average accuracy gain over state-of-the-art methods.

Ring-lite: Scalable Reasoning via C3PO-Stabilized Reinforcement Learning for LLMs

Jun 18, 2025

Abstract: We present Ring-lite, a Mixture-of-Experts (MoE)-based large language model optimized via reinforcement learning (RL) to achieve efficient and robust reasoning capabilities. Built upon the publicly available Ling-lite model, a 16.8 billion parameter model with 2.75 billion activated parameters, our approach matches the performance of state-of-the-art (SOTA) small-scale reasoning models on challenging benchmarks (e.g., AIME, LiveCodeBench, GPQA-Diamond) while activating only one-third of the parameters required by comparable models. To accomplish this, we introduce a joint training pipeline integrating distillation with RL, revealing undocumented challenges in MoE RL training. First, we identify optimization instability during RL training, and we propose Constrained Contextual Computation Policy Optimization (C3PO), a novel approach that enhances training stability and improves computational throughput via an algorithm-system co-design methodology. Second, we empirically demonstrate that selecting distillation checkpoints based on entropy loss for RL training, rather than validation metrics, yields superior performance-efficiency trade-offs in subsequent RL training. Finally, we develop a two-stage training paradigm to harmonize multi-domain data integration, addressing domain conflicts that arise in training with mixed datasets. We will release the model, dataset, and code.

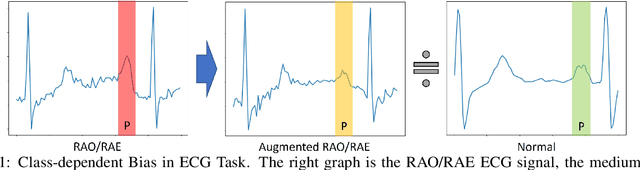

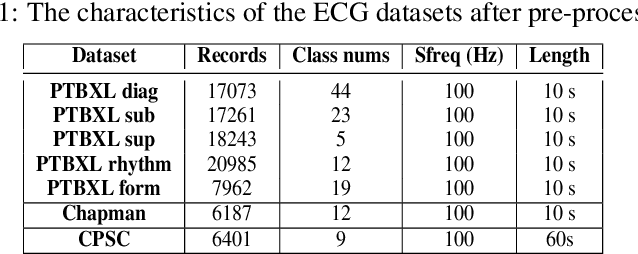

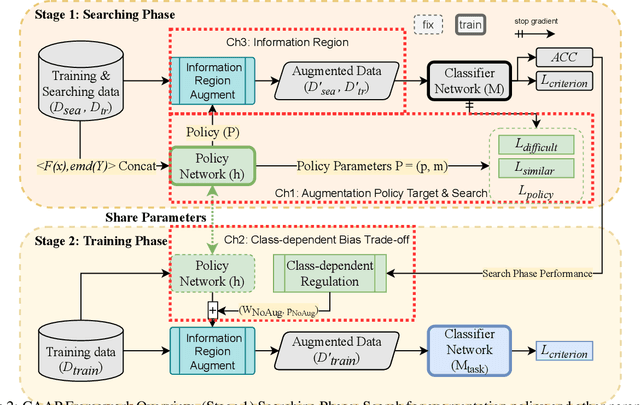

CAAP: Class-Dependent Automatic Data Augmentation Based On Adaptive Policies For Time Series

Apr 01, 2024

Abstract: Data augmentation is a common technique used to enhance the performance of deep learning models by expanding the training dataset. Automatic Data Augmentation (ADA) methods are gaining popularity because of their capacity to generate policies for various datasets. However, existing ADA methods primarily focus on overall performance improvement, neglecting the problem of class-dependent bias that leads to performance reduction in specific classes. This bias poses significant challenges when deploying models in real-world applications. Furthermore, ADA for time series remains an underexplored domain, highlighting the need for advancements in this field. In particular, applying ADA techniques to vital signals like an electrocardiogram (ECG) is a compelling example due to its potential in medical domains such as heart disease diagnostics. We propose a novel deep learning-based approach called the Class-dependent Automatic Adaptive Policies (CAAP) framework to overcome the notable class-dependent bias problem while maintaining the overall improvement in time-series data augmentation. First, we utilize the policy network to generate effective sample-wise policies with balanced difficulty through class and feature information extraction. Second, we design the augmentation probability regulation method to minimize class-dependent bias. Third, we introduce the concept of information regions into the ADA framework to preserve essential regions in the sample. Through a series of experiments on real-world ECG datasets, we demonstrate that CAAP outperforms representative methods in achieving lower class-dependent bias combined with superior overall performance. These results highlight the reliability of CAAP as a promising ADA method for time series modeling that fits the demands of real-world applications.

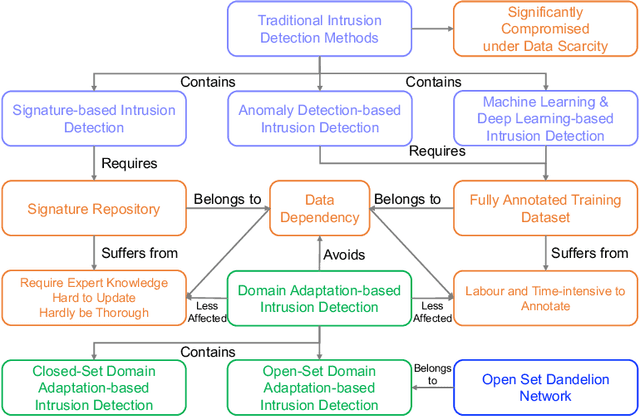

Open Set Dandelion Network for IoT Intrusion Detection

Nov 19, 2023

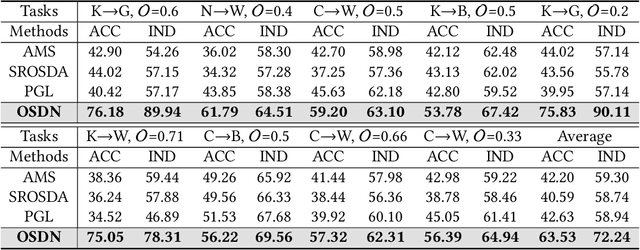

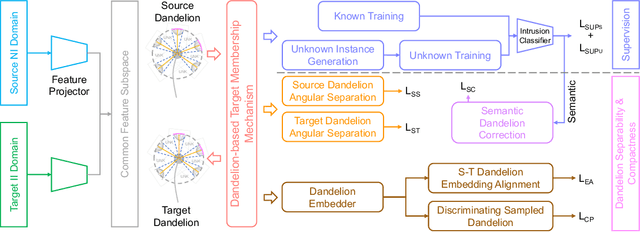

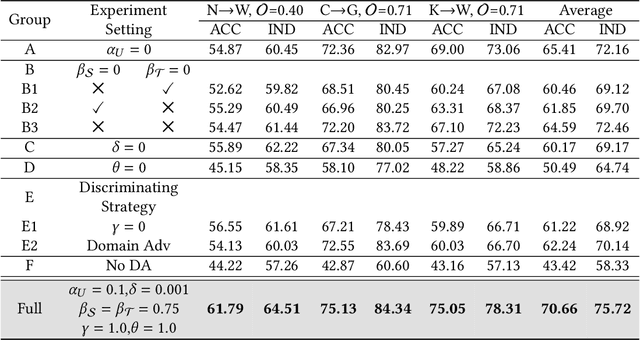

Abstract: As IoT devices become widespread, it is crucial to protect them from malicious intrusions. However, the data scarcity of IoT limits the applicability of traditional intrusion detection methods, which are highly data-dependent. To address this, in this paper we propose the Open-Set Dandelion Network (OSDN) based on unsupervised heterogeneous domain adaptation in an open-set manner. The OSDN model performs intrusion knowledge transfer from the knowledge-rich source network intrusion domain to facilitate more accurate intrusion detection for the data-scarce target IoT intrusion domain. Under the open-set setting, it can also detect newly emerged target domain intrusions that are not observed in the source domain. To achieve this, the OSDN model forms the source domain into a dandelion-like feature space in which each intrusion category is compactly grouped and different intrusion categories are separated, i.e., simultaneously emphasising inter-category separability and intra-category compactness. The dandelion-based target membership mechanism then forms the target dandelion. Then, the dandelion angular separation mechanism achieves better inter-category separability, and the dandelion embedding alignment mechanism further aligns both dandelions in a finer manner. To promote intra-category compactness, the discriminating sampled dandelion mechanism is used. Assisted by the intrusion classifier trained using both known and generated unknown intrusion knowledge, a semantic dandelion correction mechanism emphasises easily confused categories and guides better inter-category separability. Holistically, these mechanisms form the OSDN model that effectively performs intrusion knowledge transfer to benefit IoT intrusion detection. Comprehensive experiments on several intrusion datasets verify the effectiveness of the OSDN model, which outperforms three state-of-the-art baseline methods by 16.9%.

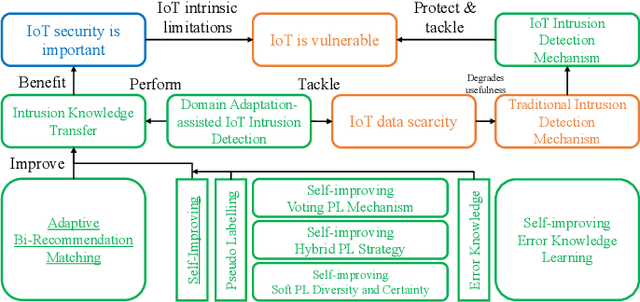

Adaptive Bi-Recommendation and Self-Improving Network for Heterogeneous Domain Adaptation-Assisted IoT Intrusion Detection

Mar 25, 2023

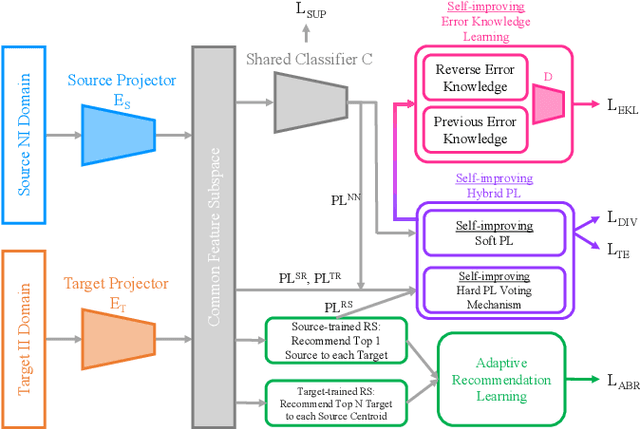

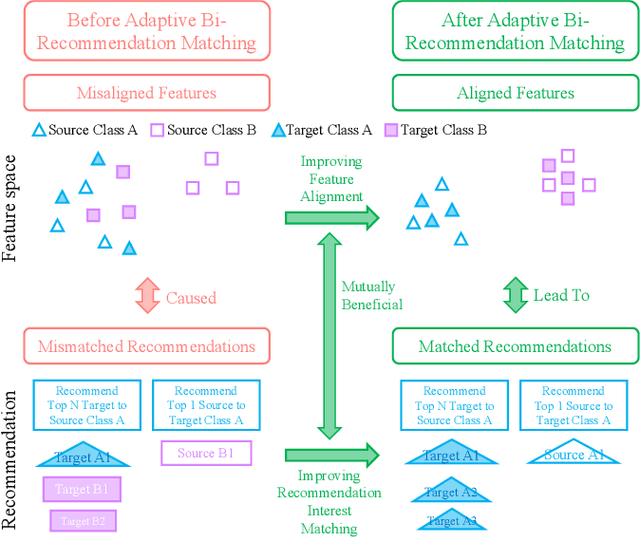

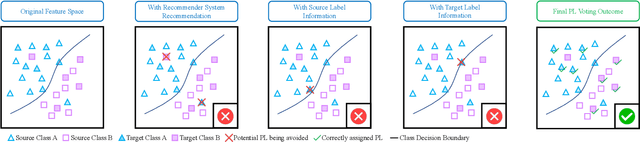

Abstract: As Internet of Things (IoT) devices become prevalent, using intrusion detection to protect IoT from malicious intrusions is of vital importance. However, the data scarcity of IoT hinders the effectiveness of traditional intrusion detection methods. To tackle this issue, in this paper, we propose the Adaptive Bi-Recommendation and Self-Improving Network (ABRSI) based on unsupervised heterogeneous domain adaptation (HDA). The ABRSI transfers enriched intrusion knowledge from a data-rich network intrusion source domain to facilitate effective intrusion detection for data-scarce IoT target domains. The ABRSI achieves fine-grained intrusion knowledge transfer via adaptive bi-recommendation matching. Matching the bi-recommendation interests of two recommender systems and the alignment of intrusion categories in the shared feature space form a mutual-benefit loop. Besides, the ABRSI uses a self-improving mechanism, autonomously improving the intrusion knowledge transfer in four ways. A hard pseudo-label voting mechanism jointly considers recommender system decisions and label relationship information to promote more accurate hard pseudo-label assignment. To promote diversity and target data participation during intrusion knowledge transfer, target instances that fail to be assigned a hard pseudo-label are assigned a probabilistic soft pseudo-label, forming a hybrid pseudo-labelling strategy. Meanwhile, the ABRSI also makes soft pseudo-labels globally diverse and individually certain. Finally, an error knowledge learning mechanism is utilised to adversarially exploit factors that cause detection ambiguity and to learn from both current and previous error knowledge, preventing error knowledge forgetfulness. Holistically, these mechanisms form the ABRSI model that boosts IoT intrusion detection accuracy via HDA-assisted intrusion knowledge transfer.

Heterogeneous Domain Adaptation for IoT Intrusion Detection: A Geometric Graph Alignment Approach

Jan 24, 2023

Abstract: Data scarcity hinders the usability of data-dependent algorithms when tackling IoT intrusion detection (IID). To address this, we utilise the data-rich network intrusion detection (NID) domain to facilitate more accurate intrusion detection for IID domains. In this paper, a Geometric Graph Alignment (GGA) approach is leveraged to mask the geometric heterogeneities between domains for better intrusion knowledge transfer. Specifically, each intrusion domain is formulated as a graph where vertices and edges represent intrusion categories and category-wise interrelationships, respectively. The overall shape is preserved via a confused discriminator incapable of identifying adjacency matrices between different intrusion domain graphs. A rotation avoidance mechanism and a centre point matching mechanism are used to avoid graph misalignment due to rotation and symmetry, respectively. Besides, category-wise semantic knowledge is transferred to act as vertex-level alignment. To exploit the target data, a pseudo-label election mechanism that jointly considers network prediction, geometric property and neighbourhood information is used to produce fine-grained pseudo-label assignment. Upon aligning the intrusion graphs geometrically from different granularities, the transferred intrusion knowledge can boost IID performance. Comprehensive experiments on several intrusion datasets demonstrate state-of-the-art performance of the GGA approach and validate the usefulness of its constituent components.
