Li Xiong

Exposing Vulnerabilities in Explanation for Time Series Classifiers via Dual-Target Attacks

Feb 02, 2026

Abstract: Interpretable time series deep learning systems are often assessed by checking the temporal consistency of their explanations, implicitly treating this as evidence of robustness. We show that this assumption can fail: predictions and explanations can be adversarially decoupled, enabling targeted misclassification while the explanation remains plausible and consistent with a chosen reference rationale. We propose TSEF (Time Series Explanation Fooler), a dual-target attack that jointly manipulates the classifier and explainer outputs. In contrast to single-objective misclassification attacks that disrupt explanation and spread attribution mass broadly, TSEF achieves targeted prediction changes while keeping explanations consistent with the reference. Across multiple datasets and explainer backbones, our results consistently reveal that explanation stability is a misleading proxy for decision robustness and motivate coupling-aware robustness evaluations for trustworthy time series tasks.

BicKD: Bilateral Contrastive Knowledge Distillation

Feb 01, 2026

Abstract: Knowledge distillation (KD) is a machine learning framework that transfers knowledge from a teacher model to a student model. The vanilla KD proposed by Hinton et al. has been the dominant approach in logit-based distillation and demonstrates compelling performance. However, it only performs sample-wise probability alignment between teacher and student predictions, lacking a mechanism for class-wise comparison. In addition, vanilla KD imposes no structural constraint on the probability space. In this work, we propose a simple yet effective methodology, bilateral contrastive knowledge distillation (BicKD). This approach introduces a novel bilateral contrastive loss, which intensifies the orthogonality among different class generalization spaces while preserving consistency within the same class. The bilateral formulation enables explicit comparison of both sample-wise and class-wise prediction patterns between teacher and student. By emphasizing probabilistic orthogonality, BicKD further regularizes the geometric structure of the predictive distribution. Extensive experiments show that our BicKD method enhances knowledge transfer and consistently outperforms state-of-the-art knowledge distillation techniques across various model architectures and benchmarks.
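
For context, the sample-wise probability alignment that the abstract attributes to vanilla KD can be sketched as a temperature-scaled KL divergence between teacher and student softmax outputs. This is only an illustration of the vanilla baseline for a single sample, not the BicKD bilateral contrastive loss; the function names and the default temperature of 4.0 are assumptions made for this sketch.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def vanilla_kd_loss(teacher_logits, student_logits, temperature=4.0):
    """KL(teacher || student) on softened probabilities for ONE sample.

    The T^2 factor is the usual gradient-scale correction from Hinton et al.
    Function name and default temperature are hypothetical choices.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [5.0, 1.0, -2.0]
    print(vanilla_kd_loss(teacher, teacher))          # identical predictions
    print(vanilla_kd_loss(teacher, [0.0, 0.0, 0.0]))  # uninformed student
```

The loss compares one teacher row against one student row at a time, which is the limitation the abstract points to: nothing in it compares how a given class is predicted across different samples.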

Collaborative Reconstruction and Repair for Multi-class Industrial Anomaly Detection

Dec 12, 2025

Abstract: Industrial anomaly detection is a challenging open-set task that aims to identify unknown anomalous patterns deviating from the normal data distribution. To avoid the significant memory consumption and limited generalizability brought by building separate models per class, we focus on developing a unified framework for multi-class anomaly detection. However, under this challenging setting, conventional reconstruction-based networks often suffer from an identity mapping problem, where they directly replicate input features regardless of whether they are normal or anomalous, resulting in detection failures. To address this issue, this study proposes a novel framework termed Collaborative Reconstruction and Repair (CRR), which recasts reconstruction as repair. First, we optimize the decoder to reconstruct normal samples while repairing synthesized anomalies. Consequently, it generates distinct representations for anomalous regions and similar representations for normal areas compared to the encoder's output. Second, we implement feature-level random masking to ensure that the representations from the decoder contain sufficient local information. Finally, to minimize detection errors arising from the discrepancies between feature representations from the encoder and decoder, we train a segmentation network supervised by synthetic anomaly masks, thereby enhancing localization performance. Extensive experiments on industrial datasets show that CRR effectively mitigates the identity mapping issue and achieves state-of-the-art performance in multi-class industrial anomaly detection.

FusionDP: Foundation Model-Assisted Differentially Private Learning for Partially Sensitive Features

Nov 05, 2025

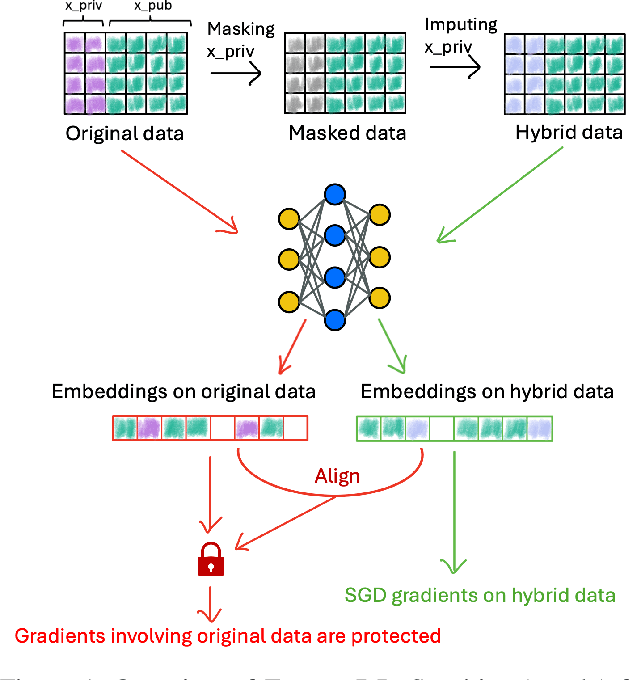

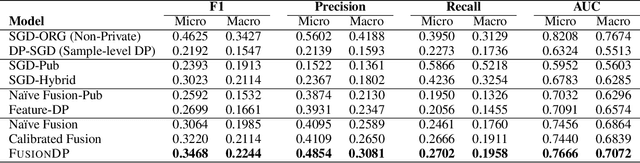

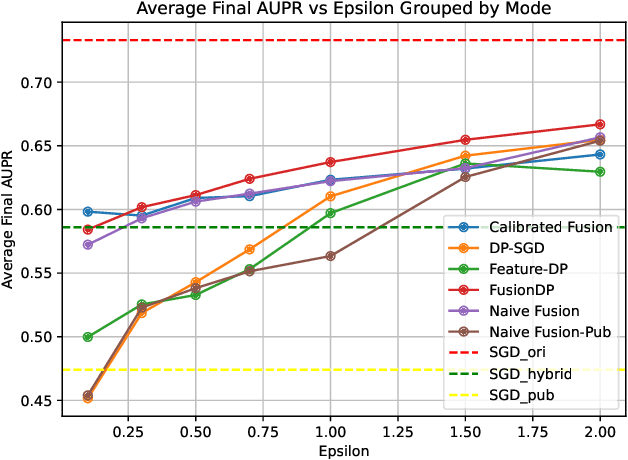

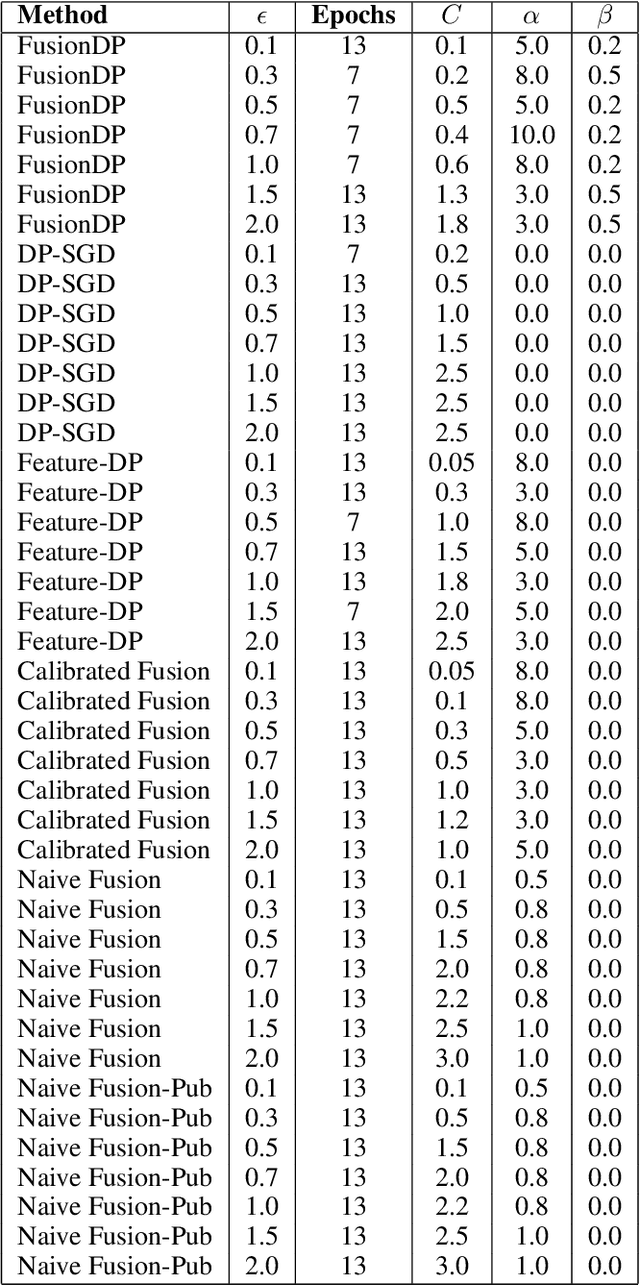

Abstract: Ensuring the privacy of sensitive training data is crucial in privacy-preserving machine learning. However, in practical scenarios, privacy protection may be required for only a subset of features. For instance, in ICU data, demographic attributes like age and gender pose higher privacy risks due to their re-identification potential, whereas raw lab results are generally less sensitive. Traditional DP-SGD enforces privacy protection on all features of a sample, leading to excessive noise injection and significant utility degradation. We propose FusionDP, a two-step framework that enhances model utility under feature-level differential privacy. First, FusionDP leverages large foundation models to impute sensitive features given non-sensitive features, treating them as external priors that provide high-quality estimates of sensitive attributes without accessing the true values during model training. Second, we introduce a modified DP-SGD algorithm that trains models on both original and imputed features while formally preserving the privacy of the original sensitive features. We evaluate FusionDP on two modalities: a sepsis prediction task on tabular data from PhysioNet and a clinical note classification task from MIMIC-III. By comparing against privacy-preserving baselines, our results show that FusionDP significantly improves model performance while maintaining rigorous feature-level privacy, demonstrating the potential of foundation model-driven imputation to enhance the privacy-utility trade-off for various modalities.

Search is All You Need for Few-shot Anomaly Detection

Apr 16, 2025

Abstract: Few-shot anomaly detection (FSAD) has emerged as a crucial yet challenging task in industrial inspection, where normal distribution modeling must be accomplished with only a few normal images. While existing approaches typically employ multi-modal foundation models combining language and vision modalities for prompt-guided anomaly detection, these methods often demand sophisticated prompt engineering and extensive manual tuning. In this paper, we demonstrate that a straightforward nearest-neighbor search framework can surpass state-of-the-art performance in both single-class and multi-class FSAD scenarios. Our proposed method, VisionAD, consists of four simple yet essential components: (1) scalable vision foundation models that extract universal and discriminative features; (2) dual augmentation strategies: support augmentation to enhance feature matching adaptability and query augmentation to address the oversights of single-view prediction; (3) multi-layer feature integration that captures both low-frequency global context and high-frequency local details with minimal computational overhead; and (4) a class-aware visual memory bank enabling efficient one-for-all multi-class detection. Extensive evaluations across MVTec-AD, VisA, and Real-IAD benchmarks demonstrate VisionAD's exceptional performance. Using only 1 normal image as support, our method achieves image-level AUROC scores of 97.4%, 94.8%, and 70.8%, respectively, outperforming current state-of-the-art approaches by significant margins (+1.6%, +3.2%, and +1.4%). The training-free nature and superior few-shot capabilities of VisionAD make it particularly appealing for real-world applications where samples are scarce or expensive to obtain. Code is available at https://github.com/Qiqigeww/VisionAD.
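
The general idea of training-free nearest-neighbor anomaly scoring against a memory bank of normal features can be sketched as follows. This is a generic illustration, not the VisionAD implementation (see the linked repository for that): the cosine distance, the max-over-patches image score, and all function names are assumptions, and the toy 2-D vectors stand in for features that would come from a vision foundation model.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; assumes both vectors are non-zero."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def patch_scores(query_patches, memory_bank):
    """Score each query patch by its distance to the nearest normal patch."""
    return [min(cosine_distance(q, m) for m in memory_bank)
            for q in query_patches]


def image_score(query_patches, memory_bank):
    """Image-level score: the most anomalous patch (an assumed pooling rule)."""
    return max(patch_scores(query_patches, memory_bank))


if __name__ == "__main__":
    # Hypothetical memory bank built from the few normal support images.
    memory_bank = [[1.0, 0.0], [0.0, 1.0]]
    query = [[1.0, 0.0], [0.7, 0.7]]
    print(patch_scores(query, memory_bank))
    print(image_score(query, memory_bank))
```

Because scoring is a lookup rather than a learned model, adding a new class in this kind of scheme only requires appending its normal features to the bank.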

Sharpness-Aware Parameter Selection for Machine Unlearning

Apr 08, 2025

Abstract: It often happens that some sensitive personal information, such as credit card numbers or passwords, is mistakenly incorporated in the training of machine learning models and needs to be removed afterwards. The removal of such information from a trained model is a complex task that needs to partially reverse the training process. Various machine unlearning techniques have been proposed in the literature to address this problem. Most of the proposed methods revolve around removing individual data samples from a trained model. Another, less explored direction is when features/labels of a group of data samples need to be reverted. While the existing methods for these tasks perform unlearning by updating the whole set of model parameters or only the last layer of the model, we show that there is a subset of model parameters that contributes most to unlearning the target features. More precisely, the model parameters with the largest corresponding diagonal value in the Hessian matrix (computed at the learned model parameter) contribute most to the unlearning task. By selecting these parameters and updating them during the unlearning stage, we make the most progress in unlearning. We provide theoretical justifications for the proposed strategy by connecting it to sharpness-aware minimization and robust unlearning. We empirically show the effectiveness of the proposed strategy in improving the efficacy of unlearning with a low computational cost.
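
A minimal sketch of the selection rule described above: rank parameters by their diagonal Hessian entry and update only the top k during unlearning. The abstract does not specify how the Hessian diagonal is estimated or what update is applied, so the precomputed `hessian_diag` list, the plain gradient step, and the function names are all assumptions made for illustration.

```python
def select_parameters(hessian_diag, k):
    """Return indices of the k parameters with the largest Hessian diagonal.

    `hessian_diag` is assumed to be precomputed at the learned parameters.
    """
    ranked = sorted(range(len(hessian_diag)),
                    key=lambda i: hessian_diag[i],
                    reverse=True)
    return sorted(ranked[:k])


def masked_update(params, grads, selected, lr=0.1):
    """Apply a gradient step to the selected parameters only.

    The gradient-descent step on an unlearning objective is a placeholder;
    unselected parameters are left unchanged.
    """
    chosen = set(selected)
    return [p - lr * g if i in chosen else p
            for i, (p, g) in enumerate(zip(params, grads))]


if __name__ == "__main__":
    hessian_diag = [0.1, 2.0, 0.5, 3.0]
    selected = select_parameters(hessian_diag, k=2)
    print(selected)
    print(masked_update([1.0, 1.0, 1.0, 1.0], [1.0, 1.0, 1.0, 1.0],
                        selected, lr=0.5))
```

Restricting the update to a small index set is what keeps the cost low compared with updating every parameter.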

Node-level Contrastive Unlearning on Graph Neural Networks

Mar 04, 2025

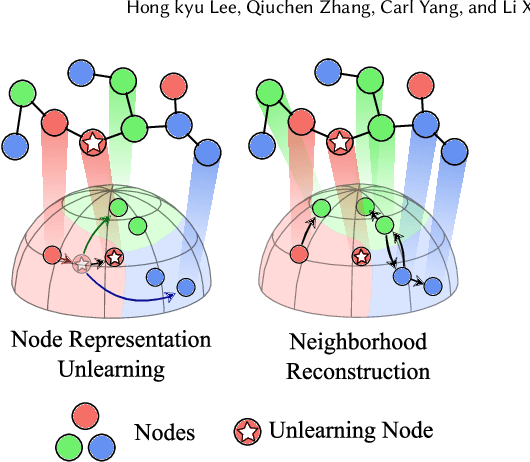

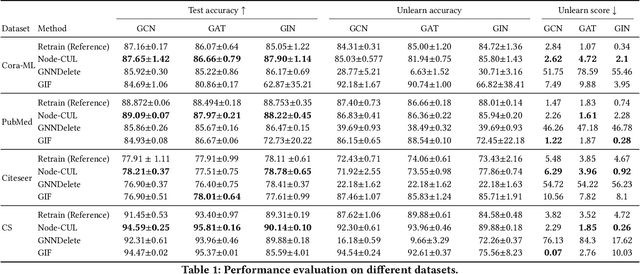

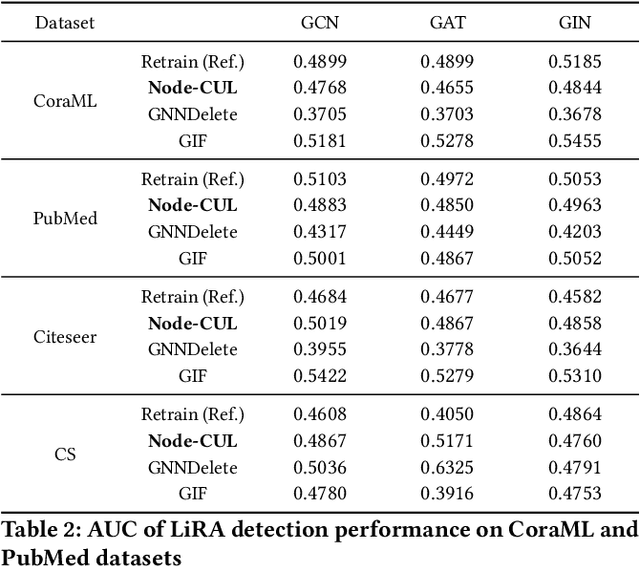

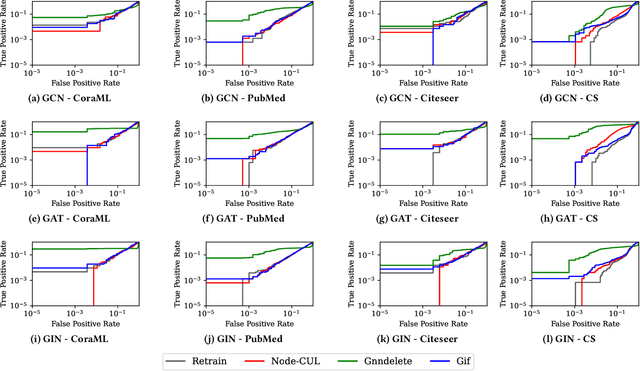

Abstract: Graph unlearning aims to remove a subset of graph entities (i.e., nodes and edges) from a graph neural network (GNN) trained on the graph. Unlike machine unlearning for models trained on Euclidean-structured data, effectively unlearning a model trained on non-Euclidean-structured data, such as graphs, is challenging because graph entities exhibit mutual dependencies. Existing works utilize graph partitioning, influence functions, or additional layers to achieve graph unlearning. However, none of them can achieve high scalability and effectiveness without additional constraints. In this paper, we achieve more effective graph unlearning by utilizing the embedding space. The primary training objective of a GNN is to generate proper embeddings for each node that encapsulate both structural information and node feature representations. Thus, directly optimizing the embedding space can effectively remove the target nodes' information from the model. Based on this intuition, we propose node-level contrastive unlearning (Node-CUL). It removes the influence of the target nodes (unlearning nodes) by contrasting the embeddings of remaining nodes and neighbors of unlearning nodes. Through iterative updates, the embeddings of unlearning nodes gradually become similar to those of unseen nodes, effectively removing the learned information without directly incorporating unseen data. In addition, we introduce a neighborhood reconstruction method that optimizes the embeddings of the neighbors to remove the influence of unlearning nodes while maintaining the utility of the GNN model. Experiments on various graph data and models show that our Node-CUL achieves the best unlearning efficacy and enhanced model utility while requiring computing resources comparable to those of existing frameworks.

Tokens for Learning, Tokens for Unlearning: Mitigating Membership Inference Attacks in Large Language Models via Dual-Purpose Training

Feb 27, 2025

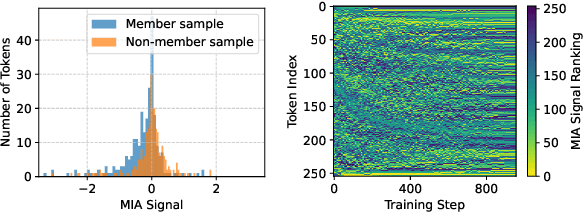

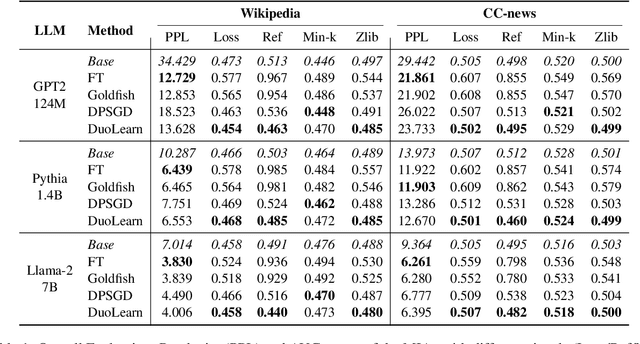

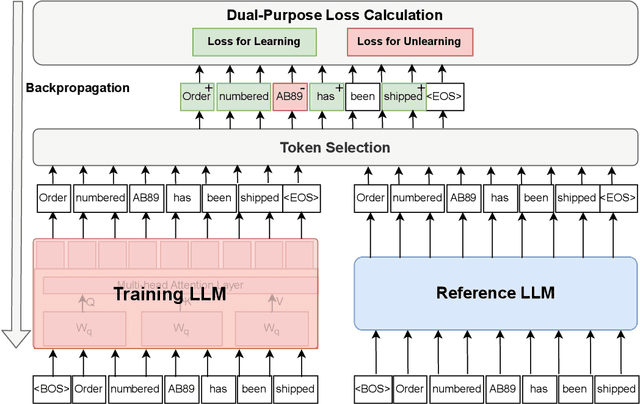

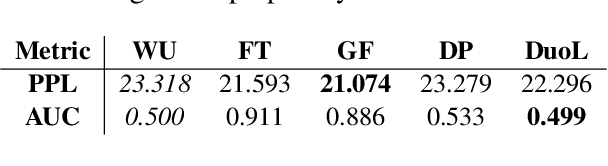

Abstract: Large language models (LLMs) have become the backbone of modern natural language processing but pose privacy concerns about leaking sensitive training data. Membership inference attacks (MIAs), which aim to infer whether a sample is included in a model's training dataset, can serve as a foundation for broader privacy threats. Existing defenses designed for traditional classification models do not account for the sequential nature of text data. As a result, they either require significant computational resources or fail to effectively mitigate privacy risks in LLMs. In this work, we propose a lightweight yet effective empirical privacy defense for protecting training data in language modeling by leveraging token-specific characteristics. By analyzing token dynamics during training, we propose a token selection strategy that categorizes tokens into hard tokens for learning and memorized tokens for unlearning. Subsequently, our training-phase defense optimizes a novel dual-purpose token-level loss to achieve a Pareto-optimal balance between utility and privacy. Extensive experiments demonstrate that our approach not only provides strong protection against MIAs but also improves language modeling performance by around 10% across various LLM architectures and datasets compared to the baselines.

HARBOR: Exploring Persona Dynamics in Multi-Agent Competition

Feb 17, 2025

Abstract: We investigate factors contributing to LLM agents' success in competitive multi-agent environments, using auctions as a testbed where agents bid to maximize profit. The agents are equipped with bidding domain knowledge, distinct personas that reflect item preferences, and a memory of auction history. Our work extends the classic auction scenario by creating a realistic environment where multiple agents bid on houses, weighing aspects such as size, location, and budget to secure the most desirable homes at the lowest prices. In particular, we investigate three key questions: (a) How does a persona influence an agent's behavior in a competitive setting? (b) Can an agent effectively profile its competitors' behavior during auctions? (c) How can persona profiling be leveraged to create an advantage using strategies such as theory of mind? Through a series of experiments, we analyze the behaviors of LLM agents and shed light on new findings. Our testbed, called HARBOR, offers a valuable platform for deepening our understanding of multi-agent workflows in competitive environments.

Auto-Search and Refinement: An Automated Framework for Gender Bias Mitigation in Large Language Models

Feb 17, 2025

Abstract: Pre-training large language models (LLMs) on vast text corpora enhances natural language processing capabilities but risks encoding social biases, particularly gender bias. While parameter-modification methods like fine-tuning mitigate bias, they are resource-intensive, unsuitable for closed-source models, and lack adaptability to evolving societal norms. Instruction-based approaches offer flexibility but often compromise task performance. To address these limitations, we propose FaIRMaker, an automated and model-independent framework that employs an auto-search and refinement paradigm to adaptively generate Fairwords, which act as instructions integrated into input queries to reduce gender bias and enhance response quality. Extensive experiments demonstrate that FaIRMaker automatically searches for and dynamically refines Fairwords, effectively mitigating gender bias while preserving task integrity and ensuring compatibility with both API-based and open-source LLMs.
