Minghao Chen

VideoARM: Agentic Reasoning over Hierarchical Memory for Long-Form Video Understanding

Dec 13, 2025

Abstract:Long-form video understanding remains challenging due to the extended temporal structure and dense multimodal cues. Despite recent progress, many existing approaches still rely on hand-crafted reasoning pipelines or employ token-consuming video preprocessing to guide MLLMs in autonomous reasoning. To overcome these limitations, we introduce VideoARM, an Agentic Reasoning-over-hierarchical-Memory paradigm for long-form video understanding. Instead of static, exhaustive preprocessing, VideoARM performs adaptive, on-the-fly agentic reasoning and memory construction. Specifically, VideoARM performs an adaptive and continuous loop of observing, thinking, acting, and memorizing, where a controller autonomously invokes tools to interpret the video in a coarse-to-fine manner, thereby substantially reducing token consumption. In parallel, a hierarchical multimodal memory continuously captures and updates multi-level clues throughout the operation of the agent, providing precise contextual information to support the controller in decision-making. Experiments on prevalent benchmarks demonstrate that VideoARM outperforms the state-of-the-art method, DVD, while significantly reducing token consumption for long-form videos.

Sensing-enabled Secure Rotatable Array System Enhanced by Multi-Layer Transmitting RIS

Nov 17, 2025

Abstract:Programmable metasurfaces and adjustable antennas are promising technologies. This paper investigates the security of a rotatable array system. A dual-base-station (BS) architecture is adopted, in which the BSs collaboratively perform integrated sensing of the eavesdropper (the target) and communication tasks. To address the security challenge that arises when the sensing target is located on the main communication link, the problem of maximizing the secrecy rate (SR) under sensing signal-to-interference-plus-noise ratio requirements and discrete constraints is formulated. This non-convex problem involves the joint optimization of the array pose, the antenna distribution on the array surface, the multi-layer transmitting RIS phase matrices, and the beamforming matrices. To solve it, a two-stage online algorithm based on the generalized Rayleigh quotient and an offline algorithm based on the Multi-Agent Deep Deterministic Policy Gradient are proposed. Simulation results validate the effectiveness of the proposed algorithms. Compared to conventional schemes without array pose adjustment, the proposed approach achieves approximately 22% improvement in SR. Furthermore, array rotation provides higher performance gains than position changes.
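As a rough illustration of why a generalized Rayleigh quotient appears in secrecy-rate maximization, the sketch below uses a toy single-user model that is an assumption, not the paper's system: with unit noise power and a unit-norm beamformer w, the ratio (1 + |h^H w|^2) / (1 + |g^H w|^2) equals (w^H A w) / (w^H B w) with A = I + h h^H and B = I + g g^H. The channels `h` and `g` and the helper names are hypothetical. The maximizer is the principal generalized eigenvector of the pair (A, B).

```python
import numpy as np


def max_generalized_rayleigh(A, B):
    """Maximize (w^H A w) / (w^H B w) for Hermitian A and
    Hermitian positive-definite B. Returns (w, max_value)."""
    # Whiten with the Cholesky factor B = L L^H so the problem becomes an
    # ordinary Hermitian eigenproblem for C = L^{-1} A L^{-H}.
    L = np.linalg.cholesky(B)
    L_inv = np.linalg.inv(L)
    C = L_inv @ A @ L_inv.conj().T
    C = (C + C.conj().T) / 2  # remove round-off asymmetry
    eigvals, eigvecs = np.linalg.eigh(C)  # eigenvalues in ascending order
    v = eigvecs[:, -1]
    w = L_inv.conj().T @ v  # map back: w = L^{-H} v
    return w / np.linalg.norm(w), float(eigvals[-1])


def rayleigh(A, B, w):
    """Evaluate the generalized Rayleigh quotient at w."""
    return float(np.real(w.conj() @ A @ w) / np.real(w.conj() @ B @ w))


# Toy data (assumed): random legitimate channel h and eavesdropper channel g.
rng = np.random.default_rng(0)
n = 4
h = rng.standard_normal(n) + 1j * rng.standard_normal(n)
g = rng.standard_normal(n) + 1j * rng.standard_normal(n)
A = np.eye(n) + np.outer(h, h.conj())
B = np.eye(n) + np.outer(g, g.conj())

w_opt, best = max_generalized_rayleigh(A, B)
toy_secrecy_rate = np.log2(best)  # bits/s/Hz in this toy model only
```

The paper's formulation additionally includes sensing constraints, discrete variables, array pose, and RIS phases, none of which are modeled here.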

SRSplat: Feed-Forward Super-Resolution Gaussian Splatting from Sparse Multi-View Images

Nov 15, 2025

Abstract:Feed-forward 3D reconstruction from sparse, low-resolution (LR) images is a crucial capability for real-world applications, such as autonomous driving and embodied AI. However, existing methods often fail to recover fine texture details. This limitation stems from the inherent lack of high-frequency information in LR inputs. To address this, we propose SRSplat, a feed-forward framework that reconstructs high-resolution 3D scenes from only a few LR views. Our main insight is to compensate for the deficiency of texture information by jointly leveraging external high-quality reference images and internal texture cues. We first construct a scene-specific reference gallery, generated for each scene using Multimodal Large Language Models (MLLMs) and diffusion models. To integrate this external information, we introduce the Reference-Guided Feature Enhancement (RGFE) module, which aligns and fuses features from the LR input images and their reference twin images. Subsequently, we train a decoder to predict the Gaussian primitives using the multi-view fused features obtained from RGFE. To further refine the predicted Gaussian primitives, we introduce Texture-Aware Density Control (TADC), which adaptively adjusts Gaussian density based on the internal texture richness of the LR inputs. Extensive experiments demonstrate that SRSplat outperforms existing methods on various datasets, including RealEstate10K, ACID, and DTU, and exhibits strong cross-dataset and cross-resolution generalization capabilities.

Sparse4DGS: 4D Gaussian Splatting for Sparse-Frame Dynamic Scene Reconstruction

Nov 10, 2025

Abstract:Dynamic Gaussian Splatting approaches have achieved remarkable performance for 4D scene reconstruction. However, these approaches rely on dense-frame video sequences for photorealistic reconstruction. In real-world scenarios, due to equipment constraints, sometimes only sparse frames are accessible. In this paper, we propose Sparse4DGS, the first method for sparse-frame dynamic scene reconstruction. We observe that dynamic reconstruction methods fail in both canonical and deformed spaces under sparse-frame settings, especially in areas with high texture richness. Sparse4DGS tackles this challenge by focusing on texture-rich areas. For the deformation network, we propose Texture-Aware Deformation Regularization, which introduces a texture-based depth alignment loss to regulate Gaussian deformation. For the canonical Gaussian field, we introduce Texture-Aware Canonical Optimization, which incorporates texture-based noise into the gradient descent process of canonical Gaussians. Extensive experiments show that when taking sparse frames as inputs, our method outperforms existing dynamic or few-shot techniques on NeRF-Synthetic, HyperNeRF, NeRF-DS, and our iPhone-4D datasets.

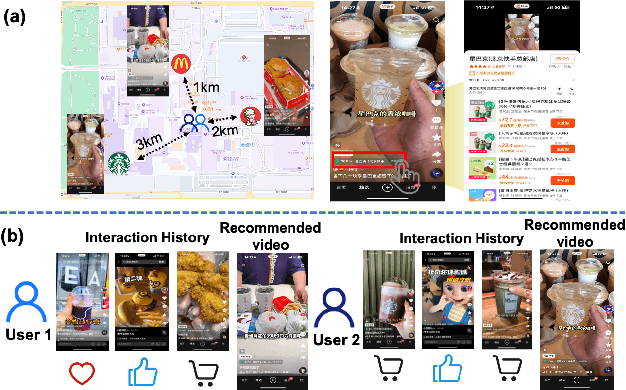

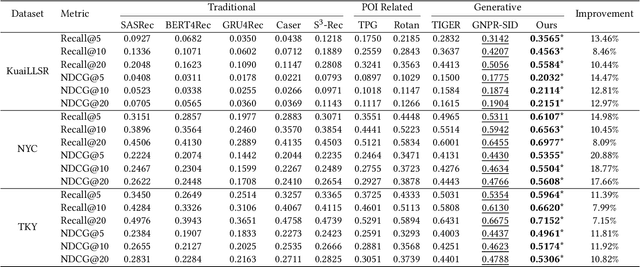

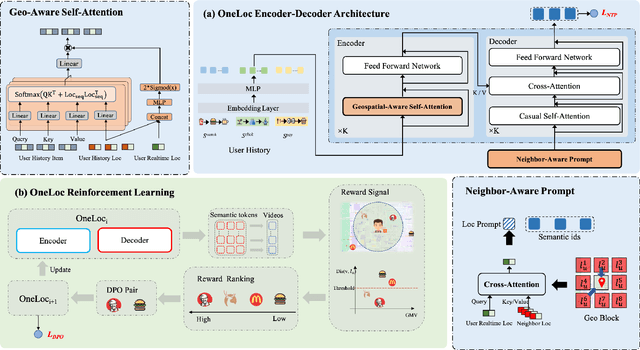

OneLoc: Geo-Aware Generative Recommender Systems for Local Life Service

Aug 20, 2025

Abstract:Local life service is a vital scenario in the Kuaishou App, where video recommendation is intrinsically linked with stores' location information. Recommendation in this scenario is challenging because users' interests and real-time locations must be taken into account at the same time. For such complex scenarios, end-to-end generative recommendation has emerged as a new paradigm, with examples such as OneRec in the short video scenario, OneSug in the search scenario, and EGA in the advertising scenario. However, in local life service, an end-to-end generative recommendation model has not yet been developed because several key challenges remain. The first challenge is how to make full use of geographic information. The second challenge is how to balance multiple objectives, including user interests, the distance between users and stores, and other business objectives. To address these challenges, we propose OneLoc. Specifically, we leverage geographic information from different perspectives: (1) geo-aware semantic ID incorporates both video and geographic information for tokenization, (2) geo-aware self-attention in the encoder leverages both video location similarity and the user's real-time location, and (3) neighbor-aware prompt captures rich context information surrounding users for generation. To balance multiple objectives, we use reinforcement learning and propose two reward functions, i.e., geographic reward and GMV reward. With the above design, OneLoc achieves outstanding offline and online performance. OneLoc has been deployed in the local life service of the Kuaishou App. It serves 400 million active users daily, achieving 21.016% and 17.891% improvements in gross merchandise value (GMV) and order numbers, respectively.

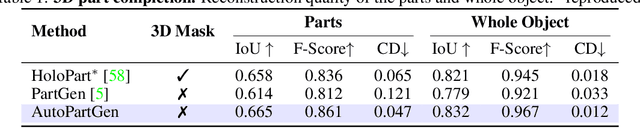

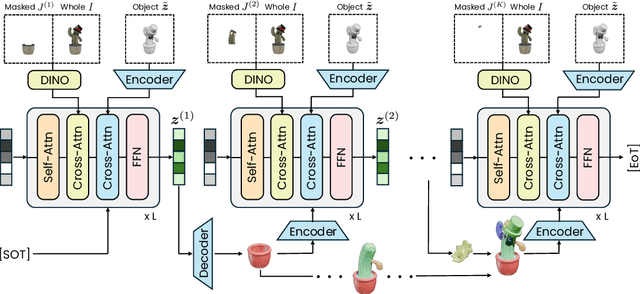

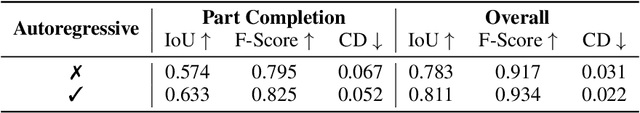

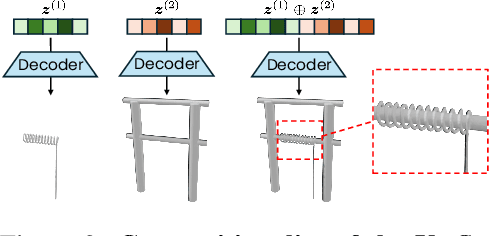

AutoPartGen: Autogressive 3D Part Generation and Discovery

Jul 17, 2025

Abstract:We introduce AutoPartGen, a model that generates objects composed of 3D parts in an autoregressive manner. This model can take as input an image of an object, 2D masks of the object's parts, or an existing 3D object, and generate a corresponding compositional 3D reconstruction. Our approach builds upon 3DShape2VecSet, a recent latent 3D representation with powerful geometric expressiveness. We observe that this latent space exhibits strong compositional properties, making it particularly well-suited for part-based generation tasks. Specifically, AutoPartGen generates object parts autoregressively, predicting one part at a time while conditioning on previously generated parts and additional inputs, such as 2D images, masks, or 3D objects. This process continues until the model decides that all parts have been generated, thus determining automatically the type and number of parts. The resulting parts can be seamlessly assembled into coherent objects or scenes without requiring additional optimization. We evaluate both the overall 3D generation capabilities and the part-level generation quality of AutoPartGen, demonstrating that it achieves state-of-the-art performance in 3D part generation.

AI-empowered Channel Estimation for Block-based Active IRS-enhanced Hybrid-field IoT Network

May 20, 2025

Abstract:In this paper, channel estimation (CE) for uplink hybrid-field communications involving multiple Internet of Things (IoT) devices assisted by an active intelligent reflecting surface (IRS) is investigated. Firstly, to reduce the complexity of near-field (NF) channel modeling and estimation between IoT devices and the active IRS, a sub-blocking strategy for the active IRS is proposed. Specifically, the entire active IRS is divided into multiple smaller sub-blocks, so that IoT devices are located in the far-field (FF) region of each sub-block, while also being located in the NF region of the entire active IRS. This strategy significantly simplifies the channel model and reduces the parameter estimation dimension by decoupling the high-dimensional NF channel parameter space into low-dimensional FF sub-channels. Subsequently, the relationship between channel approximation error and CE error with respect to the number of sub-blocks is derived, and the optimal number of sub-blocks is obtained by minimizing the total error. In addition, considering that the amplification capability of the active IRS requires power consumption, a closed-form expression for the optimal power allocation factor is derived. To further reduce the pilot overhead, a lightweight CE algorithm based on a convolutional autoencoder (CAE) and a multi-head attention mechanism, called CAEformer, is designed. The Cramér-Rao lower bound is derived to evaluate the proposed algorithm's performance. Finally, simulation results demonstrate that the proposed CAEformer network significantly outperforms the conventional least squares and minimum mean square error schemes in terms of estimation accuracy.
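The geometric idea behind sub-blocking can be sketched with the standard Rayleigh (Fraunhofer) distance 2D²/λ, where D is the aperture and λ the wavelength. The sketch assumes a linear aperture split into equal sub-blocks; the function names and the numbers are hypothetical. It only finds the smallest number of sub-blocks that places a device in the far field of each sub-block, which is a lower bound from geometry and not the error-minimizing optimum derived in the paper.

```python
def rayleigh_distance(aperture_m, wavelength_m):
    """Far-field boundary 2 * D^2 / lambda for an aperture of size D."""
    return 2.0 * aperture_m ** 2 / wavelength_m


def min_sub_blocks_for_far_field(aperture_m, wavelength_m, device_distance_m):
    """Smallest k such that a device at the given distance lies beyond the
    Rayleigh distance of a sub-block of size aperture_m / k."""
    k = 1
    while rayleigh_distance(aperture_m / k, wavelength_m) > device_distance_m:
        k += 1
    return k


# Assumed example: 1 m aperture, 1 cm wavelength (30 GHz), device at 20 m.
full_boundary = rayleigh_distance(1.0, 0.01)          # about 200 m: near field
k_min = min_sub_blocks_for_far_field(1.0, 0.01, 20.0)  # 4 sub-blocks
sub_boundary = rayleigh_distance(1.0 / k_min, 0.01)    # about 12.5 m: far field
```

In this example the device sits in the near field of the whole surface (20 m is well inside 200 m) but in the far field of each of the four sub-blocks, which matches the hybrid-field setting the abstract describes.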

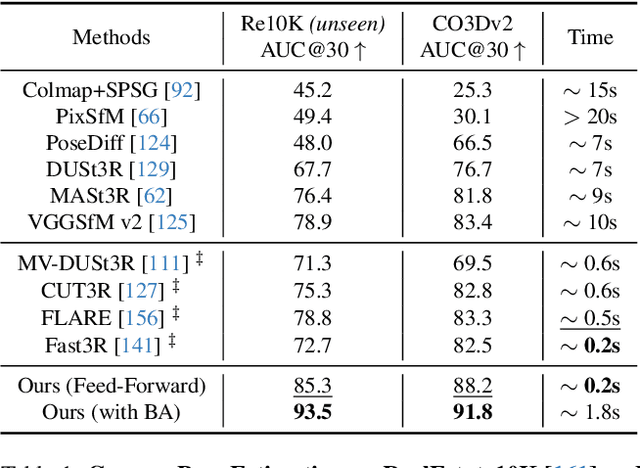

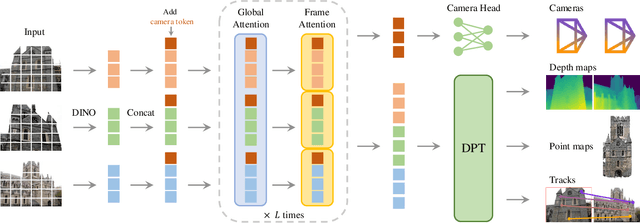

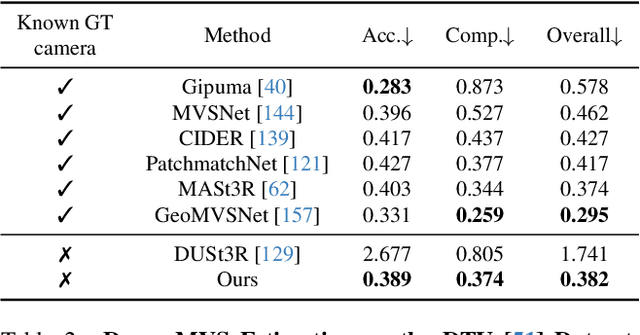

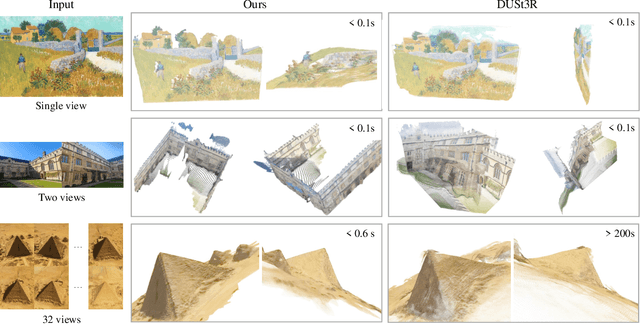

VGGT: Visual Geometry Grounded Transformer

Mar 14, 2025

Abstract:We present VGGT, a feed-forward neural network that directly infers all key 3D attributes of a scene, including camera parameters, point maps, depth maps, and 3D point tracks, from one, a few, or hundreds of its views. This approach is a step forward in 3D computer vision, where models have typically been constrained to and specialized for single tasks. It is also simple and efficient, reconstructing images in under one second, and still outperforming alternatives that require post-processing with visual geometry optimization techniques. The network achieves state-of-the-art results in multiple 3D tasks, including camera parameter estimation, multi-view depth estimation, dense point cloud reconstruction, and 3D point tracking. We also show that using pretrained VGGT as a feature backbone significantly enhances downstream tasks, such as non-rigid point tracking and feed-forward novel view synthesis. Code and models are publicly available at https://github.com/facebookresearch/vggt.

PartGen: Part-level 3D Generation and Reconstruction with Multi-View Diffusion Models

Dec 24, 2024

Abstract:Text- or image-to-3D generators and 3D scanners can now produce 3D assets with high-quality shapes and textures. These assets typically consist of a single, fused representation, like an implicit neural field, a Gaussian mixture, or a mesh, without any useful structure. However, most applications and creative workflows require assets to be made of several meaningful parts that can be manipulated independently. To address this gap, we introduce PartGen, a novel approach that generates 3D objects composed of meaningful parts starting from text, an image, or an unstructured 3D object. First, given multiple views of a 3D object, generated or rendered, a multi-view diffusion model extracts a set of plausible and view-consistent part segmentations, dividing the object into parts. Then, a second multi-view diffusion model takes each part separately, fills in the occlusions, and uses those completed views for 3D reconstruction by feeding them to a 3D reconstruction network. This completion process considers the context of the entire object to ensure that the parts integrate cohesively. The generative completion model can make up for the information missing due to occlusions; in extreme cases, it can hallucinate entirely invisible parts based on the input 3D asset. We evaluate our method on generated and real 3D assets and show that it outperforms segmentation and part-extraction baselines by a large margin. We also showcase downstream applications such as 3D part editing.

Multi-modal Iterative and Deep Fusion Frameworks for Enhanced Passive DOA Sensing via a Green Massive H2AD MIMO Receiver

Nov 11, 2024

Abstract:Most existing direction-of-arrival (DOA) estimation methods assume ideal source incident angles with minimal noise. Moreover, directly using pre-estimated angles to calculate weighted coefficients can lead to performance loss. Thus, a green multi-modal (MM) fusion DOA framework is proposed to realize more practical, low-cost, and time-efficient DOA estimation for an H$^2$AD array. Firstly, two more efficient clustering methods, global maximum cos_similarity clustering (GMaxCS) and global minimum distance clustering (GMinD), are presented to infer more precise true solutions from the candidate solution sets. Based on this, an iteration weighted fusion (IWF)-based method is introduced to iteratively update the weighted fusion coefficients and the clustering center of the true solution classes by using the estimated values. In particular, the coarse DOA calculated by the fully digital (FD) subarray serves as the initial cluster center. The above process yields two methods called MM-IWF-GMaxCS and MM-IWF-GMinD. To further provide higher-accuracy DOA estimation, a fusion network (fusionNet) is proposed to aggregate the inferred two-part true angles, yielding two effective approaches called MM-fusionNet-GMaxCS and MM-fusionNet-GMinD. The simulation results show that the four proposed approaches can achieve the ideal DOA performance and the CRLB. Meanwhile, the proposed MM-fusionNet-GMaxCS and MM-fusionNet-GMinD exhibit superior DOA performance compared to MM-IWF-GMaxCS and MM-IWF-GMinD, especially in the extremely low SNR range.
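The selection-then-update idea can be illustrated with a deliberately simplified stand-in: nearest-candidate selection with iterative re-centering. This is not the GMinD or IWF algorithm from the paper. The sketch assumes each candidate set contains one true angle plus spurious ones, uses an unweighted mean where the paper uses weighted fusion coefficients, and all names and numbers are hypothetical.

```python
def select_and_fuse(candidate_sets, coarse_doa_deg, n_iter=5):
    """Pick, from each candidate set, the angle nearest to the current
    center, then move the center to the mean of the picks; repeat."""
    center = float(coarse_doa_deg)  # coarse estimate as initial center
    picks = []
    for _ in range(n_iter):
        picks = [min(c, key=lambda a: abs(a - center)) for c in candidate_sets]
        center = sum(picks) / len(picks)  # unweighted fusion (assumption)
    return center, picks


# Assumed toy data: three candidate sets (degrees), each holding one
# near-true angle around 30 degrees plus two spurious candidates.
sets = [
    [-41.0, 29.6, 75.2],
    [-12.3, 30.5, 58.0],
    [5.0, 30.2, 49.9],
]
fused, chosen = select_and_fuse(sets, coarse_doa_deg=27.0)
```

With the coarse estimate at 27 degrees, the picks are 29.6, 30.5, and 30.2, and the fused estimate settles near 30.1 degrees after the first pass.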
