Yu Yao

DINO-Detect: A Simple yet Effective Framework for Blur-Robust AI-Generated Image Detection

Nov 18, 2025

Abstract:With growing concerns over image authenticity and digital safety, the field of AI-generated image (AIGI) detection has progressed rapidly. Yet, most AIGI detectors still struggle under real-world degradations, particularly motion blur, which frequently occurs in handheld photography, fast motion, and compressed video. Such blur distorts fine textures and suppresses high-frequency artifacts, causing severe performance drops in real-world settings. We address this limitation with a blur-robust AIGI detection framework based on teacher-student knowledge distillation. A high-capacity teacher (DINOv3), trained on clean (i.e., sharp) images, provides stable and semantically rich representations that serve as a reference for learning. By freezing the teacher to maintain its generalization ability, we distill its feature and logit responses from sharp images to a student trained on blurred counterparts, enabling the student to produce consistent representations under motion degradation. Extensive benchmark experiments show that our method achieves state-of-the-art performance under both motion-blurred and clean conditions, demonstrating improved generalization and real-world applicability. Source code will be released at: https://github.com/JiaLiangShen/Dino-Detect-for-blur-robust-AIGC-Detection.

VitalBench: A Rigorous Multi-Center Benchmark for Long-Term Vital Sign Prediction in Intraoperative Care

Nov 14, 2025

Abstract:Intraoperative monitoring and prediction of vital signs are critical for ensuring patient safety and improving surgical outcomes. Despite recent advances in deep learning models for medical time-series forecasting, several challenges persist, including the lack of standardized benchmarks, incomplete data, and limited cross-center validation. To address these challenges, we introduce VitalBench, a novel benchmark specifically designed for intraoperative vital sign prediction. VitalBench includes data from over 4,000 surgeries across two independent medical centers, offering three evaluation tracks: complete data, incomplete data, and cross-center generalization. This framework reflects the real-world complexities of clinical practice, minimizing reliance on extensive preprocessing and incorporating masked loss techniques for robust and unbiased model evaluation. By providing a standardized and unified platform for model development and comparison, VitalBench enables researchers to focus on architectural innovation while ensuring consistency in data handling. This work lays the foundation for advancing predictive models for intraoperative vital sign forecasting, ensuring that these models are not only accurate but also robust and adaptable across diverse clinical environments. Our code and data are available at https://github.com/XiudingCai/VitalBench.
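
The masked loss mentioned in the VitalBench abstract can be illustrated with a minimal sketch. The abstract does not give the exact formulation, so the function below is an assumption: a mean squared error averaged only over observed time steps, with a hypothetical 0/1 `mask` marking which readings were recorded.

```python
from typing import Sequence


def masked_mse(pred: Sequence[float], target: Sequence[float], mask: Sequence[int]) -> float:
    """Mean squared error over observed positions only (mask == 1).

    Illustrative assumption, not VitalBench's actual loss: positions with
    mask == 0 (missing readings) contribute nothing to the error.
    """
    if not (len(pred) == len(target) == len(mask)):
        raise ValueError("pred, target, and mask must have the same length")
    observed = sum(1 for m in mask if m)
    if observed == 0:
        return 0.0  # nothing observed, so there is no error to report
    # Skip masked positions entirely so NaN placeholders never enter the sum.
    total = sum((p - t) ** 2 for p, t, m in zip(pred, target, mask) if m)
    return total / observed


# The third reading is missing (NaN) and is ignored by the loss.
loss = masked_mse([1.0, 2.0, 3.0], [1.0, 0.0, float("nan")], [1, 1, 0])
```

Dividing by the number of observed points rather than the sequence length keeps sequences with many gaps from appearing artificially accurate.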

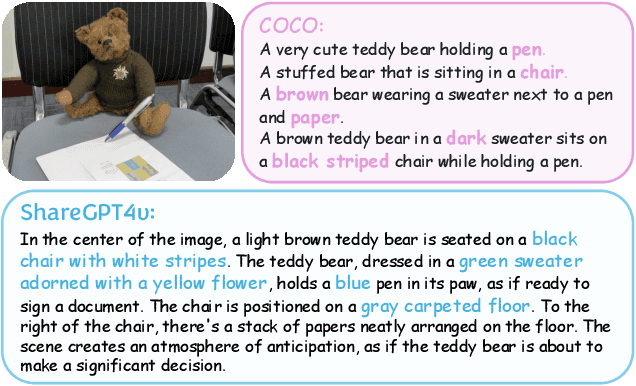

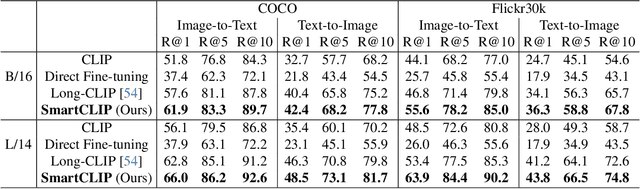

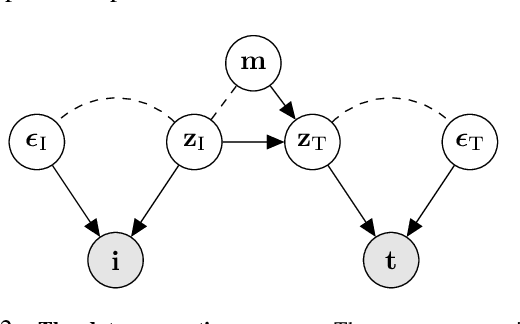

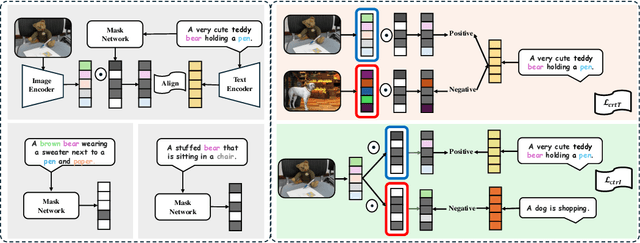

SmartCLIP: Modular Vision-language Alignment with Identification Guarantees

Jul 29, 2025

Abstract:Contrastive Language-Image Pre-training (CLIP) (Radford et al., 2021) has emerged as a pivotal model in computer vision and multimodal learning, achieving state-of-the-art performance at aligning visual and textual representations through contrastive learning. However, CLIP struggles with potential information misalignment in many image-text datasets and suffers from entangled representation. On the one hand, short captions for a single image in datasets like MSCOCO may describe disjoint regions in the image, leaving the model uncertain about which visual features to retain or disregard. On the other hand, directly aligning long captions with images can lead to the retention of entangled details, preventing the model from learning disentangled, atomic concepts, ultimately limiting its generalization on certain downstream tasks involving short prompts. In this paper, we establish theoretical conditions that enable flexible alignment between textual and visual representations across varying levels of granularity. Specifically, our framework ensures that a model can not only preserve cross-modal semantic information in its entirety but also disentangle visual representations to capture fine-grained textual concepts. Building on this foundation, we introduce SmartCLIP, a novel approach that identifies and aligns the most relevant visual and textual representations in a modular manner. Superior performance across various tasks demonstrates its capability to handle information misalignment and supports our identification theory. The code is available at https://github.com/Mid-Push/SmartCLIP.

A Sample Efficient Conditional Independence Test in the Presence of Discretization

Jun 10, 2025

Abstract:In many real-world scenarios, variables of interest are often represented as discretized values due to measurement limitations. Applying Conditional Independence (CI) tests directly to such discretized data, however, can lead to incorrect conclusions. To address this, recent advancements have sought to infer the correct CI relationship between the latent variables through binarizing observed data. However, this process inevitably results in a loss of information, which degrades the test's performance. Motivated by this, this paper introduces a sample-efficient CI test that does not rely on the binarization process. We find that the independence relationships of latent continuous variables can be established by addressing an over-identifying restriction problem with the Generalized Method of Moments (GMM). Based on this insight, we derive an appropriate test statistic and establish its asymptotic distribution, which correctly reflects CI, by leveraging nodewise regression. Theoretical findings and empirical results across various datasets demonstrate the superiority and effectiveness of our proposed test. Our code implementation is provided at https://github.com/boyangaaaaa/DCT

Beyond Optimal Transport: Model-Aligned Coupling for Flow Matching

May 29, 2025

Abstract:Flow Matching (FM) is an effective framework for training a model to learn a vector field that transports samples from a source distribution to a target distribution. To train the model, early FM methods use random couplings, which often result in crossing paths and lead the model to learn non-straight trajectories that require many integration steps to generate high-quality samples. To address this, recent methods adopt Optimal Transport (OT) to construct couplings by minimizing geometric distances, which helps reduce path crossings. However, we observe that such geometry-based couplings do not necessarily align with the model's preferred trajectories, making it difficult to learn the vector field induced by these couplings, which prevents the model from learning straight trajectories. Motivated by this, we propose Model-Aligned Coupling (MAC), an effective method that matches training couplings based not only on geometric distance but also on alignment with the model's preferred transport directions, as measured by its prediction error. To avoid a time-consuming matching process, MAC selects the top-$k$ fraction of couplings with the lowest error for training. Extensive experiments show that MAC significantly improves generation quality and efficiency in few-step settings compared to existing methods. Project page: https://yexionglin.github.io/mac
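
The selection step described in the MAC abstract, keeping the top-$k$ fraction of couplings with the lowest error, might look like the sketch below. It covers only that filtering step. How the candidate couplings are built and how the prediction error is computed are not specified in the abstract, so `pairs`, `errors`, and `k_fraction` are hypothetical inputs.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[str, str]  # placeholder type for a (source, target) coupling


def select_lowest_error(pairs: Sequence[Pair], errors: Sequence[float], k_fraction: float) -> List[Pair]:
    """Keep the k_fraction of couplings whose error is lowest.

    Assumption for illustration: `errors[i]` is a precomputed per-coupling
    prediction error; the paper's exact error definition is not given here.
    """
    if not 0.0 < k_fraction <= 1.0:
        raise ValueError("k_fraction must be in (0, 1]")
    if len(pairs) != len(errors):
        raise ValueError("pairs and errors must have the same length")
    n_keep = max(1, int(len(pairs) * k_fraction))
    # Sort indices by ascending error and keep the first n_keep.
    order = sorted(range(len(pairs)), key=lambda i: errors[i])
    return [pairs[i] for i in order[:n_keep]]


candidates = [("x0", "y0"), ("x1", "y1"), ("x2", "y2"), ("x3", "y3")]
kept = select_lowest_error(candidates, [0.9, 0.1, 0.5, 0.3], k_fraction=0.5)
```

Filtering by a sorted error list costs one sort per batch, which is one plausible reading of how the method avoids an explicit matching procedure.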

SP2RINT: Spatially-Decoupled Physics-Inspired Progressive Inverse Optimization for Scalable, PDE-Constrained Meta-Optical Neural Network Training

May 23, 2025

Abstract:Diffractive optical neural networks (DONNs) harness the physics of light propagation for efficient analog computation, with applications in AI and signal processing. Advances in nanophotonic fabrication and metasurface-based wavefront engineering have opened new pathways to realize high-capacity DONNs across various spectral regimes. Training such DONN systems to determine the metasurface structures remains challenging. Heuristic methods are fast but oversimplify metasurface modulation, often resulting in physically unrealizable designs and significant performance degradation. Simulation-in-the-loop training methods directly optimize a physically implementable metasurface using adjoint methods during end-to-end DONN training, but are inherently computationally prohibitive and unscalable. To address these limitations, we propose SP2RINT, a spatially decoupled, progressive training framework that formulates DONN training as a PDE-constrained learning problem. Metasurface responses are first relaxed into freely trainable transfer matrices with a banded structure. We then progressively enforce physical constraints by alternating between transfer matrix training and adjoint-based inverse design, avoiding per-iteration PDE solves while ensuring final physical realizability. To further reduce runtime, we introduce a physics-inspired, spatially decoupled inverse design strategy based on the natural locality of field interactions. This approach partitions the metasurface into independently solvable patches, enabling scalable and parallel inverse design with system-level calibration. Evaluated across diverse DONN training tasks, SP2RINT achieves digital-comparable accuracy while being 1825 times faster than simulation-in-the-loop approaches. By bridging the gap between abstract DONN models and implementable photonic hardware, SP2RINT enables scalable, high-performance training of physically realizable meta-optical neural systems.

Mamba-VA: A Mamba-based Approach for Continuous Emotion Recognition in Valence-Arousal Space

Mar 13, 2025

Abstract:Continuous Emotion Recognition (CER) plays a crucial role in intelligent human-computer interaction, mental health monitoring, and autonomous driving. Emotion modeling based on the Valence-Arousal (VA) space enables a more nuanced representation of emotional states. However, existing methods still face challenges in handling long-term dependencies and capturing complex temporal dynamics. To address these issues, this paper proposes a novel emotion recognition model, Mamba-VA, which leverages the Mamba architecture to efficiently model sequential emotional variations in video frames. First, the model employs a Masked Autoencoder (MAE) to extract deep visual features from video frames, enhancing the robustness of temporal information. Then, a Temporal Convolutional Network (TCN) is utilized for temporal modeling to capture local temporal dependencies. Subsequently, Mamba is applied for long-sequence modeling, enabling the learning of global emotional trends. Finally, a fully connected (FC) layer performs regression analysis to predict continuous valence and arousal values. Experimental results on the Valence-Arousal (VA) Estimation task of the 8th competition on Affective Behavior Analysis in-the-wild (ABAW) demonstrate that the proposed model achieves valence and arousal scores of 0.5362 (0.5036) and 0.4310 (0.4119) on the validation (test) set, respectively, outperforming the baseline. The source code is available on GitHub: https://github.com/FreedomPuppy77/Charon.

Semantic Data Augmentation Enhanced Invariant Risk Minimization for Medical Image Domain Generalization

Feb 08, 2025

Abstract:Deep learning has achieved remarkable success in medical image classification. However, its clinical application is often hindered by data heterogeneity caused by variations in scanner vendors, imaging protocols, and operators. Approaches such as invariant risk minimization (IRM) aim to address this challenge of out-of-distribution generalization. For instance, VIRM improves upon IRM by tackling the issue of insufficient feature support overlap, demonstrating promising potential. Nonetheless, these methods face limitations in medical imaging due to the scarcity of annotated data and the inefficiency of augmentation strategies. To address these issues, we propose a novel domain-oriented direction selector to replace the random augmentation strategy used in VIRM. Our method leverages inter-domain covariance as a guide for the augmentation direction, steering data augmentation towards the target domain. This approach effectively reduces domain discrepancies and enhances generalization performance. Experiments on a multi-center diabetic retinopathy dataset demonstrate that our method outperforms state-of-the-art approaches, particularly under limited data conditions and significant domain heterogeneity.

Flow: A Modular Approach to Automated Agentic Workflow Generation

Jan 14, 2025

Abstract:Multi-agent frameworks powered by large language models (LLMs) have demonstrated great success in automated planning and task execution. However, the effective adjustment of agentic workflows during execution has not been well studied. Effective workflow adjustment is crucial, as in many real-world scenarios the initial plan must adjust to unforeseen challenges and changing conditions in real time to ensure the efficient execution of complex tasks. In this paper, we define workflows as activity-on-vertex (AOV) graphs. We continuously refine the workflow by dynamically adjusting task allocations based on historical performance and previous AOV graphs with LLM agents. To further enhance system performance, we emphasize modularity in workflow design based on measuring parallelism and dependence complexity. Our proposed multi-agent framework achieves efficient concurrent execution of sub-tasks, goal achievement, and error tolerance. Empirical results across different practical tasks demonstrate dramatic improvements in the efficiency of multi-agent frameworks through dynamic workflow updating and modularization.
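
To make the activity-on-vertex (AOV) idea concrete, the sketch below represents a workflow as a dependency graph and groups sub-tasks into stages that could run concurrently. It is an illustration only, not the Flow framework's implementation: the task names and the `parallel_stages` helper are hypothetical, and it relies on Python's standard `graphlib` module (Python 3.9+).

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def parallel_stages(deps: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into stages; tasks within a stage have no unmet dependencies.

    `deps` maps each task (a vertex) to the set of tasks it depends on.
    """
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the workflow has a cycle
    stages: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every task that can start now
        stages.append(ready)
        sorter.done(*ready)  # mark the stage finished to unlock successors
    return stages


# Hypothetical workflow: two independent sub-tasks feed a third, then a review.
workflow = {
    "draft": set(),
    "research": set(),
    "write": {"draft", "research"},
    "review": {"write"},
}
stages = parallel_stages(workflow)
```

Wider stages indicate more available parallelism, which is one simple way to compare alternative workflow graphs for the same task.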

Ranked from Within: Ranking Large Multimodal Models for Visual Question Answering Without Labels

Dec 09, 2024

Abstract:As large multimodal models (LMMs) are increasingly deployed across diverse applications, the need for adaptable, real-world model ranking has become paramount. Traditional evaluation methods are largely dataset-centric, relying on fixed, labeled datasets and supervised metrics, which are resource-intensive and may lack generalizability to novel scenarios, highlighting the importance of unsupervised ranking. In this work, we explore unsupervised model ranking for LMMs by leveraging their uncertainty signals, such as softmax probabilities. We evaluate state-of-the-art LMMs (e.g., LLaVA) across visual question answering benchmarks, analyzing how uncertainty-based metrics can reflect model performance. Our findings show that uncertainty scores derived from softmax distributions provide a robust, consistent basis for ranking models across varied tasks. This finding enables the ranking of LMMs on real-world, unlabeled data for visual question answering, providing a practical approach for selecting models across diverse domains without requiring manual annotation.
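
As a rough illustration of ranking models by an uncertainty signal, the sketch below scores each model by its average maximum softmax probability on unlabeled inputs and sorts models by that score. The abstract does not state which uncertainty metric the paper uses, so this choice, along with the model names and the probability values, is an assumption made for the example.

```python
from typing import Dict, List, Sequence

ProbRows = Sequence[Sequence[float]]  # one softmax distribution per input


def mean_max_prob(prob_rows: ProbRows) -> float:
    """Average of the highest class probability across inputs (a confidence proxy)."""
    if not prob_rows:
        raise ValueError("need at least one prediction")
    return sum(max(row) for row in prob_rows) / len(prob_rows)


def rank_models(outputs: Dict[str, ProbRows]) -> List[str]:
    """Order model names from most to least confident; no labels are needed."""
    return sorted(outputs, key=lambda name: mean_max_prob(outputs[name]), reverse=True)


# Hypothetical softmax outputs from two models on the same two questions.
ranking = rank_models({
    "model_a": [[0.6, 0.4], [0.5, 0.5]],
    "model_b": [[0.9, 0.1], [0.8, 0.2]],
})
```

Whether higher confidence tracks higher accuracy depends on how well calibrated the models are, which is the kind of relationship the paper evaluates empirically.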
