Yiliao Song

Cultural Bias Matters: A Cross-Cultural Benchmark Dataset and Sentiment-Enriched Model for Understanding Multimodal Metaphors

Jun 08, 2025

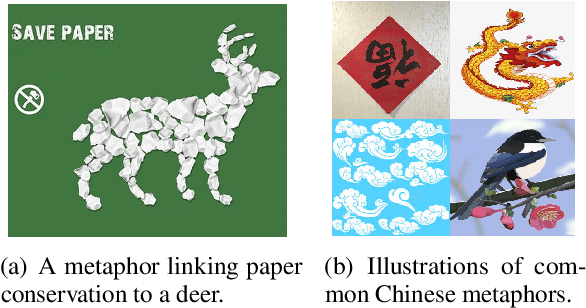

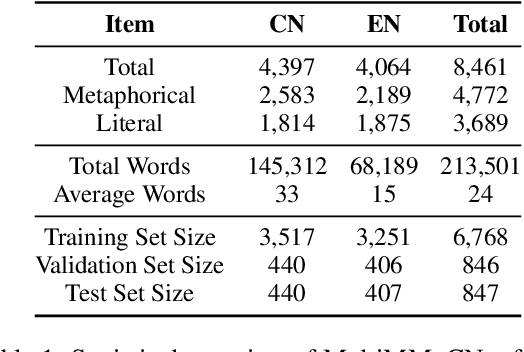

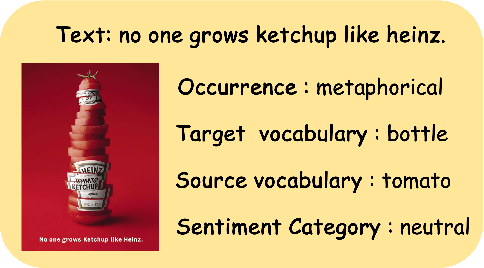

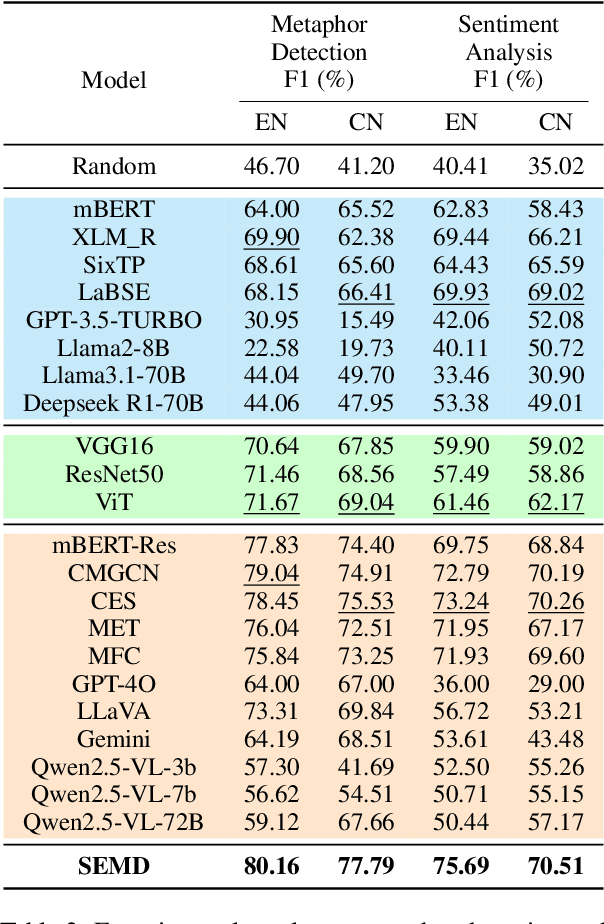

Abstract: Metaphors are pervasive in communication, making them crucial for natural language processing (NLP). Previous research on automatic metaphor processing predominantly relies on training data consisting of English samples, which often reflect Western European or North American biases. This cultural skew can lead to an overestimation of model performance and contributions to NLP progress. However, the impact of cultural bias on metaphor processing, particularly in multimodal contexts, remains largely unexplored. To address this gap, we introduce MultiMM, a Multicultural Multimodal Metaphor dataset designed for cross-cultural studies of metaphor in Chinese and English. MultiMM consists of 8,461 text-image advertisement pairs, each accompanied by fine-grained annotations, providing a deeper understanding of multimodal metaphors beyond a single cultural domain. Additionally, we propose Sentiment-Enriched Metaphor Detection (SEMD), a baseline model that integrates sentiment embeddings to enhance metaphor comprehension across cultural backgrounds. Experimental results validate the effectiveness of SEMD on metaphor detection and sentiment analysis tasks. We hope this work increases awareness of cultural bias in NLP research and contributes to the development of fairer and more inclusive language models. Our dataset and code are available at https://github.com/DUTIR-YSQ/MultiMM.

Flow: A Modular Approach to Automated Agentic Workflow Generation

Jan 14, 2025

Abstract: Multi-agent frameworks powered by large language models (LLMs) have demonstrated great success in automated planning and task execution. However, the effective adjustment of agentic workflows during execution has not been well studied. Effective workflow adjustment is crucial because, in many real-world scenarios, the initial plan must adapt to unforeseen challenges and changing conditions in real time to ensure the efficient execution of complex tasks. In this paper, we define workflows as activity-on-vertex (AOV) graphs. We continuously refine the workflow by dynamically adjusting task allocations with LLM agents, based on historical performance and previous AOV graphs. To further enhance system performance, we emphasize modularity in workflow design based on measuring parallelism and dependence complexity. Our proposed multi-agent framework achieves efficient concurrent sub-task execution, goal achievement, and error tolerance. Empirical results across different practical tasks demonstrate dramatic improvements in the efficiency of multi-agent frameworks through dynamic workflow updating and modularization.
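To make the AOV idea concrete, here is a minimal sketch, not the Flow implementation: tasks are vertices, edges are dependencies, and tasks whose dependencies are all finished can run concurrently. The function name `parallel_levels` and the example task names are hypothetical, and the grouping uses a standard Kahn-style topological sort as a stand-in for whatever scheduling the paper actually uses.

```python
from collections import defaultdict


def parallel_levels(dependencies):
    """Group the tasks of an AOV graph into levels that can run concurrently.

    `dependencies` maps each task name to the list of tasks it depends on.
    (Hypothetical helper for illustration; not taken from the paper.)
    """
    indegree = {task: len(deps) for task, deps in dependencies.items()}
    dependents = defaultdict(list)
    for task, deps in dependencies.items():
        for dep in deps:
            if dep not in dependencies:
                raise KeyError(f"unknown dependency: {dep}")
            dependents[dep].append(task)

    levels = []
    scheduled = 0
    # Tasks with no unfinished dependencies are ready to run.
    ready = sorted(t for t, d in indegree.items() if d == 0)
    while ready:
        levels.append(ready)
        scheduled += len(ready)
        next_ready = []
        for task in ready:
            for child in dependents[task]:
                indegree[child] -= 1
                if indegree[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)

    # Any task left unscheduled sits on a dependency cycle.
    if scheduled != len(dependencies):
        raise ValueError("workflow graph contains a cycle")
    return levels


workflow = {
    "plan": [],
    "write_code": ["plan"],
    "write_docs": ["plan"],
    "review": ["write_code", "write_docs"],
}
print(parallel_levels(workflow))
```

In this toy workflow, `write_code` and `write_docs` land in the same level, which is the kind of sub-task concurrency the abstract refers to; a dynamic system would recompute the levels whenever the graph is updated.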

A Unified Solution to Diverse Heterogeneities in One-shot Federated Learning

Oct 28, 2024

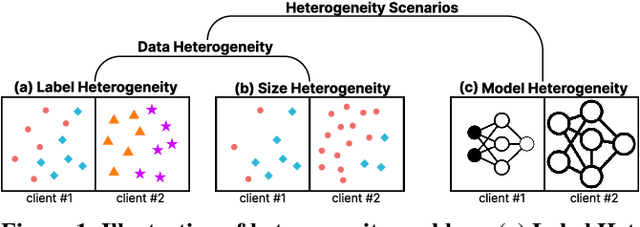

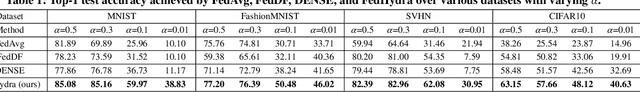

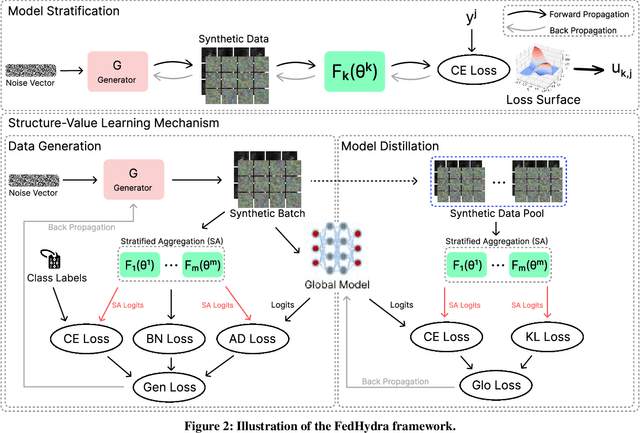

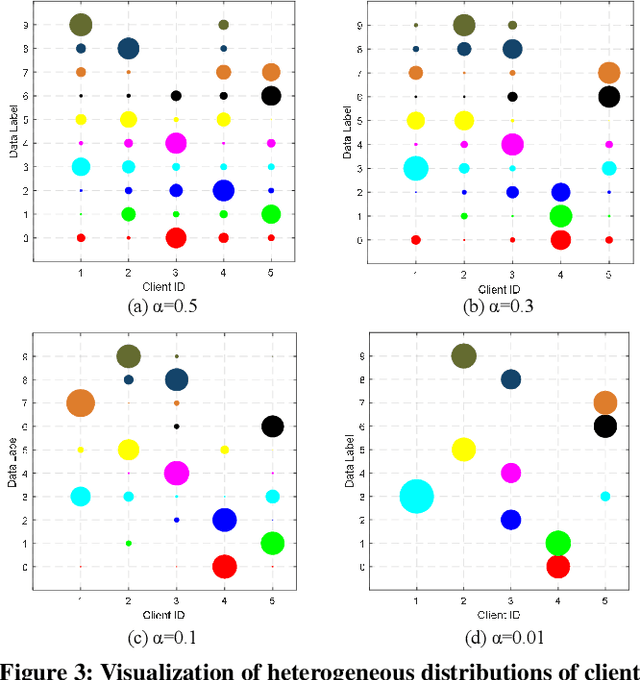

Abstract: One-shot federated learning (FL) limits the communication between the server and clients to a single round, which largely reduces the privacy leakage risks of traditional FL methods that require multiple communication rounds. However, we find that existing one-shot FL frameworks are vulnerable to distributional heterogeneity because they concentrate predominantly on model heterogeneity and pay insufficient attention to data heterogeneity. To fill this gap, we propose a unified, data-free, one-shot federated learning framework (FedHydra) that can effectively address both model and data heterogeneity. Rather than applying existing value-only learning mechanisms, a structure-value learning mechanism is proposed in FedHydra. Specifically, a new stratified learning structure is proposed to cover data heterogeneity, and the value of each item during computation reflects model heterogeneity. By this design, the data and model heterogeneity issues are simultaneously monitored from different aspects during learning. Consequently, FedHydra can effectively mitigate both issues by minimizing their inherent conflicts. We compared FedHydra with three SOTA baselines on four benchmark datasets. Experimental results show that our method outperforms the previous one-shot FL methods in both homogeneous and heterogeneous settings.

Real-time Fuel Leakage Detection via Online Change Point Detection

Oct 13, 2024

Abstract: Early detection of fuel leakage at service stations with underground petroleum storage systems is a crucial task to prevent catastrophic hazards. Current data-driven fuel leakage detection methods employ offline statistical inventory reconciliation, leading to significant detection delays. Consequently, this can result in substantial financial loss and environmental impact on the surrounding community. In this paper, we propose a novel framework called Memory-based Online Change Point Detection (MOCPD) which operates in near real-time, enabling early detection of fuel leakage. MOCPD maintains a collection of representative historical data within a size-constrained memory, along with an adaptively computed threshold. Leaks are detected when the dissimilarity between the latest data and historical memory exceeds the current threshold. An update phase is incorporated in MOCPD to ensure diversity among historical samples in the memory. With this design, MOCPD is more robust and achieves a better recall rate while maintaining a reasonable precision score. We have conducted a variety of experiments comparing MOCPD to commonly used online change point detection (CPD) baselines on real-world fuel variance data with induced leakages, actual fuel leakage data, and benchmark CPD datasets. Overall, MOCPD consistently outperforms the baseline methods in terms of detection accuracy, demonstrating its applicability to fuel leakage detection and CPD problems.
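The memory-plus-threshold loop described in the abstract can be sketched roughly as follows. This is an illustrative toy, not MOCPD itself: the abstract does not specify the dissimilarity measure, the threshold rule, or the update policy, so the choices below are assumptions (nearest-neighbour absolute distance on scalar readings, a mean plus `k` standard deviations threshold over past scores, and eviction of the most redundant sample to keep the memory diverse). The class name `MemoryChangeDetector` and all parameters are hypothetical.

```python
import statistics


class MemoryChangeDetector:
    """Toy memory-based online change detector (assumed design, not MOCPD)."""

    def __init__(self, capacity=8, k=3.0, warmup=5):
        self.capacity = capacity  # maximum number of stored samples (>= 1)
        self.k = k                # threshold width in standard deviations
        self.warmup = warmup      # scores needed before detection starts
        self.memory = []          # representative historical samples
        self.scores = []          # dissimilarity scores of normal samples

    def _dissimilarity(self, x):
        # Assumption: distance to the closest sample held in memory.
        return min(abs(x - m) for m in self.memory)

    def _threshold(self):
        # Assumption: adaptive threshold from the history of normal scores.
        mean = statistics.fmean(self.scores)
        return mean + self.k * statistics.pstdev(self.scores)

    def _remember(self, x):
        self.memory.append(x)
        if len(self.memory) > self.capacity:
            # Keep the memory diverse: evict the sample that lies closest
            # to another stored sample, i.e. the most redundant one.
            def gap(i):
                return min(abs(self.memory[i] - m)
                           for j, m in enumerate(self.memory) if j != i)
            self.memory.pop(min(range(len(self.memory)), key=gap))

    def update(self, x):
        """Process one reading; return True if it is flagged as a change."""
        if not self.memory:
            self._remember(x)
            return False
        score = self._dissimilarity(x)
        if len(self.scores) >= self.warmup and score > self._threshold():
            return True  # flagged readings are not absorbed into memory
        self.scores.append(score)
        self._remember(x)
        return False


stream = [0.0, 0.1, -0.1, 0.05, -0.05, 0.12, -0.08, 0.02, 5.0]
detector = MemoryChangeDetector()
flags = [detector.update(x) for x in stream]
print(flags)
```

On this synthetic stream only the final jump to `5.0` is flagged. A practical detector would also need a policy for what happens after a change is flagged, which this sketch leaves out.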

Detecting Machine-Generated Texts by Multi-Population Aware Optimization for Maximum Mean Discrepancy

Feb 29, 2024

Abstract: Large language models (LLMs) such as ChatGPT have exhibited remarkable performance in generating human-like texts. However, machine-generated texts (MGTs) may carry critical risks, such as plagiarism issues, misleading information, or hallucination issues. Therefore, it is urgent and important to detect MGTs in many situations. Unfortunately, it is challenging to distinguish MGTs from human-written texts because the distributional discrepancy between them is often very subtle due to the remarkable performance of LLMs. In this paper, we seek to exploit maximum mean discrepancy (MMD) to address this issue, since MMD can identify distributional discrepancies well. However, directly training a detector with MMD using diverse MGTs will incur a significantly increased variance of MMD, since MGTs may contain multiple text populations due to various LLMs. This will severely impair MMD's ability to measure the difference between two samples. To tackle this, we propose a novel multi-population aware optimization method for MMD called MMD-MP, which can avoid variance increases and thus improve the stability of measuring the distributional discrepancy. Relying on MMD-MP, we develop two methods for paragraph-based and sentence-based detection, respectively. Extensive experiments on various LLMs, e.g., GPT2 and ChatGPT, show superior detection performance of our MMD-MP. The source code is available at https://github.com/ZSHsh98/MMD-MP.
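For readers unfamiliar with MMD, the following is a minimal sketch of the plain (biased, V-statistic) estimate of squared MMD with a Gaussian kernel. It is the standard textbook quantity, not the MMD-MP objective from the paper, and the function names, the fixed `bandwidth`, and the toy feature vectors are assumptions made for illustration.

```python
import math


def gaussian_kernel(x, y, bandwidth=1.0):
    """Gaussian (RBF) kernel between two equal-length feature vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2 * bandwidth ** 2))


def mmd_squared(xs, ys, bandwidth=1.0):
    """Biased estimate of squared MMD between samples xs and ys.

    MMD^2 = E[k(x, x')] + E[k(y, y')] - 2 E[k(x, y)],
    with each expectation replaced by an average over all sample pairs.
    """
    def mean_kernel(a, b):
        total = sum(gaussian_kernel(p, q, bandwidth) for p in a for q in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2 * mean_kernel(xs, ys)


# Toy 1-D "features"; in practice these would be text representations.
human = [(0.0,), (0.1,), (-0.1,)]
similar = [(0.05,), (-0.05,), (0.0,)]
shifted = [(3.0,), (3.1,), (2.9,)]

print(mmd_squared(human, similar))  # small: the samples overlap
print(mmd_squared(human, shifted))  # large: the samples are far apart
```

The estimate is near zero when the two samples come from similar distributions and grows as they separate, which is why it can serve as a detection signal. The abstract's point is that mixing several MGT populations inflates the variance of this quantity, which MMD-MP is designed to avoid.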
