Jiawei Huang

SDP: A Unified Protocol and Benchmarking Framework for Reproducible Wireless Sensing

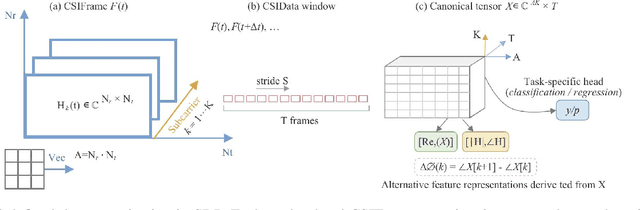

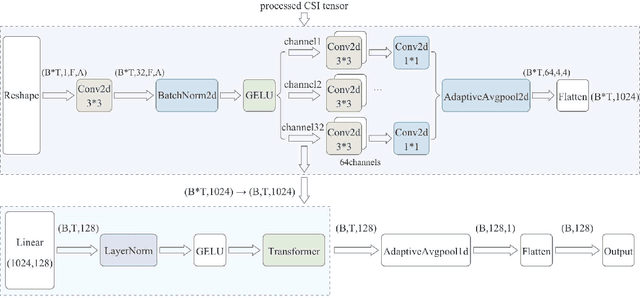

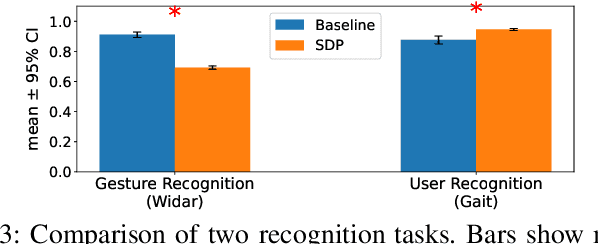

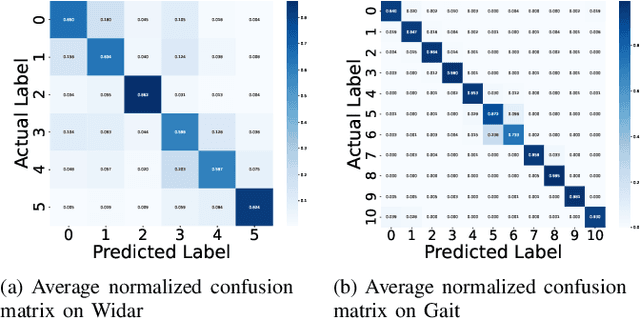

Jan 13, 2026

Abstract: Learning-based wireless sensing has made rapid progress, yet the field still lacks a unified and reproducible experimental foundation. Unlike computer vision, wireless sensing relies on hardware-dependent channel measurements whose representations, preprocessing pipelines, and evaluation protocols vary significantly across devices and datasets, hindering fair comparison and reproducibility. This paper proposes the Sensing Data Protocol (SDP), a protocol-level abstraction and unified benchmark for scalable wireless sensing. SDP acts as a standardization layer that decouples learning tasks from hardware heterogeneity. To this end, SDP enforces deterministic physical-layer sanitization, canonical tensor construction, and standardized training and evaluation procedures, so that learning performance does not depend on hardware-specific artifacts. Rather than introducing task-specific models, SDP establishes a principled protocol foundation for fair evaluation across diverse sensing tasks and platforms. Extensive experiments demonstrate that SDP achieves competitive accuracy while substantially improving stability, reducing inter-seed performance variance by orders of magnitude on complex activity recognition tasks. A real-world experiment using commercial off-the-shelf Wi-Fi hardware further illustrates the protocol's interoperability across heterogeneous hardware. By providing a unified protocol and benchmark, SDP enables reproducible and comparable wireless sensing research and supports the transition from ad hoc experimentation toward reliable engineering practice.

A Sensing Dataset Protocol for Benchmarking and Multi-Task Wireless Sensing

Dec 13, 2025

Abstract: Wireless sensing has become a fundamental enabler for intelligent environments, supporting applications such as human detection, activity recognition, localization, and vital sign monitoring. Despite rapid advances, existing datasets and pipelines remain fragmented across sensing modalities, hindering fair comparison, transfer, and reproducibility. We propose the Sensing Dataset Protocol (SDP), a protocol-level specification and benchmark framework for large-scale wireless sensing. SDP defines how heterogeneous wireless signals are mapped into a unified perception data-block schema through lightweight synchronization, frequency-time alignment, and resampling, while a Canonical Polyadic-Alternating Least Squares (CP-ALS) pooling stage provides a task-agnostic representation that preserves multipath, spectral, and temporal structures. Built upon this protocol, a unified benchmark is established for detection, recognition, and vital-sign estimation with consistent preprocessing, training, and evaluation. Experiments under the cross-user split demonstrate that SDP significantly reduces variance (approximately 88%) across seeds while maintaining competitive accuracy and latency, confirming its value as a reproducible foundation for multi-modal and multi-task sensing research.
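To make the "variance across seeds" figure concrete, the following is a minimal sketch of how a relative reduction in inter-seed variance could be computed. The abstract does not say which variance estimator or metric the authors use, so the function name `relative_variance_reduction`, the use of population variance, and the per-seed accuracy values are all assumptions made for illustration.

```python
import statistics


def relative_variance_reduction(baseline_scores, protocol_scores):
    """Return the fractional drop in inter-seed variance (0.88 means 88% lower)."""
    var_base = statistics.pvariance(baseline_scores)
    var_proto = statistics.pvariance(protocol_scores)
    if var_base == 0:
        raise ValueError("baseline variance is zero; reduction is undefined")
    return 1.0 - var_proto / var_base


# Hypothetical per-seed accuracies, invented for illustration only.
# They are NOT results from the paper.
baseline = [0.80, 0.90, 0.70, 0.85, 0.75]
with_protocol = [0.82, 0.84, 0.80, 0.83, 0.81]

reduction = relative_variance_reduction(baseline, with_protocol)
print(f"inter-seed variance reduction: {reduction:.1%}")
```

Because both runs use the same number of seeds, the ratio is the same whether population or sample variance is used; only the choice of metric (accuracy, F1, error) changes the result.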

Transformer IMU Calibrator: Dynamic On-body IMU Calibration for Inertial Motion Capture

Jun 12, 2025

Abstract: In this paper, we propose a novel dynamic calibration method for sparse inertial motion capture systems, which is the first to break the restrictive absolute static assumption in IMU calibration, i.e., that the coordinate drift $R_{G'G}$ and measurement offset $R_{BS}$ remain constant during the entire motion, thereby significantly expanding the application scenarios of such systems. Specifically, we achieve real-time estimation of $R_{G'G}$ and $R_{BS}$ under two relaxed assumptions: i) the matrices change negligibly in a short time window; ii) the human movements/IMU readings are diverse in such a time window. Intuitively, the first assumption reduces the number of candidate matrices, and the second provides diverse constraints; together they greatly reduce the solution space and allow accurate estimation of $R_{G'G}$ and $R_{BS}$ from a short history of IMU readings in real time. To achieve this, we created synthetic datasets of paired $R_{G'G}$, $R_{BS}$ matrices and IMU readings, and learned their mappings using a Transformer-based model. We also designed a calibration trigger based on the diversity of IMU readings to ensure that assumption ii) is met before applying our method. To our knowledge, we are the first to achieve implicit IMU calibration (i.e., seamlessly putting IMUs into use without the need for an explicit calibration process), as well as the first to enable long-term and accurate motion capture using sparse IMUs. The code and dataset are available at https://github.com/ZuoCX1996/TIC.

T2A-Feedback: Improving Basic Capabilities of Text-to-Audio Generation via Fine-grained AI Feedback

May 15, 2025

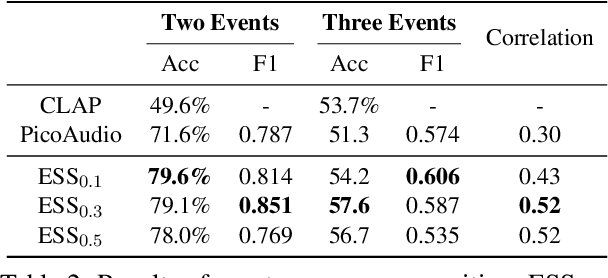

Abstract: Text-to-audio (T2A) generation has achieved remarkable progress in generating a variety of audio outputs from language prompts. However, current state-of-the-art T2A models still struggle to satisfy human preferences for prompt-following and acoustic quality when generating complex multi-event audio. To improve model performance in these high-level applications, we propose to enhance the model's basic capabilities with AI feedback learning. First, we introduce fine-grained AI audio scoring pipelines to: 1) verify whether each event in the text prompt is present in the audio (Event Occurrence Score), 2) detect deviations in event sequences from the language description (Event Sequence Score), and 3) assess the overall acoustic and harmonic quality of the generated audio (Acoustic&Harmonic Quality). We evaluate these three automatic scoring pipelines and find that they correlate significantly better with human preferences than other evaluation metrics. This highlights their value as both feedback signals and evaluation metrics. Utilizing our robust scoring pipelines, we construct a large audio preference dataset, T2A-FeedBack, which contains 41k prompts and 249k audio clips, each accompanied by detailed scores. Moreover, we introduce T2A-EpicBench, a benchmark that focuses on long captions, multi-event, and story-telling scenarios, aiming to evaluate the advanced capabilities of T2A models. Finally, we demonstrate how T2A-FeedBack can enhance a current state-of-the-art audio model. With simple preference tuning, the audio generation model exhibits significant improvements in both simple (AudioCaps test set) and complex (T2A-EpicBench) scenarios.

Steering No-Regret Agents in MFGs under Model Uncertainty

Mar 12, 2025

Abstract: Incentive design is a popular framework for guiding agents' learning dynamics towards desired outcomes by providing additional payments beyond intrinsic rewards. However, most existing works focus on a finite, small set of agents or assume complete knowledge of the game, limiting their applicability to real-world scenarios involving large populations and model uncertainty. To address this gap, we study the design of steering rewards in Mean-Field Games (MFGs) with density-independent transitions, where both the transition dynamics and intrinsic reward functions are unknown. This setting presents non-trivial challenges, as the mediator must incentivize the agents to explore for its model learning under uncertainty, while simultaneously steering them to converge to desired behaviors without incurring excessive incentive payments. Assuming agents exhibit no(-adaptive) regret behaviors, we contribute novel optimistic exploration algorithms. Theoretically, we establish sub-linear regret guarantees for the cumulative gaps between the agents' behaviors and the desired ones. In terms of the steering cost, we demonstrate that our total incentive payments incur only sub-linear excess, competing with a baseline steering strategy that stabilizes the target policy as an equilibrium. Our work presents an effective framework for steering agents' behaviors in large-population systems under uncertainty.

Can RLHF be More Efficient with Imperfect Reward Models? A Policy Coverage Perspective

Feb 26, 2025

Abstract: Sample efficiency is critical for online Reinforcement Learning from Human Feedback (RLHF). While existing works investigate sample-efficient online exploration strategies, the potential of utilizing misspecified yet relevant reward models to accelerate learning remains underexplored. This paper studies how to transfer knowledge from those imperfect reward models in online RLHF. We start by identifying a novel property of the KL-regularized RLHF objective: *a policy's ability to cover the optimal policy is captured by its sub-optimality*. Building on this insight, we propose a theoretical transfer learning algorithm with provable benefits compared to standard online learning. Our approach achieves low regret in the early stage by quickly adapting to the best available source reward models without prior knowledge of their quality, and over time, it attains an $\tilde{O}(\sqrt{T})$ regret bound *independent* of structural complexity measures. Inspired by our theoretical findings, we develop an empirical algorithm with improved computational efficiency, and demonstrate its effectiveness empirically in summarization tasks.
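For readers unfamiliar with the objective named above, the KL-regularized RLHF objective is commonly written as follows. This is the standard textbook form, not a formula taken from the paper; the symbols $\rho$ (prompt distribution), $r^*$ (true reward), $\pi_{\mathrm{ref}}$ (reference policy), and $\beta > 0$ (regularization strength) are notation assumed here, and the paper's own notation may differ.

$$
J_\beta(\pi) = \mathbb{E}_{x \sim \rho,\; y \sim \pi(\cdot \mid x)}\big[ r^*(x, y) \big] - \beta\, \mathbb{E}_{x \sim \rho}\Big[ \mathrm{KL}\big( \pi(\cdot \mid x) \,\big\|\, \pi_{\mathrm{ref}}(\cdot \mid x) \big) \Big]
$$

Its maximizer has the well-known closed form

$$
\pi^*_\beta(y \mid x) \propto \pi_{\mathrm{ref}}(y \mid x)\, \exp\!\big( r^*(x, y) / \beta \big),
$$

which ties the optimal policy to the reward through $\pi_{\mathrm{ref}}$ and $\beta$; this is the kind of structure in which a relationship between coverage of the optimal policy and sub-optimality can be stated.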

Low-altitude Friendly-Jamming for Satellite-Maritime Communications via Generative AI-enabled Deep Reinforcement Learning

Jan 26, 2025

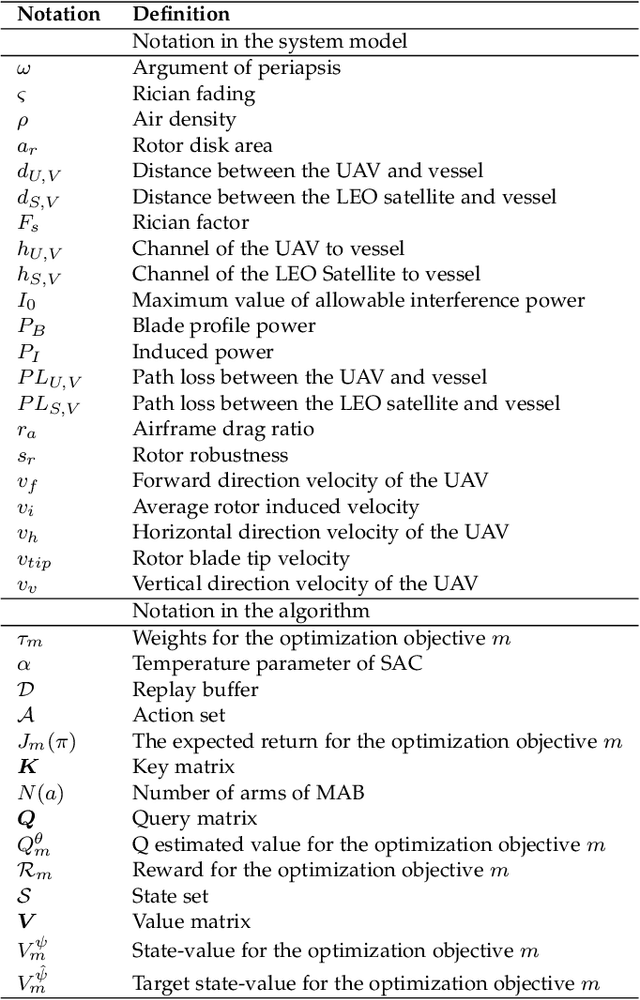

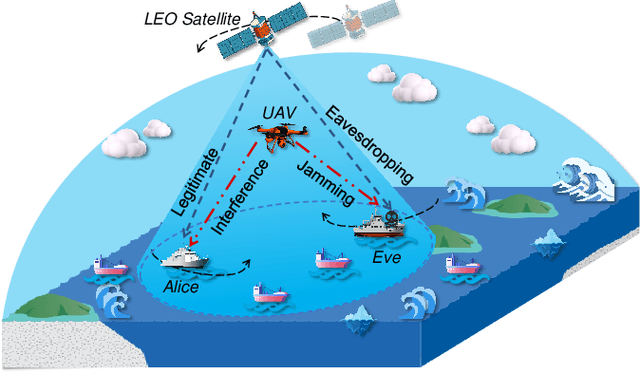

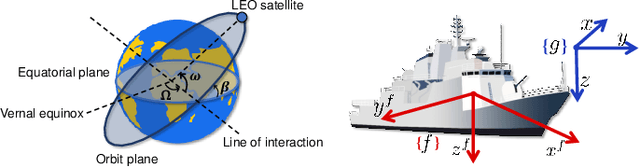

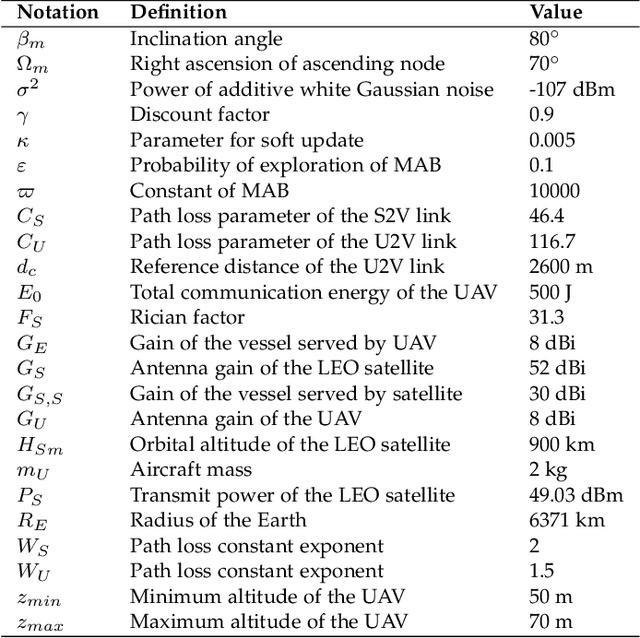

Abstract: Low Earth Orbit (LEO) satellites can be used to assist maritime wireless communications for data transmission across wide-ranging areas. However, the extensive coverage of LEO satellites, combined with the openness of channels, can expose the communication process to security risks. This paper presents a low-altitude friendly-jamming LEO satellite-maritime communication system enabled by an unmanned aerial vehicle (UAV) to ensure data security at the physical layer. Since such a system requires trade-off policies that balance the secrecy rate and energy consumption of the UAV to meet evolving scenario demands, we formulate a secure satellite-maritime communication multi-objective optimization problem (SSMCMOP). In order to solve the dynamic and long-term optimization problem, we reformulate it into a Markov decision process. We then propose a transformer-enhanced soft actor critic (TransSAC) algorithm, which is a generative artificial intelligence-enabled deep reinforcement learning approach to solve the reformulated problem, thereby capturing global dependencies and diversely exploring weights. Simulation results demonstrate that TransSAC outperforms various baselines and achieves an optimal secrecy rate while effectively minimizing the energy consumption of the UAV. Moreover, the results identify more suitable constraint values for the system.

Spatiotemporal Decoupling for Efficient Vision-Based Occupancy Forecasting

Nov 21, 2024

Abstract: The task of occupancy forecasting (OCF) involves utilizing past and present perception data to predict future occupancy states of the environment surrounding an autonomous vehicle, which is critical for downstream tasks such as obstacle avoidance and path planning. Existing 3D OCF approaches struggle to predict plausible spatial details for movable objects and suffer from slow inference speeds because they neglect the bias and uneven distribution of changing occupancy states in both space and time. In this paper, we propose a novel spatiotemporal decoupling vision-based paradigm to explicitly tackle the bias and achieve both effective and efficient 3D OCF. To tackle spatial bias in empty areas, we introduce a novel spatial representation that decouples the conventional dense 3D format into 2D bird's-eye view (BEV) occupancy with corresponding height values, enabling 3D OCF to be derived only from 2D predictions, thus enhancing efficiency. To reduce temporal bias on static voxels, we design temporal decoupling to improve end-to-end OCF by temporally associating instances via predicted flows. We develop an efficient multi-head network, EfficientOCF, to achieve 3D OCF with our devised spatiotemporally decoupled representation. A new metric, conditional IoU (C-IoU), is also introduced to provide a robust 3D OCF performance assessment, especially in datasets with missing or incomplete annotations. The experimental results demonstrate that EfficientOCF surpasses existing baseline methods in accuracy and efficiency, achieving state-of-the-art performance with a fast inference time of 82.33 ms on a single GPU. Our code will be released as open source.
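The spatial decoupling described above, a dense 3D grid replaced by a 2D BEV occupancy map plus per-cell height values, can be sketched as below. This is only an illustration under stated assumptions: the abstract does not specify the encoding, so the function names `to_bev_and_height` and `to_dense`, the boolean `(X, Y, Z)` grid layout, and the assumption that every occupied column is solid from the ground up to its height are all hypothetical.

```python
import numpy as np


def to_bev_and_height(occ):
    """Split a dense (X, Y, Z) boolean grid into a BEV mask and a per-cell height."""
    bev = occ.any(axis=2)
    z_dim = occ.shape[2]
    # Scanning each column from the top, argmax finds the first occupied voxel;
    # z_dim minus that offset is the highest occupied index + 1.
    top = z_dim - np.argmax(occ[:, :, ::-1], axis=2)
    # Empty columns get height 0 (argmax alone would report a full column).
    height = np.where(bev, top, 0)
    return bev, height


def to_dense(bev, height, z_dim):
    """Rebuild a dense grid, ASSUMING each occupied column is solid from z=0 up."""
    z = np.arange(z_dim)
    return bev[:, :, None] & (z[None, None, :] < height[:, :, None])


# Tiny hypothetical scene: two occupied columns in a 2 x 2 x 4 grid.
occ = np.zeros((2, 2, 4), dtype=bool)
occ[0, 0, :3] = True
occ[1, 1, :1] = True

bev, height = to_bev_and_height(occ)
restored = to_dense(bev, height, z_dim=4)
print(height)
print(np.array_equal(restored, occ))
```

The 2D form stores `X * Y` values per map instead of `X * Y * Z` voxels, which is where the efficiency gain comes from; the cost is that overhanging structures cannot be represented under the solid-column assumption used in this sketch.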

MimicTalk: Mimicking a personalized and expressive 3D talking face in minutes

Oct 09, 2024

Abstract: Talking face generation (TFG) aims to animate a target identity's face to create realistic talking videos. Personalized TFG is a variant that emphasizes the perceptual identity similarity of the synthesized result (from the perspective of appearance and talking style). While previous works typically solve this problem by learning an individual neural radiance field (NeRF) for each identity to implicitly store its static and dynamic information, we find this approach inefficient and poorly generalizable due to the per-identity-per-training framework and the limited training data. To this end, we propose MimicTalk, the first attempt to exploit the rich knowledge from a NeRF-based person-agnostic generic model for improving the efficiency and robustness of personalized TFG. To be specific, (1) we first come up with a person-agnostic 3D TFG model as the base model and propose to adapt it to a specific identity; (2) we propose a static-dynamic-hybrid adaptation pipeline to help the model learn the personalized static appearance and facial dynamic features; (3) to generate the facial motion of the personalized talking style, we propose an in-context stylized audio-to-motion model that mimics the implicit talking style provided in the reference video without the information loss incurred by an explicit style representation. The adaptation process to an unseen identity can be performed in 15 minutes, which is 47 times faster than previous person-dependent methods. Experiments show that our MimicTalk surpasses previous baselines regarding video quality, efficiency, and expressiveness. Source code and video samples are available at https://mimictalk.github.io.

MulliVC: Multi-lingual Voice Conversion With Cycle Consistency

Aug 08, 2024

Abstract: Voice conversion aims to modify the source speaker's voice to resemble the target speaker while preserving the original speech content. Despite notable recent advancements in voice conversion, multi-lingual voice conversion (including both monolingual and cross-lingual scenarios) has yet to be extensively studied. It faces two main challenges: 1) the considerable variability in prosody and articulation habits across languages; and 2) the rarity of paired multi-lingual datasets from the same speaker. In this paper, we propose MulliVC, a novel voice conversion system that only converts timbre and keeps the original content and source language prosody without multi-lingual paired data. Specifically, each training step of MulliVC contains three substeps: in step one, the model is trained with monolingual speech data; then, steps two and three take inspiration from back translation, constructing a cyclical process to disentangle the timbre from other information (content, prosody, and other language-related information) in the absence of multi-lingual data from the same speaker. Both objective and subjective results indicate that MulliVC significantly surpasses other methods in both monolingual and cross-lingual contexts, demonstrating the system's efficacy and the viability of the three-step approach with cycle consistency. Audio samples can be found on our demo page (mullivc.github.io).
