Siqi Wang

Generative Diffusion Augmentation with Quantum-Enhanced Discrimination for Medical Image Diagnosis

Jan 26, 2026

Abstract:In biomedical engineering, artificial intelligence has become a pivotal tool for enhancing medical diagnostics, particularly in medical image classification tasks such as detecting pneumonia from chest X-rays and breast cancer screening. However, real-world medical datasets frequently exhibit severe class imbalance, where positive samples substantially outnumber negative samples, leading to biased models with low recall rates for minority classes. This imbalance not only compromises diagnostic accuracy but also poses clinical misdiagnosis risks. To address this challenge, we propose SDA-QEC (Simplified Diffusion Augmentation with Quantum-Enhanced Classification), an innovative framework that integrates simplified diffusion-based data augmentation with quantum-enhanced feature discrimination. Our approach employs a lightweight diffusion augmentor to generate high-quality synthetic samples for minority classes, rebalancing the training distribution. Subsequently, a quantum feature layer embedded within MobileNetV2 architecture enhances the model's discriminative capability through high-dimensional feature mapping in Hilbert space. Comprehensive experiments on coronary angiography image classification demonstrate that SDA-QEC achieves 98.33% accuracy, 98.78% AUC, and 98.33% F1-score, significantly outperforming classical baselines including ResNet18, MobileNetV2, DenseNet121, and VGG16. Notably, our framework simultaneously attains 98.33% sensitivity and 98.33% specificity, achieving a balanced performance critical for clinical deployment. The proposed method validates the feasibility of integrating generative augmentation with quantum-enhanced modeling in real-world medical imaging tasks, offering a novel research pathway for developing highly reliable medical AI systems in small-sample, highly imbalanced, and high-risk diagnostic scenarios.

Domain Generalization with Quantum Enhancement for Medical Image Classification: A Lightweight Approach for Cross-Center Deployment

Jan 25, 2026

Abstract:Medical image artificial intelligence models often achieve strong performance in single-center or single-device settings, yet their effectiveness frequently deteriorates in real-world cross-center deployment due to domain shift, limiting clinical generalizability. To address this challenge, we propose a lightweight domain generalization framework with quantum-enhanced collaborative learning, enabling robust generalization to unseen target domains without relying on real multi-center labeled data. Specifically, a MobileNetV2-based domain-invariant encoder is constructed and optimized through three key components: (1) multi-domain imaging shift simulation using brightness, contrast, sharpening, and noise perturbations to emulate heterogeneous acquisition conditions; (2) domain-adversarial training with gradient reversal to suppress domain-discriminative features; and (3) a lightweight quantum feature enhancement layer that applies parameterized quantum circuits for nonlinear feature mapping and entanglement modeling. In addition, a test-time adaptation strategy is employed during inference to further alleviate distribution shifts. Experiments on simulated multi-center medical imaging datasets demonstrate that the proposed method significantly outperforms baseline models without domain generalization or quantum enhancement on unseen domains, achieving reduced domain-specific performance variance and improved AUC and sensitivity. These results highlight the clinical potential of quantum-enhanced domain generalization under constrained computational resources and provide a feasible paradigm for hybrid quantum-classical medical imaging systems.
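As an illustration of component (1), the sketch below simulates acquisition shifts on a tiny grayscale image using brightness, contrast, and Gaussian-noise perturbations. It is a minimal sketch under stated assumptions, not the authors' implementation: the function name `simulate_domain`, its parameters, and the choice to scale contrast around the image mean are all hypothetical, and sharpening is omitted for brevity.

```python
import random


def clip01(value):
    """Clamp a pixel intensity to the [0, 1] range."""
    return min(1.0, max(0.0, value))


def simulate_domain(image, brightness=0.0, contrast=1.0, noise_std=0.0, seed=0):
    """Return a perturbed copy of `image` (rows of floats in [0, 1]).

    Hypothetical helper: contrast is scaled around the image mean, then a
    brightness offset and optional Gaussian noise are added, then clipped.
    """
    rng = random.Random(seed)
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    shifted = []
    for row in image:
        new_row = []
        for p in row:
            v = (p - mean) * contrast + mean  # contrast change around the mean
            v += brightness                   # global brightness offset
            if noise_std > 0.0:
                v += rng.gauss(0.0, noise_std)  # sensor-like additive noise
            new_row.append(clip01(v))
        shifted.append(new_row)
    return shifted


# Three illustrative "virtual centers" derived from one source image.
source = [[0.2, 0.4], [0.6, 0.8]]
virtual_domains = [
    simulate_domain(source, brightness=0.1),
    simulate_domain(source, contrast=1.5),
    simulate_domain(source, noise_std=0.05, seed=42),
]
```

Each perturbed copy can be tagged with a synthetic domain label, which is the kind of label a domain-adversarial discriminator would then be trained to predict.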

A Lightweight Medical Image Classification Framework via Self-Supervised Contrastive Learning and Quantum-Enhanced Feature Modeling

Jan 23, 2026

Abstract:Intelligent medical image analysis is essential for clinical decision support but is often limited by scarce annotations, constrained computational resources, and suboptimal model generalization. To address these challenges, we propose a lightweight medical image classification framework that integrates self-supervised contrastive learning with quantum-enhanced feature modeling. MobileNetV2 is employed as a compact backbone and pretrained using a SimCLR-style self-supervised paradigm on unlabeled images. A lightweight parameterized quantum circuit (PQC) is embedded as a quantum feature enhancement module, forming a hybrid classical-quantum architecture, which is subsequently fine-tuned on limited labeled data. Experimental results demonstrate that, with only approximately 2-3 million parameters and low computational cost, the proposed method consistently outperforms classical baselines without self-supervised learning or quantum enhancement in terms of Accuracy, AUC, and F1-score. Feature visualization further indicates improved discriminability and representation stability. Overall, this work provides a practical and forward-looking solution for high-performance medical artificial intelligence under resource-constrained settings.
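For readers unfamiliar with SimCLR-style pretraining, the sketch below computes the NT-Xent contrastive loss that SimCLR uses, in plain Python on small embedding lists. It is a minimal sketch, not the paper's code: the function names, the temperature of 0.5, and the toy embeddings are assumptions, and embeddings are assumed to be non-zero vectors.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nt_xent(views_a, views_b, temperature=0.5):
    """NT-Xent loss; views_a[i] and views_b[i] embed two augmentations of image i."""
    z = views_a + views_b
    n = len(views_a)
    total = 0.0
    for i in range(2 * n):
        positive = (i + n) % (2 * n)  # index of the other view of the same image
        # Similarities to every other embedding (self-similarity is excluded).
        logits = [cosine(z[i], z[k]) / temperature for k in range(2 * n) if k != i]
        pos_logit = cosine(z[i], z[positive]) / temperature
        # Numerically stable log-sum-exp over the denominator terms.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - pos_logit
    return total / (2 * n)


# Matching views score a lower loss than mismatched ones.
aligned = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
mismatched = nt_xent([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
```

In a real pipeline the embeddings would come from the backbone's projection head on two random augmentations of each unlabeled image, and the loss would be minimized with automatic differentiation.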

A Lightweight Brain-Inspired Machine Learning Framework for Coronary Angiography: Hybrid Neural Representation and Robust Learning Strategies

Jan 22, 2026

Abstract:Background: Coronary angiography (CAG) is a cornerstone imaging modality for assessing coronary artery disease and guiding interventional treatment decisions. However, in real-world clinical settings, angiographic images are often characterized by complex lesion morphology, severe class imbalance, label uncertainty, and limited computational resources, posing substantial challenges to conventional deep learning approaches in terms of robustness and generalization. Methods: The proposed framework is built upon a pretrained convolutional neural network to construct a lightweight hybrid neural representation. A selective neural plasticity training strategy is introduced to enable efficient parameter adaptation. Furthermore, a brain-inspired attention-modulated loss function, combining Focal Loss with label smoothing, is employed to enhance sensitivity to hard samples and uncertain annotations. Class-imbalance-aware sampling and cosine annealing with warm restarts are adopted to mimic rhythmic regulation and attention allocation mechanisms observed in biological neural systems. Results: Experimental results demonstrate that the proposed lightweight brain-inspired model achieves strong and stable performance in binary coronary angiography classification, yielding competitive accuracy, recall, F1-score, and AUC metrics while maintaining high computational efficiency. Conclusion: This study validates the effectiveness of brain-inspired learning mechanisms in lightweight medical image analysis and provides a biologically plausible and deployable solution for intelligent clinical decision support under limited computational resources.
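The abstract does not specify how Focal Loss and label smoothing are combined, so the sketch below shows one plausible formulation for the binary case: the hard label is softened toward 0.5, and the softened target weights the two focal terms. The function name and the default values of `gamma`, `alpha`, and `smoothing` are assumptions, not the paper's settings.

```python
import math


def smoothed_focal_loss(p, y, gamma=2.0, alpha=0.25, smoothing=0.1, eps=1e-7):
    """Binary focal loss with a label-smoothed target (one possible combination).

    p: predicted probability of the positive class; y: hard label, 0 or 1.
    """
    p = min(1.0 - eps, max(eps, p))               # avoid log(0)
    target = y * (1.0 - smoothing) + 0.5 * smoothing  # soften the hard label
    # Focal terms: confident, correct predictions are down-weighted by gamma.
    positive_term = -alpha * (1.0 - p) ** gamma * math.log(p)
    negative_term = -(1.0 - alpha) * p ** gamma * math.log(1.0 - p)
    return target * positive_term + (1.0 - target) * negative_term


# A poorly predicted positive sample contributes far more than a well predicted one.
easy = smoothed_focal_loss(0.9, 1)
hard = smoothed_focal_loss(0.1, 1)
```

Setting `gamma=0`, `alpha=0.5`, and `smoothing=0` reduces this expression to half of the ordinary binary cross-entropy, which is a convenient sanity check.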

CitySeeker: How Do VLMs Explore Embodied Urban Navigation With Implicit Human Needs?

Dec 18, 2025

Abstract:Vision-Language Models (VLMs) have made significant progress in explicit instruction-based navigation; however, their ability to interpret implicit human needs (e.g., "I am thirsty") in dynamic urban environments remains underexplored. This paper introduces CitySeeker, a novel benchmark designed to assess VLMs' spatial reasoning and decision-making capabilities for exploring embodied urban navigation to address implicit needs. CitySeeker includes 6,440 trajectories across 8 cities, capturing diverse visual characteristics and implicit needs in 7 goal-driven scenarios. Extensive experiments reveal that even top-performing models (e.g., Qwen2.5-VL-32B-Instruct) achieve only 21.1% task completion. We identify three key bottlenecks: error accumulation in long-horizon reasoning, inadequate spatial cognition, and deficient experiential recall. To analyze them further, we investigate a series of exploratory strategies (Backtracking Mechanisms, Enriching Spatial Cognition, and Memory-Based Retrieval, collectively BCR), inspired by human cognitive mapping's emphasis on iterative observation-reasoning cycles and adaptive path optimization. Our analysis provides actionable insights for developing VLMs with the robust spatial intelligence required for tackling "last-mile" navigation challenges.

Boosting RL-Based Visual Reasoning with Selective Adversarial Entropy Intervention

Dec 11, 2025

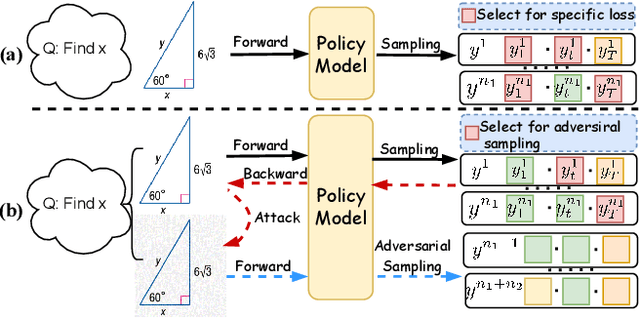

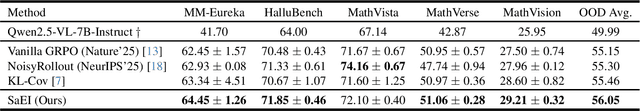

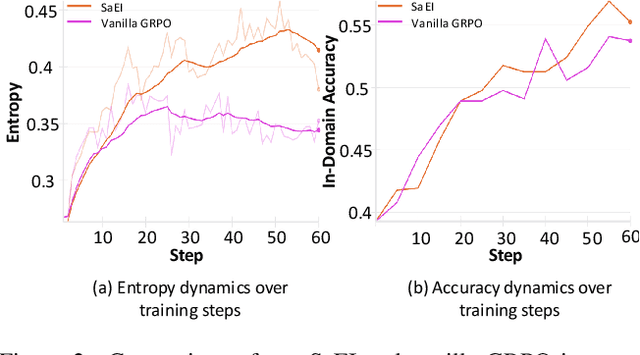

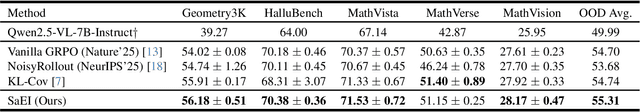

Abstract:Recently, reinforcement learning (RL) has become a common choice in enhancing the reasoning capabilities of vision-language models (VLMs). Considering existing RL-based finetuning methods, entropy intervention turns out to be an effective way to benefit exploratory ability, thereby improving policy performance. Notably, most existing studies intervene in entropy by simply controlling the update of specific tokens during policy optimization of RL. They ignore the entropy intervention during the RL sampling that can boost the performance of GRPO by improving the diversity of responses. In this paper, we propose Selective-adversarial Entropy Intervention, namely SaEI, which enhances policy entropy by distorting the visual input with the token-selective adversarial objective coming from the entropy of sampled responses. Specifically, we first propose entropy-guided adversarial sampling (EgAS) that formulates the entropy of sampled responses as an adversarial objective. Then, the corresponding adversarial gradient can be used to attack the visual input for producing adversarial samples, allowing the policy model to explore a larger answer space during RL sampling. Then, we propose token-selective entropy computation (TsEC) to maximize the effectiveness of adversarial attack in EgAS without distorting factual knowledge within VLMs. Extensive experiments on both in-domain and out-of-domain datasets show that our proposed method can greatly improve policy exploration via entropy intervention, to boost reasoning capabilities. Code will be released once the paper is accepted.

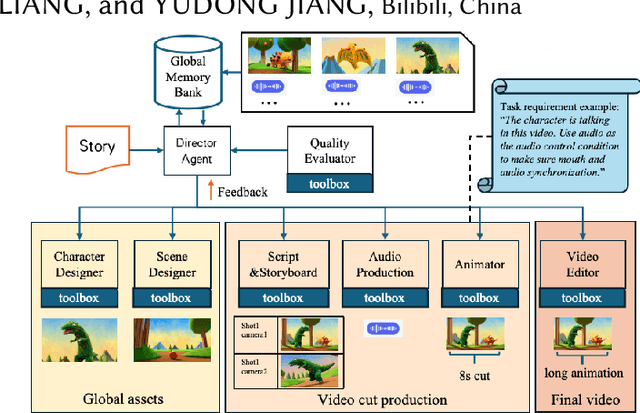

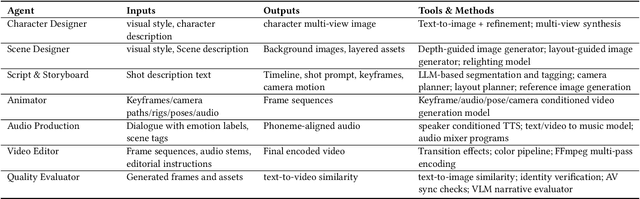

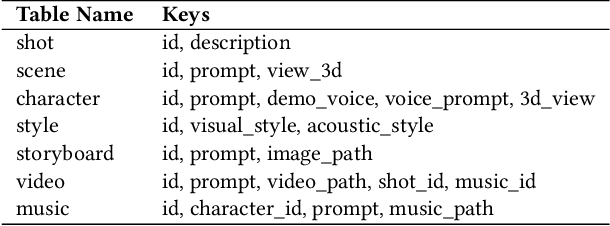

AniME: Adaptive Multi-Agent Planning for Long Animation Generation

Aug 27, 2025

Abstract:We present AniME, a director-oriented multi-agent system for automated long-form anime production, covering the full workflow from a story to the final video. The director agent keeps a global memory for the whole workflow and coordinates several downstream specialized agents. By integrating a customized Model Context Protocol (MCP) with downstream model instruction, each specialized agent adaptively selects control conditions for diverse sub-tasks. AniME produces cinematic animation with consistent characters and synchronized audio-visual elements, offering a scalable solution for AI-driven anime creation.

Post-Completion Learning for Language Models

Jul 27, 2025

Abstract:Current language model training paradigms typically terminate learning upon reaching the end-of-sequence (<eos>) token, overlooking the potential learning opportunities in the post-completion space. We propose Post-Completion Learning (PCL), a novel training framework that systematically utilizes the sequence space after model output completion to enhance both reasoning and self-evaluation abilities. PCL enables models to continue generating self-assessments and reward predictions during training, while maintaining efficient inference by stopping at the completion point. To fully utilize this post-completion space, we design a white-box reinforcement learning method: the model evaluates the output content according to the reward rules, and the resulting score is then calculated and aligned with the reward functions for supervision. We implement dual-track SFT to optimize both reasoning and evaluation capabilities, and mix it with RL training to achieve multi-objective hybrid optimization. Experimental results on different datasets and models demonstrate consistent improvements over traditional SFT and RL methods. Our method provides a new technical path for language model training that enhances output quality while preserving deployment efficiency.

ADRD: LLM-Driven Autonomous Driving Based on Rule-based Decision Systems

Jun 17, 2025

Abstract:How to construct an interpretable autonomous driving decision-making system has become a focal point in academic research. In this study, we propose a novel approach that leverages large language models (LLMs) to generate executable, rule-based decision systems to address this challenge. Specifically, harnessing the strong reasoning and programming capabilities of LLMs, we introduce the ADRD (LLM-Driven Autonomous Driving Based on Rule-based Decision Systems) framework, which integrates three core modules: the Information Module, the Agents Module, and the Testing Module. The framework operates by first aggregating contextual driving scenario information through the Information Module, then utilizing the Agents Module to generate rule-based driving tactics. These tactics are iteratively refined through continuous interaction with the Testing Module. Extensive experimental evaluations demonstrate that ADRD exhibits superior performance in autonomous driving decision tasks. Compared to traditional reinforcement learning approaches and the most advanced LLM-based methods, ADRD shows significant advantages in terms of interpretability, response speed, and driving performance. These results highlight the framework's ability to achieve comprehensive and accurate understanding of complex driving scenarios, and underscore the promising future of transparent, rule-based decision systems that are easily modifiable and broadly applicable. To the best of our knowledge, this is the first work that integrates large language models with rule-based systems for autonomous driving decision-making, and our findings validate its potential for real-world deployment.

LaF-GRPO: In-Situ Navigation Instruction Generation for the Visually Impaired via GRPO with LLM-as-Follower Reward

Jun 04, 2025

Abstract:Navigation instruction generation for visually impaired (VI) individuals (NIG-VI) is critical yet relatively underexplored. This study, hence, focuses on producing precise, in-situ, step-by-step navigation instructions that are practically usable by VI users. Concretely, we propose LaF-GRPO (LLM-as-Follower GRPO), where an LLM simulates VI user responses to generate rewards guiding the Vision-Language Model (VLM) post-training. This enhances instruction usability while reducing costly real-world data needs. To facilitate training and testing, we introduce NIG4VI, a 27k-sample open-sourced benchmark. It provides diverse navigation scenarios with accurate spatial coordinates, supporting detailed, open-ended in-situ instruction generation. Experiments on NIG4VI show the effectiveness of LaF-GRPO by quantitative metrics (e.g., Zero-(LaF-GRPO) boosts BLEU +14%; SFT+(LaF-GRPO) METEOR 0.542 vs. GPT-4o's 0.323) and yields more intuitive, safer instructions. Code and benchmark are available at https://github.com/YiyiyiZhao/NIG4VI.
