Shaojie Shen

Geometry-Grounded Gaussian Splatting

Jan 25, 2026

Abstract:Gaussian Splatting (GS) has demonstrated impressive quality and efficiency in novel view synthesis. However, shape extraction from Gaussian primitives remains an open problem. Due to inadequate geometry parameterization and approximation, existing shape reconstruction methods suffer from poor multi-view consistency and are sensitive to floaters. In this paper, we present a rigorous theoretical derivation that establishes Gaussian primitives as a specific type of stochastic solids. This theoretical framework provides a principled foundation for Geometry-Grounded Gaussian Splatting by enabling the direct treatment of Gaussian primitives as explicit geometric representations. Using the volumetric nature of stochastic solids, our method efficiently renders high-quality depth maps for fine-grained geometry extraction. Experiments show that our method achieves the best shape reconstruction results among all Gaussian Splatting-based methods on public datasets.

FlyCo: Foundation Model-Empowered Drones for Autonomous 3D Structure Scanning in Open-World Environments

Jan 12, 2026

Abstract:Autonomous 3D scanning of open-world target structures via drones remains challenging despite broad applications. Existing paradigms rely on restrictive assumptions or effortful human priors, limiting practicality, efficiency, and adaptability. Recent foundation models (FMs) offer great potential to bridge this gap. This paper investigates a critical research problem: What system architecture can effectively integrate FM knowledge for this task? We answer it with FlyCo, a principled FM-empowered perception-prediction-planning loop enabling fully autonomous, prompt-driven 3D target scanning in diverse unknown open-world environments. FlyCo directly translates low-effort human prompts (text, visual annotations) into precise adaptive scanning flights via three coordinated stages: (1) perception fuses streaming sensor data with vision-language FMs for robust target grounding and tracking; (2) prediction distills FM knowledge and combines multi-modal cues to infer the partially observed target's complete geometry; (3) planning leverages predictive foresight to generate efficient and safe paths with comprehensive target coverage. Building on this, we further design key components to boost open-world target grounding efficiency and robustness, enhance prediction quality in terms of shape accuracy, zero-shot generalization, and temporal stability, and balance long-horizon flight efficiency with real-time computability and online collision avoidance. Extensive challenging real-world and simulation experiments show FlyCo delivers precise scene understanding, high efficiency, and real-time safety, outperforming existing paradigms with lower human effort and verifying the proposed architecture's practicality. Comprehensive ablations validate each component's contribution. FlyCo also serves as a flexible, extensible blueprint, readily leveraging future FM and robotics advances. Code will be released.

Vision-Language-Action Models for Autonomous Driving: Past, Present, and Future

Dec 18, 2025

Abstract:Autonomous driving has long relied on modular "Perception-Decision-Action" pipelines, where hand-crafted interfaces and rule-based components often break down in complex or long-tailed scenarios. Their cascaded design further propagates perception errors, degrading downstream planning and control. Vision-Action (VA) models address some limitations by learning direct mappings from visual inputs to actions, but they remain opaque, sensitive to distribution shifts, and lack structured reasoning or instruction-following capabilities. Recent progress in Large Language Models (LLMs) and multimodal learning has motivated the emergence of Vision-Language-Action (VLA) frameworks, which integrate perception with language-grounded decision making. By unifying visual understanding, linguistic reasoning, and actionable outputs, VLAs offer a pathway toward more interpretable, generalizable, and human-aligned driving policies. This work provides a structured characterization of the emerging VLA landscape for autonomous driving. We trace the evolution from early VA approaches to modern VLA frameworks and organize existing methods into two principal paradigms: End-to-End VLA, which integrates perception, reasoning, and planning within a single model, and Dual-System VLA, which separates slow deliberation (via VLMs) from fast, safety-critical execution (via planners). Within these paradigms, we further distinguish subclasses such as textual vs. numerical action generators and explicit vs. implicit guidance mechanisms. We also summarize representative datasets and benchmarks for evaluating VLA-based driving systems and highlight key challenges and open directions, including robustness, interpretability, and instruction fidelity. Overall, this work aims to establish a coherent foundation for advancing human-compatible autonomous driving systems.

Temporal and Rotational Calibration for Event-Centric Multi-Sensor Systems

Aug 18, 2025

Abstract:Event cameras generate asynchronous signals in response to pixel-level brightness changes, offering a sensing paradigm with theoretically microsecond-scale latency that can significantly enhance the performance of multi-sensor systems. Extrinsic calibration is a critical prerequisite for effective sensor fusion; however, the configuration that involves event cameras remains an understudied topic. In this paper, we propose a motion-based temporal and rotational calibration framework tailored for event-centric multi-sensor systems, eliminating the need for dedicated calibration targets. Our method uses as input the rotational motion estimates obtained from event cameras and other heterogeneous sensors, respectively. Different from conventional approaches that rely on event-to-frame conversion, our method efficiently estimates angular velocity from normal flow observations, which are derived from the spatio-temporal profile of event data. The overall calibration pipeline adopts a two-step approach: it first initializes the temporal offset and rotational extrinsics by exploiting kinematic correlations in the spirit of Canonical Correlation Analysis (CCA), and then refines both temporal and rotational parameters through a joint non-linear optimization using a continuous-time parametrization in SO(3). Extensive evaluations on both publicly available and self-collected datasets validate that the proposed method achieves calibration accuracy comparable to target-based methods, while exhibiting superior stability over purely CCA-based methods, and highlighting its precision, robustness and flexibility. To facilitate future research, our implementation will be made open-source. Code: https://github.com/NAIL-HNU/EvMultiCalib.
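To make the two-step idea in the abstract above more concrete, here is a minimal sketch of a motion-based initialization from two angular-velocity streams. It is not the authors' CCA-based method: it swaps in a simpler, well-known stand-in (cross-correlating angular-velocity magnitudes for the time offset, then a Kabsch/SVD fit for the rotation). The function names `estimate_lag`, `align`, and `estimate_rotation` are hypothetical, and the sketch assumes both streams are already resampled to the same uniform rate and have equal length.

```python
import numpy as np


def estimate_lag(norm_a, norm_b):
    """Return the integer lag (in samples) by which stream b trails stream a.

    Assumption: norm_a and norm_b are 1-D arrays of angular-velocity
    magnitudes sampled at the same uniform rate. Magnitudes are invariant
    to the unknown rotation between the sensors, so they can be compared
    before the extrinsics are known.
    """
    a = norm_a - norm_a.mean()
    b = norm_b - norm_b.mean()
    # np.correlate(b, a, "full")[i] corresponds to lag k = i - (len(a) - 1),
    # where c_k = sum_n b[n + k] * a[n].
    corr = np.correlate(b, a, mode="full")
    return int(np.argmax(corr)) - (len(a) - 1)


def align(w_a, w_b, lag):
    """Trim two equal-length (N, 3) streams so that row i of each matches in time."""
    n = len(w_a)
    if lag >= 0:
        return w_a[: n - lag], w_b[lag:]
    return w_a[-lag:], w_b[: n + lag]


def estimate_rotation(w_a, w_b):
    """Least-squares rotation R with w_a[i] ~= R @ w_b[i] (Kabsch algorithm)."""
    H = w_b.T @ w_a  # 3x3 cross-covariance of the paired vectors
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    return Vt.T @ D @ U.T
```

A typical use would compute `lag = estimate_lag(np.linalg.norm(w_a, axis=1), np.linalg.norm(w_b, axis=1))`, multiply it by the sample period to get a time offset, and pass the output of `align(w_a, w_b, lag)` to `estimate_rotation`. Such a coarse estimate would only serve as a starting point for a joint refinement of the kind the abstract describes.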

Fast and Scalable Game-Theoretic Trajectory Planning with Intentional Uncertainties

Jul 16, 2025

Abstract:Trajectory planning involving multi-agent interactions has been a long-standing challenge in the field of robotics, primarily burdened by the inherent yet intricate interactions among agents. While game-theoretic methods are widely acknowledged for their effectiveness in managing multi-agent interactions, significant impediments persist when it comes to accommodating the intentional uncertainties of agents. In the context of intentional uncertainties, the heavy computational burdens associated with existing game-theoretic methods are induced, leading to inefficiencies and poor scalability. In this paper, we propose a novel game-theoretic interactive trajectory planning method to effectively address the intentional uncertainties of agents, and it demonstrates both high efficiency and enhanced scalability. As the underpinning basis, we model the interactions between agents under intentional uncertainties as a general Bayesian game, and we show that its agent-form equivalence can be represented as a potential game under certain minor assumptions. The existence and attainability of the optimal interactive trajectories are illustrated, as the corresponding Bayesian Nash equilibrium can be attained by optimizing a unified optimization problem. Additionally, we present a distributed algorithm based on the dual consensus alternating direction method of multipliers (ADMM) tailored to the parallel solving of the problem, thereby significantly improving the scalability. The attendant outcomes from simulations and experiments demonstrate that the proposed method is effective across a range of scenarios characterized by general forms of intentional uncertainties. Its scalability surpasses that of existing centralized and decentralized baselines, allowing for real-time interactive trajectory planning in uncertain game settings.

Foresight in Motion: Reinforcing Trajectory Prediction with Reward Heuristics

Jul 16, 2025

Abstract:Motion forecasting for on-road traffic agents presents both a significant challenge and a critical necessity for ensuring safety in autonomous driving systems. In contrast to most existing data-driven approaches that directly predict future trajectories, we rethink this task from a planning perspective, advocating a "First Reasoning, Then Forecasting" strategy that explicitly incorporates behavior intentions as spatial guidance for trajectory prediction. To achieve this, we introduce an interpretable, reward-driven intention reasoner grounded in a novel query-centric Inverse Reinforcement Learning (IRL) scheme. Our method first encodes traffic agents and scene elements into a unified vectorized representation, then aggregates contextual features through a query-centric paradigm. This enables the derivation of a reward distribution, a compact yet informative representation of the target agent's behavior within the given scene context via IRL. Guided by this reward heuristic, we perform policy rollouts to reason about multiple plausible intentions, providing valuable priors for subsequent trajectory generation. Finally, we develop a hierarchical DETR-like decoder integrated with bidirectional selective state space models to produce accurate future trajectories along with their associated probabilities. Extensive experiments on the large-scale Argoverse and nuScenes motion forecasting datasets demonstrate that our approach significantly enhances trajectory prediction confidence, achieving highly competitive performance relative to state-of-the-art methods.

Autonomous Flights inside Narrow Tunnels

May 26, 2025

Abstract:Multirotors are often required to enter confined narrow tunnels that are barely accessible to humans, in applications such as inspection and search and rescue. This task is extremely challenging: the lack of geometric features and illumination, together with the limited field of view, causes problems in perception, while the restricted space and significant ego airflow disturbances induce control issues. This paper introduces an autonomous aerial system designed for navigation through tunnels as narrow as 0.5 m in diameter. The real-time and online system includes a virtual omni-directional perception module tailored for the mission and a novel motion planner that incorporates perception and ego airflow disturbance factors modeled using camera projections and computational fluid dynamics analyses, respectively. Extensive flight experiments on a custom-designed quadrotor are conducted in multiple realistic narrow tunnels to validate the superior performance of the system, even over human pilots, proving its potential for real applications. Additionally, a deployment pipeline on other multirotor platforms is outlined and open-source packages are provided for future developments.

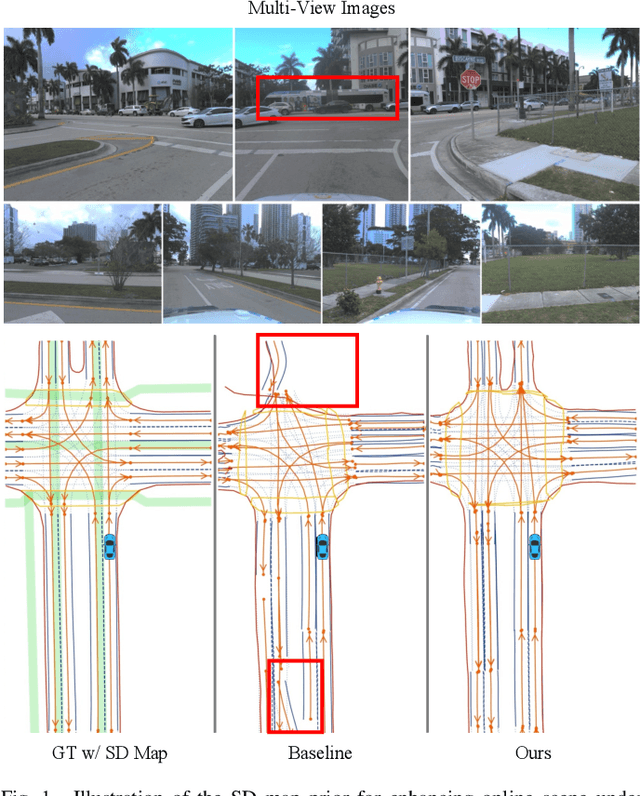

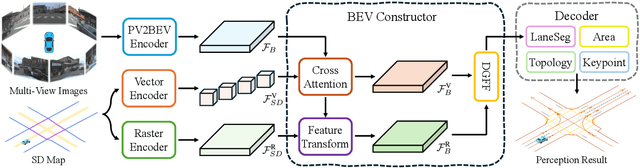

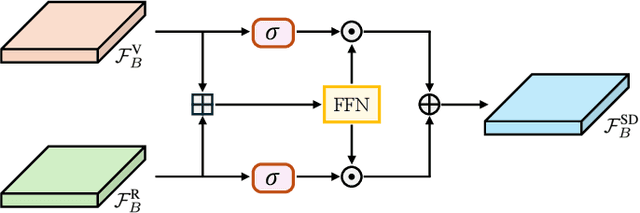

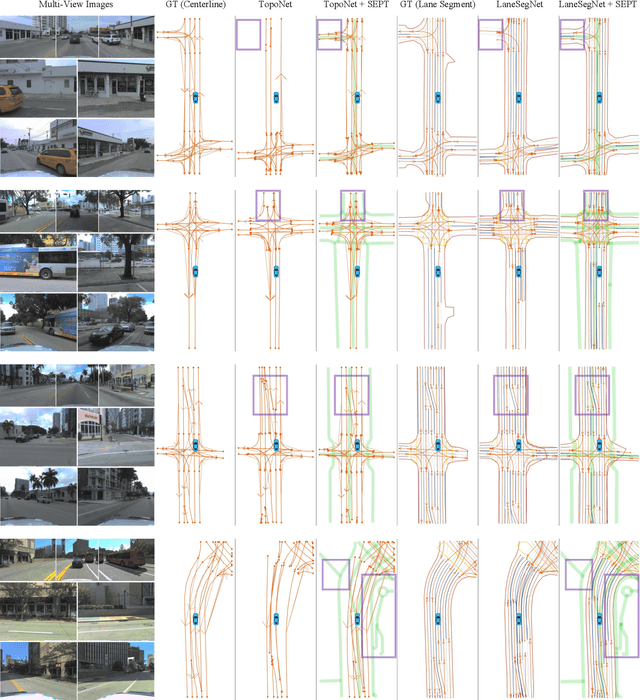

SEPT: Standard-Definition Map Enhanced Scene Perception and Topology Reasoning for Autonomous Driving

May 18, 2025

Abstract:Online scene perception and topology reasoning are critical for autonomous vehicles to understand their driving environments, particularly for mapless driving systems that endeavor to reduce reliance on costly High-Definition (HD) maps. However, recent advances in online scene understanding still face limitations, especially in long-range or occluded scenarios, due to the inherent constraints of onboard sensors. To address this challenge, we propose a Standard-Definition (SD) Map Enhanced scene Perception and Topology reasoning (SEPT) framework, which explores how to effectively incorporate the SD map as prior knowledge into existing perception and reasoning pipelines. Specifically, we introduce a novel hybrid feature fusion strategy that combines SD maps with Bird's-Eye-View (BEV) features, considering both rasterized and vectorized representations, while mitigating potential misalignment between SD maps and BEV feature spaces. Additionally, we leverage the SD map characteristics to design an auxiliary intersection-aware keypoint detection task, which further enhances the overall scene understanding performance. Experimental results on the large-scale OpenLane-V2 dataset demonstrate that by effectively integrating SD map priors, our framework significantly improves both scene perception and topology reasoning, outperforming existing methods by a substantial margin.

Active Contact Engagement for Aerial Navigation in Unknown Environments with Glass

May 01, 2025

Abstract:Autonomous aerial robots are increasingly being deployed in real-world scenarios, where transparent glass obstacles present significant challenges to reliable navigation. Researchers have investigated the use of non-contact sensors and passive contact-resilient aerial vehicle designs to detect glass surfaces, which are often limited in terms of robustness and efficiency. In this work, we propose a novel approach for robust autonomous aerial navigation in unknown environments with transparent glass obstacles, combining the strengths of both sensor-based and contact-based glass detection. The proposed system begins with the incremental detection and information maintenance about potential glass surfaces using visual sensor measurements. The vehicle then actively engages in touch actions with the visually detected potential glass surfaces using a pair of lightweight contact-sensing modules to confirm or invalidate their presence. Following this, the volumetric map is efficiently updated with the glass surface information and safe trajectories are replanned on the fly to circumvent the glass obstacles. We validate the proposed system through real-world experiments in various scenarios, demonstrating its effectiveness in enabling efficient and robust autonomous aerial navigation in complex real-world environments with glass obstacles.

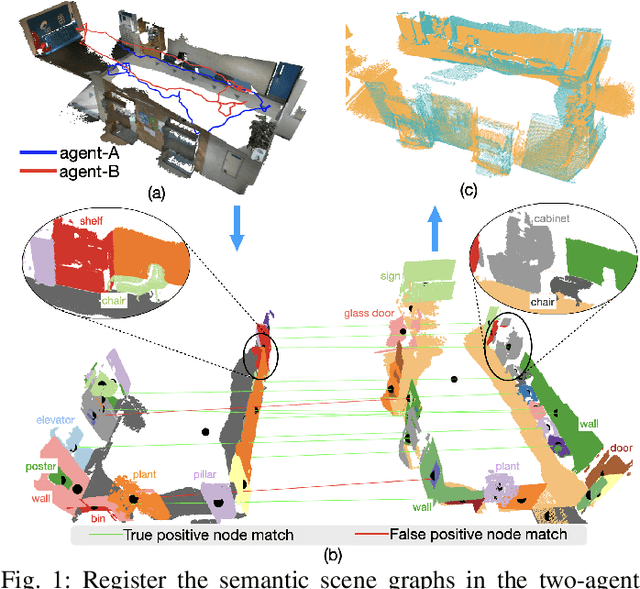

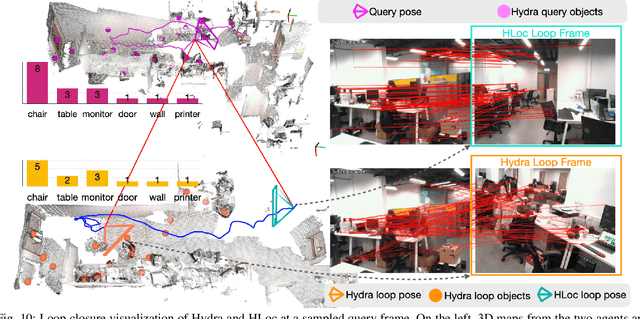

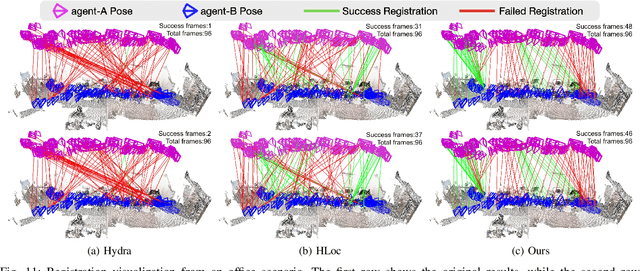

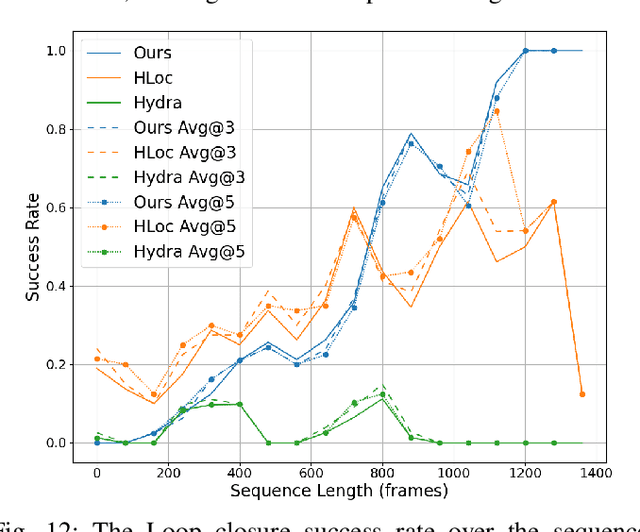

SG-Reg: Generalizable and Efficient Scene Graph Registration

Apr 20, 2025

Abstract:This paper addresses the challenges of registering two rigid semantic scene graphs, an essential capability when an autonomous agent needs to register its map against a remote agent, or against a prior map. The hand-crafted descriptors in classical semantic-aided registration, or the ground-truth annotation reliance in learning-based scene graph registration, impede their application in practical real-world environments. To address the challenges, we design a scene graph network to encode multiple modalities of semantic nodes: open-set semantic feature, local topology with spatial awareness, and shape feature. These modalities are fused to create compact semantic node features. The matching layers then search for correspondences in a coarse-to-fine manner. In the back-end, we employ a robust pose estimator to decide transformation according to the correspondences. We manage to maintain a sparse and hierarchical scene representation. Our approach demands fewer GPU resources and less communication bandwidth in multi-agent tasks. Moreover, we design a new data generation approach using vision foundation models and a semantic mapping module to reconstruct semantic scene graphs. It differs significantly from previous works, which rely on ground-truth semantic annotations to generate data. We validate our method in a two-agent SLAM benchmark. It significantly outperforms the hand-crafted baseline in terms of registration success rate. Compared to visual loop closure networks, our method achieves a slightly higher registration recall while requiring only 52 KB of communication bandwidth for each query frame. Code available at: http://github.com/HKUST-Aerial-Robotics/SG-Reg.
