Haichao Liu

The Hong Kong University of Science and Technology

An Intention-driven Lane Change Framework Considering Heterogeneous Dynamic Cooperation in Mixed-traffic Environment

Sep 26, 2025

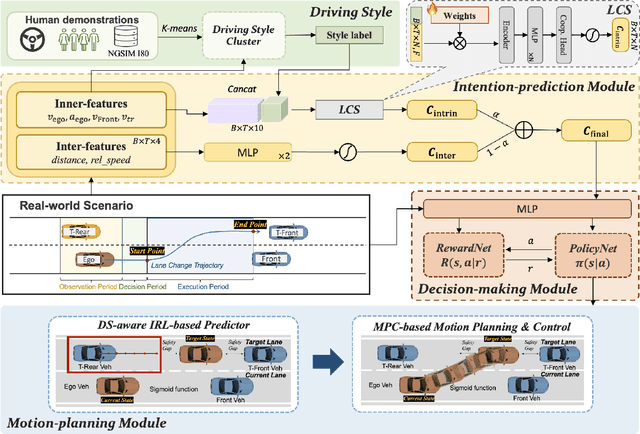

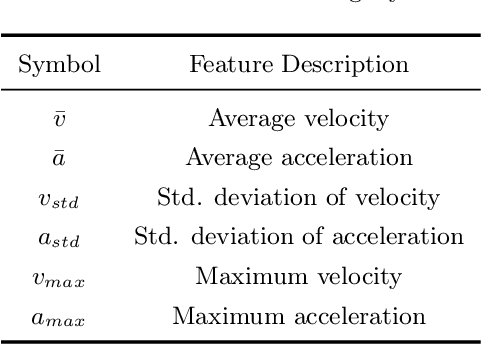

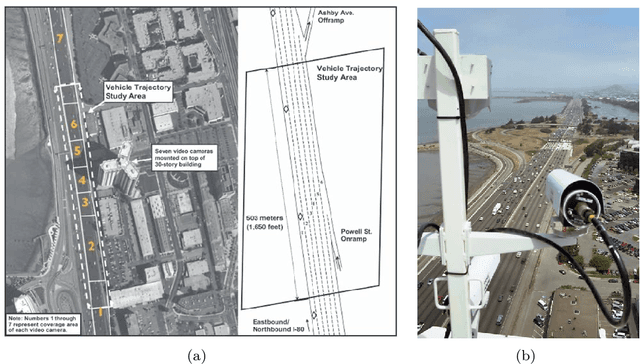

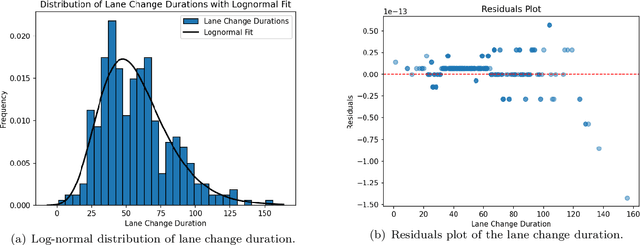

Abstract:In mixed-traffic environments, where autonomous vehicles (AVs) interact with diverse human-driven vehicles (HVs), unpredictable intentions and heterogeneous behaviors make safe and efficient lane change maneuvers highly challenging. Existing methods often oversimplify these interactions by assuming uniform patterns. We propose an intention-driven lane change framework that integrates driving-style recognition, cooperation-aware decision-making, and coordinated motion planning. A deep learning classifier trained on the NGSIM dataset identifies human driving styles in real time. A cooperation score with intrinsic and interactive components estimates surrounding drivers' intentions and quantifies their willingness to cooperate with the ego vehicle. Decision-making combines behavior cloning with inverse reinforcement learning (IRL) to determine whether a lane change should be initiated. For trajectory generation, model predictive control is integrated with IRL-based intention inference to produce collision-free and socially compliant maneuvers. Experiments show that the proposed model achieves 94.2% accuracy and 94.3% F1-score, outperforming rule-based and learning-based baselines by 4-15% in lane change recognition. These results highlight the benefit of modeling inter-driver heterogeneity and demonstrate the potential of the framework to advance context-aware and human-like autonomous driving in complex traffic environments.

RoboDexVLM: Visual Language Model-Enabled Task Planning and Motion Control for Dexterous Robot Manipulation

Mar 03, 2025

Abstract:This paper introduces RoboDexVLM, an innovative framework for robot task planning and grasp detection tailored for a collaborative manipulator equipped with a dexterous hand. Previous methods focus on simplified and limited manipulation tasks, which often neglect the complexities associated with grasping a diverse array of objects in a long-horizon manner. In contrast, our proposed framework utilizes a dexterous hand capable of grasping objects of varying shapes and sizes while executing tasks based on natural language commands. The proposed approach has the following core components: First, a robust task planner with a task-level recovery mechanism that leverages vision-language models (VLMs) is designed, which enables the system to interpret and execute open-vocabulary commands for long sequence tasks. Second, a language-guided dexterous grasp perception algorithm is presented based on robot kinematics and formal methods, tailored for zero-shot dexterous manipulation with diverse objects and commands. Comprehensive experimental results validate the effectiveness, adaptability, and robustness of RoboDexVLM in handling long-horizon scenarios and performing dexterous grasping. These results highlight the framework's ability to operate in complex environments, showcasing its potential for open-vocabulary dexterous manipulation. Our open-source project page can be found at https://henryhcliu.github.io/robodexvlm.

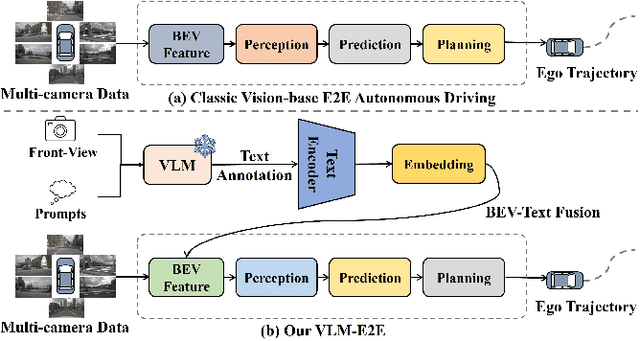

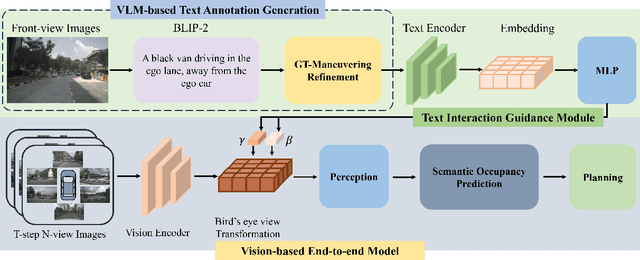

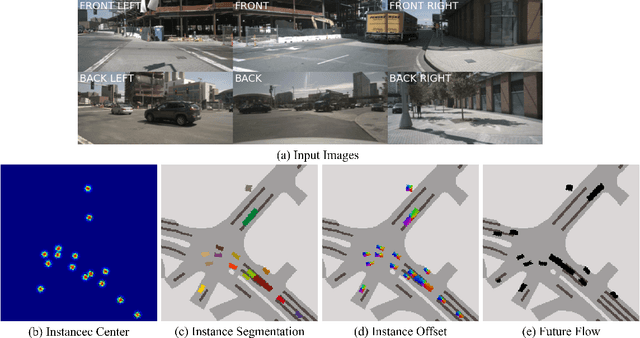

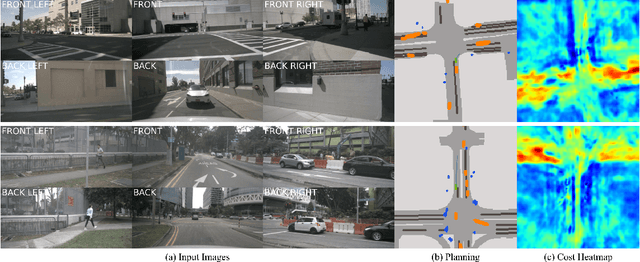

VLM-E2E: Enhancing End-to-End Autonomous Driving with Multimodal Driver Attention Fusion

Feb 25, 2025

Abstract:Human drivers adeptly navigate complex scenarios by utilizing rich attentional semantics, but current autonomous systems struggle to replicate this ability, as they often lose critical semantic information when converting 2D observations into 3D space. This loss hinders their effective deployment in dynamic and complex environments. Leveraging the superior scene understanding and reasoning abilities of Vision-Language Models (VLMs), we propose VLM-E2E, a novel framework that uses VLMs to enhance training by providing attentional cues. Our method integrates textual representations into Bird's-Eye-View (BEV) features for semantic supervision, which enables the model to learn richer feature representations that explicitly capture the driver's attentional semantics. By focusing on attentional semantics, VLM-E2E better aligns with human-like driving behavior, which is critical for navigating dynamic and complex environments. Furthermore, we introduce a BEV-Text learnable weighted fusion strategy to address the issue of modality importance imbalance in fusing multimodal information. This approach dynamically balances the contributions of BEV and text features, ensuring that the complementary information from the visual and textual modalities is effectively utilized. By explicitly addressing the imbalance in multimodal fusion, our method facilitates a more holistic and robust representation of driving environments. We evaluate VLM-E2E on the nuScenes dataset and demonstrate its superiority over state-of-the-art approaches, showcasing significant improvements in performance.
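As a rough illustration of what a learnable weighted fusion of two modalities can look like, the sketch below mixes a BEV feature vector and a text feature vector with softmax-normalized weights. It is not the VLM-E2E implementation: the function names `softmax` and `fuse`, the use of two scalar logits, and the toy feature values are all assumptions made for this example. In a real model the logits would be trained parameters and the features would be tensors.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(bev_feat, text_feat, logits):
    """Convex combination of two same-length feature vectors.

    `logits` holds two scalars (hypothetical learnable parameters);
    their softmax gives the weight of each modality.
    """
    if len(bev_feat) != len(text_feat):
        raise ValueError("feature vectors must have the same length")
    w_bev, w_text = softmax(logits)
    return [w_bev * b + w_text * t for b, t in zip(bev_feat, text_feat)]


# Toy features (made up for illustration).
bev = [1.0, 2.0, 3.0]
text = [3.0, 2.0, 1.0]

fused_equal = fuse(bev, text, [0.0, 0.0])     # equal weights
fused_bev_heavy = fuse(bev, text, [2.0, 0.0])  # BEV weighted more
```

With equal logits the two modalities contribute equally; raising the first logit pulls the fused vector toward the BEV features. Normalizing with a softmax keeps the weights positive and summing to one, which is one simple way to avoid either modality dominating by scale alone.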

CoDriveVLM: VLM-Enhanced Urban Cooperative Dispatching and Motion Planning for Future Autonomous Mobility on Demand Systems

Jan 10, 2025

Abstract:The increasing demand for flexible and efficient urban transportation solutions has spotlighted the limitations of traditional Demand Responsive Transport (DRT) systems, particularly in accommodating diverse passenger needs and dynamic urban environments. Autonomous Mobility-on-Demand (AMoD) systems have emerged as a promising alternative, leveraging connected and autonomous vehicles (CAVs) to provide responsive and adaptable services. However, existing methods primarily focus on either vehicle scheduling or path planning, which often simplify complex urban layouts and neglect the necessity for simultaneous coordination and mutual avoidance among CAVs. This oversimplification poses significant challenges to the deployment of AMoD systems in real-world scenarios. To address these gaps, we propose CoDriveVLM, a novel framework that integrates high-fidelity simultaneous dispatching and cooperative motion planning for future AMoD systems. Our method harnesses Vision-Language Models (VLMs) to enhance multi-modality information processing, and this enables comprehensive dispatching and collision risk evaluation. The VLM-enhanced CAV dispatching coordinator is introduced to effectively manage complex and unforeseen AMoD conditions, thus supporting efficient scheduling decision-making. Furthermore, we propose a scalable decentralized cooperative motion planning method via consensus alternating direction method of multipliers (ADMM) focusing on collision risk evaluation and decentralized trajectory optimization. Simulation results demonstrate the feasibility and robustness of CoDriveVLM in various traffic conditions, showcasing its potential to significantly improve the fidelity and effectiveness of AMoD systems in future urban transportation networks. The code is available at https://github.com/henryhcliu/CoDriveVLM.git.
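For readers unfamiliar with consensus ADMM, the following minimal sketch shows the standard global-consensus iteration on a toy problem. It is not the CoDriveVLM planner: each hypothetical agent `i` holds a scalar quadratic cost `0.5 * (x - a_i)**2` instead of a trajectory optimization problem, and the names `consensus_admm`, `targets`, and `rho` are assumptions for this example. The minimizer of the summed costs is the mean of the `a_i`, which makes the result easy to check.

```python
def consensus_admm(targets, rho=1.0, iterations=60):
    """Global-consensus ADMM for f_i(x) = 0.5 * (x - a_i)^2.

    Each agent keeps a local copy x_i and a scaled dual u_i;
    z is the shared consensus variable.
    """
    n = len(targets)
    x = [0.0] * n
    u = [0.0] * n
    z = 0.0
    for _ in range(iterations):
        # Local step: closed-form minimizer of
        # f_i(x_i) + (rho / 2) * (x_i - z + u_i)^2, solvable per agent.
        x = [(a + rho * (z - ui)) / (1.0 + rho) for a, ui in zip(targets, u)]
        # Consensus step: average of local copies plus duals.
        z = sum(xi + ui for xi, ui in zip(x, u)) / n
        # Dual step: accumulate each agent's disagreement with z.
        u = [ui + xi - z for xi, ui in zip(x, u)]
    return z, x


# Three agents with different preferred values (made up for illustration).
z_star, local_copies = consensus_admm([1.0, 2.0, 6.0])
```

Only the consensus step needs information from all agents, and it is a simple average; the local and dual steps run independently per agent. That separation is what makes the method attractive for decentralized settings.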

UDMC: Unified Decision-Making and Control Framework for Urban Autonomous Driving with Motion Prediction of Traffic Participants

Jan 05, 2025

Abstract:Current autonomous driving systems often struggle to balance decision-making and motion control while ensuring safety and traffic rule compliance, especially in complex urban environments. Existing methods may fall short due to separate handling of these functionalities, leading to inefficiencies and safety compromises. To address these challenges, we introduce UDMC, an interpretable and unified Level 4 autonomous driving framework. UDMC integrates decision-making and motion control into a single optimal control problem (OCP), considering the dynamic interactions with surrounding vehicles, pedestrians, road lanes, and traffic signals. By employing innovative potential functions to model traffic participants and regulations, and incorporating a specialized motion prediction module, our framework enhances on-road safety and rule adherence. The integrated design allows for real-time execution of flexible maneuvers suited to diverse driving scenarios. High-fidelity simulations conducted in CARLA exemplify the framework's computational efficiency, robustness, and safety, resulting in superior driving performance when compared against various baseline models. Our open-source project is available at https://github.com/henryhcliu/udmc_carla.git.

CALMM-Drive: Confidence-Aware Autonomous Driving with Large Multimodal Model

Dec 05, 2024

Abstract:Decision-making and motion planning are pivotal in ensuring the safety and efficiency of Autonomous Vehicles (AVs). Existing methodologies typically adopt two paradigms: decision then planning or generation then scoring. However, the former often struggles with misalignment between decisions and planning, while the latter encounters significant challenges in integrating short-term operational utility with long-term tactical efficacy. To address these issues, we introduce CALMM-Drive, a novel Confidence-Aware Large Multimodal Model (LMM) empowered Autonomous Driving framework. Our approach employs Top-K confidence elicitation, which facilitates the generation of multiple candidate decisions along with their confidence levels. Furthermore, we propose a novel planning module that integrates a diffusion model for trajectory generation and a hierarchical refinement process to find the optimal path. This framework enables the selection of the best plan accounting for both low-level solution quality and high-level tactical confidence, which mitigates the risks of one-shot decisions and overcomes the limitations induced by short-sighted scoring mechanisms. Comprehensive evaluations in nuPlan closed-loop simulation environments demonstrate the effectiveness of CALMM-Drive in achieving reliable and flexible driving performance, showcasing a significant advancement in the integration of uncertainty in LMM-empowered AVs. The code will be released upon acceptance.

Synergizing Decision Making and Trajectory Planning Using Two-Stage Optimization for Autonomous Vehicles

Nov 28, 2024

Abstract:This paper introduces a local planner that synergizes the decision making and trajectory planning modules towards autonomous driving. The decision making and trajectory planning tasks are jointly formulated as a nonlinear programming problem with an integrated objective function. However, integrating the discrete decision variables into the continuous trajectory optimization leads to a mixed-integer programming (MIP) problem with inherent nonlinearity and nonconvexity. To address the challenge in solving the problem, the original problem is decomposed into two sub-stages, and a two-stage optimization (TSO) based approach is presented to ensure the coherence in outcomes for the two stages. The optimization problem in the first stage determines the optimal decision sequence that acts as an informed initialization. With the outputs from the first stage, the second stage necessitates the use of a high-fidelity vehicle model and strict enforcement of the collision avoidance constraints as part of the trajectory planning problem. We evaluate the effectiveness of our proposed planner across diverse multi-lane scenarios. The results demonstrate that the proposed planner simultaneously generates a sequence of optimal decisions and the corresponding trajectory that significantly improves driving performance in terms of driving safety and traveling efficiency as compared to alternative methods. Additionally, we implement the closed-loop simulation in CARLA, and the results showcase the effectiveness of the proposed planner to adapt to changing driving situations with high computational efficiency.

LMMCoDrive: Cooperative Driving with Large Multimodal Model

Sep 18, 2024

Abstract:To address the intricate challenges of decentralized cooperative scheduling and motion planning in Autonomous Mobility-on-Demand (AMoD) systems, this paper introduces LMMCoDrive, a novel cooperative driving framework that leverages a Large Multimodal Model (LMM) to enhance traffic efficiency in dynamic urban environments. This framework seamlessly integrates scheduling and motion planning processes to ensure the effective operation of Cooperative Autonomous Vehicles (CAVs). The spatial relationship between CAVs and passenger requests is abstracted into a Bird's-Eye View (BEV) to fully exploit the potential of the LMM. In addition, trajectories are cautiously refined for each CAV while ensuring collision avoidance through safety constraints. A decentralized optimization strategy, facilitated by the Alternating Direction Method of Multipliers (ADMM) within the LMM framework, is proposed to drive the graph evolution of CAVs. Simulation results demonstrate the pivotal role and significant impact of the LMM in optimizing CAV scheduling and enhancing the decentralized cooperative optimization process for each vehicle. This marks a substantial stride towards achieving practical, efficient, and safe AMoD systems that are poised to revolutionize urban transportation. The code is available at https://github.com/henryhcliu/LMMCoDrive.

Enhance Planning with Physics-informed Safety Controller for End-to-end Autonomous Driving

May 01, 2024

Abstract:Recent years have seen growing research interest in applications of Deep Neural Networks (DNNs) to autonomous vehicle technology. The trend started with perception and prediction a few years ago and is gradually extending to motion planning tasks. Although network performance improves over time, DNN planners inherit the natural drawbacks of deep learning. Learning-based planners have limitations in achieving perfect accuracy on the training dataset, and network performance can be affected by the out-of-distribution problem. In this paper, we propose FusionAssurance, a novel trajectory-based end-to-end driving fusion framework that incorporates physics-informed control for safety assurance. By incorporating a Potential Field into Model Predictive Control, FusionAssurance is capable of navigating through scenarios that are not included in the training dataset and scenarios where the neural network fails to generalize. The effectiveness of the approach is demonstrated by extensive experiments under various scenarios on the CARLA benchmark.
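To make the idea of adding a potential field to a predictive-control cost concrete, here is a minimal sketch that scores candidate trajectories with a tracking term plus a repulsive obstacle potential. It is not the FusionAssurance formulation: the classic inverse-distance repulsive potential, the gain `k`, the influence radius `d0`, the lane-center reference at `y = 0`, and all waypoints are assumptions chosen for illustration, and a real MPC would optimize over controls rather than compare fixed candidates.

```python
import math


def repulsive_potential(dist, d0=1.0, k=10.0):
    """Classic repulsive potential: zero beyond d0, growing as dist -> 0."""
    if dist <= 0.0:
        return math.inf
    if dist >= d0:
        return 0.0
    return 0.5 * k * (1.0 / dist - 1.0 / d0) ** 2


def trajectory_cost(points, obstacle):
    """Sum of squared lateral deviation from y = 0 plus obstacle potential."""
    cost = 0.0
    for px, py in points:
        dist = math.hypot(px - obstacle[0], py - obstacle[1])
        cost += py ** 2 + repulsive_potential(dist)
    return cost


obstacle = (2.0, 0.0)  # hypothetical static obstacle on the lane center

# Candidate A hugs the lane center and passes 0.4 m from the obstacle.
straight = [(0.0, 0.0), (1.0, 0.0), (1.6, 0.0), (3.0, 0.0)]
# Candidate B swerves around the obstacle, outside its influence radius.
detour = [(0.0, 0.0), (1.0, 0.8), (2.0, 1.2), (3.0, 0.8), (4.0, 0.0)]

cost_straight = trajectory_cost(straight, obstacle)
cost_detour = trajectory_cost(detour, obstacle)
best = "detour" if cost_detour < cost_straight else "straight"
```

Because the potential rises sharply near the obstacle, the detour is preferred even though it deviates from the lane center. The safety term is computed from geometry alone, so it does not depend on whether a learned planner has seen a similar scene during training.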

Robot Navigation in Unknown and Cluttered Workspace with Dynamical System Modulation in Starshaped Roadmap

Mar 18, 2024

Abstract:This paper presents a novel reactive motion planning framework for navigating robots in unknown and cluttered 2D workspaces. Typical existing methods constrain the robot to stay in free regions represented by locally extracted ellipses or polygons. Instead, we navigate the robot in free space with an alternate starshaped decomposition, which is calculated directly from real-time sensor data. Additionally, a roadmap is constructed incrementally to maintain the connectivity information of the starshaped regions. Compared to the roadmap built upon connected polygons or ellipses in the conventional approaches, the concave starshaped region is better suited to capture the natural distribution of sensor data, so that the perception information can be fully exploited for robot navigation. In this sense, conservative and myopic behaviors are avoided with the proposed approach, and intricate obstacle configurations can be suitably accommodated in unknown and cluttered environments. Then, we design a heuristic exploration algorithm on the roadmap to determine the frontier points of the starshaped regions, from which short-term goals are selected to attract the robot towards the goal configuration. Notably, a recovery mechanism is developed on the roadmap that is triggered once a non-extendable short-term goal is reached. This mechanism makes it possible to deal with dead-end situations that are typically encountered in unknown and cluttered environments. Furthermore, safe and smooth motion within the starshaped regions is generated by employing the Dynamical System Modulation (DSM) approach on the constructed roadmap. Through comprehensive evaluation in both simulations and real-world experiments, the proposed method outperforms the benchmark methods in terms of success rate and traveling time.
