Yiding Ji

Physics-informed Diffusion Mamba Transformer for Real-world Driving

Jan 31, 2026

Abstract:Autonomous driving systems demand trajectory planners that not only model the inherent uncertainty of future motions but also respect complex temporal dependencies and underlying physical laws. While diffusion-based generative models excel at capturing multi-modal distributions, they often fail to incorporate long-term sequential contexts and domain-specific physical priors. In this work, we bridge these gaps with two key innovations. First, we introduce a Diffusion Mamba Transformer architecture that embeds mamba and attention into the diffusion process, enabling more effective aggregation of sequential input contexts from sensor streams and past motion histories. Second, we design a Port-Hamiltonian Neural Network module that seamlessly integrates energy-based physical constraints into the diffusion model, thereby enhancing trajectory predictions with both consistency and interpretability. Extensive evaluations on standard autonomous driving benchmarks demonstrate that our unified framework significantly outperforms state-of-the-art baselines in predictive accuracy, physical plausibility, and robustness, thereby advancing safe and reliable motion planning.

Scalable Signal Temporal Logic Guided Reinforcement Learning via Value Function Space Optimization

Aug 04, 2024

Abstract:The integration of reinforcement learning (RL) and formal methods has emerged as a promising framework for solving long-horizon planning problems. Conventional approaches typically involve abstraction of the state and action spaces and manually created labeling functions or predicates. However, the efficiency of these approaches deteriorates as the tasks become increasingly complex, which results in exponential growth in the size of labeling functions or predicates. To address these issues, we propose a scalable model-based RL framework, called VFSTL, which schedules pre-trained skills to follow unseen STL specifications without using hand-crafted predicates. Given a set of value functions obtained by goal-conditioned RL, we formulate an optimization problem to maximize the robustness value of Signal Temporal Logic (STL) defined specifications, which is computed using value functions as predicates. To further reduce the computation burden, we abstract the environment state space into the value function space (VFS). Then the optimization problem is solved by Model-Based Reinforcement Learning. Simulation results show that STL with value functions as predicates approximates the ground truth robustness and the planning in VFS directly achieves unseen specifications using data from sensors.
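As a rough illustration of the idea of using value functions as STL predicates, the sketch below computes quantitative robustness with the standard max/min semantics for "eventually", "always", and conjunction. The value traces, the 0.5 threshold, and all function names are hypothetical and chosen only for this example; this is not the VFSTL implementation.

```python
from typing import Sequence


def predicate_robustness(values: Sequence[float], threshold: float) -> list[float]:
    """Per-step robustness of the predicate `V(s_t) >= threshold`.

    `values` is a hypothetical trace of a goal-conditioned value function
    evaluated along a trajectory; the margin V(s_t) - threshold is positive
    when the predicate holds at step t.
    """
    return [v - threshold for v in values]


def eventually(rho: Sequence[float]) -> float:
    """Robustness of F(phi) over the whole trace: the best margin at any step."""
    return max(rho)


def always(rho: Sequence[float]) -> float:
    """Robustness of G(phi) over the whole trace: the worst margin at any step."""
    return min(rho)


def conjunction(rho_a: float, rho_b: float) -> float:
    """Robustness of (phi_a AND phi_b): limited by the weaker conjunct."""
    return min(rho_a, rho_b)


# Hypothetical value traces for two pre-trained skills (assumed numbers).
reach_a = predicate_robustness([0.1, 0.4, 0.9], threshold=0.5)
stay_b = predicate_robustness([0.8, 0.7, 0.6], threshold=0.5)

# Specification: F(reach A) AND G(stay near B).
spec_robustness = conjunction(eventually(reach_a), always(stay_b))
print(spec_robustness > 0)  # a positive robustness means the specification is satisfied
```

A planner in this style would search over skill schedules to maximize `spec_robustness`; that optimization step is left out of the sketch.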

Sample-efficient Imitative Multi-token Decision Transformer for Generalizable Real World Driving

Jun 18, 2024

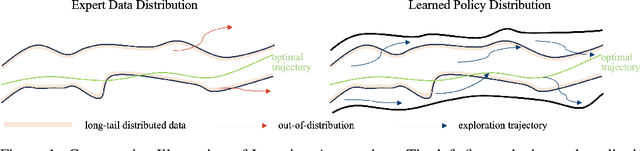

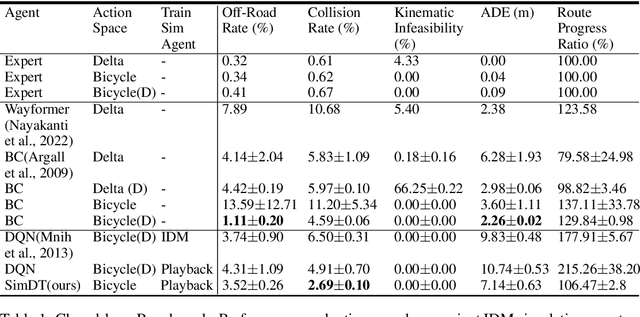

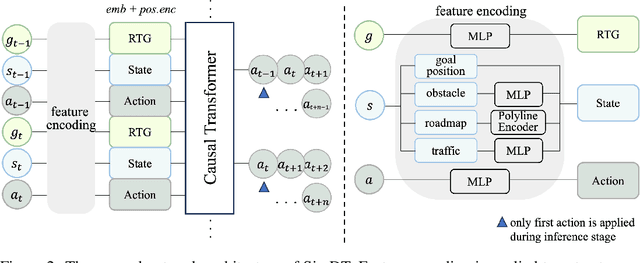

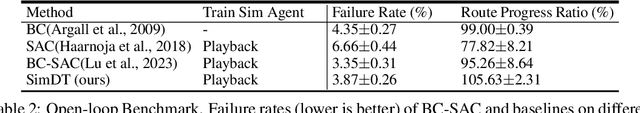

Abstract:Reinforcement learning via sequence modeling has shown remarkable promise in autonomous systems, harnessing the power of offline datasets to make informed decisions in simulated environments. However, the full potential of such methods in complex dynamic environments remains to be discovered. In the autonomous driving domain, learning-based agents face significant challenges when transferring knowledge from simulated to real-world settings, and their performance is also significantly affected by data distribution shift. To address these issues, we propose the Sample-efficient Imitative Multi-token Decision Transformer (SimDT). SimDT introduces multi-token prediction, imitative online learning, and prioritized experience replay to the Decision Transformer. The performance is evaluated through empirical experiments, and the results exceed those of popular imitation and reinforcement learning algorithms on the Waymax benchmark.

Enhance Planning with Physics-informed Safety Controller for End-to-end Autonomous Driving

May 01, 2024

Abstract:Recent years have seen growing research interest in applying Deep Neural Networks (DNNs) to autonomous vehicle technology. The trend started with perception and prediction a few years ago and is gradually extending to motion planning tasks. Although network performance improves over time, DNN planners inherit the natural drawbacks of deep learning. Learning-based planners are limited in achieving perfect accuracy on the training dataset, and network performance can be affected by the out-of-distribution problem. In this paper, we propose FusionAssurance, a novel trajectory-based end-to-end driving fusion framework that incorporates physics-informed control for safety assurance. By incorporating a Potential Field into Model Predictive Control, FusionAssurance is capable of navigating through scenarios that are not included in the training dataset and scenarios where neural networks fail to generalize. The effectiveness of the approach is demonstrated by extensive experiments under various scenarios on the CARLA benchmark.
