Sample-efficient Imitative Multi-token Decision Transformer for Generalizable Real World Driving


Jun 18, 2024

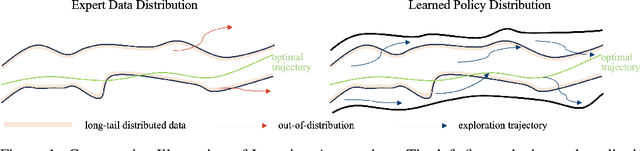

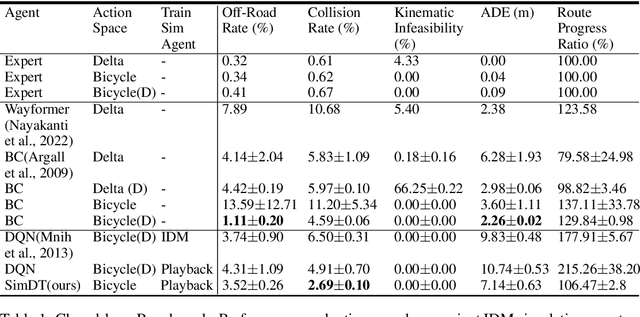

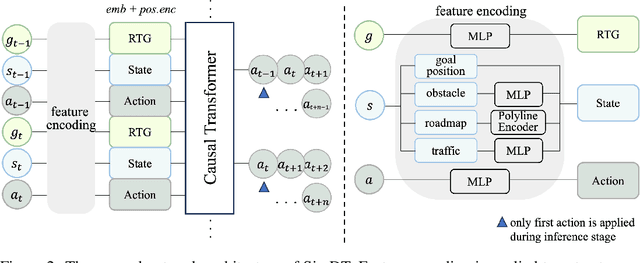

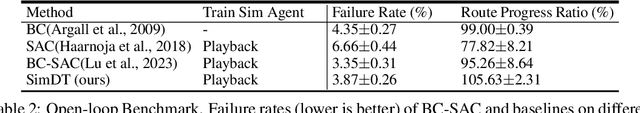

Reinforcement learning via sequence modeling has shown remarkable promise in autonomous systems, harnessing offline datasets to make informed decisions in simulated environments. However, the full potential of such methods in complex, dynamic environments remains to be discovered. In the autonomous driving domain, learning-based agents face significant challenges when transferring knowledge from simulated to real-world settings, and their performance is also significantly affected by data distribution shift. To address these issues, we propose the Sample-efficient Imitative Multi-token Decision Transformer (SimDT). SimDT introduces multi-token prediction, imitative online learning, and prioritized experience replay to the Decision Transformer. Its performance is evaluated through empirical experiments, and the results exceed those of popular imitation and reinforcement learning algorithms on the Waymax benchmark.
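
Of the three components named in the abstract, prioritized experience replay is the easiest to illustrate in isolation. The sketch below is a generic proportional-priority buffer, not SimDT's implementation: the class name `PrioritizedReplayBuffer`, the parameters `alpha` and `eps`, and the use of an absolute error as the priority signal are all assumptions made for illustration, and the importance-sampling correction used in many formulations is omitted for brevity.

```python
import random


class PrioritizedReplayBuffer:
    """Hypothetical fixed-size buffer that samples items in proportion to priority**alpha."""

    def __init__(self, capacity, alpha=0.6, eps=1e-6):
        self.capacity = capacity
        self.alpha = alpha      # 0 gives uniform sampling, 1 gives fully proportional sampling
        self.eps = eps          # keeps every priority strictly positive
        self.items = []
        self.priorities = []
        self.next_idx = 0       # slot to overwrite once the buffer is full

    def add(self, item):
        # New items receive the current maximum priority so they are likely to be replayed soon.
        priority = max(self.priorities, default=1.0)
        if len(self.items) < self.capacity:
            self.items.append(item)
            self.priorities.append(priority)
        else:
            self.items[self.next_idx] = item
            self.priorities[self.next_idx] = priority
        self.next_idx = (self.next_idx + 1) % self.capacity

    def sample(self, batch_size, rng=random):
        # Draw indices with replacement, weighted by priority**alpha.
        weights = [p ** self.alpha for p in self.priorities]
        idxs = rng.choices(range(len(self.items)), weights=weights, k=batch_size)
        return idxs, [self.items[i] for i in idxs]

    def update(self, idxs, errors):
        # After a training step, set each sampled item's priority from its new error.
        for i, err in zip(idxs, errors):
            self.priorities[i] = abs(err) + self.eps
```

In a training loop, one would typically call `add` for each new trajectory segment, `sample` to draw a batch, and `update` with the per-item losses from that batch, so that poorly predicted samples are revisited more often.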
