Zicheng Zhu

Multi-Agent Systems Shape Social Norms for Prosocial Behavior Change

Feb 07, 2026

Abstract:Social norm interventions are used to promote prosocial behaviors by highlighting prevalent actions, but their effectiveness is often limited in heterogeneous populations where shared understandings of desirable behaviors are lacking. This study explores whether multi-agent systems can establish "virtual social norms" to encourage donation behavior. We conducted an online experiment where participants interacted with a group of agents to discuss donation behaviors. Changes in perceived social norms, conformity, donation behavior, and user experience were measured pre- and post-discussion. Results show that multi-agent interactions effectively increased perceived social norms and donation willingness. Notably, in-group agents led to stronger perceived social norms, higher conformity, and greater donation increases compared to out-group agents. Our findings demonstrate the potential of multi-agent systems for creating social norm interventions and offer insights into leveraging social identity dynamics to promote prosocial behavior in virtual environments.

AI-exhibited Personality Traits Can Shape Human Self-concept through Conversations

Jan 19, 2026

Abstract:Recent Large Language Model (LLM) based AI can exhibit recognizable and measurable personality traits during conversations to improve user experience. However, as human understandings of their personality traits can be affected by their interaction partners' traits, a potential risk is that AI traits may shape and bias users' self-concept of their own traits. To explore the possibility, we conducted a randomized behavioral experiment. Our results indicate that after conversations about personal topics with an LLM-based AI chatbot using GPT-4o default personality traits, users' self-concepts aligned with the AI's measured personality traits. The longer the conversation, the greater the alignment. This alignment led to increased homogeneity in self-concepts among users. We also observed that the degree of self-concept alignment was positively associated with users' conversation enjoyment. Our findings uncover how AI personality traits can shape users' self-concepts through human-AI conversation, highlighting both risks and opportunities. We provide important design implications for developing more responsible and ethical AI systems.

Exploring the Effects of Chatbot Anthropomorphism and Human Empathy on Human Prosocial Behavior Toward Chatbots

Jun 25, 2025

Abstract:Chatbots are increasingly integrated into people's lives and are widely used to help people. Recently, there has also been growing interest in the reverse direction, in which humans help chatbots, due to a wide range of benefits including better chatbot performance, human well-being, and collaborative outcomes. However, little research has explored the factors that motivate people to help chatbots. To address this gap, we draw on the Computers Are Social Actors (CASA) framework to examine how chatbot anthropomorphism (including human-like identity, emotional expression, and non-verbal expression) influences human empathy toward chatbots and their subsequent prosocial behaviors and intentions. We also explore people's own interpretations of their prosocial behaviors toward chatbots. We conducted an online experiment (N = 244) in which chatbots made mistakes in a collaborative image labeling task and explained the reasons to participants. We then measured participants' prosocial behaviors and intentions toward the chatbots. Our findings revealed that human identity and emotional expression of chatbots increased participants' prosocial behavior and intention toward chatbots, with empathy mediating these effects. Qualitative analysis further identified two motivations for participants' prosocial behaviors: empathy for the chatbot and perceiving the chatbot as human-like. We discuss the implications of these results for understanding and promoting human prosocial behaviors toward chatbots.

Multi-Agents are Social Groups: Investigating Social Influence of Multiple Agents in Human-Agent Interactions

Nov 07, 2024

Abstract:Multi-agent systems - systems with multiple independent AI agents working together to achieve a common goal - are becoming increasingly prevalent in daily life. Drawing inspiration from the phenomenon of human group social influence, we investigate whether a group of AI agents can create social pressure on users to agree with them, potentially changing their stance on a topic. We conducted a study in which participants discussed social issues with either a single or multiple AI agents, and where the agents either agreed or disagreed with the user's stance on the topic. We found that conversing with multiple agents (holding conversation content constant) increased the social pressure felt by participants, and caused a greater shift in opinion towards the agents' stances on each topic. Our study shows the potential advantages of multi-agent systems over single-agent platforms in causing opinion change. We discuss design implications for possible multi-agent systems that promote social good, as well as the potential for malicious actors to use these systems to manipulate public opinion.

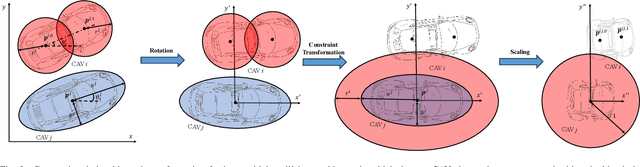

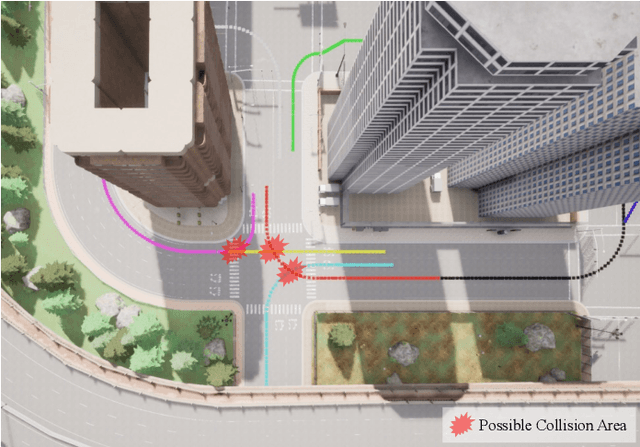

Improved Consensus ADMM for Cooperative Motion Planning of Large-Scale Connected Autonomous Vehicles with Limited Communication

Jan 17, 2024

Abstract:This paper investigates a cooperative motion planning problem for large-scale connected autonomous vehicles (CAVs) under limited communications, which addresses the challenges of high communication and computing resource requirements. Our proposed methodology incorporates a parallel optimization algorithm with improved consensus ADMM considering a more realistic locally connected topology network, and time complexity of O(N) is achieved by exploiting the sparsity in the dual update process. To further enhance the computational efficiency, we employ a lightweight evolution strategy for the dynamic connectivity graph of CAVs, and each sub-problem split from the consensus ADMM only requires managing a small group of CAVs. The proposed method implemented with the receding horizon scheme is validated thoroughly, and comparisons with existing numerical solvers and approaches demonstrate the efficiency of our proposed algorithm. Also, simulations on large-scale cooperative driving tasks involving 80 vehicles are performed in the high-fidelity CARLA simulator, which highlights the remarkable computational efficiency, scalability, and effectiveness of our proposed development. Demonstration videos are available at https://henryhcliu.github.io/icadmm_cmp_carla.
