Fumin Zhang

A Vision-Based Collision Sensing Method for Stable Circular Object Grasping with A Soft Gripper System

Aug 07, 2025

Abstract:External collisions with robot actuators typically pose risks when grasping circular objects. This work presents a vision-based sensing module capable of detecting collisions to maintain stable grasping with a soft gripper system. The system employs an eye-in-palm camera with a broad field of view to simultaneously monitor the motion of the fingers and the grasped object. Furthermore, we have developed a collision-rich grasping strategy to ensure the stability and security of the entire dynamic grasping process. A physical soft gripper was manufactured and affixed to a collaborative robotic arm to evaluate the performance of the collision detection mechanism. A response-time experiment confirmed that the system can react to collisions instantaneously. A dodging test demonstrated that the gripper can precisely detect the direction and scale of external collisions.

VIMS: A Visual-Inertial-Magnetic-Sonar SLAM System in Underwater Environments

Jun 18, 2025

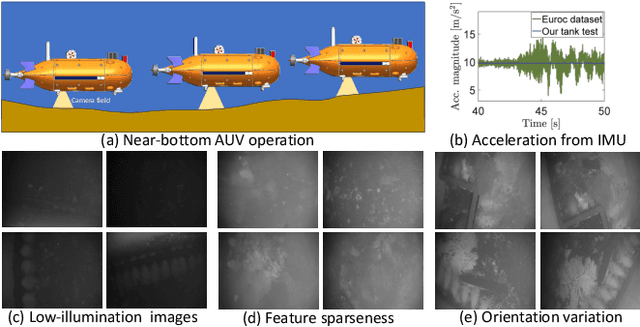

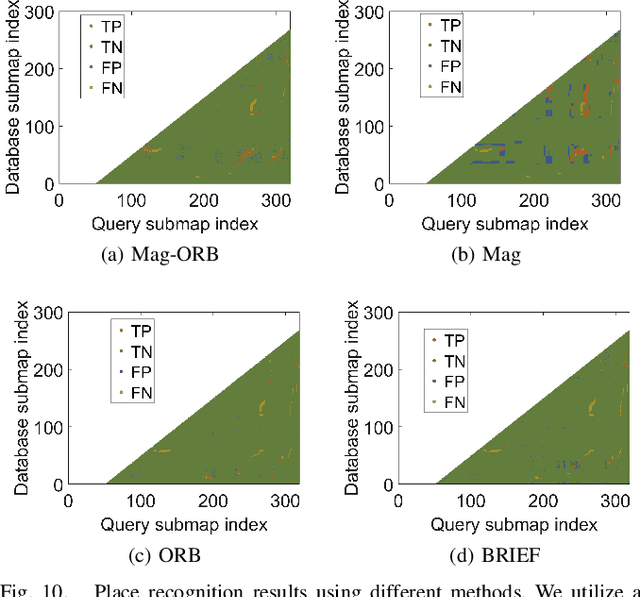

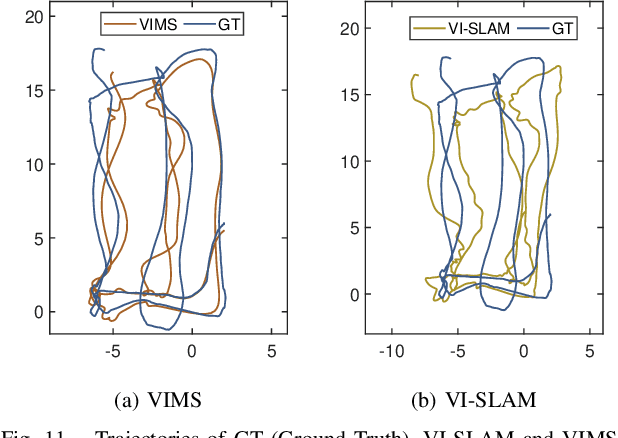

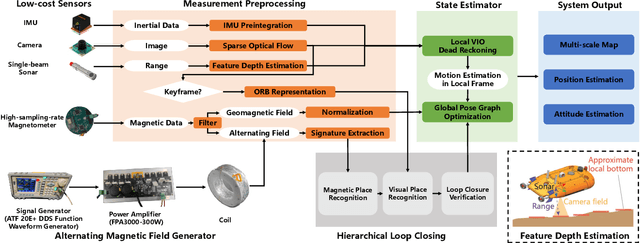

Abstract:In this study, we present a novel simultaneous localization and mapping (SLAM) system, VIMS, designed for underwater navigation. Conventional visual-inertial state estimators encounter significant practical challenges in perceptually degraded underwater environments, particularly in scale estimation and loop closing. To address these issues, we first propose leveraging a low-cost single-beam sonar to improve scale estimation. Then, VIMS integrates a high-sampling-rate magnetometer for place recognition by utilizing magnetic signatures generated by an economical magnetic field coil. Building on this, a hierarchical scheme is developed for visual-magnetic place recognition, enabling robust loop closure. Furthermore, VIMS achieves a balance between local feature tracking and descriptor-based loop closing, avoiding additional computational burden on the front end. Experimental results highlight the efficacy of the proposed VIMS, demonstrating significant improvements in both the robustness and accuracy of state estimation within underwater environments.
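As a rough illustration of how a single-beam sonar range could fix the metric scale of an up-to-scale visual estimate, the sketch below fits one scale factor by least squares. This is not the VIMS formulation; the function name `estimate_scale` and the sample values are hypothetical.

```python
def estimate_scale(visual_depths, sonar_ranges):
    """Return the scale s that minimizes sum((s * d_i - r_i) ** 2).

    visual_depths: up-to-scale depths d_i along the sonar beam (assumed input).
    sonar_ranges:  metric ranges r_i measured by the sonar at the same times.
    """
    if len(visual_depths) != len(sonar_ranges) or not visual_depths:
        raise ValueError("inputs must be non-empty and of equal length")
    numerator = sum(d * r for d, r in zip(visual_depths, sonar_ranges))
    denominator = sum(d * d for d in visual_depths)
    if denominator == 0.0:
        raise ValueError("visual depths are all zero")
    return numerator / denominator


# Hypothetical measurements: the visual depths are 2.5 times too small.
visual = [1.0, 2.0, 4.0]
sonar = [2.5, 5.0, 10.0]
scale = estimate_scale(visual, sonar)
```

A real estimator would fold such range constraints into the state optimization rather than solve for the scale in isolation.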

Design of a Formation Control System to Assist Human Operators in Flying a Swarm of Robotic Blimps

May 14, 2025

Abstract:Formation control is essential for swarm robotics, enabling coordinated behavior in complex environments. In this paper, we introduce a novel formation control system for an indoor blimp swarm using a specialized leader-follower approach enhanced with a dynamic leader-switching mechanism. This strategy allows any blimp to take on the leader role, distributing maneuvering demands across the swarm and enhancing overall formation stability. Only the leader blimp is manually controlled by a human operator, while follower blimps use onboard monocular cameras and a laser altimeter for relative position and altitude estimation. A leader-switching scheme is proposed to help the human operator maintain the stability of the swarm, especially during sharp turns. Experimental results confirm that the leader-switching mechanism effectively maintains stable formations and adapts to dynamic indoor environments while assisting the human operator.

SLABIM: A SLAM-BIM Coupled Dataset in HKUST Main Building

Feb 24, 2025

Abstract:Existing indoor SLAM datasets primarily focus on robot sensing, often lacking building architectures. To address this gap, we design and construct the first dataset to couple SLAM and BIM, named SLABIM. This dataset provides BIM and SLAM-oriented sensor data, both modeling a university building at HKUST. The as-designed BIM is decomposed and converted for ease of use. We employ a multi-sensor suite for multi-session data collection and mapping to obtain the as-built model. All the related data are timestamped and organized, enabling users to deploy and test effectively. Furthermore, we deploy advanced methods and report the experimental results on three tasks: registration, localization, and semantic mapping, demonstrating the effectiveness and practicality of SLABIM. We make our dataset open-source at https://github.com/HKUST-Aerial-Robotics/SLABIM.

BEINGS: Bayesian Embodied Image-goal Navigation with Gaussian Splatting

Sep 16, 2024

Abstract:Image-goal navigation enables a robot to reach the location where a target image was captured, using visual cues for guidance. However, current methods either rely heavily on data and computationally expensive learning-based approaches or lack efficiency in complex environments due to insufficient exploration strategies. To address these limitations, we propose Bayesian Embodied Image-goal Navigation Using Gaussian Splatting, a novel method that formulates ImageNav as an optimal control problem within a model predictive control framework. BEINGS leverages 3D Gaussian Splatting as a scene prior to predict future observations, enabling efficient, real-time navigation decisions grounded in the robot's sensory experiences. By integrating Bayesian updates, our method dynamically refines the robot's strategy without requiring extensive prior experience or data. Our algorithm is validated through extensive simulations and physical experiments, showcasing its potential for embodied robot systems in visually complex scenarios.

A Hybrid Controller Design for Human-Assistive Piloting of an Underactuated Blimp

Jun 15, 2024

Abstract:This paper introduces a novel solution to the manual control challenge for indoor blimps. The problem's complexity arises from the conflicting demands of executing human commands while maintaining stability through automatic control for underactuated robots. To tackle this challenge, we introduce an assisted-piloting hybrid controller with a preemptive mechanism that seamlessly switches between executing human commands and activating automatic stabilization control. Our algorithm ensures that the automatic stabilization controller operates within the time delay between human observation and perception, providing assistance to the driver in a way that remains imperceptible.

NuRF: Nudging the Particle Filter in Radiance Fields for Robot Visual Localization

Jun 01, 2024

Abstract:Can we localize a robot in radiance fields only using monocular vision? This study presents NuRF, a nudged particle filter framework for 6-DoF robot visual localization in radiance fields. NuRF sets anchors in SE(3) to leverage visual place recognition, which provides image comparisons to guide the sampling process. This guidance could improve the convergence and robustness of particle filters for robot localization. Additionally, an adaptive scheme is designed to enhance the performance of NuRF, thus enabling both global visual localization and local pose tracking. Real-world experiments are conducted with comprehensive tests to demonstrate the effectiveness of NuRF. The results showcase the advantages of NuRF in terms of accuracy and efficiency, including comparisons with alternative approaches. Furthermore, we report our findings for future studies and advancements in robot navigation in radiance fields.
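To make the nudging idea concrete, here is a minimal one-dimensional sketch: before weighting, a fraction of the particles is moved to anchor locations, so a poorly initialized filter can still reach the true state. It is a toy under stated assumptions, not the NuRF implementation; the 1D state, the Gaussian likelihood, the anchor values, and all names are hypothetical stand-ins for SE(3) poses, image comparisons, and place-recognition anchors.

```python
import math
import random


def nudged_pf_step(particles, likelihood, anchors, nudge_fraction, noise_std, rng):
    """One filter step: nudge, weight, resample, diffuse."""
    n = len(particles)
    particles = list(particles)
    # Nudge: move a fraction of the particles next to randomly chosen anchors.
    for i in range(int(nudge_fraction * n)):
        particles[i] = rng.choice(anchors) + rng.gauss(0.0, noise_std)
    # Weight every particle by the observation likelihood.
    weights = [likelihood(p) for p in particles]
    if sum(weights) <= 0.0:
        weights = [1.0] * n  # fall back to uniform weights
    # Resample in proportion to the weights, then add diffusion noise.
    resampled = rng.choices(particles, weights=weights, k=n)
    return [p + rng.gauss(0.0, noise_std) for p in resampled]


TRUE_POSITION = 7.0  # hypothetical ground truth


def gaussian_likelihood(x, sigma=0.5):
    return math.exp(-0.5 * ((x - TRUE_POSITION) / sigma) ** 2)


rng = random.Random(0)
# Poor initialization: every particle starts far from the true position.
particles = [rng.uniform(0.0, 1.0) for _ in range(200)]
anchors = [2.0, 7.2, 9.0]  # hypothetical place-recognition candidates
for _ in range(10):
    particles = nudged_pf_step(particles, gaussian_likelihood, anchors, 0.1, 0.1, rng)
estimate = sum(particles) / len(particles)
```

Without the nudge step, the particles would have to random-walk from the interval [0, 1] to the true position, which takes far longer with this diffusion noise.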

Speak the Same Language: Global LiDAR Registration on BIM Using Pose Hough Transform

May 07, 2024

Abstract:The construction and robotic sensing data originate from disparate sources and are associated with distinct frames of reference. The primary objective of this study is to align LiDAR point clouds with building information modeling (BIM) using a global point cloud registration approach, aimed at establishing a shared understanding between the two modalities, i.e., "speak the same language". To achieve this, we design a cross-modality registration method spanning from the front end to the back end. At the front end, we extract descriptors by identifying walls and capturing the intersected corners. Subsequently, at the back end, we employ the Hough transform to estimate multiple pose candidates. The final pose is verified by wall-pixel correlation. To evaluate the effectiveness of our method, we conducted real-world multi-session experiments in a large-scale university building, involving two different types of LiDAR sensors. We also report our findings and plan to make our collected dataset open-sourced.
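The voting step behind Hough-style pose estimation can be sketched in 2D as follows: every pairing of a scan corner with a map corner, under each candidate rotation, votes for the translation that would align the two, and the most-voted cells become pose candidates. This is a simplified illustration, not the paper's method; the function name, the 90-degree rotation step, the cell size, and the corner coordinates are assumptions, and the wall-pixel verification stage is omitted.

```python
import math
from collections import Counter


def hough_pose_candidates(scan_corners, map_corners, angle_step_deg=90,
                          cell=0.5, top_k=3):
    """Return the top_k ((angle_deg, tx, ty), votes) pose candidates."""
    votes = Counter()
    for angle in range(0, 360, angle_step_deg):
        theta = math.radians(angle)
        c, s = math.cos(theta), math.sin(theta)
        for sx, sy in scan_corners:
            # Rotate the scan corner into the candidate map orientation.
            rx, ry = c * sx - s * sy, s * sx + c * sy
            for mx, my in map_corners:
                # Translation that would place this scan corner on this map corner.
                tx, ty = mx - rx, my - ry
                votes[(angle, round(tx / cell), round(ty / cell))] += 1
    return [((angle, ix * cell, iy * cell), count)
            for (angle, ix, iy), count in votes.most_common(top_k)]


# Hypothetical corners: the scan is the map seen from a pose rotated by
# 90 degrees and translated by (2, 1).
map_corners = [(0, 0), (4, 0), (4, 3), (1, 5)]
scan_corners = [(-1, 2), (-1, -2), (2, -2), (4, 1)]
candidates = hough_pose_candidates(scan_corners, map_corners)
best_pose, best_votes = candidates[0]
```

Here the correct pose collects one vote per corner, while wrong pairings scatter across the accumulator; keeping several top cells mirrors the idea of carrying multiple candidates into a later verification stage.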

OceanPlan: Hierarchical Planning and Replanning for Natural Language AUV Piloting in Large-scale Unexplored Ocean Environments

Mar 22, 2024

Abstract:We develop a hierarchical LLM-task-motion planning and replanning framework to efficiently ground an abstracted human command into tangible Autonomous Underwater Vehicle (AUV) control through enhanced representations of the world. We also incorporate a holistic replanner to provide real-world feedback to all planners for robust AUV operation. While there has been extensive research on bridging the gap between LLMs and robotic missions, existing approaches cannot guarantee the success of AUV applications in the vast and unknown ocean environment. To tackle specific challenges in marine robotics, we design a hierarchical planner to compose executable motion plans, which achieves planning efficiency and solution quality by decomposing long-horizon missions into sub-tasks. At the same time, a real-time data stream is obtained by a replanner to address environmental uncertainties during plan execution. Experiments validate that our proposed framework delivers successful AUV performance on long-duration missions through natural language piloting.

Trust-Preserved Human-Robot Shared Autonomy enabled by Bayesian Relational Event Modeling

Nov 03, 2023

Abstract:Shared autonomy functions as a flexible framework that empowers robots to operate across a spectrum of autonomy levels, allowing for efficient task execution with minimal human oversight. However, humans might be intimidated by the autonomous decision-making capabilities of robots due to perceived risks and a lack of trust. This paper proposes a trust-preserved shared autonomy strategy that allows robots to seamlessly adjust their autonomy level, striving to optimize team performance and enhance their acceptance among human collaborators. By enhancing the Relational Event Modeling framework with Bayesian learning techniques, this paper enables dynamic inference of human trust based solely on time-stamped relational events within human-robot teams. Adopting a longitudinal perspective on trust development and calibration in human-robot teams, the proposed shared autonomy strategy enables robots to preserve human trust by not only passively adapting to it but also actively participating in trust repair when violations occur. We validate the effectiveness of the proposed approach through a user study on human-robot collaborative search and rescue scenarios. The objective and subjective evaluations demonstrate its merits over teleoperation in both task execution and user acceptability.
