Huan Yin

VIMS: A Visual-Inertial-Magnetic-Sonar SLAM System in Underwater Environments

Jun 18, 2025

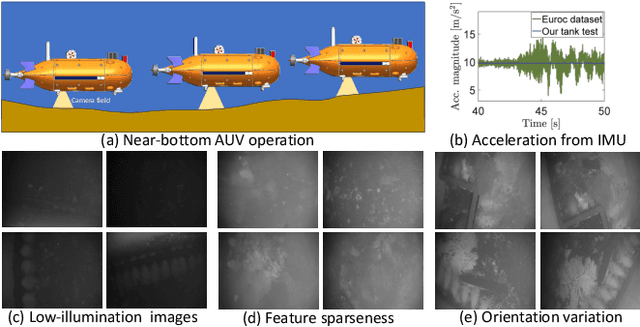

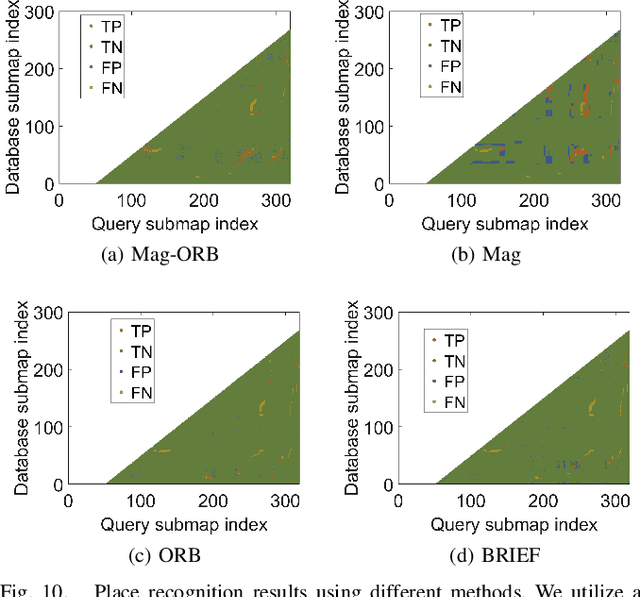

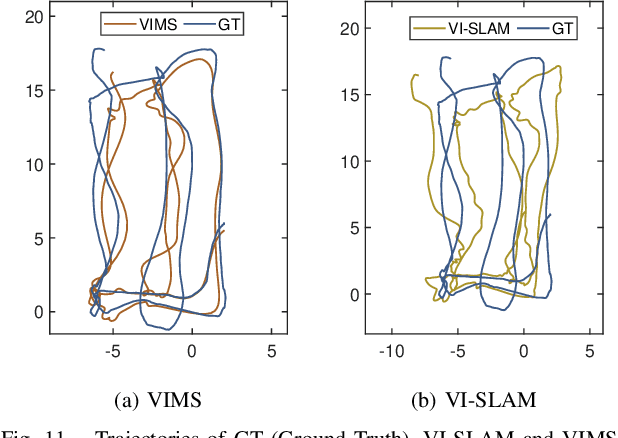

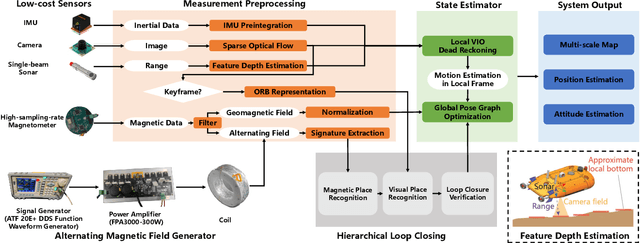

Abstract: In this study, we present a novel simultaneous localization and mapping (SLAM) system, VIMS, designed for underwater navigation. Conventional visual-inertial state estimators encounter significant practical challenges in perceptually degraded underwater environments, particularly in scale estimation and loop closing. To address these issues, we first propose leveraging a low-cost single-beam sonar to improve scale estimation. Then, VIMS integrates a high-sampling-rate magnetometer for place recognition by utilizing magnetic signatures generated by an economical magnetic field coil. Building on this, a hierarchical scheme is developed for visual-magnetic place recognition, enabling robust loop closure. Furthermore, VIMS achieves a balance between local feature tracking and descriptor-based loop closing, avoiding additional computational burden on the front end. Experimental results highlight the efficacy of the proposed VIMS, demonstrating significant improvements in both the robustness and accuracy of state estimation within underwater environments.

SLABIM: A SLAM-BIM Coupled Dataset in HKUST Main Building

Feb 24, 2025

Abstract: Existing indoor SLAM datasets primarily focus on robot sensing, often lacking building architectures. To address this gap, we design and construct the first dataset to couple SLAM and BIM, named SLABIM. This dataset provides BIM and SLAM-oriented sensor data, both modeling a university building at HKUST. The as-designed BIM is decomposed and converted for ease of use. We employ a multi-sensor suite for multi-session data collection and mapping to obtain the as-built model. All the related data are timestamped and organized, enabling users to deploy and test effectively. Furthermore, we deploy advanced methods and report the experimental results on three tasks: registration, localization and semantic mapping, demonstrating the effectiveness and practicality of SLABIM. We make our dataset open-source at https://github.com/HKUST-Aerial-Robotics/SLABIM.

Multi-cam Multi-map Visual Inertial Localization: System, Validation and Dataset

Dec 05, 2024

Abstract: Map-based localization is crucial for the autonomous movement of robots as it provides real-time positional feedback. However, existing VINS and SLAM systems cannot be directly integrated into the robot's control loop. Although VINS offers high-frequency position estimates, it suffers from drift in long-term operation. Moreover, the drift-free trajectory output by SLAM is post-processed with loop correction, which is non-causal. In practical control, it is impossible to update the current pose with future information. Furthermore, existing SLAM evaluation systems measure accuracy after aligning the entire trajectory, which overlooks the transformation error between the odometry start frame and the ground truth frame. To address these issues, we propose a multi-cam multi-map visual inertial localization system, which provides real-time, causal and drift-free position feedback to the robot control loop. Additionally, we analyze the error composition of map-based localization systems and propose a set of evaluation metrics suitable for measuring causal localization performance. To validate our system, we design a multi-camera IMU hardware setup and collect a long-term challenging campus dataset. Experimental results demonstrate the higher real-time localization accuracy of the proposed system. To foster community development, both the system and the dataset have been made open source at https://github.com/zoeylove/Multi-cam-Multi-map-VILO/tree/main.

BEINGS: Bayesian Embodied Image-goal Navigation with Gaussian Splatting

Sep 16, 2024

Abstract: Image-goal navigation enables a robot to reach the location where a target image was captured, using visual cues for guidance. However, current methods either rely heavily on data and computationally expensive learning-based approaches or lack efficiency in complex environments due to insufficient exploration strategies. To address these limitations, we propose Bayesian Embodied Image-goal Navigation Using Gaussian Splatting (BEINGS), a novel method that formulates ImageNav as an optimal control problem within a model predictive control framework. BEINGS leverages 3D Gaussian Splatting as a scene prior to predict future observations, enabling efficient, real-time navigation decisions grounded in the robot's sensory experiences. By integrating Bayesian updates, our method dynamically refines the robot's strategy without requiring extensive prior experience or data. Our algorithm is validated through extensive simulations and physical experiments, showcasing its potential for embodied robot systems in visually complex scenarios.

SLIM: Scalable and Lightweight LiDAR Mapping in Urban Environments

Sep 13, 2024

Abstract: LiDAR point cloud maps are extensively utilized on roads for robot navigation due to their high consistency. However, dense point clouds face challenges of high memory consumption and reduced maintainability for long-term operations. In this study, we introduce SLIM, a scalable and lightweight mapping system for long-term LiDAR mapping in urban environments. The system begins by parameterizing structural point clouds into lines and planes. These lightweight and structural representations meet the requirements of map merging, pose graph optimization, and bundle adjustment, ensuring incremental management and local consistency. For long-term operations, a map-centric nonlinear factor recovery method is designed to sparsify poses while preserving mapping accuracy. We validate the SLIM system with multi-session real-world LiDAR data from classical LiDAR mapping datasets, including KITTI, NCLT, and HeLiPR. The experiments demonstrate its capabilities in mapping accuracy, lightweightness, and scalability. Map re-use is also verified through map-based robot localization. Ultimately, with multi-session LiDAR data, the SLIM system provides a globally consistent map with low memory consumption (130 KB/km). We have made our code open-source to benefit the community.

NuRF: Nudging the Particle Filter in Radiance Fields for Robot Visual Localization

Jun 01, 2024

Abstract: Can we localize a robot in radiance fields only using monocular vision? This study presents NuRF, a nudged particle filter framework for 6-DoF robot visual localization in radiance fields. NuRF sets anchors in SE(3) to leverage visual place recognition, which provides image comparisons to guide the sampling process. This guidance could improve the convergence and robustness of particle filters for robot localization. Additionally, an adaptive scheme is designed to enhance the performance of NuRF, thus enabling both global visual localization and local pose tracking. Real-world experiments are conducted with comprehensive tests to demonstrate the effectiveness of NuRF. The results showcase the advantages of NuRF in terms of accuracy and efficiency, including comparisons with alternative approaches. Furthermore, we report our findings for future studies and advancements in robot navigation in radiance fields.

Speak the Same Language: Global LiDAR Registration on BIM Using Pose Hough Transform

May 07, 2024

Abstract: The construction and robotic sensing data originate from disparate sources and are associated with distinct frames of reference. The primary objective of this study is to align LiDAR point clouds with building information modeling (BIM) using a global point cloud registration approach, aimed at establishing a shared understanding between the two modalities, i.e., "speak the same language". To achieve this, we design a cross-modality registration method, spanning from the front end to the back end. At the front end, we extract descriptors by identifying walls and capturing the intersected corners. Subsequently, for the back-end pose estimation, we employ the Hough transform to estimate multiple pose candidates. The final pose is verified by wall-pixel correlation. To evaluate the effectiveness of our method, we conducted real-world multi-session experiments in a large-scale university building, involving two different types of LiDAR sensors. We also report our findings and plan to make our collected dataset open source.

Modeling Point Uncertainty in Radar SLAM

Feb 25, 2024

Abstract: While visual and laser-based simultaneous localization and mapping (SLAM) techniques have gained significant attention, radar SLAM remains a robust option for challenging conditions. This paper aims to improve the performance of radar SLAM by modeling point uncertainty. The basic SLAM system is a radar-inertial odometry (RIO) system that leverages velocity-aided radar points and high-frequency inertial measurements. We first propose to model the uncertainty of radar points in polar coordinates by considering the nature of radar sensing. Then, in the SLAM system, the uncertainty model is designed into the data association module and is incorporated to weight the motion estimation. Real-world experiments on public and self-collected datasets validate the effectiveness of the proposed models and approaches. The findings highlight the potential of incorporating radar point uncertainty modeling to improve the radar SLAM system in adverse environments.
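To illustrate what modeling point uncertainty in polar coordinates can look like in practice, here is a minimal Python sketch of standard first-order covariance propagation from range/azimuth noise to a 2D Cartesian covariance. It is not taken from the paper: the function name `polar_to_cartesian_cov`, the 2D simplification, and the assumption of independent range and azimuth noise are all illustrative choices.

```python
import math


def polar_to_cartesian_cov(r, theta, sigma_r, sigma_theta):
    """Propagate independent range/azimuth noise to a 2x2 x-y covariance.

    Hypothetical helper: assumes a 2D point (x, y) = (r cos(theta), r sin(theta))
    and uncorrelated noise with standard deviations sigma_r (m) and
    sigma_theta (rad). Uses the linearization Sigma_xy = J * Sigma_polar * J^T.
    """
    c, s = math.cos(theta), math.sin(theta)
    # Jacobian of (x, y) with respect to (r, theta)
    j11, j12 = c, -r * s
    j21, j22 = s, r * c
    var_r, var_t = sigma_r ** 2, sigma_theta ** 2
    sxx = j11 * j11 * var_r + j12 * j12 * var_t
    sxy = j11 * j21 * var_r + j12 * j22 * var_t
    syy = j21 * j21 * var_r + j22 * j22 * var_t
    return [[sxx, sxy], [sxy, syy]]


# A point 10 m straight ahead: range noise maps to x, azimuth noise to y.
cov = polar_to_cartesian_cov(10.0, 0.0, sigma_r=0.1, sigma_theta=0.02)
```

Under these assumptions the cross-range variance grows with the square of the range, which is one reason a per-point covariance is more informative than a single fixed noise value when weighting associations or residuals.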

Less is More: Physical-enhanced Radar-Inertial Odometry

Feb 03, 2024

Abstract: Radar offers the advantage of providing additional physical properties related to observed objects. In this study, we design a physical-enhanced radar-inertial odometry system that capitalizes on the Doppler velocities and radar cross-section information. The filter for static radar points, correspondence estimation, and residual functions are all strengthened by integrating the physical properties. We conduct experiments on both public datasets and our self-collected data, with different mobile platforms and sensor types. Our quantitative results demonstrate that the proposed radar-inertial odometry system outperforms alternative methods using the physical-enhanced components. Our findings also reveal that using the physical properties results in fewer radar points for odometry estimation, but the performance is still guaranteed and even improved, thus aligning with the "less is more" principle.
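As a rough illustration of how Doppler velocity can help separate static from moving radar points, the Python sketch below uses a common textbook relation: a stationary point should show a radial velocity equal to the negative projection of the sensor's own velocity onto the line of sight. This is not the paper's filter. The function names, the sign convention (positive Doppler means the point is receding), and the tolerance `tol` are assumptions made for the example.

```python
import math


def expected_static_doppler(point, ego_velocity):
    """Radial velocity a stationary point would show for a moving sensor.

    Assumes `point` and `ego_velocity` are 3D tuples in the sensor frame and
    that positive Doppler means the point is moving away from the sensor.
    """
    rng = math.sqrt(sum(p * p for p in point))
    if rng == 0.0:
        raise ValueError("point coincides with the sensor origin")
    direction = [p / rng for p in point]
    return -sum(d * v for d, v in zip(direction, ego_velocity))


def is_static(point, doppler, ego_velocity, tol=0.2):
    """Flag a point as static if its Doppler matches the ego-motion prediction."""
    return abs(doppler - expected_static_doppler(point, ego_velocity)) <= tol


# Sensor moving forward at 1 m/s; a wall point ahead appears to approach at 1 m/s.
wall_is_static = is_static((10.0, 0.0, 0.0), -1.0, (1.0, 0.0, 0.0))
```

A check of this kind discards points, so it is consistent with the abstract's observation that fewer radar points can still support accurate odometry.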

G3Reg: Pyramid Graph-based Global Registration using Gaussian Ellipsoid Model

Aug 22, 2023

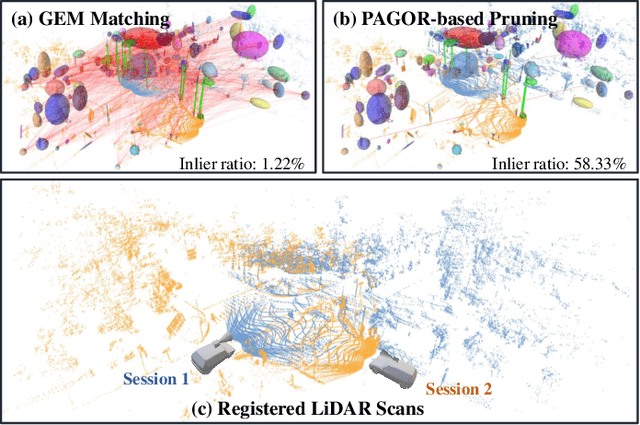

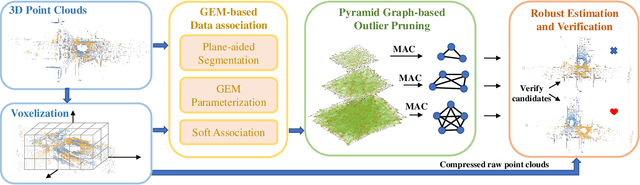

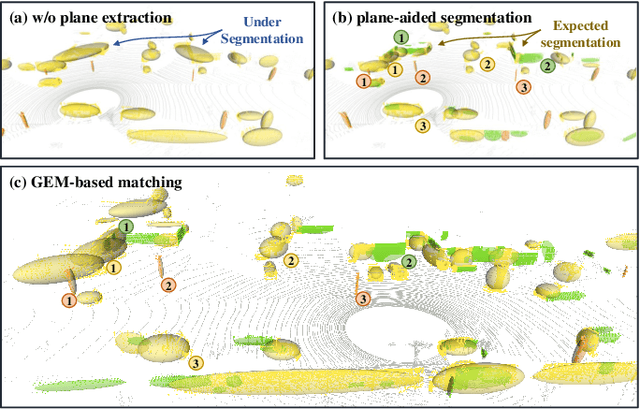

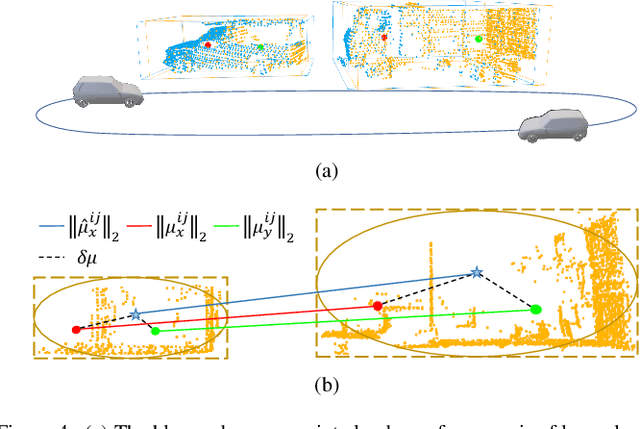

Abstract: This study introduces a novel framework, G3Reg, for fast and robust global registration of LiDAR point clouds. In contrast to conventional complex keypoints and descriptors, we extract fundamental geometric primitives including planes, clusters, and lines (PCL) from the raw point cloud to obtain low-level semantic segments. Each segment is formulated as a unified Gaussian Ellipsoid Model (GEM) by employing a probability ellipsoid to ensure the ground truth centers are encompassed with a certain degree of probability. Utilizing these GEMs, we then present a distrust-and-verify scheme based on a Pyramid Compatibility Graph for Global Registration (PAGOR). Specifically, we establish an upper bound, which can be traversed based on the confidence level for compatibility testing to construct the pyramid graph. Gradually, we solve multiple maximum cliques (MAC) for each level of the graph, generating numerous transformation candidates. In the verification phase, we adopt a precise and efficient metric for point cloud alignment quality, founded on geometric primitives, to identify the optimal candidate. The performance of the algorithm is extensively validated on three publicly available datasets and a self-collected multi-session dataset, without changing any parameter settings in the experimental evaluation. The results exhibit superior robustness and real-time performance of the G3Reg framework compared to state-of-the-art methods. Furthermore, we demonstrate the potential for integrating individual GEM and PAGOR components into other algorithmic frameworks to enhance their efficacy. To advance further research and promote community understanding, we have publicly shared the source code.