Xiaqing Ding

Multi-cam Multi-map Visual Inertial Localization: System, Validation and Dataset

Dec 05, 2024

Abstract:Map-based localization is crucial for the autonomous movement of robots because it provides real-time positional feedback. However, existing VINS and SLAM systems cannot be directly integrated into the robot's control loop. Although VINS offers high-frequency position estimates, it suffers from drift in long-term operation. The drift-free trajectory output by SLAM, in turn, is post-processed with loop correction and is therefore non-causal: in practical control, the current pose cannot be updated with future information. Furthermore, existing SLAM evaluation systems measure accuracy after aligning the entire trajectory, which overlooks the transformation error between the odometry start frame and the ground truth frame. To address these issues, we propose a multi-cam multi-map visual inertial localization system that provides real-time, causal and drift-free position feedback to the robot control loop. Additionally, we analyze the error composition of map-based localization systems and propose a set of evaluation metrics suitable for measuring causal localization performance. To validate our system, we design a multi-camera IMU hardware setup and collect a long-term, challenging campus dataset. Experimental results demonstrate the higher real-time localization accuracy of the proposed system. To foster community development, both the system and the dataset have been made open source at https://github.com/zoeylove/Multi-cam-Multi-map-VILO/tree/main.
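The point about whole-trajectory alignment can be illustrated with a small sketch. It is not the paper's metric: it assumes a 2D trajectory, a purely translational offset between the odometry start frame and the ground truth frame, and a simple centroid alignment in place of a full rigid alignment. All names and values are hypothetical.

```python
import math

def translation_rmse(estimated, reference):
    """Root-mean-square position error between two equally long 2D trajectories."""
    squared = [
        (ex - rx) ** 2 + (ey - ry) ** 2
        for (ex, ey), (rx, ry) in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared) / len(squared))

def align_by_centroid(estimated, reference):
    """Shift the estimate so that its centroid matches the reference centroid.

    This uses the whole trajectory, so it is a non-causal, post-hoc alignment.
    """
    n = len(estimated)
    dx = sum(r[0] - e[0] for e, r in zip(estimated, reference)) / n
    dy = sum(r[1] - e[1] for e, r in zip(estimated, reference)) / n
    return [(x + dx, y + dy) for x, y in estimated]

# Hypothetical ground truth and an estimate that is perfect except for a
# constant offset between the odometry start frame and the ground truth frame.
ground_truth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.5), (3.0, 1.5)]
frame_offset = (0.3, -0.4)
estimated = [(x + frame_offset[0], y + frame_offset[1]) for x, y in ground_truth]

error_without_alignment = translation_rmse(estimated, ground_truth)
error_after_alignment = translation_rmse(
    align_by_centroid(estimated, ground_truth), ground_truth
)
```

In this toy case the aligned error vanishes while the unaligned error equals the norm of the frame offset, which is the error a controller consuming the poses in real time would actually see.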

Leveraging BEV Representation for 360-degree Visual Place Recognition

May 23, 2023

Abstract:This paper investigates the advantages of using Bird's Eye View (BEV) representation in 360-degree visual place recognition (VPR). We propose a novel network architecture that utilizes the BEV representation in feature extraction, feature aggregation, and vision-LiDAR fusion, which bridges visual cues and spatial awareness. Our method extracts image features using standard convolutional networks and combines the features according to pre-defined 3D grid spatial points. To alleviate the mechanical and time misalignments between cameras, we further introduce deformable attention to learn the compensation. Upon the BEV feature representation, we then employ the polar transform and the Discrete Fourier transform for aggregation, which is shown to be rotation-invariant. In addition, the image and point cloud cues can be easily stated in the same coordinates, which benefits sensor fusion for place recognition. The proposed BEV-based method is evaluated in ablation and comparative studies on two datasets, including on-the-road and off-the-road scenarios. The experimental results verify the hypothesis that BEV can benefit VPR by its superior performance compared to baseline methods. To the best of our knowledge, this is the first trial of employing BEV representation in this task.
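The rotation invariance claimed for the polar transform followed by the Discrete Fourier transform rests on a standard property: after a polar transform, a rotation about the origin becomes a circular shift along the angular axis, and the DFT magnitude is unchanged by circular shifts. The sketch below checks that property on a single 1D angular profile. It is an illustration only, not the network's aggregation layer, and the profile values are made up.

```python
import cmath
import math

def dft_magnitude(signal):
    """Magnitude of the 1-D discrete Fourier transform of a real sequence."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n)
    ]

def circular_shift(signal, shift):
    """Rotate the sequence by `shift` bins, wrapping around the end."""
    shift %= len(signal)
    return signal[-shift:] + signal[:-shift]

# Hypothetical feature values sampled along the angular axis of a polar BEV grid.
angular_profile = [0.2, 1.0, 0.7, 0.1, 0.0, 0.4, 0.9, 0.3]
# A yaw rotation of three angular bins appears as a circular shift.
rotated_profile = circular_shift(angular_profile, 3)

descriptor_a = dft_magnitude(angular_profile)
descriptor_b = dft_magnitude(rotated_profile)
max_difference = max(abs(a - b) for a, b in zip(descriptor_a, descriptor_b))
```

The two profiles differ element by element, yet their magnitude spectra agree up to floating-point error, so a descriptor built from the magnitudes does not depend on the heading at which the place was observed.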

DXQ-Net: Differentiable LiDAR-Camera Extrinsic Calibration Using Quality-aware Flow

Mar 17, 2022

Abstract:Accurate LiDAR-camera extrinsic calibration is a precondition for many multi-sensor systems in mobile robots. Most calibration methods rely on laborious manual operations and calibration targets. When working online, calibration methods should be able to extract information from the environment to construct the cross-modal data association. Convolutional neural networks (CNNs) have powerful feature extraction ability and have been used for calibration. However, most past methods solve for the extrinsic parameters as a regression task, without considering the geometric constraints involved. In this paper, we propose a novel end-to-end extrinsic calibration method named DXQ-Net, using a differentiable pose estimation module for generalization. We formulate a probabilistic model for LiDAR-camera calibration flow, yielding a prediction of uncertainty to measure the quality of LiDAR-camera data association. Testing experiments illustrate that our method achieves performance competitive with other methods for the translation component and state-of-the-art performance for the rotation component. Generalization experiments illustrate that the generalization performance of our method is significantly better than that of other deep learning-based methods.

Translation Invariant Global Estimation of Heading Angle Using Sinogram of LiDAR Point Cloud

Mar 02, 2022

Abstract:Global point cloud registration is an essential module for localization, whose main difficulty lies in estimating the rotation globally without an initial value. With the aid of gravity alignment, the degrees of freedom in point cloud registration can be reduced to 4DoF, in which only the heading angle is required for rotation estimation. In this paper, we propose a fast and accurate global heading angle estimation method for gravity-aligned point clouds. Our key idea is to generate a translation-invariant representation based on the Radon Transform, allowing us to solve the decoupled heading angle globally with circular cross-correlation. In addition, for heading angle estimation between point clouds with different distributions, we implement this heading angle estimator as a differentiable module to train a feature extraction network end-to-end. The experimental results validate the effectiveness of the proposed method in heading angle estimation and show better performance compared with other methods.
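The circular cross-correlation step can be sketched as follows. This is not the authors' implementation: it assumes that each point cloud has already been reduced to a 1D angular signature (standing in for the translation-invariant representation), that a heading change appears as a circular shift of that signature, and that 36 bins of 10 degrees are used. The signature values are synthetic.

```python
import math

def circular_cross_correlation(reference, query):
    """Correlation score for every circular shift of `query` against `reference`."""
    n = len(reference)
    return [
        sum(reference[i] * query[(i + shift) % n] for i in range(n))
        for shift in range(n)
    ]

def estimate_shift(reference, query):
    """Return the circular shift (in bins) that best aligns `query` with `reference`."""
    scores = circular_cross_correlation(reference, query)
    return max(range(len(scores)), key=scores.__getitem__)

NUM_BINS = 36  # assumed angular resolution: 10 degrees per bin

# Synthetic, non-periodic angular signature with two lobes of different width.
reference_signature = [
    math.exp(-((i - 5) ** 2) / 8.0) + 0.5 * math.exp(-((i - 20) ** 2) / 18.0)
    for i in range(NUM_BINS)
]

# Simulate a heading change of 7 bins by circularly shifting the signature.
true_shift = 7
query_signature = reference_signature[-true_shift:] + reference_signature[:-true_shift]

estimated_shift = estimate_shift(reference_signature, query_signature)
estimated_heading_deg = estimated_shift * 360.0 / NUM_BINS
```

Because every possible shift is scored, no initial guess of the heading is needed, which is what makes the estimate global. The direct double loop costs O(n^2); an FFT-based correlation gives the same scores in O(n log n).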

Robust localization for planar moving robot in changing environment: A perspective on density of correspondence and depth

Nov 01, 2020

Abstract:Visual localization for planar moving robots is important to various indoor service robotic applications. To handle the textureless areas and frequent human activities in indoor environments, a novel robust visual localization algorithm that leverages dense correspondence and sparse depth for planar moving robots is proposed. The key component is a minimal solution which computes the absolute camera pose with one 3D-2D correspondence and one 2D-2D correspondence. The advantages are twofold. First, the robustness is enhanced because the sample set for pose estimation is maximal, utilizing all correspondences with or without depth. Second, no extra effort for dense map construction is required to exploit dense correspondences for handling textureless and repetitive texture scenes. This is meaningful because building a dense map is computationally expensive, especially at large scale. Moreover, a probabilistic analysis among different solutions is presented and an automatic solution selection mechanism is designed to maximize the success rate by selecting appropriate solutions for different environmental characteristics. Finally, a complete visual localization pipeline considering situations from the perspective of correspondence and depth density is summarized and validated on both simulation and a public real-world indoor localization dataset. The code is released on GitHub.

Improving the generalization of network based relative pose regression: dimension reduction as a regularizer

Oct 24, 2020

Abstract:Visual localization occupies an important position in many areas such as Augmented Reality, robotics and 3D reconstruction. The state-of-the-art visual localization methods perform pose estimation using geometry-based solvers within the RANSAC framework. However, these methods require accurate pixel-level matching at high image resolution, which is hard to satisfy under significant changes in appearance, dynamics or viewpoint. End-to-end learning-based regression networks circumvent the requirement for precise pixel-level correspondences, but generalize poorly across scenes. In this paper, we explicitly add a learnable matching layer within the network to isolate the pose regression solver from the absolute image feature values, and apply dimension regularization on both the correlation feature channel and the image scale to further improve generalization and robustness to large viewpoint change. We implement this dimension regularization strategy within a two-layer pyramid-based framework to regress the localization results from coarse to fine. In addition, the depth information is fused for absolute translational scale recovery. Through experiments on real-world RGBD datasets we validate the effectiveness of our design in terms of improving both generalization performance and robustness towards viewpoint change, and also show the potential of regression-based visual localization networks in challenging situations that are difficult for geometry-based visual localization methods.

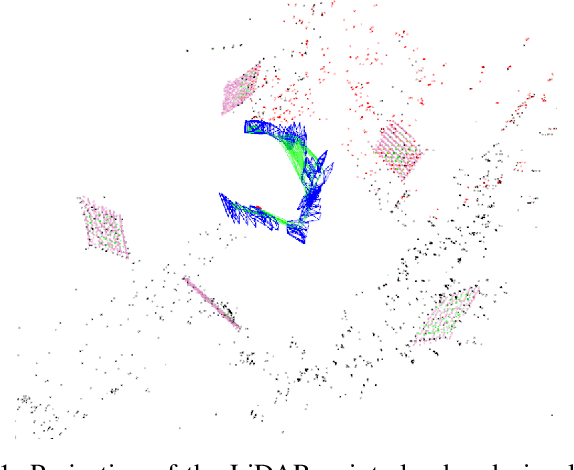

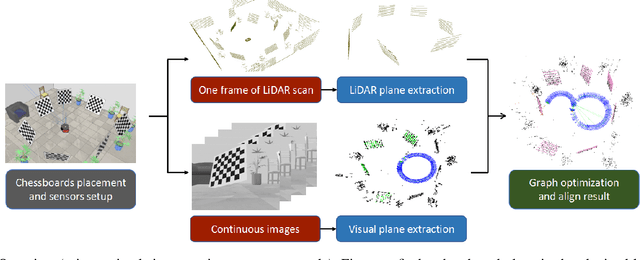

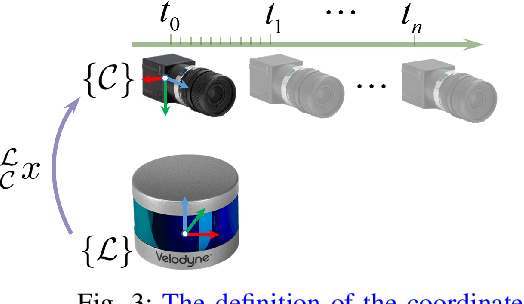

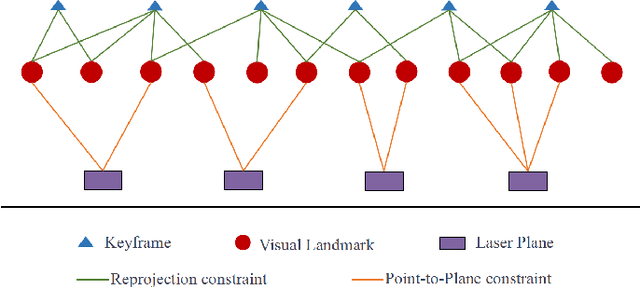

Spatiotemporal Decoupling Based LiDAR-Camera Calibration under Arbitrary Configurations

Mar 14, 2019

Abstract:LiDAR-camera calibration is a precondition for many heterogeneous systems that fuse data from LiDAR and camera. However, the constraint from the common field of view and the requirement for strict time synchronization make the calibration a challenging problem. In this paper, we propose a hybrid LiDAR-camera calibration method aiming to solve these two difficulties. The configuration between LiDAR and camera is free from their common field of view as we move the camera to cover the scenario observed by LiDAR. 3D visual reconstruction of the environment can be achieved from the sequential visual images obtained by the moving camera, which later can be aligned with the single 3D laser scan captured when both the scene and the equipment are stationary. Under this design, our method can further get rid of the influence from time synchronization between LiDAR and camera. Moreover, the extended field of view obtained by the moving camera can improve the calibration accuracy. We derive the conditions of minimal observability for our method and discuss the influence on calibration accuracy from different placements of chessboards, which can be utilized as a guideline for designing high-accuracy calibration procedures. We validate our method on both simulation platform and real-world datasets. Experiments show that our method can achieve higher accuracy than other comparable methods.

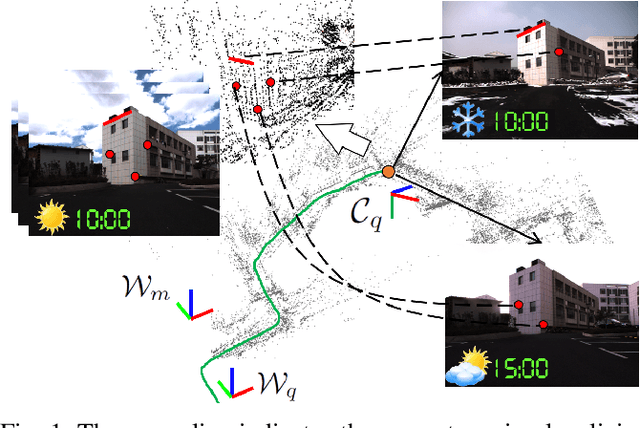

Communication constrained cloud-based long-term visual localization in real time

Mar 10, 2019

Abstract:Visual localization is one of the primary capabilities for mobile robots. Long-term visual localization in real time is particularly challenging, because the robot is required to efficiently localize itself using visual data whose appearance may change significantly over time. In this paper, we propose a cloud-based visual localization system targeting long-term localization in real time. On the robot, we employ two estimators to achieve accurate and real-time performance. One is a sliding-window based visual inertial odometry, which integrates constraints from consecutive observations and self-motion measurements, as well as the constraints induced by localization on the cloud. This estimator builds a local visual submap as the virtual observation, which is then sent to the cloud as new localization constraints. The other is a delayed-state Extended Kalman Filter that fuses the pose of the robot localized from the cloud, the local odometry and the high-frequency inertial measurements. On the cloud, we propose a longer sliding-window based localization method to aggregate the virtual observations for a larger field of view, leading to more robust alignment between virtual observations and the map. Under this architecture, the robot can achieve drift-free and real-time localization using onboard resources even in a network with limited bandwidth, high latency and packet loss, which enables autonomous navigation in real-world environments. We evaluate the effectiveness of our system on a dataset with challenging seasonal and illumination variations. We further validate the robustness of the system under challenging network conditions.

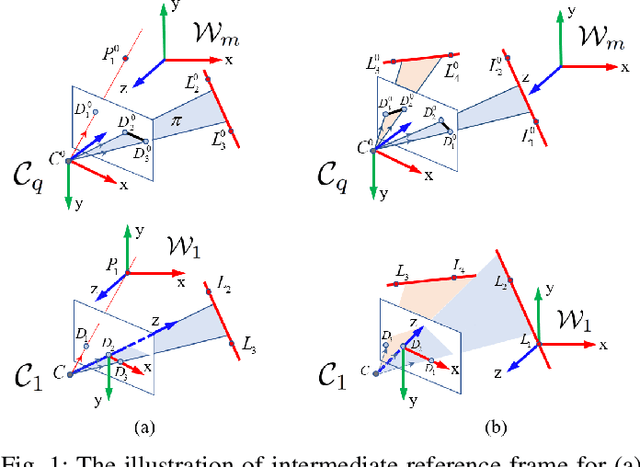

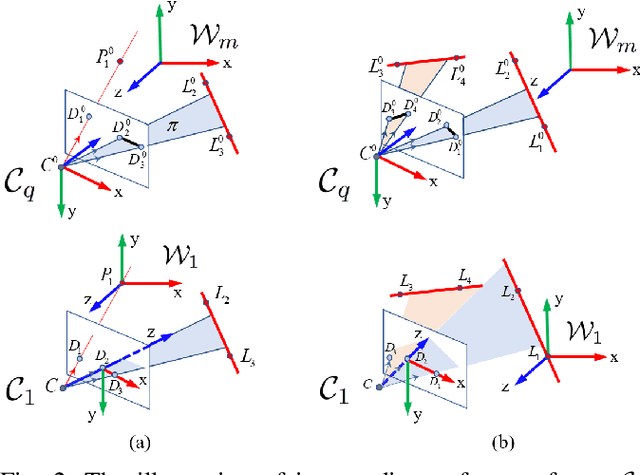

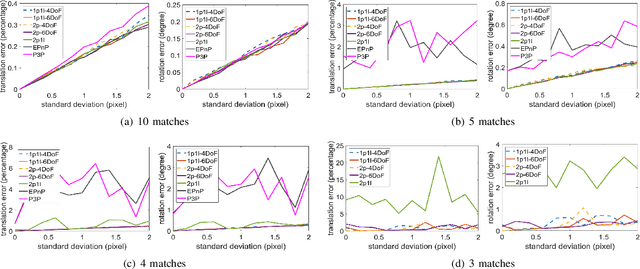

2-Entity RANSAC for robust visual localization in changing environment

Mar 10, 2019

Abstract:Visual localization has attracted considerable attention due to its low-cost and stable sensor, which is desired in many applications, such as autonomous driving, inspection robots and unmanned aerial vehicles. However, current visual localization methods still struggle with environmental changes across weather conditions and seasons, as there is significant appearance variation between the map and the query image. The crucial challenge in this situation is that the percentage of outliers, i.e. incorrect feature matches, is high. In this paper, we derive minimal closed-form solutions for 3D-2D localization with the aid of inertial measurements, using only two point matches, or one point match and one line match. These solutions are further utilized in the proposed 2-entity RANSAC, which is more robust to outliers because both line and point features can be used simultaneously and the number of matches required for pose calculation is reduced. Furthermore, we introduce three feature sampling strategies with different advantages, enabling an automatic selection mechanism. With this mechanism, our 2-entity RANSAC can adapt to environments with different distributions of feature types in different segments. Finally, we evaluate the method on both synthetic and real-world datasets, validating its performance and effectiveness in inter-session scenarios.
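Why a smaller minimal sample helps under a high outlier ratio follows from the standard RANSAC iteration bound N = log(1 - p) / log(1 - w^s), where w is the inlier ratio, s the number of matches per sample and p the desired probability of drawing at least one all-inlier sample. The sketch below evaluates that bound. The 30% inlier ratio, the 99% success probability and the three-match baseline are illustrative assumptions, not figures from the paper.

```python
import math

def ransac_iterations(inlier_ratio, sample_size, success_probability=0.99):
    """Iterations needed so that, with the given probability, at least one
    random sample of `sample_size` matches contains only inliers."""
    all_inlier_probability = inlier_ratio ** sample_size
    return math.ceil(
        math.log(1.0 - success_probability) / math.log(1.0 - all_inlier_probability)
    )

# Assumed scenario: only 30% of the feature matches are correct.
assumed_inlier_ratio = 0.3

iterations_two_entity = ransac_iterations(assumed_inlier_ratio, sample_size=2)
# Assumed baseline: a conventional solver that needs three matches per sample.
iterations_three_point = ransac_iterations(assumed_inlier_ratio, sample_size=3)
```

Under these assumptions the two-match sampler needs far fewer iterations than the three-match baseline, and the gap widens as the inlier ratio drops because the all-inlier probability shrinks as w^s.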

Multi-session Map Construction in Outdoor Dynamic Environment

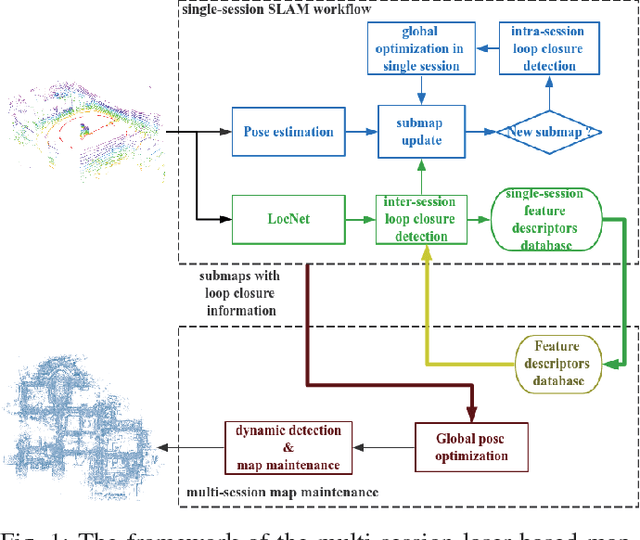

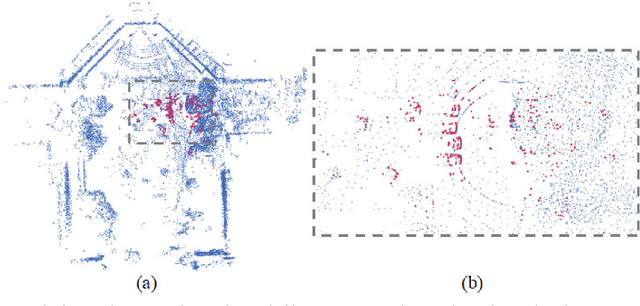

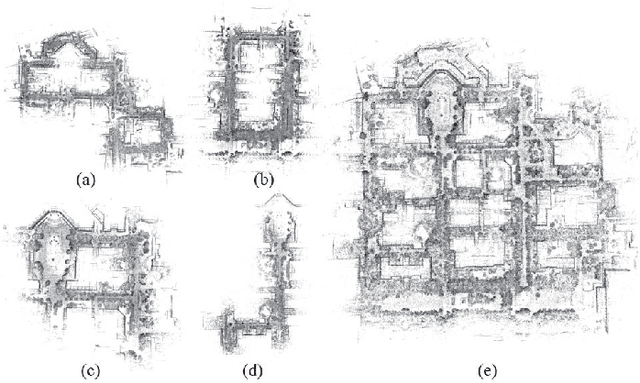

Jul 21, 2018

Abstract:Map construction in large-scale outdoor environments is important for robots to robustly fulfill their tasks. Many sessions of data should be merged to distinguish low dynamics in the map, which otherwise might degrade the performance of localization and navigation algorithms. In this paper we propose a method for multi-session map construction in large-scale outdoor environments using 3D LiDAR. To efficiently align the maps from different sessions, a laser-based loop closure detection method is integrated and the sequential information within the submaps is utilized for higher robustness. Furthermore, a dynamic detection method is proposed to detect dynamics in the overlapping areas among sessions of maps. We test the method in a real-world environment with a VLP-16 Velodyne LiDAR, and the experimental results demonstrate the validity and robustness of the proposed method.
