Spatiotemporal Decoupling Based LiDAR-Camera Calibration under Arbitrary Configurations

Mar 14, 2019

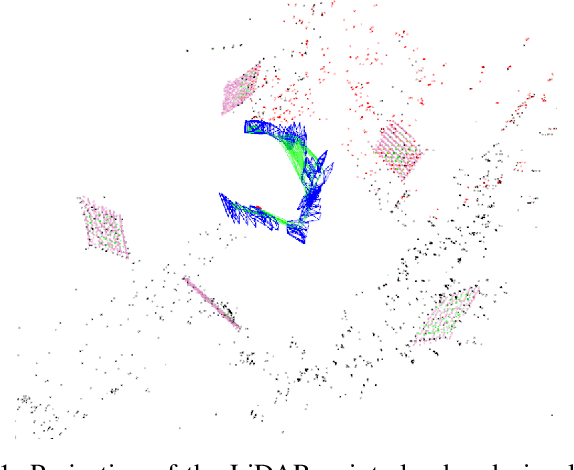

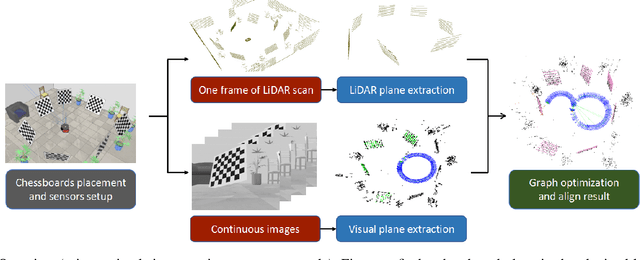

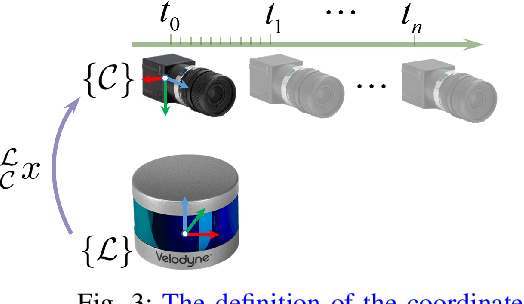

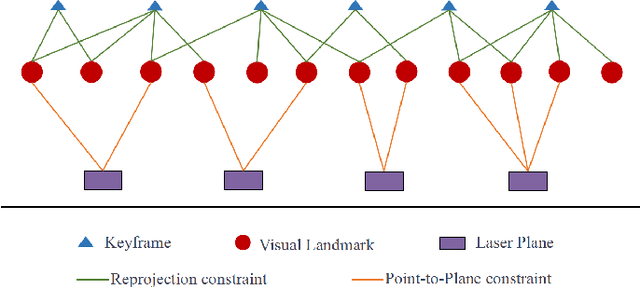

LiDAR-camera calibration is a prerequisite for many heterogeneous systems that fuse LiDAR and camera data. However, the need for a common field of view and for strict time synchronization makes calibration challenging. In this paper, we propose a hybrid LiDAR-camera calibration method that addresses both difficulties. The LiDAR and camera need not share a common field of view, because we move the camera to cover the scene observed by the LiDAR. A 3D visual reconstruction of the environment is obtained from the image sequence captured by the moving camera, and is then aligned with a single 3D laser scan captured while both the scene and the equipment are stationary. With this design, our method also removes the dependence on time synchronization between the LiDAR and the camera. Moreover, the extended field of view obtained by the moving camera can improve calibration accuracy. We derive the minimal observability conditions for our method and discuss how different chessboard placements affect calibration accuracy, which can serve as a guideline for designing high-accuracy calibration procedures. We validate our method on both a simulation platform and real-world datasets. Experiments show that our method achieves higher accuracy than other comparable methods.
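The alignment step described in the abstract can be illustrated with a generic rigid registration. The sketch below is not the paper's algorithm: it assumes that matched 3D points (for example, chessboard features) are already available in both the visual reconstruction and the laser scan, that the reconstruction is at metric scale, and it uses the standard Kabsch least-squares solution. The function name `rigid_align` and the synthetic data are hypothetical, chosen only for illustration.

```python
import numpy as np


def rigid_align(src, dst):
    """Least-squares rotation R and translation t with dst ~= R @ src + t.

    Standard Kabsch solution; assumes src[i] and dst[i] are matched 3D points.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant -1) in the SVD solution.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


# Synthetic check: points in a hypothetical "reconstruction" frame are mapped
# into a hypothetical "LiDAR" frame by a known transform, then recovered.
rng = np.random.default_rng(0)
points_cam = rng.uniform(-1.0, 1.0, size=(12, 3))
angle = np.deg2rad(30.0)
R_true = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
t_true = np.array([0.5, -0.2, 1.0])
points_lidar = points_cam @ R_true.T + t_true

R_est, t_est = rigid_align(points_cam, points_lidar)
```

With noise-free correspondences the estimate matches the true transform to numerical precision. Real data would add noise and outliers, so a robust or iterative refinement would typically follow such a closed-form initial guess.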
