Yiyuan Pan

Multi-cam Multi-map Visual Inertial Localization: System, Validation and Dataset

Dec 05, 2024

Abstract: Map-based localization is crucial for the autonomous movement of robots, as it provides real-time positional feedback. However, existing VINS and SLAM systems cannot be directly integrated into the robot's control loop. Although VINS offers high-frequency position estimates, it suffers from drift in long-term operation. The drift-free trajectory output by SLAM, in turn, is post-processed with loop correction, which is non-causal: in practical control, it is impossible to update the current pose with future information. Furthermore, existing SLAM evaluation systems measure accuracy after aligning the entire trajectory, which overlooks the transformation error between the odometry start frame and the ground truth frame. To address these issues, we propose a multi-cam multi-map visual inertial localization system, which provides real-time, causal and drift-free position feedback to the robot control loop. Additionally, we analyze the error composition of map-based localization systems and propose a set of evaluation metrics suitable for measuring causal localization performance. To validate our system, we design a multi-camera IMU hardware setup and collect a challenging long-term campus dataset. Experimental results demonstrate the higher real-time localization accuracy of the proposed system. To foster community development, both the system and the dataset have been made open source at https://github.com/zoeylove/Multi-cam-Multi-map-VILO/tree/main.
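The point about whole-trajectory alignment hiding the start-frame error can be illustrated with a minimal sketch. This is not the paper's metric set: the 2D toy trajectory, the function names `rmse` and `align_rigid_2d`, and the chosen offset are all assumptions made for illustration. The estimate is the ground truth expressed in a slightly rotated and shifted start frame, so the error is zero after alignment but clearly non-zero when the poses are compared as they would be fed to a controller.

```python
import math

def rmse(est, gt):
    """Root-mean-square position error between paired 2D points."""
    sq = [(ex - gx) ** 2 + (ey - gy) ** 2 for (ex, ey), (gx, gy) in zip(est, gt)]
    return math.sqrt(sum(sq) / len(sq))

def align_rigid_2d(est, gt):
    """Least-squares rotation + translation mapping est onto gt (2D closed form)."""
    n = len(est)
    ecx = sum(p[0] for p in est) / n
    ecy = sum(p[1] for p in est) / n
    gcx = sum(p[0] for p in gt) / n
    gcy = sum(p[1] for p in gt) / n
    s_dot = 0.0
    s_cross = 0.0
    for (ex, ey), (gx, gy) in zip(est, gt):
        ax, ay = ex - ecx, ey - ecy   # centered estimate
        bx, by = gx - gcx, gy - gcy   # centered ground truth
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    return [(c * (ex - ecx) - s * (ey - ecy) + gcx,
             s * (ex - ecx) + c * (ey - ecy) + gcy) for ex, ey in est]

# Hypothetical ground-truth path and an estimate whose start frame is off
# by a 0.1 rad rotation and a (0.5, -0.3) m translation (assumed values).
gt = [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0), (3.0, 1.0), (4.0, 3.0)]
c, s = math.cos(0.1), math.sin(0.1)
est = [(c * x - s * y + 0.5, s * x + c * y - 0.3) for x, y in gt]

causal_error = rmse(est, gt)                        # compared as delivered
aligned_error = rmse(align_rigid_2d(est, gt), gt)   # after global alignment
print(f"unaligned RMSE: {causal_error:.3f} m, aligned RMSE: {aligned_error:.2e} m")
```

The alignment step uses every pose of the trajectory, including future ones, which is why an aligned error of this kind says little about the accuracy available to the control loop at run time.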

Planning from Imagination: Episodic Simulation and Episodic Memory for Vision-and-Language Navigation

Nov 30, 2024

Abstract: Humans navigate unfamiliar environments using the capabilities of episodic simulation and episodic memory. Developing imagination-based memory, analogous to episodic simulation and episodic memory, can enhance embodied agents' comprehension of the complex relationship between environments and objects. However, existing Vision-and-Language Navigation (VLN) agents lack such a mechanism. We propose a novel architecture to help agents build a recurrent imaginative memory system. Specifically, the agent maintains a reality-imagination hybrid global memory during navigation and expands the memory map through imaginative mechanisms and navigation actions. Correspondingly, we design a series of pre-training tasks to help the agent acquire fine-grained imaginative abilities. Our agents improve the state-of-the-art (SoTA) success rate (SR) by 7% while simultaneously imagining high-fidelity RGB representations for future scenes.

FLAME: Learning to Navigate with Multimodal LLM in Urban Environments

Aug 20, 2024

Abstract: Large Language Models (LLMs) have demonstrated potential in Vision-and-Language Navigation (VLN) tasks, yet current applications face challenges. While LLMs excel in general conversation scenarios, they struggle with specialized navigation tasks, yielding suboptimal performance compared to specialized VLN models. We introduce FLAME (FLAMingo-Architected Embodied Agent), a novel Multimodal LLM-based agent and architecture designed for urban VLN tasks that efficiently handles multiple observations. Our approach implements a three-phase tuning technique for effective adaptation to navigation tasks: single perception tuning for street view description, multiple perception tuning for trajectory summarization, and end-to-end training on VLN datasets. The augmented datasets are synthesized automatically. Experimental results demonstrate FLAME's superiority over existing methods, surpassing state-of-the-art methods by a 7.3% increase in task completion rate on the Touchdown dataset. This work showcases the potential of Multimodal LLMs (MLLMs) in complex navigation tasks, representing an advancement towards practical applications of MLLMs in embodied AI. Project page: https://flame-sjtu.github.io

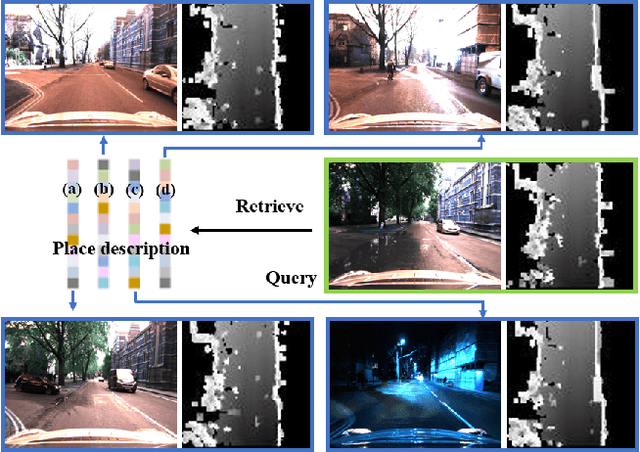

CORAL: Colored structural representation for bi-modal place recognition

Nov 22, 2020

Abstract: Place recognition is indispensable for drift-free localization systems. Due to variations in the environment, place recognition using a single modality has limitations. In this paper, we propose a bi-modal place recognition method, which can extract a compound global descriptor from two modalities, vision and LiDAR. Specifically, we build an elevation image from the point cloud modality as a discriminative structural representation. Based on the 3D information, we derive the correspondences between 3D points and image pixels, by which the pixel-wise visual features can be inserted into the elevation map grids. In this way, we fuse the structural features and visual features in a consistent bird's-eye view frame, yielding a semantic feature representation with sensible geometry, namely CORAL. Comparisons on the Oxford RobotCar dataset show that CORAL has superior performance against other state-of-the-art methods. We also demonstrate that our network can be generalized to other scenes and sensor configurations using cross-city datasets.
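The idea of inserting pixel-wise visual features into elevation map grids can be sketched roughly as follows. This is only an illustration under stated assumptions, not the CORAL network or its actual pipeline: points are assumed to be already expressed in the camera frame (x right, y down, z forward), the camera is an ideal pinhole, each grid cell keeps its highest point, and the names `project_pinhole` and `build_colored_elevation_grid` are hypothetical.

```python
def project_pinhole(point_cam, fx, fy, cx, cy):
    """Project a camera-frame 3D point (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera, no valid pixel
    return (fx * x / z + cx, fy * y / z + cy)

def build_colored_elevation_grid(points_cam, image, fx, fy, cx, cy,
                                 cell=1.0, x_min=-2.0, z_min=0.0, n=4):
    """Bird's-eye-view grid over the (x, z) plane.

    Each cell stores the height of its highest point (height = -y, since
    y points down in the assumed camera frame) and the image feature at
    the pixel that point projects to (None if it falls outside the image).
    """
    height = [[None] * n for _ in range(n)]
    feature = [[None] * n for _ in range(n)]
    for x, y, z in points_cam:
        col = int((x - x_min) // cell)
        row = int((z - z_min) // cell)
        if not (0 <= row < n and 0 <= col < n):
            continue  # point lies outside the grid extent
        h = -y
        if height[row][col] is not None and h <= height[row][col]:
            continue  # a higher point already occupies this cell
        height[row][col] = h
        feature[row][col] = None
        pix = project_pinhole((x, y, z), fx, fy, cx, cy)
        if pix is not None:
            u, v = int(round(pix[0])), int(round(pix[1]))
            if 0 <= v < len(image) and 0 <= u < len(image[0]):
                feature[row][col] = image[v][u]
    return height, feature

# Toy 4x4 "image" whose feature at pixel (u, v) is simply the tuple (v, u).
image = [[(v, u) for u in range(4)] for v in range(4)]
points = [
    (0.5, 0.0, 1.5),    # lower point in cell (row 1, col 2)
    (0.0, -1.0, 1.0),   # higher point in the same cell, replaces the first
    (10.0, 0.0, 1.0),   # outside the grid, ignored
]
height, feature = build_colored_elevation_grid(points, image, 1.0, 1.0, 2.0, 2.0)
print(height[1][2], feature[1][2])
```

In a real setup the points would first be transformed from the LiDAR frame to the camera frame with the extrinsic calibration, and the stored feature would be a learned descriptor rather than a raw pixel value.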
